Elevating AI Content Creation with Sora 2 and TwelveLabs MCP

Hrishikesh Yadav

December 24, 2025

8 Minutes

Introduction

This guide shows how to use the TwelveLabs MCP server to strengthen AI video generation workflows. Because MCP (Model Context Protocol) is a shared protocol, any compliant client can combine tools from several servers, which makes it practical to chain them into multi-step pipelines without custom integration work.

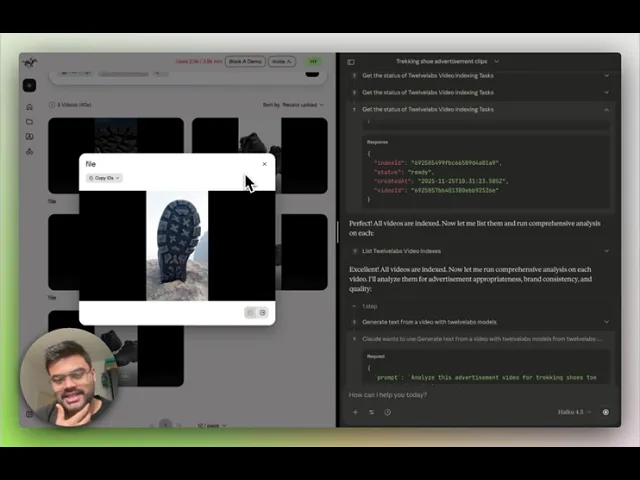

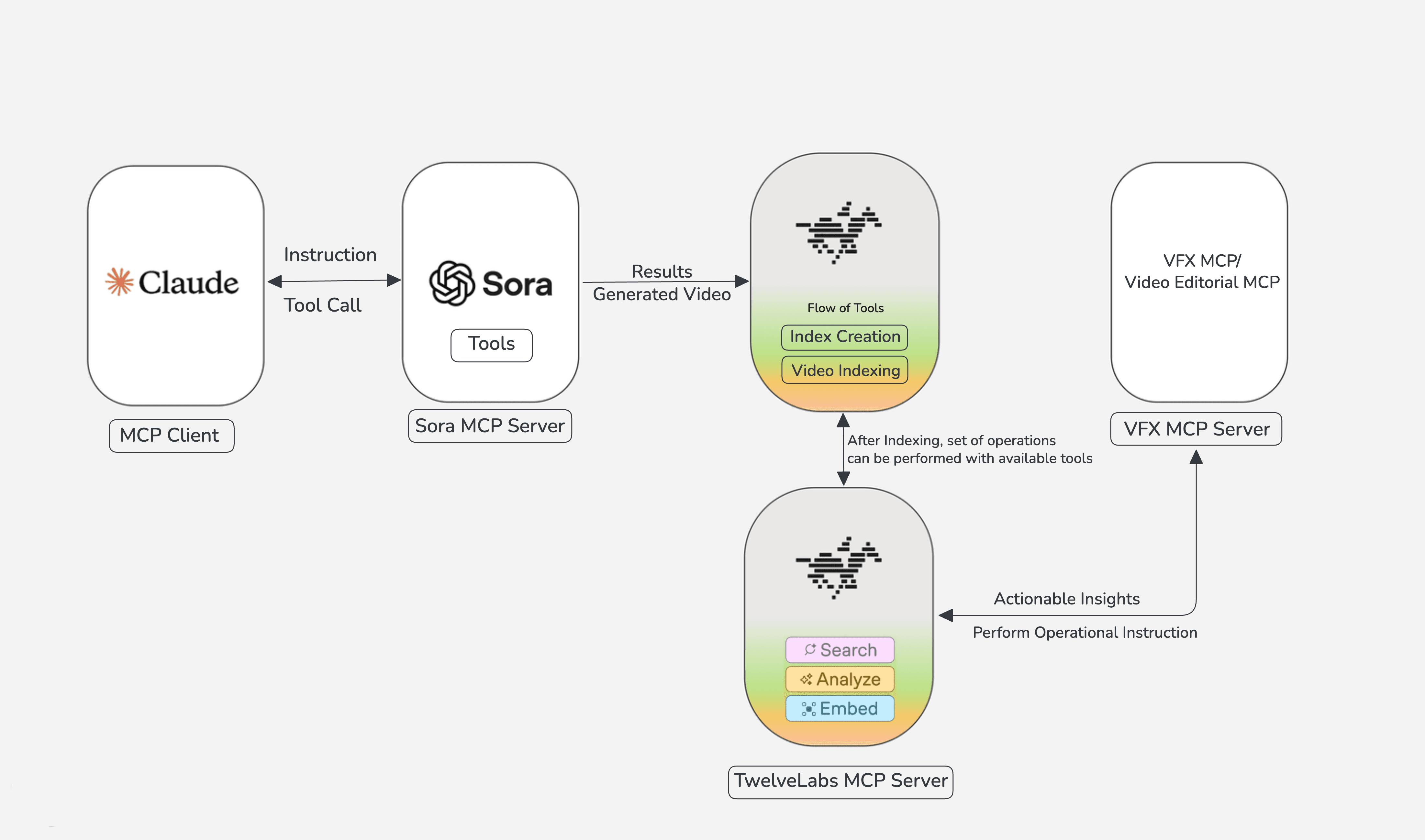

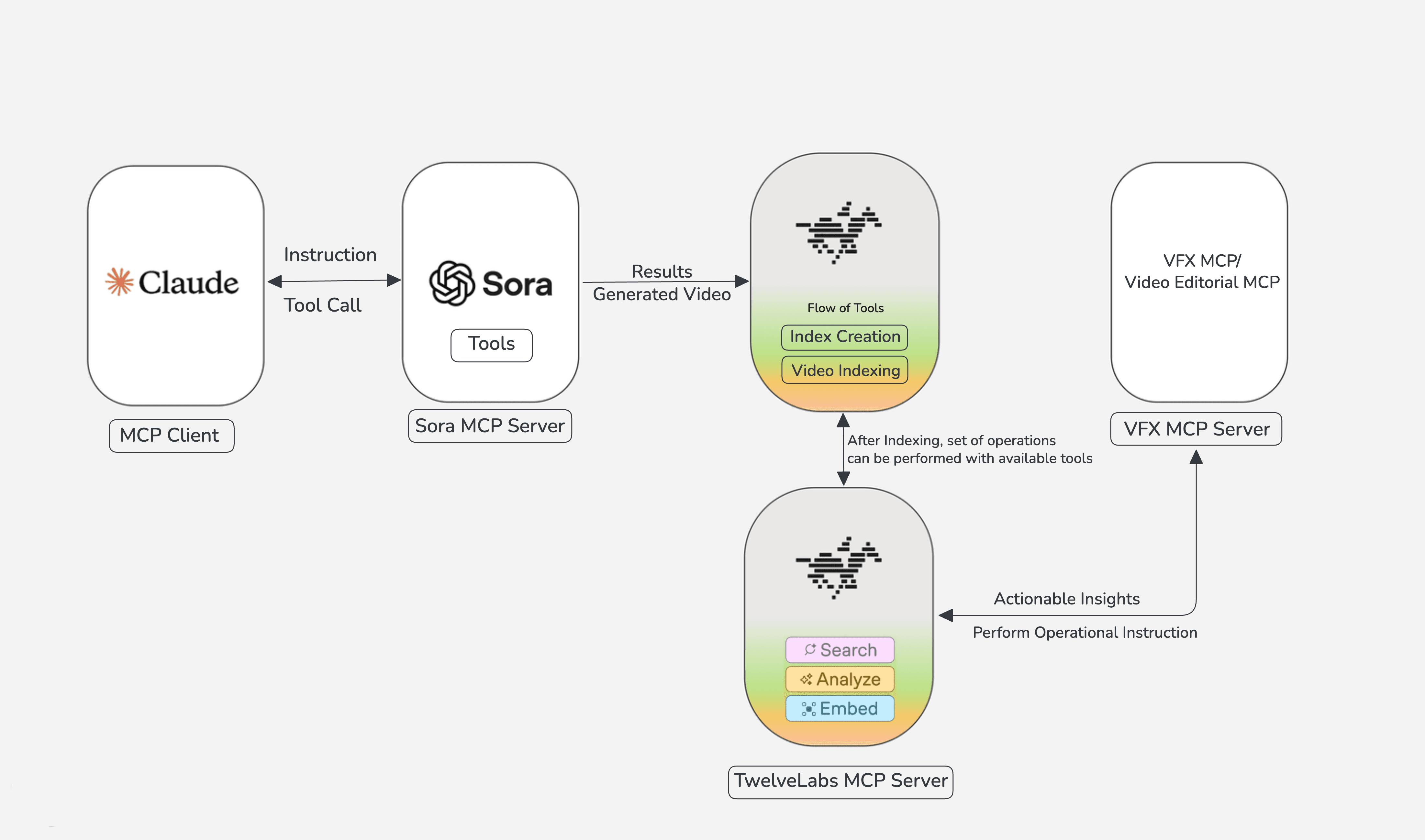

Specifically, you will learn how to build a production-ready workflow for social media and beyond by combining TwelveLabs MCP with video generation tools such as the Sora 2 MCP server. Applying video intelligence at each step of the pipeline produces richer, better-targeted content. We cover practical strategies and applications, from cinematic filmmaking and content creation to targeted advertising.

Ready to build? Start now with the installation and configuration steps in the installation guide, or skip the reading and jump straight into the detailed tutorial video for a complete hands-on setup and prompting masterclass.

Integrating the TwelveLabs MCP on Claude Desktop

Step 1: Access the Setup Guide

Follow the official installation guide: https://mcp-install-instructions.alpic.cloud/servers/twelvelabs-mcp

This resource provides the definitive, step-by-step procedure for adding the TwelveLabs MCP server to your chosen MCP client.

Step 2: Obtain Your TwelveLabs API Key

Log in to your TwelveLabs account and copy your API key. The key is required for setup: the MCP server uses it to call TwelveLabs APIs securely on your behalf.

Step 3: Select Your Preferred MCP Client

The setup guide contains specific instructions tailored for major clients, including Claude Desktop, Cursor, Goose, VS Code, and Claude Code. Choose your environment.

Step 4: Connect and Validate

After installation, confirm that the TwelveLabs MCP server is registered in your client's tool list. Once it is connected, your client can call TwelveLabs tools directly from a prompt.
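If the tools do not show up, a common cause is a malformed or half-edited config file. The sketch below parses an MCP client configuration and reports obvious problems. It assumes the Claude Desktop-style layout used for the Sora 2 server later in this guide (a top-level `mcpServers` object whose entries have a `command`, or a `url` for clients that support remote servers); the placeholder markers and function names are hypothetical, so adapt them to what your client's setup guide actually writes.

```python
import json
from pathlib import Path

# Substrings that suggest a template value was never filled in (assumed list).
PLACEHOLDER_MARKERS = ("your-", "yourname", "/ABSOLUTE/PATH/TO/")


def check_mcp_config(config: dict) -> list[str]:
    """Return human-readable problems found in an MCP client config dict."""
    servers = config.get("mcpServers")
    if not isinstance(servers, dict) or not servers:
        return ["no 'mcpServers' entries found"]
    problems = []
    for name, entry in servers.items():
        if "command" not in entry and "url" not in entry:
            problems.append(f"{name}: needs a 'command' or 'url'")
        # Check the command, every argument, and every env value for placeholders.
        values = [entry.get("command", ""), *entry.get("args", []), *entry.get("env", {}).values()]
        for value in values:
            if any(marker in str(value) for marker in PLACEHOLDER_MARKERS):
                problems.append(f"{name}: placeholder left in {value!r}")
    return problems


def check_mcp_config_file(path: str) -> list[str]:
    """Load a JSON config file from disk and check it."""
    return check_mcp_config(json.loads(Path(path).read_text()))
```

An empty list means nothing obvious is wrong; it does not prove the server starts, so the client's own tool list remains the final check.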

Content Creation Workflow using TwelveLabs and Sora 2 MCP

Setup of Sora2 MCP

Clone the repository: https://github.com/Hrishikesh332/sora2-mcp

Install the required npm packages, set your OpenAI API key in the .env file, and register the server in Claude Desktop's MCP server settings. In the config below, replace the placeholder path with the absolute path to stdio-server.js in your clone, and set DOWNLOAD_DIR to the directory where the server should save downloaded videos. Read the README for details on the available tools.

MCP Server Config for Sora 2

```json
{
  "mcpServers": {
    "sora-server": {
      "command": "node",
      "args": ["/ABSOLUTE/PATH/TO/sora-mcp/dist/stdio-server.js"],
      "env": {
        "OPENAI_API_KEY": "your-openai-api-key-here",
        "DOWNLOAD_DIR": "/Users/yourname/Downloads/sora"
      }
    }
  }
}
```
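If your client config already lists other servers (for example, the TwelveLabs MCP), pasting the snippet above over the whole file would drop them. The sketch below merges one server entry into an existing config instead of replacing it. The function names are hypothetical; it only assumes the file is JSON with a top-level `mcpServers` object.

```python
import json
from pathlib import Path


def add_mcp_server(config: dict, name: str, entry: dict, overwrite: bool = False) -> dict:
    """Return a copy of `config` with `entry` registered under mcpServers[name]."""
    merged = json.loads(json.dumps(config))  # deep copy via a JSON round-trip
    servers = merged.setdefault("mcpServers", {})
    if name in servers and not overwrite:
        raise ValueError(f"server {name!r} already exists; pass overwrite=True to replace it")
    servers[name] = entry
    return merged


def add_mcp_server_to_file(path: str, name: str, entry: dict) -> None:
    """Merge the entry into a JSON config on disk, creating the file if needed."""
    file = Path(path)
    config = json.loads(file.read_text()) if file.exists() else {}
    file.write_text(json.dumps(add_mcp_server(config, name, entry), indent=2))
```

On macOS, Claude Desktop reads its configuration from `~/Library/Application Support/Claude/claude_desktop_config.json`; restart the app after editing it so the new server is picked up.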

Steps for Effective Usage

Start by configuring both the TwelveLabs MCP and the Sora 2 video generation MCP in your preferred MCP client. The workflow begins with your prompt: the scene definition, narration, or detailed video specification. If you want Sora 2 MCP to produce a personalized, input-driven video, also provide the path to the reference input.

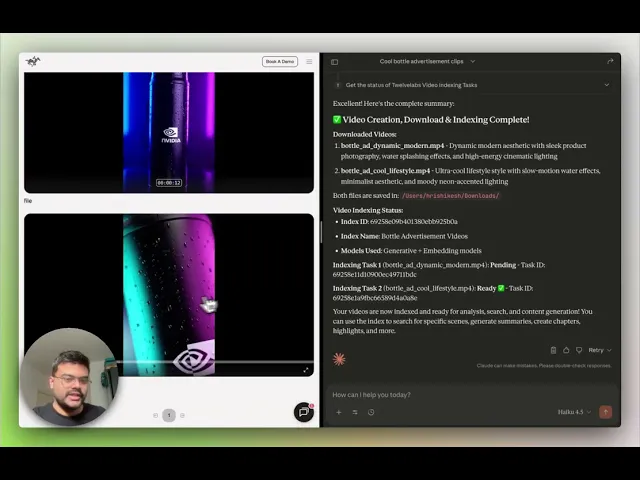

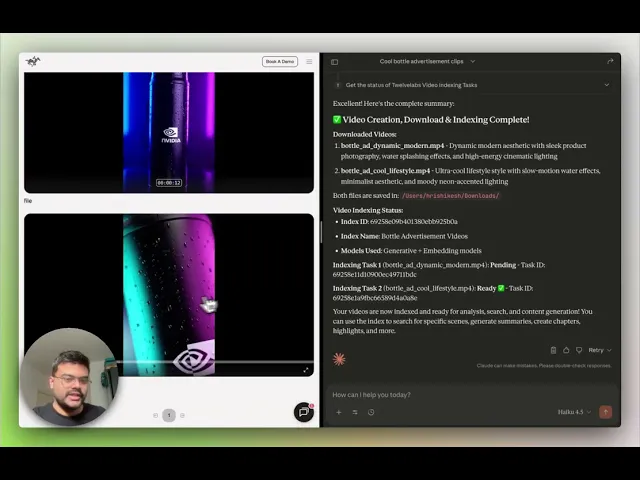

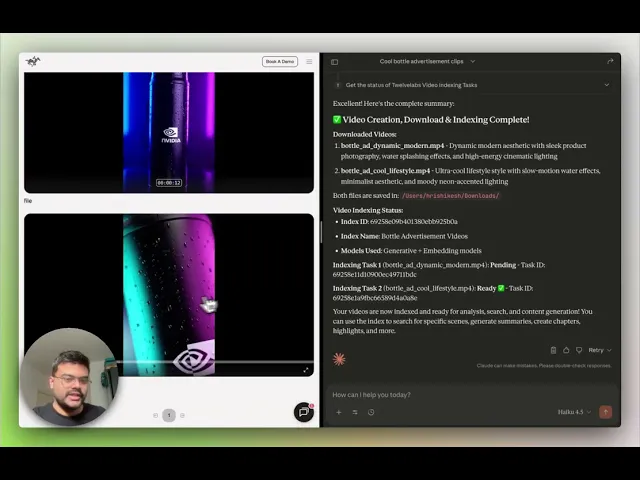

Once started, Sora 2 MCP generates multiple scene-aligned video variations based on your script. The next step is to index these generated videos with the TwelveLabs MCP. After indexing has finished (you can monitor its progress), you can run deep analysis on all indexed videos. This is where the value lies: generating editing instructions for maximum engagement, producing accurate transcripts, verifying brand consistency, or determining the best scene alignment and order.
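Because indexing is asynchronous, whatever orchestrates the pipeline has to wait for it before requesting analysis. Below is a generic poll-with-timeout helper, sketched under the assumption that you supply a hypothetical `get_status` callable (for example, one that asks the TwelveLabs MCP for an indexing task's status) returning a status string. The `ready` and `failed` values are assumptions; check the statuses the TwelveLabs tools actually report.

```python
import time
from typing import Callable


def wait_until_ready(
    get_status: Callable[[], str],
    timeout_s: float = 600.0,
    interval_s: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Poll get_status() until it returns 'ready'; raise on 'failed' or timeout."""
    deadline = time.monotonic() + timeout_s
    while True:
        status = get_status()
        if status == "ready":
            return status
        if status == "failed":
            raise RuntimeError("indexing task failed")
        # Give up if the next poll would land past the deadline.
        if time.monotonic() + interval_s > deadline:
            raise TimeoutError(f"still {status!r} after {timeout_s}s")
        sleep(interval_s)
```

The `sleep` parameter is injectable so the loop can be tested without real delays.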

To use video intelligence as context for your AI agents, connect additional MCP servers, such as the File Handling MCP or the VFX MCP, or use Claude Desktop's native capabilities to run ffmpeg commands. This lets you trim, merge, enhance quality, apply transitions, or overlay elements programmatically, guided by the results of your video analysis.
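For the ffmpeg route, it helps to have the agent or a small script assemble commands from the analysis results rather than writing them by hand. The sketch below builds argument lists for two of the operations mentioned, trimming and merging. The function names are hypothetical; merging uses ffmpeg's concat demuxer, which with `-c copy` expects every clip to share the same codecs and encoding parameters.

```python
import subprocess
from pathlib import Path


def trim_cmd(src: str, start: str, end: str, out: str) -> list[str]:
    """ffmpeg args that keep [start, end] of src without re-encoding.

    With stream copy, cuts snap to keyframes, so boundaries may not be
    frame-accurate; drop "-c copy" and re-encode if you need exact cuts.
    """
    return ["ffmpeg", "-y", "-i", src, "-ss", start, "-to", end, "-c", "copy", out]


def concat_list(clips: list[str]) -> str:
    """Contents of a concat-demuxer list file: one `file '...'` line per clip."""
    # Absolute paths avoid surprises about what the list file is relative to.
    # Paths containing a single quote would need escaping (not handled here).
    return "".join(f"file '{Path(c).resolve()}'\n" for c in clips)


def concat_cmd(list_file: str, out: str) -> list[str]:
    """ffmpeg args that join the clips named in list_file."""
    # -safe 0 allows absolute paths in the list file.
    return ["ffmpeg", "-y", "-f", "concat", "-safe", "0", "-i", list_file, "-c", "copy", out]


def run(cmd: list[str]) -> None:
    """Execute an ffmpeg command, raising CalledProcessError on failure."""
    subprocess.run(cmd, check=True)
```

To merge, write `concat_list(...)` to a text file, then `run(concat_cmd(that_file, "merged.mp4"))`.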

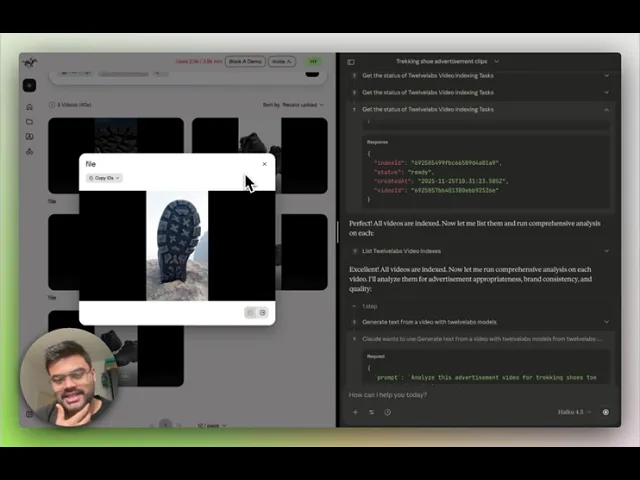

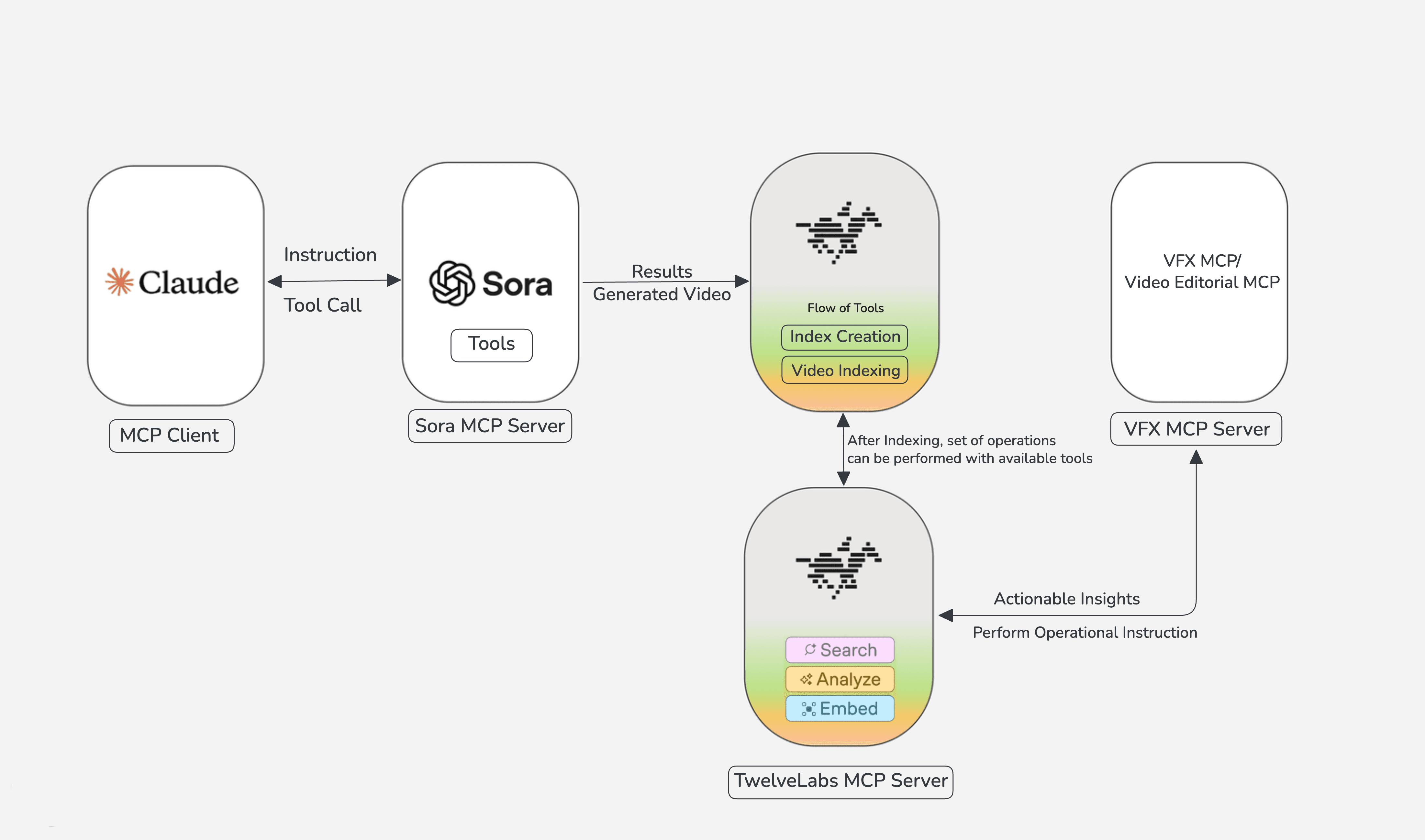

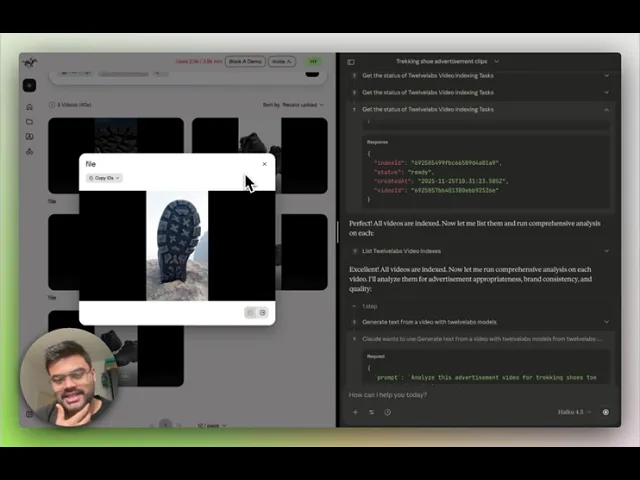

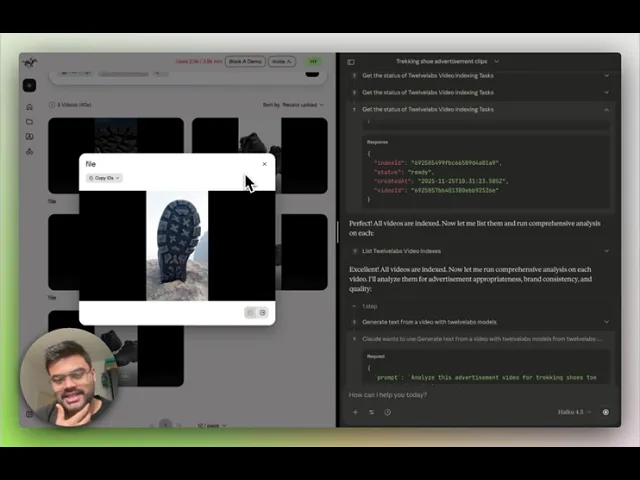

Below is a detailed walkthrough demonstrating how to generate personalized advertisements given any object as input. It showcases how powerful video understanding utilities are applied to extract actionable insights about in-video actions, directly informing targeted editing decisions for specific audiences. The walkthrough also covers essential operations using the VFX MCP.

The Convergence of Video Intelligence and Content Creation

Applying content understanding to generated video assets opens up many opportunities for optimization and workflow automation. Using the TwelveLabs MCP together with the Sora 2 video generation MCP helps keep every output contextually coherent, yields insights for personalization (e.g., ads tailored to specific demographics), and gives autonomous tools the context they need to act on the video content. The main possibilities are outlined below:

A. Enhanced Content Moderation and Brand Compliance

Generated video has raised IP and likeness concerns. The TwelveLabs MCP can help detect copyrighted characters, logos, or other unsafe elements, so that problematic assets can be flagged or released with a warning.
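Once analysis results are in hand, the flagging step itself can be simple. The sketch below assumes, hypothetically, that you have already converted the TwelveLabs analysis output into a list of detections, each with a label and start/end times in seconds, and checks them against a user-maintained list of restricted terms. The `Detection` schema and the blocklist are illustrations, not the TwelveLabs response format.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str    # e.g. a logo, character, or object named in the analysis
    start: float  # seconds
    end: float    # seconds


def flag_detections(detections: list[Detection], blocklist: set[str]) -> list[str]:
    """Return a warning for each detection whose label contains a blocklisted term."""
    terms = {t.lower() for t in blocklist}  # case-insensitive matching
    warnings = []
    for d in detections:
        hits = sorted(t for t in terms if t in d.label.lower())
        if hits:
            warnings.append(f"{d.start:.1f}-{d.end:.1f}s: '{d.label}' matches {', '.join(hits)}")
    return warnings
```

Substring matching is deliberately crude; it is a first filter that routes clips to human review, not a compliance decision.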

Quality control and brand checks can run inside an iteration loop: analyzing the generated assets (especially personalized ads) provides feedback that is used to refine the prompt until the output matches the user's expectations. The loop can also be extended to draw further actionable insights from TwelveLabs MCP analysis.
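The iteration loop described above can be sketched as follows. `generate`, `analyze`, and `refine` are hypothetical callables you would wire to the Sora 2 MCP, the TwelveLabs MCP, and your own prompt-rewriting step (or an LLM); the function only encodes the control flow: generate, check, refine, and stop after a fixed number of rounds.

```python
from typing import Callable


def refine_until_ok(
    prompt: str,
    generate: Callable[[str], str],           # prompt -> path of generated video
    analyze: Callable[[str], list[str]],      # video path -> issues found
    refine: Callable[[str, list[str]], str],  # (prompt, issues) -> revised prompt
    max_rounds: int = 3,
) -> tuple[str, str, list[str]]:
    """Return (prompt, video, issues) for the last round; issues is [] on success."""
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")
    for round_no in range(max_rounds):
        video = generate(prompt)
        issues = analyze(video)
        # Stop on a clean result, or on the last round so the returned
        # prompt is the one that actually produced the returned video.
        if not issues or round_no == max_rounds - 1:
            return prompt, video, issues
        prompt = refine(prompt, issues)
```

Capping the rounds matters because each round costs a generation call; remaining issues are returned rather than hidden so a person can decide what to do next.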

B. Comprehensive Metadata Generation

The TwelveLabs MCP can automatically extract granular metadata from generated videos, including topics, objects, actions, concise descriptions, and tags. Because assigning metadata by hand is labor-intensive, automating it saves time and can improve recommendations as well as content discoverability, searchability, and contextual relevance, especially when managing a large volume of generated video assets.
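Once tags exist, even a simple inverted index makes a large batch of generated clips searchable. The sketch assumes a hypothetical shape where each video ID maps to the list of tags extracted for it; real TwelveLabs output would need to be mapped into this form first.

```python
from collections import defaultdict


def build_tag_index(metadata: dict[str, list[str]]) -> dict[str, set[str]]:
    """Map each normalized tag to the set of video IDs carrying it."""
    index: dict[str, set[str]] = defaultdict(set)
    for video_id, tags in metadata.items():
        for tag in tags:
            index[tag.strip().lower()].add(video_id)
    return dict(index)


def find_videos(index: dict[str, set[str]], *tags: str) -> set[str]:
    """Return IDs of videos that carry every requested tag (AND query)."""
    sets = [index.get(t.strip().lower(), set()) for t in tags]
    return set.intersection(*sets) if sets else set()
```

Normalizing case and whitespace at both index and query time keeps "Coffee" and "coffee " from becoming separate tags.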

C. Contextualization for AI Agents

Applying video intelligence to generated assets gives the AI agents in the workflow the context they need, extending them from interpreting content to acting on it.

With this contextual layer, the AI agent or connected MCPs can act on the video content: automated editorial decisions, storyline validation, continuity checks, brand tone alignment, goal-driven restructuring, and more. Agents can infer what the content means, how it relates to specific scenes, and which actions or transformations should follow. This makes editing more effective and could be described as "vibe editing" for video.

D. Multilingual Support for Global Accessibility

The multilingual capabilities of the TwelveLabs analysis tools improve video generation workflows by enabling accessibility features such as translated subtitles, captions, and time-aligned transcripts in multiple languages. Each generated asset can then be distributed globally or tailored to a target audience, with precise timestamps that downstream automated tasks can rely on.
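Time-aligned transcripts map directly onto standard subtitle formats. As an illustration, the sketch converts a list of `(start_seconds, end_seconds, text)` segments (an assumed shape; adapt it to whatever the analysis step returns) into SubRip (`.srt`) text, which most players and editors accept. The same function works for each translated transcript.

```python
def srt_timestamp(seconds: float) -> str:
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments: list[tuple[float, float, str]]) -> str:
    """Build SRT text: numbered cues, each followed by a blank line separator."""
    cues = []
    for i, (start, end, text) in enumerate(segments, start=1):
        cues.append(f"{i}\n{srt_timestamp(start)} --> {srt_timestamp(end)}\n{text.strip()}\n")
    return "\n".join(cues)
```

For example, `to_srt([(0.0, 1.25, "Hola")])` produces a single cue numbered 1 spanning `00:00:00,000 --> 00:00:01,250`.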

AI Agents can then leverage this multilingual output for automated dubbing, region-specific editorial modifications, and the application of system-generated captions, resulting in a pipeline that is more adaptive and context-aware.

Next-Level Experimentation Beyond the Tutorial

You can push video creation further by combining powerful video generation with deep video understanding to build actionable, end-to-end workflows, which is key to next-generation applications. Here are experimental directions to try with Sora 2 MCP and TwelveLabs MCP:

🎨 Automated Scene Expansion & Regeneration Loops

Leverage TwelveLabs' scene intelligence to pinpoint cinematic flaws, inconsistencies, or prime opportunities within a generated clip. Use those precise insights to instruct Sora 2 MCP to surgically regenerate or seamlessly extend specific scenes.
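Before requesting a regeneration, it can help to collapse overlapping or nearly adjacent problem spans into a few contiguous segments, so each request covers one coherent stretch of the clip. A sketch, assuming flagged spans are `(start, end)` pairs in seconds and a hypothetical `gap` tolerance:

```python
def merge_spans(spans: list[tuple[float, float]], gap: float = 0.5) -> list[tuple[float, float]]:
    """Merge spans that overlap or are separated by at most `gap` seconds."""
    merged: list[tuple[float, float]] = []
    for start, end in sorted(spans):  # sort by start time
        if merged and start - merged[-1][1] <= gap:
            prev_start, prev_end = merged[-1]
            merged[-1] = (prev_start, max(prev_end, end))  # extend the current run
        else:
            merged.append((start, end))  # start a new run
    return merged
```

A larger `gap` yields fewer but longer regeneration requests; a smaller one keeps regenerated regions tight.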

✂️ Auto Highlights, Cutdowns & Multi-Platform Reformatting

Turn a single raw Sora-generated video into a suite of platform-optimized assets: short clips, teasers, full trailers, thumbnails, and vertical edits, ready for deployment.
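For vertical edits, one common approach is a center crop to 9:16 followed by a scale. The sketch builds such an ffmpeg command; the 1080x1920 target, the center-crop choice, and the function name are assumptions (a subject-aware crop guided by the video analysis would be the more ambitious version).

```python
def vertical_cmd(src: str, out: str, width: int = 1080, height: int = 1920) -> list[str]:
    """ffmpeg args that center-crop src to width:height aspect, then scale to that size."""
    # crop=w:h centers the window by default; keep the full input height and
    # take a width of ih*width/height. This assumes the source is wider than
    # the target aspect ratio (e.g. a 16:9 clip), otherwise the crop fails.
    vf = f"crop=ih*{width}/{height}:ih,scale={width}:{height},setsar=1"
    return ["ffmpeg", "-y", "-i", src, "-vf", vf, "-c:a", "copy", out]
```

Audio is stream-copied since only the picture changes; the video is necessarily re-encoded because of the filter chain.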

🤖 Agent-Ready Knowledge Bundles

Don't just generate video—generate data. Structure the content into powerful bundles of transcripts, detailed descriptions, accurate character timelines, and critical key moments. This is the structured data AI agents need to execute complex, utility-focused video editing tasks autonomously.
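One possible shape for such a bundle, sketched as Python dataclasses serialized to JSON. The field names are hypothetical; the idea is to keep the transcript, description, character timelines, and key moments together with timestamps so an agent can address specific spans of the video.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class KeyMoment:
    start: float  # seconds
    end: float    # seconds
    summary: str


@dataclass
class VideoBundle:
    video_id: str
    description: str
    # (start_seconds, end_seconds, text) transcript segments
    transcript: list[tuple[float, float, str]] = field(default_factory=list)
    # character name -> list of (start, end) appearances, in seconds
    character_timeline: dict[str, list[tuple[float, float]]] = field(default_factory=dict)
    key_moments: list[KeyMoment] = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize to JSON (nested dataclasses become objects, tuples become arrays)."""
        return json.dumps(asdict(self), indent=2)
```

Plain JSON keeps the bundle portable: any agent or MCP tool that accepts text can consume it without knowing about these classes.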

📡 Actionable Feedback Loops for Model Iteration

Identify and flag critical model issues—visual artifacts, physics violations, off-model characters, or unwanted scene elements—and automatically channel that structured feedback directly back into a Sora regeneration pipeline for immediate, precise correction.

Conclusion

The synergy between Sora 2's state-of-the-art generative video capabilities and TwelveLabs' deep video intelligence isn't just about creating media; it's about transforming it into an actionable, trustworthy, and highly functional asset. This combined MCP workflow gives developers the power to engineer rich workflows, automate critical content decisions, and structure media with meaningful, execution-ready context. The detailed guide demonstrates how generated video content can directly fuel personalized advertisement creation, using insights to dynamically trigger modifications, custom branding, or targeted messaging. Together, the TwelveLabs MCP tools empower developers, marketers, and researchers to engage with and build upon video in truly meaningful, programmatic ways.

Additional Resources on MCP

Install the MCP Server

Get started now. Use our Installation Guide to integrate the TwelveLabs MCP Server into your client.

Dive into the API Documentation

Explore the complete power of the protocol. Check out our Model Context Protocol documentation for detailed usage and seamless integration guides.

Build Your Own MCP Server

Interested in owning the stack? If you're a developer eager to create your own specialized MCP server, you can easily launch and host it on Alpic (free beta).
