Tutorial

Building Video Deep Research with TwelveLabs and Perplexity Sonar

Hrishikesh Yadav

Video Deep Research is a platform that enables intelligent video analysis, deep research, and reliable citation extraction. Unlike traditional search engines, which cannot directly search inside videos or provide verifiable references, the application uses Pegasus 1.2 from TwelveLabs for video understanding and Sonar by Perplexity for knowledge retrieval and citation. This enables researchers, creators, and professionals to explore video content at a semantic level, generate structured insights, and link them with verifiable sources.


2025. 10. 7.

13 Minutes


Introduction

What if you could search videos as easily as you search text over the web and get every insight with a trusted citation? 🎥🔍

Today, that’s still a missing piece of the web. Search engines don't accept video as an input when producing results. This leaves researchers, creators, and professionals stuck manually going through hours of footage, with no reliable way to extract structured insights or verify them.

Video Deep Research changes how video is used for research. By combining TwelveLabs Analyze (pegasus-1.2) for semantic video understanding with Sonar by Perplexity for citation-powered knowledge retrieval, it transforms videos into a searchable, trustworthy research resource.

Let’s explore how the Video Deep Research application works and how you can build a similar solution for video understanding and deep research using the TwelveLabs Python SDK and Perplexity Sonar.

You can explore the demo of the application here: Video Deep Research Application

Prerequisites

Generate an API key by signing up at the TwelveLabs Playground.

Sign up for Sonar and generate an API key to access the model.

Find the repository for this application on GitHub.

You should be familiar with Python, Flask, and Next.js.

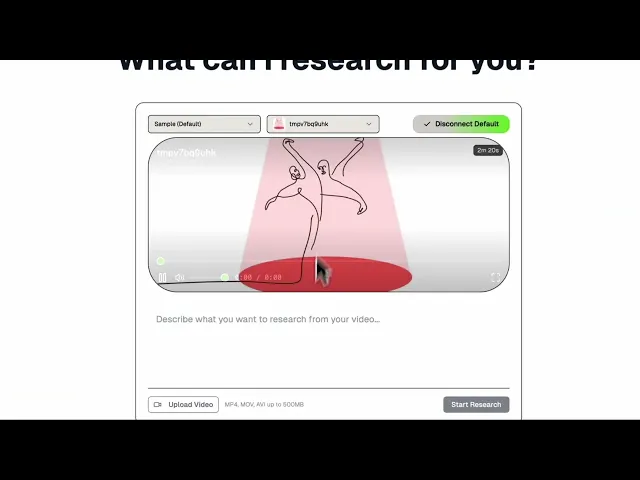

Demo Application

This demo application showcases how video understanding and knowledge retrieval can unlock a completely new way of doing research.

Users can simply upload a video or select from an already indexed video by connecting their TwelveLabs API Key. The application then processes the content, extracts structured insights, and links them to verifiable references, bridging the gap between video and trustworthy research.

Here, you’ll find a detailed walkthrough of the code and the demo so you can build and extend your own application powered by Video Deep Research.

Working of the Application

The application supports two modes of interaction:

Using the TwelveLabs API Key: Connect your personal TwelveLabs API Key to access and interact with your own already indexed videos. Once connected, you can select an existing Index ID and respective video.

Upload a Video: When a video is uploaded, the application is designed to automatically use the default TwelveLabs API key for seamless setup. After the upload, the indexing process begins immediately. Once indexing is complete, the most recently uploaded video will be displayed as the latest available content. From this point onward, the query and citation workflow proceeds identically for both interaction modes, following the steps mentioned below.

The complete workflow of the application begins with configuring the TwelveLabs client. This requires setting up the TwelveLabs API key, either by using the environment-provided key or by allowing the user to connect their own key from the client side to access already indexed videos. Once the client is configured, the process follows the sequential flow outlined below:

Fetch Indexes – Retrieve the list of indexes associated with the connected TwelveLabs account.

Select Video – Choose a video from the desired index for analysis.

Analyze Video – Perform video content analysis on the selected video using the provided query.

Deep Research – Combine the analyzed response with a structured prompt and the user’s query, then pass it to the Sonar research model for deeper reasoning and citation-backed insights.

This structured workflow ensures a smooth progression from video indexing through to advanced research, providing users with detailed, context-aware outputs.

Both approaches are illustrated in the architecture diagram below.

The flow is designed such that once a research response is generated, users can provide follow-up queries to request additional references or deeper insights around the same context. These subsequent queries are routed back into the Sonar research loop, which maintains contextual continuity and expands on the existing knowledge base. This iterative design ensures that each new query builds upon the prior results, allowing for progressively richer and more context-aware research outputs.
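One way to picture the follow-up loop is as a growing chat history that is resent to Sonar on every turn. The sketch below is an assumption about how such continuity could be kept, not the repository's actual implementation; the helper names `start_conversation` and `add_follow_up` are hypothetical, and only the `model` / `messages` payload shape is taken from the `deep_research` method shown later in this tutorial.

```python
from typing import Dict, List

Message = Dict[str, str]


def start_conversation(enhanced_query: str) -> List[Message]:
    """Open the history with the first research request (hypothetical helper)."""
    return [{"role": "user", "content": enhanced_query}]


def add_follow_up(history: List[Message], previous_answer: str, follow_up: str) -> List[Message]:
    """Return a new history that records the last answer and the next question."""
    return history + [
        {"role": "assistant", "content": previous_answer},
        {"role": "user", "content": follow_up},
    ]


# Placeholder strings stand in for the real enhanced query and Sonar answer.
history = start_conversation("Video analysis summary plus the user's research question")
history = add_follow_up(history, "First research answer with citations", "Find more recent references.")

# The whole history, not just the latest question, goes into the next request.
payload = {"model": "sonar", "messages": history}
```

Because each request carries the earlier turns, the model can treat "more recent references" as referring to the same video context rather than starting from scratch.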

Preparation Steps

Obtain your API key from the TwelveLabs Playground and set up your environment variable.

Create an index with pegasus-1.2 selected in the TwelveLabs Playground, and note its index_id for this application.

Clone the project from GitHub.

Obtain an API key from Sonar by Perplexity.

Create a .env file containing your TwelveLabs and Sonar credentials. An example of the environment setup can be found here.

Once you've completed these steps, you're ready to start developing!
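Before starting the backend, it can help to check that the .env file from the steps above actually contains both credentials. The following is a minimal sketch using only the standard library. `TWELVELABS_API_KEY` is the variable name read by the service code in this tutorial, while `SONAR_API_KEY` is an assumed name for illustration, so check the repository's example environment file for the real one. Many projects load .env files with a dedicated package instead, and the tiny parser here only exists to keep the example self-contained.

```python
def parse_env_text(text):
    """Parse KEY=VALUE lines, skipping blanks and # comments."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(values, required):
    """Return the required names that are absent or empty."""
    return [name for name in required if not values.get(name)]


# Placeholder content; SONAR_API_KEY is an assumed variable name.
example_env = """
# .env (placeholder values)
TWELVELABS_API_KEY=your-twelvelabs-key
SONAR_API_KEY=
"""

REQUIRED = ["TWELVELABS_API_KEY", "SONAR_API_KEY"]
config = parse_env_text(example_env)
print(missing_keys(config, REQUIRED))  # prints ['SONAR_API_KEY']
```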

Walkthrough for the Video Deep-Research App

This tutorial demonstrates how to build an application that can retrieve citations and relevant information from the web based on video content. The project uses Next.js for the frontend and a Flask API (with CORS enabled) for the backend. Our main focus will be on implementing the core backend utility that powers this video deep-research application, along with setting up the complete environment.

For detailed setup instructions and the full code structure, please refer to the README.md file in the GitHub repository.

1 - Workflow for Video Deep-Research Implementation

The most critical step in the workflow is the initialization and handling of the TwelveLabs API key. The application can either load the key directly from the environment for default usage or allow the user to provide their own API key via the client.

backend/service/twelvelabs_service.py (14-36 Line)

    import os

    from twelvelabs import TwelveLabs


    class TwelveLabsService:
        def __init__(self, api_key=None):
            # If no API key is provided, try to get it from the environment variable
            if api_key is None:
                api_key = os.environ.get('TWELVELABS_API_KEY', '')
            self.api_key = api_key  # Store API key in the instance
            self.client = TwelveLabs(api_key=api_key)  # Initialize the TwelveLabs client

1.1 GET the Indexes

Once the user connects their TwelveLabs API key either through the portal or via the default mode where the key is loaded from the environment, the application retrieves all indexes associated with that account. The retrieved data is then structured and delivered to the frontend for display.

backend/service/twelvelabs_service.py (14-36 Line)

    def get_indexes(self):
        try:
            print("Fetching indexes...")
            # Check if API key is available
            if not self.api_key:
                print("No API key available")
                return []

            # Use TwelveLabs client to get a list of indexes
            indexes = self.client.indexes.list()
            result = []
            for index in indexes:
                # Append a dictionary with relevant index details
                result.append({
                    "id": index.id,
                    "name": index.index_name
                })
                print(f"ID: {index.id}")
                print(f" Name: {index.index_name}")
            return result
        except Exception as e:
            print(f"Error fetching indexes: {e}")
            return []

1.2 GET the list of videos for the selected Index ID

When a user selects an index, the application records the associated index_id. With this index_id, the system invokes the relevant method to fetch and return all videos linked to that index.

backend/service/twelvelabs_service.py (38-70 Line)

    def get_videos(self, index_id, page=1):
        try:
            # Check if API key is available
            if not self.api_key:
                print("No API key available")
                return []

            # Use TwelveLabs client to get a list of videos
            videos_response = self.client.indexes.videos.list(index_id=index_id, page=page)
            result = []
            for video in videos_response.items:
                system_metadata = video.system_metadata
                hls_data = video.hls
                thumbnail_urls = hls_data.get('thumbnail_urls', []) if hls_data else []
                thumbnail_url = thumbnail_urls[0] if thumbnail_urls else None
                video_url = hls_data.get('video_url') if hls_data else None

                # Append a dictionary with relevant video details
                result.append({
                    "id": video.id,
                    "name": system_metadata.filename if system_metadata and system_metadata.filename else f'Video {video.id}',
                    "duration": system_metadata.duration if system_metadata else 0,
                    "thumbnail_url": thumbnail_url,
                    "video_url": video_url,
                    "width": system_metadata.width if system_metadata else 0,
                    "height": system_metadata.height if system_metadata else 0,
                    "fps": system_metadata.fps if system_metadata else 0,
                    "size": system_metadata.size if system_metadata else 0
                })
            return result
        except Exception as e:
            print(f"Error fetching videos for index {index_id}: {e}")
            return []

1.3 Analyze the video content

When a video is selected and a research query is provided by the user, both the video_id and the query are passed to the analyze utility for video understanding. The query is appended to a predefined prompt, which adds context and helps produce a meaningful analysis response. The prompt used for the video analysis is provided here.
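To make the "query appended to a predefined prompt" step concrete, here is a minimal sketch. The prefix wording and the helper name `build_analysis_prompt` are hypothetical; the application's real prompt is the one linked above.

```python
# Hypothetical prompt prefix; the application's actual wording differs.
ANALYSIS_PROMPT_PREFIX = (
    "Describe the key topics, claims, and entities in this video. "
    "Focus on what is relevant to the following research question: "
)


def build_analysis_prompt(user_query: str) -> str:
    """Append the user's query to the predefined analysis prompt."""
    query = user_query.strip()
    if not query:
        raise ValueError("Research query is required")
    return ANALYSIS_PROMPT_PREFIX + query


prompt = build_analysis_prompt("  Which studies does the speaker cite?  ")
```

The resulting string is what would be passed as the `prompt` argument of `analyze_video`.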

backend/service/twelvelabs_service.py (72-81 Line)

    def analyze_video(self, video_id, prompt):
        try:
            # Call the TwelveLabs client to analyze the video
            analysis_response = self.client.analyze(
                video_id=video_id,
                prompt=prompt
            )
            return analysis_response.data
        except Exception as e:
            print(f"Error analyzing video {video_id}: {e}")
            raise e

1.4 Deep Research on Analyzed Content

After the initial analysis completes, the workflow proceeds to a deep-research phase powered by the sonar model. The deep_research call receives a single query that combines three elements: the user’s original query, the research prompt, and the analysis results. It sends that structured input to Sonar for further processing. Sonar returns a refined, structured response that includes source citations. The deep research prompt can be found here.
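The combination of the three elements can be sketched as a template fill followed by payload construction. The placeholder names `analysis_result` and `research_query` match the `format` call used in the workflow code later in this tutorial; the template text itself and the helper name `build_sonar_payload` are assumptions for illustration.

```python
# Hypothetical template text; only the two placeholder names come from the app.
RESEARCH_PROMPT_TEMPLATE = (
    "You are a research assistant. Respond in markdown and cite your sources.\n\n"
    "Video analysis:\n{analysis_result}\n\n"
    "Research question:\n{research_query}\n"
)


def build_sonar_payload(analysis_result: str, research_query: str, model: str = "sonar") -> dict:
    """Merge the prompt, the analysis, and the query into one user message."""
    enhanced_query = RESEARCH_PROMPT_TEMPLATE.format(
        analysis_result=analysis_result,
        research_query=research_query,
    )
    return {
        "model": model,
        "messages": [{"role": "user", "content": enhanced_query}],
    }


payload = build_sonar_payload(
    analysis_result="The speaker compares two battery chemistries.",
    research_query="Which chemistry has the longer cycle life?",
)
```

If the template ever needs literal curly braces (for example, a JSON sample in the instructions), they must be doubled so that `str.format` does not treat them as placeholders.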

backend/service/sonar_service.py (13-52 Line)

    def deep_research(self, query, timeout=180):
        try:
            # Ensure API key is available
            if not self.api_key:
                raise ValueError("API key is required")

            payload = {
                "model": "sonar",
                "messages": [
                    {"role": "user", "content": query}
                ]
            }
            headers = {
                "Authorization": f"Bearer {self.api_key}",
                "Content-Type": "application/json"
            }

            # POST request to the API endpoint with timeout
            response = requests.post(
                self.base_url,
                json=payload,
                headers=headers,
                timeout=timeout
            )

            # Check if the response was successful
            if response.status_code == 200:
                return response.json()
            else:
                print(f"Error: {response.status_code} - {response.text}")
                return {"error": f"API request failed with status {response.status_code}"}
        except requests.exceptions.Timeout:
            print("Request timed out")
            return {"error": "Request timed out - Sonar research is taking too long"}
        except requests.exceptions.RequestException as e:
            print(f"Request error: {e}")
            return {"error": f"Network error: {str(e)}"}
        except Exception as e:
            print(f"Error in deep_research: {e}")
            return {"error": str(e)}

Several Sonar variants are available, including sonar, sonar-reasoning, and sonar-deep-research. The sonar-deep-research model supports a reasoning_effort setting (low, medium, high), with medium as the default for a balanced trade-off between research depth and speed. Increasing the reasoning effort to “high” produces more citations and deeper insights but also increases response time and token usage, so choose the model and effort level based on the required thoroughness, latency tolerance, and cost.

By simply changing the model name to sonar-deep-research, the system produces more detailed reasoning along with a greater number of citations. This configuration enables deeper research and richer insights compared to the default sonar model. The demonstration accompanying this section shows the enhanced response.
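Switching model and effort level amounts to changing the request payload. The sketch below is a hedged illustration: the helper name `build_research_payload` is hypothetical, and placing `reasoning_effort` as a top-level field of the request body is an assumption that should be checked against the current Perplexity API reference before use.

```python
VALID_EFFORTS = ("low", "medium", "high")


def build_research_payload(query, model="sonar", reasoning_effort=None):
    """Build a chat payload, adding reasoning_effort only where it applies."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": query}],
    }
    if reasoning_effort is not None:
        # Per the text above, the setting belongs to sonar-deep-research.
        if model != "sonar-deep-research":
            raise ValueError("reasoning_effort is only used with sonar-deep-research")
        if reasoning_effort not in VALID_EFFORTS:
            raise ValueError(f"reasoning_effort must be one of {VALID_EFFORTS}")
        # Assumed field placement: top level of the request body.
        payload["reasoning_effort"] = reasoning_effort
    return payload


fast = build_research_payload("Summarize the cited studies.")
thorough = build_research_payload(
    "Summarize the cited studies.",
    model="sonar-deep-research",
    reasoning_effort="high",
)
```

Keeping the validation in one helper makes it harder to send an effort level to a model that ignores it, which would otherwise fail silently while still costing a request.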

1.5 Function calling workflow for the research

The workflow begins with the configuration of the TwelveLabs client, ensuring the API key, index ID, and video ID are properly validated. Once the setup is confirmed, the application fetches the video details from the TwelveLabs service. This ensures the selected video context is well-defined before proceeding further. The results are streamed back to the frontend in real-time using yield, which allows the UI to update progressively rather than waiting for the entire process to finish.
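The streaming pattern described here is newline-delimited JSON produced by a generator. The following standard-library sketch shows a producer and a consumer for such a stream; the event fields mirror those used in the workflow code in this section, while the function names `progress_events` and `read_events` are hypothetical. In a Flask backend, a generator like this is typically passed to a streaming response object rather than consumed in-process.

```python
import json


def progress_events():
    """Yield one JSON document per line, as each step starts."""
    steps = [
        ("video_details", "Fetching video details...", 0),
        ("analysis", "Analyzing video content...", 33),
        ("research", "Conducting deep research...", 66),
    ]
    for step, message, progress in steps:
        event = {"type": "progress", "step": step, "message": message, "progress": progress}
        yield json.dumps(event) + "\n"
    yield json.dumps({"type": "complete", "progress": 100}) + "\n"


def read_events(lines):
    """Parse a newline-delimited JSON stream back into dictionaries."""
    for line in lines:
        line = line.strip()
        if line:
            yield json.loads(line)


for event in read_events(progress_events()):
    print(event["type"], event["progress"])
# prints:
# progress 0
# progress 33
# progress 66
# complete 100
```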

Next, the system invokes the analyze_video method of the TwelveLabsService class defined earlier, passing in the selected video_id and the analysis prompt. The output of this analysis is then inserted into an enhanced research query built from a predefined template. This template instructs the model to respond in markdown and ensures that both the analysis result and the user’s query follow a consistent structure. The enhanced query is then sent to the Sonar service for deep research, which produces a refined and citation-backed response.

backend/routes/api_routes.py (31-162 Line)

    def generate_workflow(twelvelabs_api_key, index_id, video_id, analysis_prompt,
                          research_query, research_prompt_template):
        # Input validation
        if not twelvelabs_api_key:
            yield json.dumps({'type': 'error', 'message': 'TwelveLabs API key is required'}) + '\n'
            return
        if not index_id or not video_id:
            yield json.dumps({'type': 'error', 'message': 'Index ID and Video ID are required'}) + '\n'
            return
        if not research_query:
            yield json.dumps({'type': 'error', 'message': 'Research query is required'}) + '\n'
            return

        try:
            twelvelabs_service = TwelveLabsService(api_key=twelvelabs_api_key)

            # Step 1: Get video details
            yield safe_json_dumps({
                'type': 'progress',
                'step': 'video_details',
                'message': 'Fetching video details...',
                'progress': 0
            }) + '\n'

            video_details = twelvelabs_service.get_video_details(index_id, video_id)
            if not video_details:
                yield safe_json_dumps({'type': 'error', 'message': 'Could not retrieve video details'}) + '\n'
                return

            yield safe_json_dumps({
                'type': 'data',
                'step': 'video_details',
                'data': {
                    'id': video_details.get('_id', ''),
                    'filename': video_details.get('system_metadata', {}).get('filename', ''),
                    'duration': video_details.get('system_metadata', {}).get('duration', 0)
                },
                'progress': 33
            }) + '\n'

            # Step 2: Analyze video
            yield safe_json_dumps({
                'type': 'progress',
                'step': 'analysis',
                'message': 'Analyzing video content...',
                'progress': 33
            }) + '\n'

            analysis_result = twelvelabs_service.analyze_video(video_id, analysis_prompt)

            yield safe_json_dumps({
                'type': 'data',
                'step': 'analysis',
                'data': analysis_result,
                'progress': 66
            }) + '\n'

            # Step 3: Research with context
            yield safe_json_dumps({
                'type': 'progress',
                'step': 'research',
                'message': 'Conducting deep research...',
                'progress': 66
            }) + '\n'

            enhanced_query = research_prompt_template.format(
                analysis_result=analysis_result,
                research_query=research_query
            )

            sonar_service = SonarService()
            research_result = sonar_service.deep_research(enhanced_query, timeout=180)

            if 'error' in research_result:
                yield safe_json_dumps({'type': 'error', 'message': f'Research failed: {research_result["error"]}'}) + '\n'
                return

            # Extract research content
            research_content = ""
            if research_result and research_result.get('choices'):
                research_content = research_result['choices'][0].get('message', {}).get('content', '')

            # Send research content in chunks if large
            max_chunk_size = 10000
            if len(research_content) > max_chunk_size:
                for i in range(0, len(research_content), max_chunk_size):
                    chunk = research_content[i:i + max_chunk_size]
                    is_final = (i + max_chunk_size) >= len(research_content)
                    yield safe_json_dumps({
                        'type': 'research_chunk',
                        'content': chunk,
                        'is_final': is_final,
                        'progress': 80 + (i / len(research_content)) * 20
                    }) + '\n'

                # Send completion with chunked content indicator
                yield safe_json_dumps({
                    'type': 'complete',
                    'data': {
                        'research': {
                            'choices': [{
                                'message': {
                                    'content': '[CHUNKED_CONTENT]'
                                }
                            }],
                            'citations': research_result.get('citations', [])[:10],
                            'usage': research_result.get('usage', {})
                        },
                        'sources': research_result.get('search_results', [])[:10]
                    },
                    'progress': 100
                }) + '\n'
            else:
                # Send completion with research data
                yield safe_json_dumps({
                    'type': 'complete',
                    'data': {
                        'research': {
                            'choices': [{
                                'message': {
                                    'content': research_content
                                }
                            }],
                            'citations': research_result.get('citations', [])[:10],
                            'usage': research_result.get('usage', {})
                        },
                        'sources': research_result.get('search_results', [])[:10]
                    },
                    'progress': 100
                }) + '\n'
        except Exception as e:
            yield safe_json_dumps({'type': 'error', 'message': str(e)}) + '\n'

To handle large research outputs, the workflow implements response chunking. Since Sonar may return extensive content with detailed reasoning and numerous citations, sending it as one large JSON risks exceeding payload size limits and causing serialization errors. To prevent this, the response is split into manageable chunks which are streamed sequentially. This design ensures scalability, avoids network bottlenecks, and maintains frontend responsiveness.
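The chunking logic can be isolated into a small generator, paired with the reassembly a client would perform. This sketch reuses the 10,000-character chunk size and the `research_chunk` / `is_final` fields from the workflow code; `chunk_events` and `reassemble` are hypothetical helper names, and the reassembly is written in Python only for illustration, since the real frontend is a Next.js application.

```python
def chunk_events(content, max_chunk_size=10000):
    """Split long content into ordered research_chunk events."""
    for start in range(0, len(content), max_chunk_size):
        yield {
            "type": "research_chunk",
            "content": content[start:start + max_chunk_size],
            "is_final": start + max_chunk_size >= len(content),
        }


def reassemble(events):
    """Concatenate chunk contents in arrival order (client-side step)."""
    return "".join(e["content"] for e in events if e["type"] == "research_chunk")


text = "x" * 25000
events = list(chunk_events(text))
restored = reassemble(events)
```

With 25,000 characters the generator emits three events, and only the last one carries `is_final` set to true, which gives the client a clear signal to stop buffering and render the full text.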

2 - Video Upload and Indexing Workflow

In the alternate workflow, instead of relying on pre-indexed videos, the user directly uploads a new video file. The upload_video_file method handles this process by validating the required inputs such as the API key, index ID, and file path. Once validated, it sends a request to the TwelveLabs tasks endpoint, creating an upload task with the video file attached. If the task creation is successful, a task ID is returned, which is used to monitor the indexing status.

The method then enters a polling loop, periodically checking the task status until it is either completed, failed, or the defined timeout is reached. When the indexing succeeds, the response includes a video_id, which uniquely identifies the uploaded video and makes it immediately available for further analysis. This ensures the uploaded video becomes the latest available video on the portal. In case of failure or timeout, appropriate error messages are returned, providing robust error handling for the upload and indexing process.
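The polling loop can be separated from the HTTP details, which makes it easier to test. The sketch below is not the repository's code: `poll_until_done` is a hypothetical helper that takes any status-fetching callable, and the injectable `sleep` and `clock` parameters are a design choice added here so the loop can run instantly in tests. The terminal status strings match those checked in the upload method, and the intermediate ones are example values.

```python
import time


def poll_until_done(fetch_status, timeout_seconds=900, interval_seconds=2.0,
                    sleep=time.sleep, clock=time.monotonic):
    """Call fetch_status until it reports success, failure, or the timeout passes."""
    deadline = clock() + timeout_seconds
    while clock() < deadline:
        status = fetch_status()
        if status in ("ready", "completed"):
            return {"status": status}
        if status in ("failed", "error"):
            return {"error": f"Indexing failed with status {status}"}
        sleep(interval_seconds)
    return {"error": "Upload timed out"}


# Simulated task that becomes ready on the third check (example statuses).
statuses = iter(["pending", "indexing", "ready"])
result = poll_until_done(lambda: next(statuses), sleep=lambda _seconds: None)
```

Using a monotonic clock for the deadline avoids surprises if the system clock is adjusted while a long indexing job is running.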

backend/service/twelvelabs_service.py (145-207 Line)

    def upload_video_file(self, index_id: str, file_path: str, timeout_seconds: int = 900):
        import sys
        try:
            if not self.api_key:
                return {"error": "Missing TwelveLabs API key"}
            if not index_id:
                return {"error": "Missing index_id"}
            if not os.path.exists(file_path):
                return {"error": f"File not found: {file_path}"}

            print(f"Starting upload for file: {file_path}", file=sys.stderr)

            tasks_url = "https://api.twelvelabs.io/v1.3/tasks"
            headers = {
                "x-api-key": self.api_key
            }

            # Create upload task
            with open(file_path, "rb") as f:
                files = {
                    "video_file": (os.path.basename(file_path), f)
                }
                data = {
                    "index_id": index_id
                }
                resp = requests.post(tasks_url, headers=headers, files=files, data=data)

            if resp.status_code not in (200, 201):
                return {"error": f"Failed to create upload task: {resp.status_code} {resp.text}"}

            resp_json = resp.json() if resp.text else {}
            task_id = resp_json.get("id") or resp_json.get("task_id") or resp_json.get("_id")
            if not task_id:
                return {"error": f"No task id returned: {resp_json}"}

            # Poll task until ready
            import time
            start_time = time.time()
            print(f"Starting to poll task {task_id} for completion...", file=sys.stderr)

            while time.time() - start_time < timeout_seconds:
                r = requests.get(f"{tasks_url}/{task_id}", headers=headers)
                if r.status_code != 200:
                    time.sleep(2)
                    continue

                task = r.json() if r.text else {}
                status = task.get("status")
                print(f"Task {task_id} status: {status}", file=sys.stderr)

                if status in ("ready", "completed"):
                    video_id = task.get("video_id") or (task.get("data") or {}).get("video_id")
                    print(f"Indexing completed successfully! Video ID: {video_id}", file=sys.stderr)
                    return {"status": status, "video_id": video_id, "task": task}

                if status in ("failed", "error"):
                    print(f"Indexing failed with status: {status}", file=sys.stderr)
                    return {"error": f"Indexing failed with status {status}", "task": task}

                time.sleep(2)

            print(f"Upload timed out after {timeout_seconds} seconds", file=sys.stderr)
            return {"error": "Upload timed out"}
        except Exception as e:
            # Assumed handler: the excerpt ends before the original except clause.
            return {"error": str(e)}

The subsequent workflow pipeline follows the same sequence described earlier. Once a video is uploaded and indexed, the generated video_id is passed through the workflow for analysis, research enhancement, and a citation-backed response. This ensures consistency between the default mode (pre-indexed videos) and the alternate mode (uploaded videos), while maintaining a unified workflow. The accompanying demonstration highlights the process of uploading a video, performing indexing, and executing the complete pipeline.

The application supports handling the TwelveLabs API key directly on the client side. This provides users with the flexibility to connect their own API key, enabling them to analyze and conduct research on their previously indexed videos without relying on the default key.
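On the backend, supporting both a client-supplied key and the default key comes down to a simple precedence rule. The sketch below illustrates one such rule under stated assumptions: `resolve_api_key` is a hypothetical helper, and how the client key actually reaches the backend (header, request body, or otherwise) is not specified here. Only the `TWELVELABS_API_KEY` environment variable name comes from the service code shown earlier.

```python
import os


def resolve_api_key(client_key=None, env=None):
    """Prefer a non-empty key from the client, else fall back to the environment."""
    env = os.environ if env is None else env
    key = (client_key or "").strip()
    return key or env.get("TWELVELABS_API_KEY", "")


# A plain dict stands in for the process environment in this example.
fake_env = {"TWELVELABS_API_KEY": "default-key"}
from_client = resolve_api_key("  user-key  ", env=fake_env)
from_default = resolve_api_key(None, env=fake_env)
```

The resolved value can then be handed to `TwelveLabsService(api_key=...)`, so the rest of the workflow does not need to know which mode the user chose.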

More Ideas to Experiment with the Tutorial

Connecting video understanding to verifiable research opens the door to even more powerful applications. Here are some experimental directions you can try with TwelveLabs Analyze and Sonar by Perplexity:

🔍 Deep Video Fact-Checking — Automatically verify statements and claims in video content by linking extracted insights to trusted sources and citations.

📑 Semantic Research Review — Generate structured summaries/reviews of video content, highlighting key ideas, references, and actionable insights for faster research.

📚 Enhanced Learning Workflows — Combine video insights with external knowledge repositories to create interactive or guided learning experiences for researchers, students, and content creators.

Conclusion

This tutorial demonstrates how video understanding can transform the way we conduct research and interact with video content. By combining TwelveLabs Analyze for deep video analysis with Sonar by Perplexity for knowledge retrieval and citation, we’ve built a system that turns videos from passive media into structured, citable research tools. This empowers researchers, creators, and professionals to explore video content in a meaningful and trustworthy way.

Additional Resources

Learn more about the analyze video engine—Pegasus-1.2. To explore TwelveLabs further and enhance your understanding of video content analysis, check out these resources:

Explore More Use Cases: Visit Sonar by Perplexity to learn about its deep research capabilities. Small changes to this tutorial can open up even more use cases.

Join the Conversation: Share your feedback on this integration in the TwelveLabs Discord.

Explore Tutorials: Dive deeper into TwelveLabs capabilities with our comprehensive tutorials.

We encourage you to use these resources to expand your knowledge and create innovative applications using TwelveLabs video understanding technology.

Introduction

What if you could search videos as easily as you search text over the web and get every insight with a trusted citation? 🎥🔍

Today, that’s still a missing piece of the web. Search engines don't consider the videos as the input to get the result. This leaves researchers, creators, and professionals stuck manually going through hours of footage, with no reliable way to extract structured insights or verify them.

Video Deep Research changes the way to look on to the video for the research. By combining TwelveLabs Analyze pegasus-1.2 for semantic video understanding with Sonar by Perplexity for citation-powered knowledge retrieval, it transforms videos into a searchable, trustworthy research resource.

Let’s explore how the Video Deep Research application works and how you can build a similar solution with the video understanding and deep research by using the TwelveLabs Python SDK and Perplexity Sonar.

You can explore the demo of the application here: Video Deep Research Application

Prerequisites

Generate an API key by signing up at the TwelveLabs Playground.

Sign Up on Sonar and generate the API KEY to access the model.

Find the repository for this application on Github Repository.

You should be familiar with Python, Flask, and Next.js

Demo Application

This demo application showcases how video understanding and knowledge retrieval can unlock a completely new way of doing research.

Users can simply upload a video or select from an already indexed video by connecting their TwelveLabs API Key. The application then processes the content, extracts structured insights, and links them to verifiable references, bridging the gap between video and trustworthy research.

Here, you’ll find a detailed walkthrough of the code and the demo so you can build and extend your own Video Deep Research powered application —

Working of the Application

The application supports two modes of interaction -

Using the TwelveLabs API Key: Connect your personal TwelveLabs API Key to access and interact with your own already indexed videos. Once connected, you can select an existing Index ID and respective video.

Upload a Video: When a video is uploaded, the application is designed to automatically use the default TwelveLabs API key for seamless setup. After the upload, the indexing process begins immediately. Once indexing is complete, the most recently uploaded video will be displayed as the latest available content. From this point onward, the query and citation workflow proceeds identically for both interaction modes, following the steps mentioned below.

The complete workflow of the application begins with configuring the TwelveLabs client. This requires setting up the TwelveLabs API key, either by using the environment-provided key or by allowing the user to connect their own key from the client side to access already indexed videos. Once the client is configured, the process follows a sequential flow as outlined below –

Fetch Indexes – Retrieve the list of indexes associated with the connected TwelveLabs account.

Select Video – Choose a video from the desired index for analysis.

Analyze Video – Perform video content analysis on the selected video using the provided query.

Deep Research – Combine the analyzed response with a structured prompt and the user’s query, then pass it to the Sonar research model for deeper reasoning and citation-backed insights.

This structured workflow ensures a smooth progression from video indexing through to advanced research, providing users with detailed, context-aware outputs.

Both approaches are illustrated in the architecture diagram below.

The flow is designed such that once a research response is generated, users can provide follow-up queries to request additional references or deeper insights around the same context. These subsequent queries are routed back into the Sonar research loop, which maintains contextual continuity and expands on the existing knowledge base. This iterative design ensures that each new query builds upon the prior results, allowing for progressively richer and more context-aware research outputs.

Preparation Steps

Obtain your API key from the TwelveLabs Playground and set up your environment variable.

Do create the Index, with the

pegasus-1.2selection via TwelveLabs Playground, and get theindex_idspecifically for this application.Clone the project from Github.

Do obtain the API KEY from the Sonar by Perplexity.

Create a

.envfile containing your TwelveLabs and Sonar credentials. An example of the environment setup can be found here.

Once you've completed these steps, you're ready to start developing!

Walkthrough for the Video Deep-Research App

This tutorial demonstrates how to build an application that can retrieve citations and relevant information from the web based on video content. The project uses Next.js for the frontend and a Flask API (with CORS enabled) for the backend. Our main focus will be on implementing the core backend utility that powers this video deep-research application, along with setting up the complete environment.

For detailed setup instructions and the full code structure, please refer to the README.md file in the GitHub repository.

1 - Workflow for Video Deep-Research Implementation

The most critical step in the workflow is the initialization and handling of the TwelveLabs API key. The application can either load the key directly from the environment for default usage or allow the user to provide their own API key via the client.

backend/service/twelvelabs_service.py (14-36 Line)

class TwelveLabsService: def __init__(self, api_key=None): # If no API key is provided, try to get it from environment variable if api_key is None: api_key = os.environ.get('TWELVELABS_API_KEY', '') self.api_key = api_key # Store API key in the instance self.client = TwelveLabs(api_key=api_key) # Intialize the TwelveLabs client

1.1 GET the Indexes

Once the user connects their TwelveLabs API key either through the portal or via the default mode where the key is loaded from the environment, the application retrieves all indexes associated with that account. The retrieved data is then structured and delivered to the frontend for display.

backend/service/twelvelabs_service.py (14-36 Line)

def get_indexes(self): try: print("Fetching indexes...") # Check if API key is available if not self.api_key: print("No API key available") return [] # Use TwelveLabs client to get a list of indexes indexes = self.client.indexes.list() result = [] for index in indexes: # Append a dictionary with relevant index details result.append({ "id": index.id, "name": index.index_name }) print(f"ID: {index.id}") print(f" Name: {index.index_name}") return result except Exception as e: print(f"Error fetching indexes: {e}") return []

1.2 GET the list of videos, for respective Index ID

When a user selects an index, the application records the associated index_id. With this index_id, the system invokes the relevant method to fetch and return all videos linked to that index.

backend/service/twelvelabs_service.py (38-70 Line)

def get_videos(self, index_id, page=1): try: # Check if API key is available if not self.api_key: print("No API key available") return [] # Use TwelveLabs client to get a list of videos videos_response = self.client.indexes.videos.list(index_id=index_id, page=page) result = [] for video in videos_response.items: system_metadata = video.system_metadata hls_data = video.hls thumbnail_urls = hls_data.get('thumbnail_urls', []) if hls_data else [] thumbnail_url = thumbnail_urls[0] if thumbnail_urls else None video_url = hls_data.get('video_url') if hls_data else None # Append a dictionary with relevant video details result.append({ "id": video.id, "name": system_metadata.filename if system_metadata and system_metadata.filename else f'Video {video.id}', "duration": system_metadata.duration if system_metadata else 0, "thumbnail_url": thumbnail_url, "video_url": video_url, "width": system_metadata.width if system_metadata else 0, "height": system_metadata.height if system_metadata else 0, "fps": system_metadata.fps if system_metadata else 0, "size": system_metadata.size if system_metadata else 0 }) return result except Exception as e: print(f"Error fetching videos for index {index_id}: {e}") return []

1.3 Analyze the video content

When a video is selected and a research query is provided by the user, both the video_id and the query are passed to the analyze utility for video understanding. The query is appended to a predefined prompt, which enhances the context and ensures meaningful analyzed response. The prompt used for the video analysis is provided here.

backend/service/twelvelabs_service.py (72-81 Line)

def analyze_video(self, video_id, prompt): try: # Call the TwelveLabs client to analyze the video analysis_response = self.client.analyze( video_id=video_id, prompt=prompt ) return analysis_response.data except Exception as e: print(f"Error analyzing video {video_id}: {e}") raise e

1.4 Deep Research on Analyzed Content

After the initial analysis completes, the workflow moves to a deep-research phase powered by the sonar model. Three elements (the user's original query, the research prompt, and the analysis results) are combined into a single query, which the deep_research call wraps in a request payload and sends to Sonar. Sonar returns a refined, structured response that includes source citations. The deep research prompt can be found here.

backend/service/sonar_service.py (lines 13-52)

    def deep_research(self, query, timeout=180):
        try:
            # Ensure API key is available
            if not self.api_key:
                raise ValueError("API key is required")

            payload = {
                "model": "sonar",
                "messages": [
                    {"role": "user", "content": query}
                ]
            }

            headers = {
                "Authorization": f"Bearer {self.api_key}",
                "Content-Type": "application/json"
            }

            # POST request to the API endpoint with timeout
            response = requests.post(
                self.base_url,
                json=payload,
                headers=headers,
                timeout=timeout
            )

            # Check if the response was successful
            if response.status_code == 200:
                return response.json()
            else:
                print(f"Error: {response.status_code} - {response.text}")
                return {"error": f"API request failed with status {response.status_code}"}

        except requests.exceptions.Timeout:
            print("Request timed out")
            return {"error": "Request timed out - Sonar research is taking too long"}
        except requests.exceptions.RequestException as e:
            print(f"Request error: {e}")
            return {"error": f"Network error: {str(e)}"}
        except Exception as e:
            print(f"Error in deep_research: {e}")
            return {"error": str(e)}
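Because deep_research returns either the parsed JSON response or a dictionary with an "error" key, callers need to handle both shapes. The sketch below shows one way to pull out the answer text and citations. The helper name extract_research and the sample response are illustrative assumptions; the field names (choices, message, content, citations) follow the ones the workflow code in this tutorial reads.

```python
def extract_research(result: dict, max_citations: int = 10) -> dict:
    """Normalize a deep_research() result into content plus citations."""
    if not result or "error" in result:
        return {
            "ok": False,
            "error": (result or {}).get("error", "Empty response"),
            "content": "",
            "citations": [],
        }
    choices = result.get("choices") or []
    content = ""
    if choices:
        content = (choices[0].get("message") or {}).get("content", "") or ""
    citations = list(result.get("citations") or [])[:max_citations]
    return {"ok": True, "content": content, "citations": citations}


# Hand-written sample that mimics the response shape; not real API output.
sample = {
    "choices": [{"message": {"content": "Recycling rates are rising [1]."}}],
    "citations": ["https://example.com/report", "https://example.com/study"],
}
parsed = extract_research(sample)
failed = extract_research({"error": "Request timed out"})
print(parsed["content"], parsed["citations"])
print(failed["error"])
```

Keeping this normalization in one place means the streaming layer only has to check a single ok flag before sending results to the frontend.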

Several Sonar variants are available, including sonar, sonar-reasoning, and sonar-deep-research. The sonar-deep-research model supports a reasoning_effort setting (low, medium, high), with medium as the default for a balanced trade-off between research depth and speed. Raising the reasoning effort to "high" produces more citations and deeper insights but also increases response time and token usage, so choose the model and effort level based on the required thoroughness, latency tolerance, and cost.

Changing the model name to sonar-deep-research yields more detailed reasoning and a greater number of citations than the default sonar model, enabling deeper research and richer insights. Below is a demonstration of the enhanced response generated.

1.5 Function calling workflow for the research

The workflow begins by validating the API key, index ID, and video ID and configuring the TwelveLabs client. Once the setup is confirmed, the application fetches the video details from the TwelveLabs service, so the selected video context is well defined before proceeding. Each result is streamed back to the frontend in real time using yield, which allows the UI to update progressively rather than waiting for the entire process to finish.

Next, the system invokes the analyze_video method of the TwelveLabs service class, passing in the selected video_id and the analysis prompt. The output of this analysis is then inserted into an enhanced research query built from a predefined template. The template instructs the model to respond in Markdown and places both the analysis result and the user's query into a consistent structure. The enhanced query is then sent to the Sonar service for deep research, which produces a refined, citation-backed response.
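The template step can be sketched as follows. The template wording is hypothetical; only the two placeholder names, analysis_result and research_query, come from the workflow code, which fills them with str.format.

```python
# Hypothetical template text; only the placeholder names match the workflow code.
RESEARCH_PROMPT_TEMPLATE = (
    "You are a research assistant.\n\n"
    "## Video analysis\n{analysis_result}\n\n"
    "## Research question\n{research_query}\n\n"
    "Respond in Markdown with clear headings and cite a source for every claim."
)

analysis_result = 'The speaker shows {"capacity": "80%"} on a slide about battery aging.'
research_query = "Is the 80% capacity figure consistent with published data?"

enhanced_query = RESEARCH_PROMPT_TEMPLATE.format(
    analysis_result=analysis_result,
    research_query=research_query,
)
print(enhanced_query)
```

Curly braces inside the substituted values are safe, because str.format only parses the template itself. Literal braces in the template text would need to be doubled ({{ and }}).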

backend/routes/api_routes.py (lines 31-162)

    def generate_workflow(twelvelabs_api_key, index_id, video_id, analysis_prompt,
                          research_query, research_prompt_template):
        # Input validation
        if not twelvelabs_api_key:
            yield json.dumps({'type': 'error', 'message': 'TwelveLabs API key is required'}) + '\n'
            return

        if not index_id or not video_id:
            yield json.dumps({'type': 'error', 'message': 'Index ID and Video ID are required'}) + '\n'
            return

        if not research_query:
            yield json.dumps({'type': 'error', 'message': 'Research query is required'}) + '\n'
            return

        try:
            twelvelabs_service = TwelveLabsService(api_key=twelvelabs_api_key)

            # Step 1: Get video details
            yield safe_json_dumps({
                'type': 'progress',
                'step': 'video_details',
                'message': 'Fetching video details...',
                'progress': 0
            }) + '\n'

            video_details = twelvelabs_service.get_video_details(index_id, video_id)
            if not video_details:
                yield safe_json_dumps({'type': 'error', 'message': 'Could not retrieve video details'}) + '\n'
                return

            yield safe_json_dumps({
                'type': 'data',
                'step': 'video_details',
                'data': {
                    'id': video_details.get('_id', ''),
                    'filename': video_details.get('system_metadata', {}).get('filename', ''),
                    'duration': video_details.get('system_metadata', {}).get('duration', 0)
                },
                'progress': 33
            }) + '\n'

            # Step 2: Analyze video
            yield safe_json_dumps({
                'type': 'progress',
                'step': 'analysis',
                'message': 'Analyzing video content...',
                'progress': 33
            }) + '\n'

            analysis_result = twelvelabs_service.analyze_video(video_id, analysis_prompt)

            yield safe_json_dumps({
                'type': 'data',
                'step': 'analysis',
                'data': analysis_result,
                'progress': 66
            }) + '\n'

            # Step 3: Research with context
            yield safe_json_dumps({
                'type': 'progress',
                'step': 'research',
                'message': 'Conducting deep research...',
                'progress': 66
            }) + '\n'

            enhanced_query = research_prompt_template.format(
                analysis_result=analysis_result,
                research_query=research_query
            )

            sonar_service = SonarService()
            research_result = sonar_service.deep_research(enhanced_query, timeout=180)

            if 'error' in research_result:
                yield safe_json_dumps({'type': 'error', 'message': f'Research failed: {research_result["error"]}'}) + '\n'
                return

            # Extract research content
            research_content = ""
            if research_result and research_result.get('choices'):
                research_content = research_result['choices'][0].get('message', {}).get('content', '')

            # Send research content in chunks if large
            max_chunk_size = 10000
            if len(research_content) > max_chunk_size:
                for i in range(0, len(research_content), max_chunk_size):
                    chunk = research_content[i:i + max_chunk_size]
                    is_final = (i + max_chunk_size) >= len(research_content)
                    yield safe_json_dumps({
                        'type': 'research_chunk',
                        'content': chunk,
                        'is_final': is_final,
                        'progress': 80 + (i / len(research_content)) * 20
                    }) + '\n'

                # Send completion with chunked content indicator
                yield safe_json_dumps({
                    'type': 'complete',
                    'data': {
                        'research': {
                            'choices': [{
                                'message': {
                                    'content': '[CHUNKED_CONTENT]'
                                }
                            }],
                            'citations': research_result.get('citations', [])[:10],
                            'usage': research_result.get('usage', {})
                        },
                        'sources': research_result.get('search_results', [])[:10]
                    },
                    'progress': 100
                }) + '\n'
            else:
                # Send completion with research data
                yield safe_json_dumps({
                    'type': 'complete',
                    'data': {
                        'research': {
                            'choices': [{
                                'message': {
                                    'content': research_content
                                }
                            }],
                            'citations': research_result.get('citations', [])[:10],
                            'usage': research_result.get('usage', {})
                        },
                        'sources': research_result.get('search_results', [])[:10]
                    },
                    'progress': 100
                }) + '\n'

        except Exception as e:
            yield safe_json_dumps({'type': 'error', 'message': str(e)}) + '\n'

To handle large research outputs, the workflow implements response chunking. Sonar may return extensive content with detailed reasoning and numerous citations, and sending it as one large JSON object risks exceeding payload size limits and causing serialization errors. To prevent this, any response longer than 10,000 characters is split into chunks that are streamed sequentially, and the final completion event carries a [CHUNKED_CONTENT] placeholder so the frontend knows to assemble the text from the chunks. This keeps each message small and lets the frontend render content as it arrives.
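The chunking protocol can be exercised end to end with a small round trip. This is a sketch in Python standing in for both sides of the stream: chunk_events mirrors the server's research_chunk events, and reassemble is a hypothetical consumer showing how a client could rebuild the text. Both function names are illustrative, and the actual frontend logic may differ.

```python
import json


def chunk_events(content: str, max_chunk_size: int = 10000):
    """Yield newline-delimited JSON events, one per slice of the content."""
    for start in range(0, len(content), max_chunk_size):
        yield json.dumps({
            "type": "research_chunk",
            "content": content[start:start + max_chunk_size],
            "is_final": start + max_chunk_size >= len(content),
        }) + "\n"


def reassemble(lines) -> str:
    """Rebuild the full text from a stream of newline-delimited JSON events."""
    parts = []
    for line in lines:
        if not line.strip():
            continue  # ignore blank keep-alive lines
        event = json.loads(line)
        if event.get("type") == "research_chunk":
            parts.append(event["content"])
            if event.get("is_final"):
                break
    return "".join(parts)


text = "abcdefghij"
events = list(chunk_events(text, max_chunk_size=4))
print(len(events), reassemble(events) == text)
```

Slicing by character count keeps the logic simple. Each event remains valid JSON on its own line, so the consumer can parse the stream incrementally.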

2 - Video upload and indexing workflow

In the alternate workflow, instead of relying on pre-indexed videos, the user uploads a new video file directly. The upload_video_file method handles this process by validating the required inputs: the API key, the index ID, and the file path. Once validated, it sends a request to the TwelveLabs tasks endpoint, creating an upload task with the video file attached. If the task is created successfully, a task ID is returned, which is used to monitor the indexing status.

The method then enters a polling loop, checking the task status every two seconds until the task completes, fails, or the timeout (900 seconds by default) is reached. When indexing succeeds, the response includes a video_id, which uniquely identifies the uploaded video and makes it immediately available for further analysis. The uploaded video then appears as the latest available video on the portal. On failure or timeout, the method returns an error message describing what went wrong.

backend/service/twelvelabs_service.py (lines 145-207)

    def upload_video_file(self, index_id: str, file_path: str, timeout_seconds: int = 900):
        import sys
        import time
        try:
            if not self.api_key:
                return {"error": "Missing TwelveLabs API key"}
            if not index_id:
                return {"error": "Missing index_id"}
            if not os.path.exists(file_path):
                return {"error": f"File not found: {file_path}"}

            print(f"Starting upload for file: {file_path}", file=sys.stderr)

            tasks_url = "https://api.twelvelabs.io/v1.3/tasks"
            headers = {
                "x-api-key": self.api_key
            }

            # Create upload task
            with open(file_path, "rb") as f:
                files = {
                    "video_file": (os.path.basename(file_path), f)
                }
                data = {
                    "index_id": index_id
                }
                resp = requests.post(tasks_url, headers=headers, files=files, data=data)

            if resp.status_code not in (200, 201):
                return {"error": f"Failed to create upload task: {resp.status_code} {resp.text}"}

            resp_json = resp.json() if resp.text else {}
            task_id = resp_json.get("id") or resp_json.get("task_id") or resp_json.get("_id")
            if not task_id:
                return {"error": f"No task id returned: {resp_json}"}

            # Poll task until ready
            start_time = time.time()
            print(f"Starting to poll task {task_id} for completion...", file=sys.stderr)

            while time.time() - start_time < timeout_seconds:
                r = requests.get(f"{tasks_url}/{task_id}", headers=headers)
                if r.status_code != 200:
                    # Transient error: wait and retry
                    time.sleep(2)
                    continue

                task = r.json() if r.text else {}
                status = task.get("status")
                print(f"Task {task_id} status: {status}", file=sys.stderr)

                if status in ("ready", "completed"):
                    video_id = task.get("video_id") or (task.get("data") or {}).get("video_id")
                    print(f"Indexing completed successfully! Video ID: {video_id}", file=sys.stderr)
                    return {"status": status, "video_id": video_id, "task": task}

                if status in ("failed", "error"):
                    print(f"Indexing failed with status: {status}", file=sys.stderr)
                    return {"error": f"Indexing failed with status {status}", "task": task}

                time.sleep(2)

            print(f"Upload timed out after {timeout_seconds} seconds", file=sys.stderr)
            return {"error": "Upload timed out"}
        except Exception as e:
            # Assumed handler: the excerpt ends before the original except clause
            return {"error": str(e)}
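The polling logic can also be isolated from the HTTP calls, which makes it easy to test without a network. The sketch below is an illustrative refactor, not the project's code: poll_until_done, fetch_status, and the injected sleep and clock parameters are all hypothetical names, while the status values match those checked in the method above.

```python
import time
from typing import Callable, Optional


def poll_until_done(
    fetch_status: Callable[[], Optional[dict]],
    timeout_seconds: float = 900,
    interval_seconds: float = 2.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> dict:
    """Poll fetch_status() until the task finishes, fails, or times out."""
    deadline = clock() + timeout_seconds
    while clock() < deadline:
        task = fetch_status()  # None signals a transient fetch failure
        if task:
            status = task.get("status")
            if status in ("ready", "completed"):
                return {"status": status, "video_id": task.get("video_id"), "task": task}
            if status in ("failed", "error"):
                return {"error": f"Indexing failed with status {status}", "task": task}
        sleep(interval_seconds)
    return {"error": "Upload timed out"}


# Simulated task history: still indexing, one transient failure, then ready.
history = iter([{"status": "indexing"}, None, {"status": "ready", "video_id": "vid_123"}])
result = poll_until_done(lambda: next(history), sleep=lambda _: None)
print(result["status"], result["video_id"])
```

Injecting sleep and clock keeps the example fast and deterministic. In production the defaults (time.sleep and time.monotonic) apply.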

The rest of the pipeline follows the same sequence described earlier. Once a video is uploaded and indexed, the generated video_id is passed through the workflow for analysis, research enhancement, and a citation-backed response. This keeps the default mode (pre-indexed videos) and the alternate mode (uploaded videos) consistent within a single unified workflow. Below is a quick demonstration of uploading a video, indexing it, and running the complete pipeline.

The application supports handling the TwelveLabs API key directly on the client side. This provides users with the flexibility to connect their own API key, enabling them to analyze and conduct research on their previously indexed videos without relying on the default key.

More Ideas to Experiment with the Tutorial

Connecting video understanding to verifiable research opens the door to even more powerful applications. Here are some experimental directions you can try with TwelveLabs Analyze and Sonar by Perplexity:

🔍 Deep Video Fact-Checking — Automatically verify statements and claims in video content by linking extracted insights to trusted sources and citations.

📑 Semantic Research Review — Generate structured summaries/reviews of video content, highlighting key ideas, references, and actionable insights for faster research.

📚 Enhanced Learning Workflows — Combine video insights with external knowledge repositories to create interactive or guided learning experiences for researchers, students, and content creators.

Conclusion

This tutorial demonstrates how video understanding can transform the way we conduct research and interact with video content. By combining TwelveLabs Analyze for deep video analysis with Sonar by Perplexity for knowledge retrieval and citation, we've built a system that turns videos from passive media into structured, citable research tools. It empowers researchers, creators, and professionals to explore video content in a meaningful and trustworthy way.

Additional Resources

Learn more about Pegasus-1.2, the engine behind video analysis. To explore TwelveLabs further and deepen your understanding of video content analysis, check out these resources:

Explore More Use Cases: Visit Sonar by Perplexity to learn about its deep research capabilities. Small changes to this tutorial can help you explore even more use cases.

Join the Conversation: Share your feedback on this integration in the TwelveLabs Discord.

Explore Tutorials: Dive deeper into TwelveLabs capabilities with our comprehensive tutorials.

We encourage you to use these resources to expand your knowledge and create innovative applications using TwelveLabs video understanding technology.

Related articles

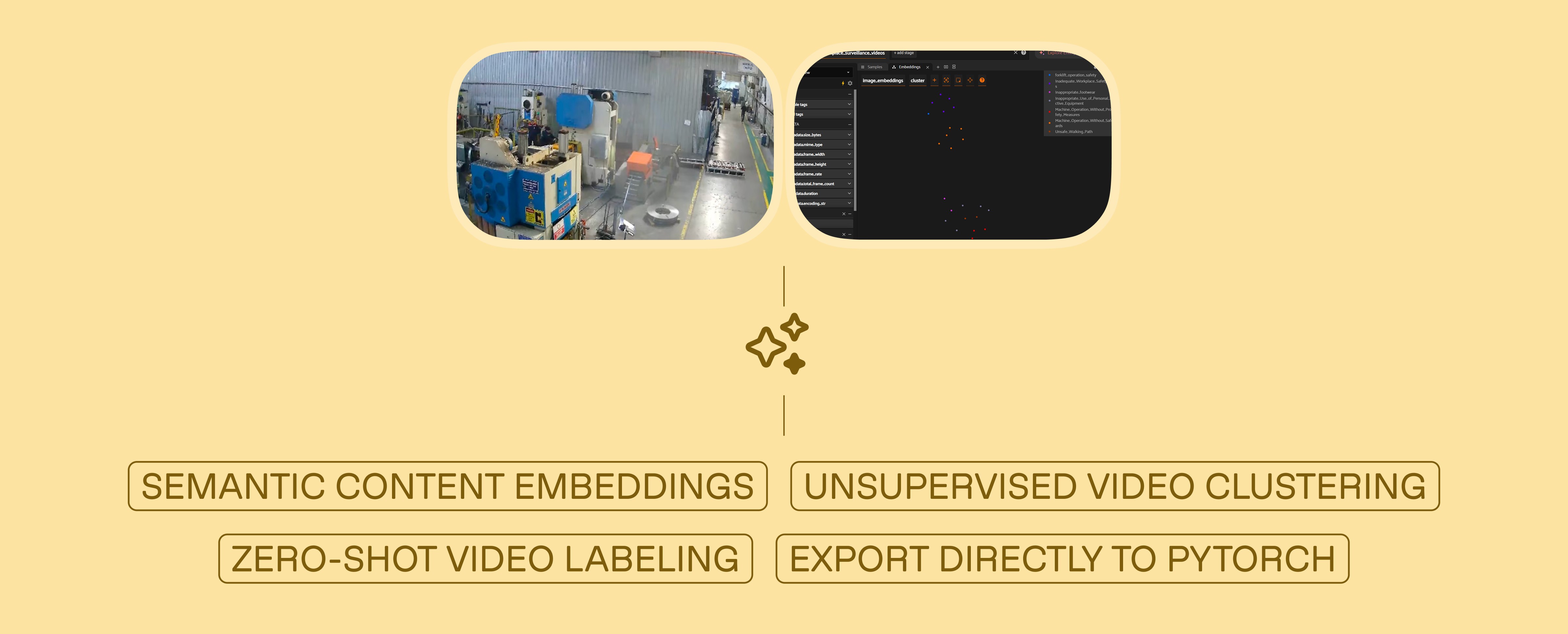

From Raw Surveillance to Training-Ready Datasets in Minutes: Building an Automated Video Curator with TwelveLabs and FiftyOne

From Manual Review to Automated Intelligence: Building a Surgical Video Analysis Platform with YOLO and Twelve Labs

Building Recurser: Iterative AI Video Enhancement with TwelveLabs and Google Veo

Building an AI-Powered Sponsorship ROI Analysis Platform with TwelveLabs