Tutorial


Building An AI-Powered Lecture Analysis Platform with TwelveLabs API

Nathan Che


This tutorial walks through the TwelveLabs lecture content enhancer, an application that helps educators and students get more out of lecture videos through AI-generated content and insights. The application also serves as a side-by-side benchmark of TwelveLabs against two other video understanding models on the market, AWS Nova and Google Gemini. Given the same prompt and the same topK, topP, and temperature settings, each model’s output is shown side by side along with its generation time. Video model output is hard to compare with statistics alone, so the comparison is visual, using both short-form and long-form videos. By showcasing these results, we hope to help visualize the accuracy, speed, and inference capabilities of the TwelveLabs video models. The application is also AWS-first: it uses services such as AWS DynamoDB, AWS Bedrock, and AWS Lambda for asynchronous API invocation, which makes it a good entry point to using TwelveLabs in AWS Bedrock.


September 12, 2025


15 Minutes


Tutorial Guide

Note: Alongside a step-by-step tutorial of the software, this article delves heavily into benchmarking and cost analysis between models. This means that this article is highly comprehensive and we recommend skipping certain sections depending on your role:

Software Engineers — Those that want to learn and build the app from scratch.

Go from start to finish, but feel free to skim any “Deep Dives”.

Software Architects — Those learning how to integrate TwelveLabs with AWS.

Skim through the technical code details, but read and analyze technical architecture diagrams.

Decision Makers — Those wondering if TwelveLabs is the correct solution for your business.

Jump straight to the “Deep Dive” topics on benchmarking and cost analysis.

I highly recommend all roles watch at least the application demo to get a high level overview of what we have built.

Introduction

What if teachers could instantly transform their static video lectures into interactive study guides, chapters, and practice questions to enrich student learning outside of the classroom?✨

In this tutorial we will be building a Lecture Content Analyzer platform using TwelveLabs and AWS. It will be an end-to-end system that allows teachers to transform their static video content in one simple upload into:

Targeted Practice Questions

Custom Study Guides

Time-stamped Video Chapters

Transcripts for accessibility

Pacing Recommendations

Detailed Notes | Summaries

And much more…

At its core we have TwelveLabs Pegasus and Marengo models providing deep second-to-second video understanding and comprehensive multimodal embeddings, allowing us to generate highly relevant video-accurate content and recommendation embeddings.

This multimodal understanding is built on top of AWS, with the latest AWS Bedrock TwelveLabs Partnership, allowing for easy TwelveLabs integration into AWS and access to 100+ AWS services to support your software.

Finally, you’ll be able to visualize and compare TwelveLabs Pegasus against Google Gemini and AWS Nova. We’ll dive deep into model benchmarks, visual comparisons, and detailed cost analysis for each model respectively.

Application Demo

If you’d like to see it in action, feel free to watch the video demo below or follow along with the code in this GitHub repo before starting the tutorial!

Live Application Link: https://twelve-labs-education-poc-s7sz.vercel.app/

Learning Objectives

In this tutorial you will:

Learn how TwelveLabs seamlessly integrates with AWS services

Configure your own AWS services including DynamoDB and S3.

Understand key concepts like output inference quality, indexing time, and inference time.

Conduct detailed cost analysis between various multimodal models on the market.

Analyze technical architecture diagrams and decisions that allow multimodal models to be performant with your frontend interface.

Prerequisites

Python 3.8+: Download Python | Python.org

Node.js (a current LTS release): Node.js - Download Node.js®

AWS CLI: Setting up the AWS CLI - AWS Command Line Interface

Terraform (Optional): Install Terraform | Terraform | HashiCorp Developer

TwelveLabs API Key: Authentication | TwelveLabs

TwelveLabs Index: Python SDK | TwelveLabs

AWS Access Key: Credentials - Boto3 1.40.12 documentation

Google Gemini API Key: Using Gemini API keys | Google AI for Developers

Intermediate understanding of Python and JavaScript.

Local Environment Setup

1 - Pull the repository to the local environment.

>> git

2 - Provision the required AWS resources by running the Terraform script from your local repository.

* For those who want to understand the AWS integration and configure each service by hand, you can follow the manual setup instructions in the README: GitHub Repo README.

3 - Add environment variables into the frontend and backend folder.

.env.local (/frontend/)

TWELVE_LABS_API_KEY=...
NEXT_PUBLIC_TWELVE_LABS_INDEX_ID=...
AWS_ACCESS_KEY_ID=...
AWS_SECRET_ACCESS_KEY=...
AWS_S3_BUCKET_NAME=twelvelabs-lecture-content-poc
AWS_REGION=us-east-1
GEMINI_API_KEY=...
BLOB_READ_WRITE_TOKEN=...
NEXT_PUBLIC_API_URL=...

.env (/api/)

TWELVE_LABS_API_KEY=...
TWELVE_LABS_INDEX_ID=...
AWS_ACCESS_KEY=...
AWS_SECRET_ACCESS_KEY=...
AWS_ACCOUNT_ID=...
DYNAMODB_CONTENT_TABLE_NAME=twelvelabs-education-video-poc
DYNAMODB_CONTENT_USER_NAME=twelvelabs-education-user-poc
S3_BUCKET_NAME=twelvelabs-lecture-content-poc
GOOGLE_API_KEY=...

4 - Create a virtual environment and install Python packages.

Navigate to your GitHub repository and run the following command:

>> python -m

Then activate your virtual environment and install all Python requirements by running the commands:

>> .\venv\Scripts\activate

>> pip install -r

5 - Start frontend sample application using Node Package Manager (NPM).

Inside your GitHub repository, navigate to your frontend folder in console and type the following:

>> npm

Then navigate to localhost:3000 to access the site.

* Ensure that NPM is installed: Downloading and installing Node.js and npm | npm Docs

6 - Start the backend by running main.py in the API folder of the GitHub repository.

Inside your GitHub repository, navigate to your API folder in the console and type the following:

Leave this running as it will host the REST APIs that connect to your AWS console and the AI models!

Phase 1: Lecture Indexing

Great! Now that you have your local environment and AWS infrastructure set up we can get started! Let’s begin with indexing the video into the various cloud providers. Here is the technical architecture for this process:

When given a video, the website immediately converts it to a video blob (Binary Large Object) that lives in your browser’s memory and can then be read as raw bytes. Those bytes can be written out as an MP4 file and sent in API requests to Google Cloud Platform and an AWS S3 bucket, respectively.

/frontend/…/api/upload-gemini/route.js (Lines 23 - 58)

// Convert file to buffer
const bytes = await file.arrayBuffer();
const buffer = Buffer.from(bytes);

// Create a temporary file path
const tempFileName = `${userName}-${Date.now()}-${file.name}`;
const tempFilePath = join(tmpdir(), tempFileName);

// Write the buffer to a temporary file
await writeFile(tempFilePath, buffer);

try {
  // Upload file to GCP using Google Client SDK.
  const myfile = await googleClient.files.upload({
    file: tempFilePath,
    config: { mimeType: file.type || 'video/mp4' },
  });
  return NextResponse.json({
    success: true,
    geminiFileId: myfile.uri,
    fileName: file.name
  });
} finally {
  await writeFile(tempFilePath, '');
}

💡 Design Choice: Why don’t we also upload to TwelveLabs? Isn’t that our main multimodal AI model?

This is thanks to the recent AWS partnership, which integrates TwelveLabs directly into AWS Bedrock. This means:

Video Storage Integration into S3: TwelveLabs models can now directly view and analyze your videos in your S3 bucket, as opposed to uploading to TwelveLabs directly.

Data Compliance & Security: Using TwelveLabs no longer requires extra compliance work for your video and software, as your videos can now stay in your AWS infrastructure while still meeting AWS cloud compliance standards.

Easy Developer Integration: Instead of downloading separate SDKs for TwelveLabs and Boto3 for AWS, everything can now be handled in Boto3! This simplifies the development process and ensures a more streamlined code base in production.

Read more about our recent partnership here: TwelveLabs x AWS Amazon Bedrock - Twelve Labs!

Because we upload the video to fewer cloud providers, upload and indexing times drop substantially, which makes for a smoother user experience. In the code below, we use Promise.all to upload to Google Cloud Platform and AWS S3 concurrently.

/frontend/…/dashboard/courses/page.js

// Upload asynchronously to all cloud providers.
const [s3UploadResult, geminiUploadResult] = await Promise.all([
  uploadToS3(),
  uploadToGemini(),
]);

💡Design Choice: Why did we upload to Google as well instead of a single video database like an AWS S3 bucket? Wouldn’t it save money?

The answer lies in the simple fact that providers like Google Gemini require files over 20MB to be uploaded to their own cloud platform, Google Cloud Platform. This incurs additional charges. Prior to the AWS partnership you also had to store your videos on a TwelveLabs index, but with this new partnership, your video data can now all be centralized onto a single AWS infrastructure!

PSA: Though I will say the native TwelveLabs platform also offers powerful features like embedding visualization.

Lastly, we will store metadata regarding our video inside an AWS DynamoDB table for easy retrieval later.

async def upload_video(video_params: VideoRequest = Depends(get_video_id_from_request)):
    try:
        db_handler = DBHandler()
        db_handler.upload_video_ids(
            twelve_labs_video_id=video_params.twelve_labs_video_id,
            s3_key=video_params.s3_key,
            gemini_file_id=video_params.gemini_file_id)
    except Exception as e:
        return DefaultResponse(status='error', message=str(e), status_code=500)
    return DefaultResponse(status='success', message='Video uploaded successfully', status_code=200)

Feel free to take a look into the helpers module of the API folder if you’d like to learn more, however it is definitely not required due to the volume of code for this tutorial. Here is the upload_video_ids() method used to upload the keys into your DYNAMODB_CONTENT_TABLE_NAME table.

def upload_video_ids(self, twelve_labs_video_id: str, s3_key: str, gemini_file_id: str):
    table_name = os.getenv('DYNAMODB_CONTENT_TABLE_NAME')
    if not table_name:
        # Name the variable that is actually read above.
        logger.error("DYNAMODB_CONTENT_TABLE_NAME environment variable not set")
        raise Exception("DYNAMODB_CONTENT_TABLE_NAME environment variable not set")

    table = self.dynamodb.Table(table_name)
    logger.info("DynamoDB table reference obtained")

    item = {
        'video_id': twelve_labs_video_id,
        's3_key': s3_key,
        'gemini_file_id': gemini_file_id,
        'created_at': boto3.dynamodb.types.Decimal(str(int(time.time()))),
    }
    logger.info(f"Preparing to upload item: {item}")

    response = table.put_item(Item=item)
    logger.info(f"DynamoDB put_item response: {response}")
    return response

Now that the videos are uploaded to their respective providers, we are ready to pass them on to the AI inference stage to generate content for our students and gain improvement insights for our educators!

Phase 2: Lecture Content AI Inference

Now on to the fun part, AI inference! Before reading code snippets for the TwelveLabs Pegasus API calls, we must first understand the technical architecture behind them, as the right API architecture can be the difference between an inference time of under 30 seconds and one of 5+ minutes.

As seen above we use Asynchronous Server Gateway Interface (ASGI) FastAPI endpoints to generate content for each different section of the lecture analysis. This is a minor detail, with a major impact towards your user experience.

💡Design Choice: What even is an ASGI and why is that important in our backend API services?

ASGI, the successor to the Web Server Gateway Interface (WSGI), is a server interface standard built around asynchronous calls that lets a server handle multiple connections and events simultaneously. It does this by switching to other tasks while one is waiting, for example on a network response.

Think of this as a chef cooking spaghetti! While his noodles are boiling, the chef won’t just stand there and wait until the noodles are complete before starting the meatballs. Like any modern restaurant with lots of customers, they need to work fast, so they may start the meatballs to multitask.

This same concept is applied to our API calls. For example, while Google Gemini loads the summary, we can also request the TwelveLabs and AWS Nova summaries and handle whichever finishes first.

This allows us to generate all the following data concurrently, without having to wait for each API call to each provider to finish (reducing inference time from ~5 minutes to <30 seconds):

Gist (Title, Recommendation Tags, Topics)

Timeline Chapters

Pacing Recommendations

Key Takeaways

Quiz Questions

Student Engagement Opportunities

Summary

Transcript
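The concurrency idea described above can be sketched with Python’s standard asyncio library. This is a minimal illustration, not the project’s code: fake_provider_call is a hypothetical stand-in that sleeps instead of calling a real model, and the delays are made-up numbers. The point is only that the total wall time tracks the slowest call rather than the sum of all calls.

```python
import asyncio
import time


async def fake_provider_call(provider: str, delay_s: float) -> dict:
    """Hypothetical stand-in for a network-bound inference call."""
    started = time.perf_counter()
    # While this task waits, the event loop is free to run the other tasks.
    await asyncio.sleep(delay_s)
    return {
        "provider": provider,
        "summary": f"summary from {provider}",
        "seconds": time.perf_counter() - started,
    }


async def generate_summaries() -> list:
    # Start all three calls at once. gather() returns results in the
    # order the calls were passed in, regardless of which finishes first.
    return await asyncio.gather(
        fake_provider_call("twelvelabs", 0.10),
        fake_provider_call("gemini", 0.05),
        fake_provider_call("nova", 0.15),
    )


def run_demo() -> tuple:
    """Run the three calls concurrently and report total wall time."""
    started = time.perf_counter()
    results = asyncio.run(generate_summaries())
    return results, time.perf_counter() - started
```

Run sequentially, the three simulated calls would take about 0.30 seconds; run through gather they take roughly as long as the slowest one. Each result also carries its own elapsed time, which is the same per-call measurement the benchmarking sections rely on.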

To keep these API endpoints organized, we define an abstract base class that every provider implements, as seen below:

/api/providers/llm.py (Lines 1 - 31)

from abc import ABC, abstractmethod

class LLMProvider(ABC):

    @abstractmethod
    def __init__(self, *args, **kwargs):
        pass

    @abstractmethod
    def generate_chapters(self):
        pass

    @abstractmethod
    def generate_key_takeaways(self):
        pass

    @abstractmethod
    def generate_pacing_recommendations(self):
        pass

    @abstractmethod
    def generate_quiz_questions(self):
        pass

    @abstractmethod
    def generate_engagement(self):
        pass

    @abstractmethod
    def generate_gist(self):
        pass

Below you can see how the file structure is organized and each provider using this abstract class.

The API endpoints in our FastAPI server can then call the appropriate provider class and method with ease.

By separating out each data point into a REST API endpoint, we are able to individually measure inference time, an important measure dictating how long it takes for the AI to return an output. You can also see with this class design in Python how “safe” it is to add more providers and easy for others to check which methods are required.
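To make the “safe” part concrete, here is a small sketch of what Python does when a provider forgets a required method. The interface is trimmed to two methods for brevity, and CompleteProvider, IncompleteProvider, and can_instantiate are hypothetical names used only for illustration; they are not classes from the repository.

```python
from abc import ABC, abstractmethod


class LLMProvider(ABC):
    """Trimmed-down version of the provider interface (two methods only)."""

    @abstractmethod
    def generate_gist(self) -> dict:
        ...

    @abstractmethod
    def generate_chapters(self) -> list:
        ...


class CompleteProvider(LLMProvider):
    """Hypothetical provider that implements every required method."""

    def generate_gist(self) -> dict:
        return {"title": "placeholder title"}

    def generate_chapters(self) -> list:
        return []


class IncompleteProvider(LLMProvider):
    """Hypothetical provider that forgot generate_chapters()."""

    def generate_gist(self) -> dict:
        return {"title": "placeholder title"}


def can_instantiate(provider_cls) -> bool:
    """Return True if the class satisfies the abstract interface."""
    try:
        provider_cls()
        return True
    except TypeError:
        # Python refuses to instantiate a class that still has
        # unimplemented abstract methods.
        return False
```

Because the failure happens at instantiation rather than deep inside a request handler, a missing method on a new provider shows up as soon as the provider is constructed.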

Deep Dive Topic: Benchmarking & Cost Analysis

Before we show the results it’s important to understand how we are benchmarking the models.

TopP, topK, temperature, and other custom model inference settings are held at default.

Default settings are generally where models perform optimally for the “general use case”.

Learn more about each setting: Temperature, Top-P, Top-K: A Comprehensive Guide | MoonlightDevs - Tech Blog (Credit MoonlightDevs)

All models will be fed the same prompt for each content section to ensure fair input.

Prompts can be viewed in the /api/helpers/prompts.py file.

Models that support structured output will be assisted with Pydantic.

This is a common developer tool that ensures JSON schema outputs. If not provided, this is generally a flaw in provider model design as it makes it difficult to produce complex objects.

Feel free to read more about how Pydantic works here: Welcome to Pydantic - Pydantic

All models will be passed the same video: https://www.youtube.com/watch?v=5LeZflr8Zfs.
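To illustrate why structured output matters in the setup above, the sketch below shows the kind of check that a schema library such as Pydantic automates. It deliberately uses only the standard library (dataclasses and json) as a dependency-free stand-in, so it is not Pydantic and not the project’s schema: the QuizQuestion fields and the parse_quiz_questions helper are assumptions made up for this example.

```python
import json
from dataclasses import dataclass


@dataclass
class QuizQuestion:
    # Field names are illustrative assumptions, not the project's schema.
    question: str
    options: list
    answer: str


def parse_quiz_questions(raw_json: str) -> list:
    """Parse model output and reject anything that does not match the schema."""
    parsed = []
    for entry in json.loads(raw_json):
        # Raises TypeError on missing or unexpected keys.
        item = QuizQuestion(**entry)
        if not isinstance(item.question, str) or not isinstance(item.answer, str):
            raise ValueError("question and answer must be strings")
        if not isinstance(item.options, list) or item.answer not in item.options:
            raise ValueError("answer must be one of the listed options")
        parsed.append(item)
    return parsed
```

When a model cannot be constrained to a schema, every response needs this kind of defensive parsing before the frontend can render it, which is the extra development effort referred to in the comparison.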

With that out of the way, let’s see the results for output inference quality! This is the primary metric that often dictates the formatting and accuracy of content generated!

As seen above, output quality varies between the models. Of course, this “quality” measure depends entirely on your use case, but based on the YouTube video, here are the takeaways:

Google Gemini: Highly accurate, but not very precise. Fine-tuning and prompt engineering is required to ensure that it doesn’t list every detail about the video. It overlisted recommendation tags, which can be dangerous for a recommendation system that relies on tags.

AWS Nova: Very accurate and precise, but lacking when it comes to structured output. At the time of writing, AWS Nova does not support structured outputs with Pydantic, which forces workarounds such as adjusting the temperature and adding output requirements to the prompt.

Read more: Require structured output - Amazon Nova

TwelveLabs: Mixes the right amount of accuracy and precision while having highly accurate structured output. The number of topics could have been more detailed, but that would come down to a matter of prompting as we said “at least 1 topic”.

Now onto something less subjective, the inference time of each model.

As seen above, the TwelveLabs and Google models had extremely similar generation times. However, there are some key distinctions to point out.

TwelveLabs Cached Data: You may notice that the transcript and gist are near instant for TwelveLabs! This is because we recognize common info like video titles will likely always be needed, so we cache that data inside of our platform allowing near-instant retrieval time.

Long-Form vs. Short-Form Text Content:

Gemini is faster for generating long-form text content. We can see it wins in lengthy JSON structured content like the Chapters and Key Takeaways that require timestamps.

TwelveLabs is faster when it comes to short-form engagement like bullet points and summary.

Finally, for the most important part, cost analysis of each model! To do this in a fair manner and easily, let’s assume we have a 1 hour video and reference each provider’s documentation respectively.

* Inference API Calls: 8 (One for each category)

TwelveLabs: $2.79

Methodology: TwelveLabs | Pricing Calculator

Video Upload Hours = 1

Disabled Embedding API — Did not use embedding for other models.

Analyze API Usage = 8 — One for each category of data.

Search API Usage = 0

Google Gemini: $10.62 (without additional GCP infrastructure costs)

Google Gemini relies on a pay-by-token policy, where the amount you pay depends on the size of input and output tokens.

Documentation: Understand and count tokens | Gemini API | Google AI for Developers

295 tokens per second of a video.

$1.25 per 1M tokens.

Tokens in an hour video: 3600 seconds * 295 tokens/second = 1062000

Calculations: (1,062,000 / 1,000,000) * 1.25 * 8 = $10.62

AWS Nova: $0.22 (without additional AWS infrastructure costs)

Token Documentation: Video understanding - Amazon Nova

Explicitly estimates 1 hour video to be: 276,480 tokens.

Pricing Documentation: Pricing

AWS Nova Pro model = $0.0008 per 1,000 input tokens

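Calculations: (276,480 / 1,000) * 0.0008 = $0.22 per call. Note that this figure covers a single inference call; applying the same 8-call assumption used for the other providers gives about $1.77.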
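The token-based estimates above can be reproduced with a few lines of Python. This is a sketch under the same assumptions as the text: only input (video) tokens are counted, the token rates and prices are the ones quoted above, and token_cost is a hypothetical helper written for this example. The Nova calculation in the text covers one call, so the 8-call value is computed alongside it for comparison.

```python
def token_cost(tokens: int, usd_per_unit: float, unit: int, calls: int = 1) -> float:
    """Input-token cost in USD for `calls` requests over the same video."""
    return tokens / unit * usd_per_unit * calls


SECONDS_PER_HOUR = 3600

# Google Gemini: 295 tokens per second of video, $1.25 per 1M tokens, 8 calls.
gemini_tokens = SECONDS_PER_HOUR * 295
gemini_cost = token_cost(gemini_tokens, 1.25, 1_000_000, calls=8)

# AWS Nova Pro: 276,480 tokens per hour of video, $0.0008 per 1,000 tokens.
nova_tokens = 276_480
nova_cost_one_call = token_cost(nova_tokens, 0.0008, 1_000)
nova_cost_eight_calls = token_cost(nova_tokens, 0.0008, 1_000, calls=8)

print(f"Gemini, 8 calls: ${gemini_cost:.2f}")
print(f"Nova, 1 call:    ${nova_cost_one_call:.2f}")
print(f"Nova, 8 calls:   ${nova_cost_eight_calls:.2f}")
```

Swapping in your own video length, call count, or a provider’s updated pricing is a one-line change, which is useful because these prices change over time.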

Here is a table with all of these insights side-by-side, so that you can make the best informed decision:

| Metric | TwelveLabs | Google Gemini | AWS Nova |
| --- | --- | --- | --- |
| Output Inference Quality | ✅ Balanced & Structured: A good mix of accuracy and precision. | 🎯 Accurate but Imprecise: Highly accurate but tends to over-list details and tags. | ⚙️ Accurate but Unstructured: Very accurate and precise, but struggles with formatting. |
| Structured Output | ✅ Yes (Reliable): Natively supports and reliably returns structured data. | ✅ Yes (Reliable): Natively supports and reliably returns structured data. | ❌ No (Unreliable): Does not guarantee structured output, requiring extra development effort to parse responses. |
| Inference Time | Fast (especially cached data): Excels at short-form content and instant retrieval of common data. | Fast (long-form content): Similar overall speed but has an edge in generating lengthy, complex content. | Average: At times can be fast, but generally takes an additional 10-20 seconds compared to other models. |
| Caching Mechanism | ✅ Yes: Pre-processes and caches transcripts and gists upon upload for instant access. | ❌ No: Each query re-processes the video content. | ❌ No: Each query re-processes the video content. |
| Cost (1-hour video) | 💸💸 $2.79 | 💸💸💸 $10.62 | 💵 $0.22 per call (about $1.77 for 8 calls) |
| Pricing Model | Per-Hour & Per-Call: Charges for video length once, then a smaller fee for each analysis call. | Pay-Per-Token: Charges for the total tokens in the video for each query. | Pay-Per-Token: Charges for the total tokens in the video for each query. |
| Additional Costs | (Included in platform) | GCP Infrastructure Costs | AWS Infrastructure Costs |

Of course, all models will improve and these metrics can change, but with this platform you can experiment with your own videos and see which video-understanding model works best for your use case!

Phase 3: Student Feedback Loop + Classroom Insights

Now onto the final section, where we not only enable the student feedback loop so teachers can understand their classroom, but truly see how TwelveLabs integrates seamlessly into the AWS technology stack.

As you can see above, students now get access to the TwelveLabs-generated data, which includes the practice questions, chapter timeline, and course summary. With TwelveLabs Pegasus text generation, creating supplemental study material can go from hours to seconds, and our AWS infrastructure helps deliver this content securely.

Once students complete these practice questions and watch the video, students receive a report detailing:

Quiz Performance by Chapter — Detailed overview of what they missed and the specific topic.

Personalized Study Recommendations — Targeting exactly what students missed.

Recommended External Video Content — Leveraging TwelveLabs Marengo embeddings for similarity comparison with videos in S3 database.

Mastery Score — Score given by the student’s performance by topic.

With that in mind, let’s see how this works in our technical architecture and examine exactly why AWS integration plays such a key role in full end-to-end applications.

As seen in the technical architecture, we incorporate Claude 3.5 Sonnet from the AWS Bedrock service. Without installing any external packages or utilizing external cloud providers, we have access to text-based reasoning models that can directly interact with our data in AWS DynamoDB.

Furthermore, to enhance student learning we integrated embedding-based YouTube video recommendations. Using the TwelveLabs Marengo model, we generated context-rich vector embeddings and ran a K-nearest neighbor search based on Euclidean distance.

/api/helpers/reasoning.py (lines 262 - 289)

def fetch_related_videos(self, video_id: str):
    # Fetch original video from S3 Bucket.
    presigned_urls = DBHandler().fetch_s3_presigned_urls()

    # Fetch embedding stored in Marengo Model
    video_object = self.twelvelabs_client.index.video.retrieve(
        index_id=os.getenv('TWELVE_LABS_INDEX_ID'),
        id=video_id,
        embedding_option=['visual-text'])
    video_duration = video_object.system_metadata.duration
    video_embedding_segments = video_object.embedding.video_embedding.segments

    # Concatenate the per-segment embeddings into one vector.
    combined_embedding = np.array([])
    for segment in video_embedding_segments:
        combined_embedding = np.concatenate([combined_embedding, segment.embeddings_float])

    # Generate embeddings of other YouTube videos.
    other_video_embeddings = {}
    for video_url in presigned_urls:
        video_embedding = self.generate_new_video_embeddings(video_url, combined_embedding, video_duration)
        other_video_embeddings[video_url] = video_embedding

    # Conduct K-Nearest Neighbor Search with Euclidean Distance.
    knn_results = self.knn_search(combined_embedding, other_video_embeddings, 5)
    return knn_results
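The knn_search helper called at the end of that method is not shown in this article. As a rough idea of what such a search involves, here is a minimal sketch that assumes the same argument order (query embedding, a dictionary of candidate embeddings, and k) and assumes all embeddings have the same length. It is an illustration written with the standard library only, not the repository’s implementation.

```python
import math


def knn_search(query: list, candidates: dict, k: int) -> list:
    """Return the k candidates closest to `query` by Euclidean distance.

    The result is a list of (key, distance) pairs, nearest first.
    """
    distances = []
    for key, embedding in candidates.items():
        if len(embedding) != len(query):
            raise ValueError(f"embedding length mismatch for {key!r}")
        distances.append((math.dist(query, embedding), key))

    # Smaller distance means more similar, so sort ascending.
    distances.sort()
    return [(key, dist) for dist, key in distances[:k]]
```

A brute-force scan like this is fine for a handful of videos in one bucket. For a large library, a vector index would be the usual next step, though that is outside the scope of this tutorial.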

Notice how all these features relied on one singular unified API / platform, AWS. This ensures that your data is secure under one platform and makes development extremely streamlined.

Finally, after students submit their assignments, the teacher sees the completed quiz results of each student and analyzes their classroom in their dashboard.

This entire student feedback loop ultimately enriches both learning on the student side, as they are supplemented with AI content, while providing valuable insight for teachers to prepare their next lesson.

This was all made possible by video understanding models like TwelveLabs, which can truly understand our video content and automate the generation of valuable learning material that would otherwise take hours or days to produce.

Conclusion

Great, thanks for reading along! This tutorial showed you not only how powerful TwelveLabs can be in the education industry, but hopefully provided you with many learning opportunities like:

TwelveLabs AWS Integration — Explaining how TwelveLabs integrated into AWS Bedrock can be a game-changer when it comes to ease of development as well as centralized architecture.

Architecture Explanation & Design Choices — Why certain technical decisions were made either for cost-saving or performance.

Model Benchmarking — Visualizing outputs of market video-understanding models and their inference time to ensure you choose the correct model for your next project.

Check out some more in-depth resources regarding this project here:

TwelveLabs Technical Report: Google Doc

Technical Architecture Diagrams: LucidCharts

API Documentation: Google Doc

GitHub Repository: https://github.com/nathanchess/twelve-labs-education-poc

Live Application Link: https://twelve-labs-education-poc-s7sz.vercel.app/

By combining TwelveLabs with the computation and wide range of services in AWS, you unlock the power to build highly sophisticated services and software that can modernize the education industry and beyond.

Tutorial Guide

Note: Alongside a step-by-step tutorial of the software, this article delves heavily into benchmarking and cost analysis between models. This means that this article is highly comprehensive and we recommend skipping certain sections depending on your role:

Software Engineers — Those that want to learn and build the app from scratch.

Go from start to finish, but feel free to skim any “Deep Dives”

Software Architects — Those learning how to integrate TwelveLabs with AWS.

Skim through the technical code details, but read and analyze technical architecture diagrams.

Decision Makers — Those wondering if TwelveLabs is the correct solution for your business.

Jump straight to the “Deep Dive” topics on benchmarking and cost analysis.

I highly recommend all roles watch at least the application demo to get a high level overview of what we have built.

Introduction

What if teachers could instantly transform their static video lectures into interactive study guides, chapters, and practice questions to enrich student learning outside of the classroom?✨

In this tutorial we will be building a Lecture Content Analyzer platform using TwelveLabs and AWS. It will be an end-to-end system that allows teachers to transform their static video content in one simple upload into:

Targeted Practice Questions

Custom Study Guides

Time-stamped Video Chapters

Transcripts for accessibility

Pacing Recommendations

Detailed Notes | Summaries

And much more…

At its core we have TwelveLabs Pegasus and Marengo models providing deep second-to-second video understanding and comprehensive multimodal embeddings, allowing us to generate highly relevant video-accurate content and recommendation embeddings.

This multimodal understanding is built on top of AWS, with the latest AWS Bedrock TwelveLabs Partnership, allowing for easy TwelveLabs integration into AWS and access to 100+ AWS services to support your software.

Finally, you’ll be able to visualize and compare TwelveLabs Pegasus against Google Gemini and AWS Nova. We’ll dive deep into model benchmarks, visual comparisons, and detailed cost analysis for each model respectively.

Application Demo

If you’d like to see it in action, feel free to watch the video demo below or following along with the code in this Github repo before starting the tutorial!

Live Application Link: https://twelve-labs-education-poc-s7sz.vercel.app/

Learning Objectives

In this tutorial you will:

Learn how TwelveLabs seamlessly integrates with AWS services

Configure your own AWS services including DynamoDB and S3.

Understand key concepts like output inference quality, indexing time, and inference time.

Conduct detailed cost analysis between various multimodal models on the market.

Analyze technical architecture diagrams and decisions that allow multimodal models to be performant with your frontend interface.

Prerequisites

Python 3.8+: Download Python | Python.org

Node.JS 3.8+: Node.js — Download Node.js®

AWS CLI: Setting up the AWS CLI - AWS Command Line Interface

Terraform (Optional): Install Terraform | Terraform | HashiCorp Developer

TwelveLabs API Key: Authentication | TwelveLabs

TwelveLabs Index: Python SDK | TwelveLabs

AWS Access Key: Credentials - Boto3 1.40.12 documentation

Google Gemini API Key: Using Gemini API keys | Google AI for Developers

Intermediate understanding of Python and JavaScript.

Local Environment Setup

1 - Pull the repository to the local environment.

>> git2 - Provision the required AWS resources by running the Terraform script from your local repository.

* For those who want to understand the AWS integration and configure each service by hand, you can follow the manual setup instructions in the README: GitHub Repo README.

3 - Add environment variables into the frontend and backend folder.

.env.local (/frontend/)

TWELVE_LABS_API_KEY=... NEXT_PUBLIC_TWELVE_LABS_INDEX_ID=... AWS_ACCESS_KEY_ID=... AWS_SECRET_ACCESS_KEY=... AWS_S3_BUCKET_NAME=twelvelabs-lecture-content-poc AWS_REGION=us-east-1 GEMINI_API_KEY=... BLOB_READ_WRITE_TOKEN=... NEXT_PUBLIC_API_URL

.env (/api/)

TWELVE_LABS_API_KEY=... TWELVE_LABS_INDEX_ID=... AWS_ACCESS_KEY=... AWS_SECRET_ACCESS_KEY=... AWS_ACCOUNT_ID=... DYNAMODB_CONTENT_TABLE_NAME=twelvelabs-education-video-poc DYNAMODB_CONTENT_USER_NAME=twelvelabs-education-user-poc S3_BUCKET_NAME=twelvelabs-lecture-content-poc GOOGLE_API_KEY

4 - Create a virtual environment and install Python packages.

Navigate to your GitHub repository and run the following command:

>> python -mThen activate your virtual environment and install all Python requirements by running the commands:

>> .\venv\Scripts\activate

>> pip install -r

5 - Start frontend sample application using Node Package Manager (NPM).

Inside your GitHub repository, navigate to your frontend folder in console and type the following:

>> npmThen navigate to localhost:3000 to access the site.

* Ensure that NPM is installed: Downloading and installing Node.js and npm | npm Docs

6 - Start the backend by running main.py in the API folder of the GitHub repository.

Inside your GitHub repository, navigate to your API folder in the console and type the following:

Leave this running as it will host the REST APIs that connect to your AWS console and the AI models!

Phase 1: Lecture Indexing

Great! Now that you have your local environment and AWS infrastructure set up we can get started! Let’s begin with indexing the video into the various cloud providers. Here is the technical architecture for this process:

When given a video, the website immediately converts it into a blob (Binary Large Object) held in your browser’s memory, which we can then read as raw bytes. Those bytes are written out as an MP4 file that we send in API requests to Google Cloud Platform (GCP) and an AWS S3 bucket respectively.

/frontend/…/api/upload-gemini/route.js (Lines 23 - 58)

// Convert file to buffer
const bytes = await file.arrayBuffer();
const buffer = Buffer.from(bytes);

// Create a temporary file path
const tempFileName = `${userName}-${Date.now()}-${file.name}`;
const tempFilePath = join(tmpdir(), tempFileName);

// Write the buffer to a temporary file
await writeFile(tempFilePath, buffer);

try {
  // Upload file to GCP using Google Client SDK.
  const myfile = await googleClient.files.upload({
    file: tempFilePath,
    config: { mimeType: file.type || 'video/mp4' },
  });

  return NextResponse.json({
    success: true,
    geminiFileId: myfile.uri,
    fileName: file.name
  });
} finally {
  // Overwrite the temporary file with empty content once the upload attempt ends.
  await writeFile(tempFilePath, '');
}
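The same write-then-upload pattern can be sketched in Python. This is a minimal illustration rather than code from the repository: `upload_via_temp_file`, the `upload` callback, and `fake_upload` are hypothetical names standing in for whichever SDK call performs the real transfer.

```python
import os
import tempfile
from typing import Callable


def upload_via_temp_file(data: bytes, file_name: str,
                         upload: Callable[[str], str]) -> str:
    """Write bytes to a temporary file, hand its path to `upload`, then clean up.

    `upload` is a hypothetical callback (an assumption for this sketch) that
    takes a file path and returns an identifier for the uploaded file.
    """
    # Keep the original extension so an uploader could infer the file type.
    suffix = os.path.splitext(file_name)[1] or ".mp4"
    fd, temp_path = tempfile.mkstemp(suffix=suffix)
    try:
        with os.fdopen(fd, "wb") as handle:
            handle.write(data)
        return upload(temp_path)
    finally:
        # Delete the temporary copy whether or not the upload succeeded.
        os.remove(temp_path)


# Usage with a stand-in uploader that only reports the file size.
def fake_upload(path: str) -> str:
    return f"uploaded:{os.path.getsize(path)}"


result = upload_via_temp_file(b"\x00" * 1024, "lecture.mp4", fake_upload)
print(result)
```

Unlike the route handler above, this sketch deletes the temporary file instead of overwriting it with empty content; either approach keeps the video bytes from lingering on disk.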

💡 Design Choice: Why don’t we also upload to TwelveLabs? Isn’t that our main multimodal AI model?

This is thanks to the recent AWS partnership, which integrates TwelveLabs directly into AWS Bedrock. This means:

Video Storage Integration into S3: TwelveLabs models can now directly view and analyze your videos in your S3 bucket, as opposed to uploading to TwelveLabs directly.

Data Compliance & Security: Your video and software no longer need extra compliance work if you want to use TwelveLabs, because your videos can stay in your AWS infrastructure while still meeting AWS Cloud Compliance standards.

Easy Developer Integration: Instead of downloading separate SDKs for TwelveLabs and Boto3 for AWS, everything can now be handled in Boto3! This simplifies the development process and ensures a more streamlined code base in production.

Read more about our recent partnership here: TwelveLabs x AWS Amazon Bedrock - Twelve Labs!

By reducing the number of cloud providers we have to upload the video to, indexing and upload times drop substantially, giving users a smoother experience. In the code below, we use Promise.all to upload to Google Cloud Platform and AWS S3 concurrently.

/frontend/…/dashboard/courses/page.js

// Upload asynchronously to all cloud providers.
const [s3UploadResult, geminiUploadResult] = await Promise.all([
  uploadToS3(),
  uploadToGemini(),
]);

💡 Design Choice: Why did we upload to Google as well, instead of using a single video store like an AWS S3 bucket? Wouldn’t that save money?

The answer is that providers like Google Gemini require files over 20MB to be uploaded to their own cloud storage, which incurs additional charges. Prior to the AWS partnership, you also had to store your videos in a TwelveLabs index, but with this new partnership, your video data can be centralized on a single AWS infrastructure!

PSA: Though I will say the native TwelveLabs platform also offers powerful features like embedding visualization.

Lastly, we will store metadata regarding our video inside an AWS DynamoDB table for easy retrieval later.

async def upload_video(video_params: VideoRequest = Depends(get_video_id_from_request)):
    try:
        db_handler = DBHandler()
        db_handler.upload_video_ids(
            twelve_labs_video_id=video_params.twelve_labs_video_id,
            s3_key=video_params.s3_key,
            gemini_file_id=video_params.gemini_file_id,
        )
    except Exception as e:
        return DefaultResponse(status='error', message=str(e), status_code=500)
    return DefaultResponse(status='success', message='Video uploaded successfully', status_code=200)

Feel free to look into the helpers module of the API folder if you’d like to learn more; given the volume of code, it is not required for this tutorial. Here is the upload_video_ids() method used to upload the keys into your DYNAMODB_CONTENT_TABLE_NAME table.

def upload_video_ids(self, twelve_labs_video_id: str, s3_key: str, gemini_file_id: str):
    table_name = os.getenv('DYNAMODB_CONTENT_TABLE_NAME')
    if not table_name:
        logger.error("DYNAMODB_CONTENT_TABLE_NAME environment variable not set")
        raise Exception("DYNAMODB_CONTENT_TABLE_NAME environment variable not set")

    table = self.dynamodb.Table(table_name)
    logger.info("DynamoDB table reference obtained")

    # Store the creation time as a whole-second Unix timestamp.
    item = {
        'video_id': twelve_labs_video_id,
        's3_key': s3_key,
        'gemini_file_id': gemini_file_id,
        'created_at': boto3.dynamodb.types.Decimal(str(int(time.time()))),
    }
    logger.info(f"Preparing to upload item: {item}")

    response = table.put_item(Item=item)
    logger.info(f"DynamoDB put_item response: {response}")
    return response

Now that the videos are uploaded to their respective providers, we are ready to pass them on to the AI inference stage to generate content for our students and gain improvement insights for our educators!

Phase 2: Lecture Content AI Inference

Now moving on to the fun part, AI inference! Before reading code snippets for the TwelveLabs Pegasus API calls, we must first understand the technical architecture behind the calls, as using the right API architecture can be the difference between a <30 second inference time and a 5+ minute inference time.

As seen above, we use Asynchronous Server Gateway Interface (ASGI) FastAPI endpoints to generate content for each section of the lecture analysis. This is a minor detail with a major impact on your user experience.

💡Design Choice: What even is an ASGI and why is that important in our backend API services?

ASGI, the successor to the Web Server Gateway Interface (WSGI), is a Python standard interface between web servers and applications that is built around asynchronous calls, allowing a server to handle multiple connections and events simultaneously. It does this by switching to other tasks while one is waiting (“loading”).

Think of this as a chef cooking spaghetti! While his noodles are boiling, the chef won’t just stand there and wait until the noodles are complete before starting the meatballs. Like any modern restaurant with lots of customers, they need to work fast, so they may start the meatballs to multitask.

This same concept is applied to our API calls. For example, while Google Gemini generates the summary, we can also request the TwelveLabs and AWS Nova summaries, and handle whichever finishes first.

This allows us to generate all the following data concurrently, without having to wait for each API call to each provider to finish (reducing inference time from ~5 minutes to <30 seconds):

Gist (Title, Recommendation Tags, Topics)

Timeline Chapters

Pacing Recommendations

Key Takeaways

Quiz Questions

Student Engagement Opportunities

Summary

Transcript
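To make the concurrency benefit concrete, here is a minimal sketch using Python's `asyncio`. The `fake_inference` coroutine is a hypothetical stand-in for a network-bound model call (it only sleeps), so the delays are assumptions and not measured provider latencies.

```python
import asyncio
import time


async def fake_inference(provider: str, delay: float) -> str:
    """Stand-in for a network-bound model call (hypothetical, no real API)."""
    await asyncio.sleep(delay)  # the event loop runs other tasks meanwhile
    return f"{provider} summary"


async def generate_all() -> list:
    # Start all three calls at once and wait for every result.
    return await asyncio.gather(
        fake_inference("twelvelabs", 0.1),
        fake_inference("gemini", 0.1),
        fake_inference("nova", 0.1),
    )


start = time.perf_counter()
results = asyncio.run(generate_all())
elapsed = time.perf_counter() - start

print(results)
print(f"elapsed: {elapsed:.2f}s")  # close to the slowest call, not the sum
```

Because the three calls overlap, total wall-clock time tracks the slowest call rather than the sum of all three, which is the effect the endpoints in this project rely on.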

In order to keep these API endpoints organized, we create an abstract base class that each provider implements, as seen below:

/api/providers/llm.py (Lines 1 - 31)

from abc import ABC, abstractmethod

class LLMProvider(ABC):

    @abstractmethod
    def __init__(self, *args, **kwargs):
        pass

    @abstractmethod
    def generate_chapters(self):
        pass

    @abstractmethod
    def generate_key_takeaways(self):
        pass

    @abstractmethod
    def generate_pacing_recommendations(self):
        pass

    @abstractmethod
    def generate_quiz_questions(self):
        pass

    @abstractmethod
    def generate_engagement(self):
        pass

    @abstractmethod
    def generate_gist(self):
        pass

Below you can see how the file structure is organized and each provider using this abstract class.

The API endpoints in our FastAPI server can then call the appropriate class and method with ease.

By separating out each data point into its own REST API endpoint, we are able to individually measure inference time, which is how long it takes for the AI to return an output. You can also see how this class design makes it “safe” to add more providers and easy for others to check which methods are required.
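The "safety" of this design can be sketched as follows. This is an illustrative example, not the repository's code: the `LLMProvider` here is trimmed to a single method, and `EchoProvider`, `IncompleteProvider`, `PROVIDERS`, and `get_provider` are hypothetical names.

```python
from abc import ABC, abstractmethod


class LLMProvider(ABC):
    """Trimmed-down version of the interface with a single required method."""

    @abstractmethod
    def generate_gist(self) -> dict:
        ...


class EchoProvider(LLMProvider):
    """Hypothetical provider used only for illustration."""

    def __init__(self, name: str):
        self.name = name

    def generate_gist(self) -> dict:
        return {"provider": self.name, "title": "placeholder title"}


class IncompleteProvider(LLMProvider):
    """Forgets to implement generate_gist, so it cannot be instantiated."""


# Registry mapping a provider name from the request to a provider instance.
PROVIDERS = {
    "twelvelabs": EchoProvider("twelvelabs"),
    "gemini": EchoProvider("gemini"),
}


def get_provider(name: str) -> LLMProvider:
    try:
        return PROVIDERS[name]
    except KeyError:
        raise ValueError(f"Unknown provider: {name}") from None


gist = get_provider("gemini").generate_gist()
print(gist)

try:
    IncompleteProvider()
    instantiated = True
except TypeError:
    instantiated = False  # ABC refuses classes with missing abstract methods
print(instantiated)
```

A provider that forgets a required method fails at instantiation time rather than midway through a request, which is what makes adding new providers low-risk.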

Deep Dive Topic: Benchmarking & Cost Analysis

Before we show the results it’s important to understand how we are benchmarking the models.

TopP, topK, temperature, and other custom model inference settings are held at default.

Default settings are generally where models perform optimally for the “general use case”.

Learn more about each setting: Temperature, Top-P, Top-K: A Comprehensive Guide | MoonlightDevs - Tech Blog (Credit MoonlightDevs)

All models will be fed the same prompt for each content section to ensure fair input.

Prompts can be viewed in the /api/helpers/prompts.py file.

Models that support structured output will be assisted with Pydantic.

This is a common developer tool for validating data against a schema, which helps ensure the output matches the expected JSON structure. A lack of structured-output support is generally a flaw in provider model design, as it makes it difficult to produce complex objects.

Feel free to read more about how Pydantic works here: Welcome to Pydantic - Pydantic

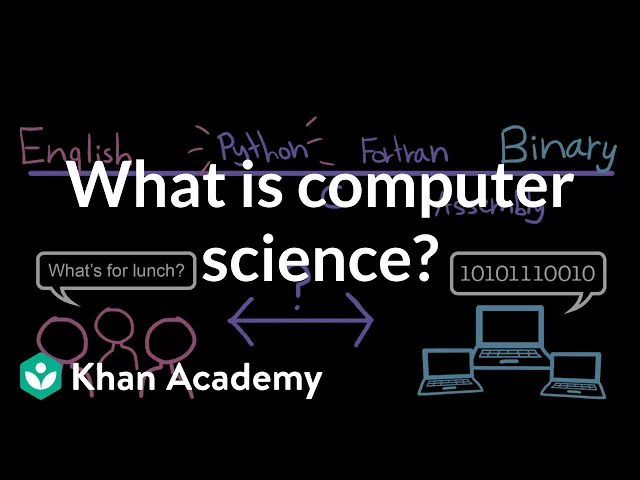

All models will be passed the same video: https://www.youtube.com/watch?v=5LeZflr8Zfs.

With that out of the way, let’s see the results for output inference quality! This is the primary metric that often dictates the formatting and accuracy of content generated!

As seen above, output quality varies between the models. Of course, this “quality” measure depends fully on your use case, but based on the YouTube video, here are the takeaways:

Google Gemini: Highly accurate, but not very precise. Fine-tuning and prompt engineering are required to ensure that it doesn’t list every detail about the video. It over-listed recommendation tags, which can be risky for a recommendation system that relies on tags.

AWS Nova: Very accurate and precise, but seems to be lacking when it comes to structured output. At the time of writing, AWS Nova does not support structured outputs with Pydantic, leading to workarounds such as adjusting the temperature and adding formatting requirements to the prompt.

Read more: Require structured output - Amazon Nova

TwelveLabs: Mixes the right amount of accuracy and precision while having highly accurate structured output. The number of topics could have been more detailed, but that would come down to a matter of prompting as we said “at least 1 topic”.
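When a model cannot guarantee structured output, the response has to be parsed and checked by hand. The sketch below shows one way to do that with only the standard library; the field names in `REQUIRED_GIST_FIELDS` and the `parse_gist` helper are assumptions made for illustration, not the schema or code used in this project.

```python
import json
from typing import Any, Dict

# Hypothetical schema for a "gist" response: field name -> expected type.
REQUIRED_GIST_FIELDS = {"title": str, "hashtags": list, "topics": list}


def parse_gist(raw_text: str) -> Dict[str, Any]:
    """Extract and validate a JSON object embedded in free-form model output."""
    start, end = raw_text.find("{"), raw_text.rfind("}")
    if start == -1 or end <= start:
        raise ValueError("No JSON object found in model output")
    data = json.loads(raw_text[start:end + 1])
    for field, expected_type in REQUIRED_GIST_FIELDS.items():
        if not isinstance(data.get(field), expected_type):
            raise ValueError(
                f"Field '{field}' is missing or not a {expected_type.__name__}"
            )
    return data


raw = (
    "Here is the result:\n"
    '{"title": "Intro to Graphs", "hashtags": ["graphs"], "topics": ["BFS"]}'
)
gist = parse_gist(raw)
print(gist["title"])
```

Schema-aware tooling such as Pydantic replaces this kind of manual checking when the provider supports structured output.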

Now onto something less subjective, the inference time of each model.

As seen above, the TwelveLabs and Google models had extremely similar generation times. However, there are some key distinctions to point out.

TwelveLabs Cached Data: You may notice that the transcript and gist are near instant for TwelveLabs! This is because we recognize common info like video titles will likely always be needed, so we cache that data inside of our platform allowing near-instant retrieval time.

Long-Form vs. Short-Form Text Content:

Gemini is faster for generating long-form text content. We can see it wins in lengthy JSON structured content like the Chapters and Key Takeaways that require timestamps.

TwelveLabs is faster when it comes to short-form engagement like bullet points and summary.

Finally, for the most important part, cost analysis of each model! To do this in a fair manner and easily, let’s assume we have a 1 hour video and reference each provider’s documentation respectively.

* Inference API Calls: 8 (One for each category)

TwelveLabs: $2.79

Methodology: TwelveLabs | Pricing Calculator

Video Upload Hours = 1

Disabled Embedding API — Did not use embedding for other models.

Analyze API Usage = 8 — One for each category of data.

Search API Usage = 0

Google Gemini: $10.62 (without additional GCP infrastructure costs)

Google Gemini relies on a pay-by-token policy, where the amount you pay depends on the size of input and output tokens.

Documentation: Understand and count tokens | Gemini API | Google AI for Developers

295 tokens per second of a video.

$1.25 per 1M tokens.

Tokens in a 1 hour video: 3,600 seconds * 295 tokens/second = 1,062,000 tokens

Calculations: (1,062,000 / 1,000,000) * 1.25 * 8 = $10.62

AWS Nova: $0.22 (without additional AWS infrastructure costs)

Token Documentation: Video understanding - Amazon Nova

Explicitly estimates 1 hour video to be: 276,480 tokens.

Pricing Documentation: Pricing

AWS Nova Pro model = $0.0008 per 1,000 input tokens

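Calculations: (276,480 / 1,000) * 0.0008 = $0.22. Note that, unlike the Gemini estimate, this figure covers a single call; applying the same 8-call multiplier gives roughly $1.77.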
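The token-based estimates above can be reproduced with a few lines of Python. This is a sketch that counts input tokens only, as the text does, and uses the prices and token counts quoted in this section; `per_token_cost` is a hypothetical helper name.

```python
def per_token_cost(tokens: int, price_per_million: float, calls: int = 1) -> float:
    """Input-token cost in USD for `calls` requests over the same video."""
    return tokens / 1_000_000 * price_per_million * calls


# Figures quoted in this section.
gemini_tokens = 3600 * 295               # 1 hour of video at 295 tokens/second
gemini_cost = per_token_cost(gemini_tokens, 1.25, calls=8)

nova_tokens = 276_480                    # documented estimate for a 1 hour video
nova_price_per_million = 0.0008 * 1000   # $0.0008 per 1,000 input tokens
nova_single_call = per_token_cost(nova_tokens, nova_price_per_million)
nova_eight_calls = per_token_cost(nova_tokens, nova_price_per_million, calls=8)

print(f"Gemini, 8 calls: ${gemini_cost:.2f}")
print(f"Nova, 1 call:    ${nova_single_call:.2f}")
print(f"Nova, 8 calls:   ${nova_eight_calls:.2f}")
```

Swapping in your own video length, call count, or current pricing makes it easy to rerun the comparison as provider rates change.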

Here is a table with all of these insights side-by-side, so that you can make the best informed decision:

| Metric | TwelveLabs | Google Gemini | AWS Nova |
| --- | --- | --- | --- |
| Output Inference Quality | ✅ Balanced & Structured: A good mix of accuracy and precision. | 🎯 Accurate but Imprecise: Highly accurate but tends to over-list details and tags. | ⚙️ Accurate but Unstructured: Very accurate and precise, but struggles with formatting. |
| Structured Output | ✅ Yes (Reliable): Natively supports and reliably returns structured data. | ✅ Yes (Reliable): Natively supports and reliably returns structured data. | ❌ No (Unreliable): Does not guarantee structured output, requiring extra development effort to parse responses. |
| Inference Time | Fast (especially cached data): Excels at short-form content and instant retrieval of common data. | Fast (long-form content): Similar overall speed but has an edge in generating lengthy, complex content. | Average: At times can be fast, but generally takes an additional 10-20 seconds compared to other models. |
| Caching Mechanism | ✅ Yes: Pre-processes and caches transcripts and gists upon upload for instant access. | ❌ No: Each query re-processes the video content. | ❌ No: Each query re-processes the video content. |
| Cost | 💸💸 $2.79 | 💸💸💸 $10.62 | 💵 $0.22 |
| Pricing Model | Per-Hour & Per-Call: Charges for video length once, then a smaller fee for each analysis call. | Pay-Per-Token: Charges for the total tokens in the video for each query. | Pay-Per-Token: Charges for the total tokens in the video for each query. |
| Additional Costs | (Included in platform) | GCP Infrastructure Costs | AWS Infrastructure Costs |

Of course, all models will improve and these metrics can change, but with this platform you can experiment with your own videos and see which video-understanding model works best for your use case!

Phase 3: Student Feedback Loop + Classroom Insights

Now onto the final section, where we enable the student feedback loop so teachers can understand their classroom, and see how TwelveLabs integrates into the AWS technology stack.

As you can see above, students now get access to the TwelveLabs-generated data, which includes the practice questions, chapter timeline, and course summary. With TwelveLabs Pegasus text generation, creating supplemental study material takes seconds rather than hours, and our AWS infrastructure helps deliver this content securely.

Once students complete these practice questions and watch the video, students receive a report detailing:

Quiz Performance by Chapter — Detailed overview of what they missed and the specific topic.

Personalized Study Recommendations — Targeting exactly what students missed.

Recommended External Video Content — Leveraging TwelveLabs Marengo embeddings for similarity comparison with videos in S3 database.

Mastery Score — Score given by the student’s performance by topic.

With that in mind, let’s see how this works in our technical architecture and examine exactly why AWS integration plays such a key role in full end-to-end applications.

As seen in the technical architecture, we incorporate Claude 3.5 Sonnet from the AWS Bedrock service. Without installing any external packages or utilizing external cloud providers, we have access to text-based reasoning models that can directly interact with our data in AWS DynamoDB.

Furthermore, to enhance student learning, we integrated YouTube video recommendations based on vector embeddings. Using the TwelveLabs Marengo model, we generated context-rich vector embeddings and ran a K-nearest neighbor search based on Euclidean distance.

/api/helpers/reasoning.py (lines 262 - 289)

def fetch_related_videos(self, video_id: str):
    # Fetch original video from S3 Bucket.
    presigned_urls = DBHandler().fetch_s3_presigned_urls()

    # Fetch embedding stored in Marengo Model
    video_object = self.twelvelabs_client.index.video.retrieve(
        index_id=os.getenv('TWELVE_LABS_INDEX_ID'),
        id=video_id,
        embedding_option=['visual-text'],
    )
    video_duration = video_object.system_metadata.duration
    video_embedding_segments = video_object.embedding.video_embedding.segments

    combined_embedding = np.array([])
    for segment in video_embedding_segments:
        combined_embedding = np.concatenate([combined_embedding, segment.embeddings_float])

    # Generate embeddings of other YouTube videos.
    other_video_embeddings = {}
    for video_url in presigned_urls:
        video_embedding = self.generate_new_video_embeddings(video_url, combined_embedding, video_duration)
        other_video_embeddings[video_url] = video_embedding

    # Conduct K-Nearest Neighbor Search with Euclidean Distance.
    knn_results = self.knn_search(combined_embedding, other_video_embeddings, 5)
    return knn_results
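The `knn_search` helper called above is not shown in this tutorial. As a rough sketch of the idea, assuming every embedding has the same length, a Euclidean K-nearest neighbor search can be written with the standard library alone. The function below is a hypothetical standalone version, not the repository's implementation, and the toy vectors are made up.

```python
import math
from typing import Dict, List, Sequence, Tuple


def knn_search(query: Sequence[float],
               candidates: Dict[str, Sequence[float]],
               k: int) -> List[Tuple[str, float]]:
    """Return the k candidates closest to `query` by Euclidean distance."""
    distances = [
        (name, math.dist(query, vector))  # math.dist requires equal lengths
        for name, vector in candidates.items()
    ]
    distances.sort(key=lambda pair: pair[1])
    return distances[:k]


# Toy 3-dimensional embeddings; real embeddings have far more dimensions.
query = [0.0, 0.0, 0.0]
videos = {
    "video_a": [1.0, 0.0, 0.0],
    "video_b": [0.0, 3.0, 4.0],
    "video_c": [0.5, 0.0, 0.0],
}
nearest = knn_search(query, videos, k=2)
print(nearest)  # [('video_c', 0.5), ('video_a', 1.0)]
```

A smaller distance means a more similar video, so the first entries of the result are the strongest recommendations.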

Notice how all these features rely on a single unified platform, AWS. This keeps your data secure under one platform and makes development extremely streamlined.

Finally, after students submit their assignments, the teacher sees the completed quiz results of each student and analyzes their classroom in their dashboard.

This entire student feedback loop ultimately enriches both learning on the student side, as they are supplemented with AI content, while providing valuable insight for teachers to prepare their next lesson.

This is all made possible by video understanding models like those from TwelveLabs, which understand the video content well enough to automate the generation of material that would otherwise have taken hours or days to produce.

Conclusion

Great, thanks for reading along! This tutorial showed you not only how powerful TwelveLabs can be in the education industry, but hopefully provided you with many learning opportunities like:

TwelveLabs AWS Integration — Explaining how TwelveLabs integrated into AWS Bedrock can be a game-changer when it comes to ease of development as well as centralized architecture.

Architecture Explanation & Design Choices — Why certain technical decisions were made either for cost-saving or performance.

Model Benchmarking — Visualizing outputs of market video-understanding models and their inference time to ensure you choose the correct model for your next project.

Check out some more in-depth resources regarding this project here:

TwelveLabs Technical Report: Google Doc

Technical Architecture Diagrams: LucidCharts

API Documentation: Google Doc

GitHub Repository: https://github.com/nathanchess/twelve-labs-education-poc

Live Application Link: https://twelve-labs-education-poc-s7sz.vercel.app/

By combining TwelveLabs with the computation and wide range of services in AWS, you unlock the power to build highly sophisticated services and software that can modernize the education industry and beyond.

Google Gemini API Key: Using Gemini API keys | Google AI for Developers

Intermediate understanding of Python and JavaScript.

Local Environment Setup

1 - Pull the repository to the local environment.

>> git2 - Provision the required AWS resources by running the Terraform script from your local repository.

* For those who want to understand the AWS integration and configure each service by hand, you can follow the manual setup instructions in the README: GitHub Repo README.

3 - Add environment variables into the frontend and backend folder.

.env.local (/frontend/)

TWELVE_LABS_API_KEY=... NEXT_PUBLIC_TWELVE_LABS_INDEX_ID=... AWS_ACCESS_KEY_ID=... AWS_SECRET_ACCESS_KEY=... AWS_S3_BUCKET_NAME=twelvelabs-lecture-content-poc AWS_REGION=us-east-1 GEMINI_API_KEY=... BLOB_READ_WRITE_TOKEN=... NEXT_PUBLIC_API_URL

.env (/api/)

TWELVE_LABS_API_KEY=... TWELVE_LABS_INDEX_ID=... AWS_ACCESS_KEY=... AWS_SECRET_ACCESS_KEY=... AWS_ACCOUNT_ID=... DYNAMODB_CONTENT_TABLE_NAME=twelvelabs-education-video-poc DYNAMODB_CONTENT_USER_NAME=twelvelabs-education-user-poc S3_BUCKET_NAME=twelvelabs-lecture-content-poc GOOGLE_API_KEY

4 - Create a virtual environment and install Python packages.

Navigate to your GitHub repository and run the following command:

>> python -mThen activate your virtual environment and install all Python requirements by running the commands:

>> .\venv\Scripts\activate

>> pip install -r

5 - Start frontend sample application using Node Package Manager (NPM).

Inside your GitHub repository, navigate to your frontend folder in console and type the following:

>> npmThen navigate to localhost:3000 to access the site.

* Ensure that NPM is installed: Downloading and installing Node.js and npm | npm Docs

6 - Start the backend by running main.py in the API folder of the GitHub repository.

Inside your GitHub repository, navigate to your API folder in the console and type the following:

Leave this running as it will host the REST APIs that connect to your AWS console and the AI models!

Phase 1: Lecture Indexing

Great! Now that you have your local environment and AWS infrastructure set up we can get started! Let’s begin with indexing the video into the various cloud providers. Here is the technical architecture for this process:

The website, when given a video, immediately converts it to a video blob (Binary Large Object) living inside your browser’s memory that we can then convert into bytes. These bytes act as a universal language, understood by all computers, that can be transformed into an MP4 file that we send with an API request to Google Cloud Provider and AWS S3 buckets respectively.

/frontend/…/api/upload-gemini/route.js (Lines 23 - 58)

// Convert file to buffer const bytes = await file.arrayBuffer(); const buffer = Buffer.from(bytes); // Create a temporary file path const tempFileName = `${userName}-${Date.now()}-${file.name}`; const tempFilePath = join(tmpdir(), tempFileName); // Write the buffer to a temporary file await writeFile(tempFilePath, buffer); try { // Upload file to GCP using Google Client SDK. const myfile = await googleClient.files.upload({ file: tempFilePath, config: { mimeType: file.type || 'video/mp4' }, }); return NextResponse.json({ success: true, geminiFileId: myfile.uri, fileName: file.name }); } finally { await writeFile(tempFilePath, ''); }

💡 Design Choice: Why don’t we also upload to TwelveLabs? Isn’t that our main multimodal AI model?

This is thanks to the recent AWS partnership, which integrates TwelveLabs directly into AWS Bedrock. This means:

Video Storage Integration into S3: TwelveLabs models can now directly view and analyze your videos in your S3 bucket, as opposed to uploading to TwelveLabs directly.

Data Compliance & Security: Your video and software no longer need extra compliance if you want to use TwelveLabs, as now your videos can stay in your AWS infrastructure while still meeting AW Cloud Compliance standards.

Easy Developer Integration: Instead of downloading separate SDKs for TwelveLabs and Boto3 for AWS, everything can now be handled in Boto3! This simplifies the development process and ensures a more streamlined code base in production.

Read more about our recent partnership here: TwelveLabs x AWS Amazon Bedrock - Twelve Labs!

By reducing the number of cloud providers we have to upload this video to, users have a seamless user experience as indexing and video upload times are drastically reduced! In the code below, we make use of Promise.all to concurrently upload to Google Cloud Provider and AWS S3.

/frontend/…/dashboard/courses/page.js

// Upload asynchronously to all cloud providers. const [s3UploadResult, geminiUploadResult] = await Promise.all([ uploadToS3(), uploadToGemini(), ]);

💡Design Choice: Why did we upload to Google as well instead of a single video database like an AWS S3 bucket? Wouldn’t it save money?

The answer lies in the simple fact that providers like Google Gemini require files over 20MB to be uploaded to their cloud provider, Google Cloud Provider. This incurs additional charges. Prior to the AWS partnership you also had to store your videos on a TwelveLabs index, but with this new partnership, your video data can now all be centralized onto a single AWS infrastructure!

PSA: Though I will say the native TwelveLabs platform also offers powerful features like embedding visualization.

Lastly, we will store metadata regarding our video inside an AWS DynamoDB table for easy retrieval later.

async def upload_video(video_params: VideoRequest = Depends(get_video_id_from_request)): try: db_handler = DBHandler() db_handler.upload_video_ids(twelve_labs_video_id=video_params.twelve_labs_video_id, s3_key=video_params.s3_key, gemini_file_id=video_params.gemini_file_id) except Exception as e: return DefaultResponse(status='error', message=str(e), status_code=500) return DefaultResponse(status='success', message='Video uploaded successfully', status_code=200)

Feel free to take a look into the helpers module of the API folder if you’d like to learn more, however it is definitely not required due to the volume of code for this tutorial. Here is the upload_video_ids() method used to upload the keys into your DYNAMODB_CONTENT_TABLE_NAME table.

def upload_video_ids(self, twelve_labs_video_id: str, s3_key: str, gemini_file_id: str): table_name = os.getenv('DYNAMODB_CONTENT_TABLE_NAME') if not table_name: logger.error("DYNAMODB_TABLE_NAME environment variable not set") raise Exception("DYNAMODB_TABLE_NAME environment variable not set") table = self.dynamodb.Table(table_name) logger.info("DynamoDB table reference obtained") item = { 'video_id': twelve_labs_video_id, 's3_key': s3_key, 'gemini_file_id': gemini_file_id, 'created_at': boto3.dynamodb.types.Decimal(str(int(time.time()))), } logger.info(f"Preparing to upload item: {item}") response = table.put_item(Item=item) logger.info(f"DynamoDB put_item response: {response}") return response

So now that we have these videos uploaded to their respective provider’s, we are ready to pass them onto the AI inference stage to generate content for our students and gain improvement insights for our educators!

Phase 2: Lecture Content AI Inference

Now moving on to the fun part, AI inference! Before reading code snippets for the TwelveLab’s Pegasus API calls, we must first understand the technical architecture behind the calls, as using the right API architecture can be the difference between a <30 second inference time and 5+ minute inference time.

As seen above we use Asynchronous Server Gateway Interface (ASGI) FastAPI endpoints to generate content for each different section of the lecture analysis. This is a minor detail, with a major impact towards your user experience.

💡Design Choice: What even is an ASGI and why is that important in our backend API services?

ASGI, also known as the successor to Web Server Gateway Interface (WSGI), is a server architecture built around asynchronous calls and allows for multiple connections and events simultaneously. It does this by switching to alternative tasks while others are “loading”.

Think of this as a chef cooking spaghetti! While his noodles are boiling, the chef won’t just stand there and wait until the noodles are complete before starting the meatballs. Like any modern restaurant with lots of customers, they need to work fast, so they may start the meatballs to multitask.

This same concept is applied to our API calls.For example, while Google Gemini loads the summary, we can also load TwelveLab’s and AWS Nova’s summary, and return to whichever finishes first.

This allows us to generate all the following data concurrently, without having to wait for each API call to each provider to finish (reducing inference time from ~5 minutes to <30 seconds):

Gist (Title, Recommendation Tags, Topics)

Timeline Chapters

Pacing Recommendations

Key Takeaways

Quiz Questions

Student Engagement Opportunities

Summary

Transcript

In order to keep these API endpoints organized, we create a factory class for our different providers as seen below:

/api/providers/llm.py (Lines 1 - 31)

from abc import ABC, abstractmethod class LLMProvider(ABC): @abstractmethod def __init__(self, *args, **kwargs): pass @abstractmethod def generate_chapters(self): pass @abstractmethod def generate_key_takeaways(self): pass @abstractmethod def generate_pacing_recommendations(self): pass @abstractmethod def generate_quiz_questions(self): pass @abstractmethod def generate_engagement(self): pass @abstractmethod def generate_gist(self): pass

Below you can see how the file structure is organized and each provider using this abstract class.

The API endpoints in our FastAPI server can then call the appropriate class and method with ease.
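As a rough illustration of that lookup, the sketch below pairs a trimmed-down version of the abstract class (two methods only) with a name-to-class registry. `EchoProvider`, `IncompleteProvider`, `PROVIDERS`, and `call_provider` are hypothetical names invented for this example, not part of the repository.

```python
from abc import ABC, abstractmethod


class LLMProvider(ABC):
    """Trimmed-down version of the abstract class, for illustration only."""

    @abstractmethod
    def generate_gist(self) -> dict: ...

    @abstractmethod
    def generate_chapters(self) -> list: ...


class EchoProvider(LLMProvider):
    """Hypothetical provider that returns canned data instead of calling a model."""

    def __init__(self, video_id: str):
        self.video_id = video_id

    def generate_gist(self) -> dict:
        return {"title": f"Gist for {self.video_id}"}

    def generate_chapters(self) -> list:
        return []


class IncompleteProvider(LLMProvider):
    """Forgets generate_chapters, so Python refuses to instantiate it."""

    def generate_gist(self) -> dict:
        return {}


# Hypothetical registry mapping a provider name to its class.
PROVIDERS = {"echo": EchoProvider}


def call_provider(provider_name: str, method_name: str, video_id: str):
    """Look up the provider class, instantiate it, and call the requested method."""
    provider = PROVIDERS[provider_name](video_id)
    return getattr(provider, method_name)()


if __name__ == "__main__":
    print(call_provider("echo", "generate_gist", "lecture-01"))
    try:
        IncompleteProvider()
    except TypeError as error:
        print(f"Rejected: {error}")
```

The `TypeError` raised for `IncompleteProvider` is what makes a missing method visible at instantiation time rather than at the first request that needs it.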

By separating each data point into its own REST API endpoint, we can measure inference time individually, an important metric that tells us how long the AI takes to return an output. This class design also makes it “safe” to add more providers, because anyone can easily check which methods are required.
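One simple way to capture a per-call inference time is to wrap each provider method in a timer. This is a minimal sketch under that assumption. `timed` and `slow_summary` are hypothetical helpers, and the project may measure time differently (for example, on the frontend).

```python
import time
from typing import Any, Callable


def timed(fn: Callable[..., Any], *args, **kwargs) -> tuple[Any, float]:
    """Call fn and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, time.perf_counter() - start


def slow_summary() -> str:
    """Hypothetical stand-in for a provider method; the sleep simulates model latency."""
    time.sleep(0.05)
    return "summary text"


if __name__ == "__main__":
    summary, seconds = timed(slow_summary)
    print(f"summary generated in {seconds:.3f}s")
```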

Deep Dive Topic: Benchmarking & Cost Analysis

Before we show the results it’s important to understand how we are benchmarking the models.

TopP, topK, temperature, and other custom model inference settings are held at default.

Default settings are generally where models perform optimally for the “general use case”.

Learn more about each setting: Temperature, Top-P, Top-K: A Comprehensive Guide | MoonlightDevs - Tech Blog (Credit MoonlightDevs)

All models will be fed the same prompt for each content section to ensure fair input.

Prompts can be viewed in the /api/helpers/prompts.py file.

Models that support structured output will be assisted with Pydantic.

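Pydantic is a widely used Python library for defining and validating data schemas, which lets us enforce the JSON structure of model outputs. When a provider does not support structured output, it is much harder to produce complex objects reliably, which we consider a flaw in that provider’s model design.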

Feel free to read more about how Pydantic works here: Welcome to Pydantic - Pydantic

All models will be passed the same video: https://www.youtube.com/watch?v=5LeZflr8Zfs.

With that out of the way, let’s see the results for output inference quality! This is the primary metric that often dictates the formatting and accuracy of content generated!

As seen above, output quality varies between the models. Of course, this “quality” measure depends entirely on your use case, but based on the YouTube video, here are the takeaways:

Google Gemini: Highly accurate, but not very precise. Fine-tuning and prompt engineering are required to keep it from listing every detail about the video. It over-listed recommendation tags, which can be dangerous for a recommendation system that relies on tags.

AWS Nova: Very accurate and precise, but lacking when it comes to structured output. At the time of writing, AWS Nova does not support structured outputs with Pydantic, which forces workarounds such as adjusting the temperature and adding format requirements to the prompt.

Read more: Require structured output - Amazon Nova

TwelveLabs: Mixes the right amount of accuracy and precision while having highly accurate structured output. The number of topics could have been more detailed, but that would come down to a matter of prompting as we said “at least 1 topic”.
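For a provider without structured output support, the response has to be parsed and validated by hand. The sketch below shows one way to do that with the standard library only. The `Gist` dataclass, its `title` and `topics` fields, and the `parse_gist` helper are assumptions made for illustration and do not mirror the project's actual schema.

```python
import json
from dataclasses import dataclass


@dataclass
class Gist:
    """Hypothetical target schema for a gist response."""
    title: str
    topics: list[str]


def parse_gist(raw_text: str) -> Gist:
    """Extract the first JSON object in free-form model text that fits the schema."""
    decoder = json.JSONDecoder()
    for start, char in enumerate(raw_text):
        if char != "{":
            continue
        try:
            # raw_decode parses one JSON value starting at `start` and ignores the rest.
            payload, _ = decoder.raw_decode(raw_text, start)
        except json.JSONDecodeError:
            continue  # not valid JSON from this brace; try the next one
        title = payload.get("title")
        topics = payload.get("topics")
        has_valid_topics = (
            isinstance(topics, list)
            and len(topics) >= 1
            and all(isinstance(topic, str) for topic in topics)
        )
        if isinstance(title, str) and has_valid_topics:
            return Gist(title=title, topics=topics)
    raise ValueError("no valid gist object found in model response")


if __name__ == "__main__":
    reply = 'Here is the result: {"title": "Intro to Graphs", "topics": ["BFS"]} Hope this helps!'
    print(parse_gist(reply))
```

With native structured output, this hand-written extraction and validation step is handled by the provider and the schema library instead.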

Now onto something less subjective, the inference time of each model.

As seen above, the TwelveLabs and Google models had extremely similar generation times. However, there are some key distinctions to point out.

TwelveLabs Cached Data: You may notice that the transcript and gist are near instant for TwelveLabs! This is because we recognize common info like video titles will likely always be needed, so we cache that data inside of our platform allowing near-instant retrieval time.

Long-Form vs. Short-Form Text Content:

Gemini is faster for generating long-form text content. We can see it wins in lengthy JSON structured content like the Chapters and Key Takeaways that require timestamps.

TwelveLabs is faster when it comes to short-form engagement like bullet points and summary.

Finally, for the most important part: the cost analysis of each model! To keep the comparison simple and fair, let’s assume a 1-hour video and reference each provider’s pricing documentation.

* Inference API Calls: 8 (One for each category)

TwelveLabs: $2.79

Methodology: TwelveLabs | Pricing Calculator

Video Upload Hours = 1

Disabled Embedding API — Did not use embedding for other models.

Analyze API Usage = 8 — One for each category of data.

Search API Usage = 0

Google Gemini: $10.62 (without additional GCP infrastructure costs)

Google Gemini relies on a pay-by-token policy, where the amount you pay depends on the size of input and output tokens.

Documentation: Understand and count tokens | Gemini API | Google AI for Developers

295 tokens per second of a video.

$1.25 per 1M tokens.

Tokens in a 1-hour video: 3,600 seconds * 295 tokens/second = 1,062,000

Calculations: (1,062,000 / 1,000,000) * 1.25 * 8 = $10.62

AWS Nova: $0.22 (without additional AWS infrastructure costs)

Token Documentation: Video understanding - Amazon Nova

The documentation explicitly estimates a 1-hour video at 276,480 tokens.

Pricing Documentation: Pricing

AWS Nova Pro model = $0.0008 per 1,000 input tokens

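Calculations: (276,480 / 1,000) * 0.0008 = $0.22 per call (input tokens only). Note that, unlike the Gemini figure, this is not multiplied by the 8 inference calls; across 8 calls it comes to roughly $1.77.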
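The token-based arithmetic above can be reproduced with a few lines of Python. This sketch uses only the rates quoted in this section and counts input tokens only. The function names are hypothetical, and output tokens and infrastructure costs are deliberately left out.

```python
VIDEO_SECONDS = 3_600  # 1-hour video
INFERENCE_CALLS = 8    # one call per content category


def gemini_cost(seconds: int = VIDEO_SECONDS, calls: int = INFERENCE_CALLS,
                tokens_per_second: int = 295, usd_per_million: float = 1.25) -> float:
    """Input-token cost: the full video is tokenized again on every call."""
    tokens = seconds * tokens_per_second
    return tokens / 1_000_000 * usd_per_million * calls


def nova_cost(tokens: int = 276_480, calls: int = 1,
              usd_per_thousand: float = 0.0008) -> float:
    """Input-token cost using the documented 1-hour token estimate."""
    return tokens / 1_000 * usd_per_thousand * calls


if __name__ == "__main__":
    print(f"Gemini, 8 calls: ${gemini_cost():.2f}")
    print(f"Nova, 1 call:    ${nova_cost():.2f}")
    print(f"Nova, 8 calls:   ${nova_cost(calls=INFERENCE_CALLS):.2f}")
```

Keeping the number of calls as an explicit parameter makes it easy to compare the providers on the same basis.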

Here is a table with all of these insights side-by-side, so that you can make the best informed decision:

| Metric | TwelveLabs | Google Gemini | AWS Nova |
| --- | --- | --- | --- |
| Output Inference Quality | ✅ Balanced & Structured: A good mix of accuracy and precision. | 🎯 Accurate but Imprecise: Highly accurate but tends to over-list details and tags. | ⚙️ Accurate but Unstructured: Very accurate and precise, but struggles with formatting. |
| Structured Output | ✅ Yes (Reliable): Natively supports and reliably returns structured data. | ✅ Yes (Reliable): Natively supports and reliably returns structured data. | ❌ No (Unreliable): Does not guarantee structured output, requiring extra development effort to parse responses. |
| Inference Time | Fast (especially cached data): Excels at short-form content and instant retrieval of common data. | Fast (long-form content): Similar overall speed but has an edge in generating lengthy, complex content. | Average: At times can be fast, but generally takes an additional 10-20 seconds compared to other models. |
| Caching Mechanism | ✅ Yes: Pre-processes and caches transcripts and gists upon upload for instant access. | ❌ No: Each query re-processes the video content. | ❌ No: Each query re-processes the video content. |
| Cost | 💸💸 $2.79 | 💸💸💸 $10.62 | 💵 $0.22 |
| Pricing Model | Per-Hour & Per-Call: Charges for video length once, then a smaller fee for each analysis call. | Pay-Per-Token: Charges for the total tokens in the video for each query. | Pay-Per-Token: Charges for the total tokens in the video for each query. |
| Additional Costs | (Included in platform) | GCP Infrastructure Costs | AWS Infrastructure Costs |

Of course, all models will improve and these metrics can change, but with this platform you can experiment with your own videos and see which video-understanding model works best for your use case!

Phase 3: Student Feedback Loop + Classroom Insights

Now onto the final section, where we not only enable the student feedback loop so teachers can understand their classroom, but also see how TwelveLabs integrates into the AWS technology stack.

As you can see above, students now get access to the TwelveLabs-generated data, which includes the practice questions, chapter timeline, and course summary. With TwelveLabs Pegasus text generation, hours spent creating supplemental study material shrink to seconds, and our AWS infrastructure helps deliver this content securely.

Once students complete these practice questions and watch the video, students receive a report detailing:

Quiz Performance by Chapter — Detailed overview of what they missed and the specific topic.

Personalized Study Recommendations — Targeting exactly what students missed.

Recommended External Video Content — Leveraging TwelveLabs Marengo embeddings for similarity comparison with videos in S3 database.

Mastery Score — Score given by the student’s performance by topic.

With that in mind, let’s see how this works in our technical architecture and examine exactly why AWS integration plays such a key role in full end-to-end applications.

As seen in the technical architecture, we incorporate Claude 3.5 Sonnet from the AWS Bedrock service. Without installing any external packages or utilizing external cloud providers, we have access to text-based reasoning models that can directly interact with our data in AWS DynamoDB.

Furthermore, to enhance student learning, we added YouTube video recommendations based on vector embeddings. Using the TwelveLabs Marengo model, we generated context-rich vector embeddings and ran a K-nearest neighbor search based on Euclidean distance.

/api/helpers/reasoning.py (lines 262 - 289)

def fetch_related_videos(self, video_id: str):

    # Fetch presigned URLs for the videos stored in the S3 bucket.
    presigned_urls = DBHandler().fetch_s3_presigned_urls()

    # Fetch the embedding stored by the Marengo model for this video.
    video_object = self.twelvelabs_client.index.video.retrieve(
        index_id=os.getenv('TWELVE_LABS_INDEX_ID'),
        id=video_id,
        embedding_option=['visual-text'],
    )
    video_duration = video_object.system_metadata.duration
    video_embedding_segments = video_object.embedding.video_embedding.segments

    # Concatenate the per-segment embeddings into one vector.
    combined_embedding = np.array([])
    for segment in video_embedding_segments:
        combined_embedding = np.concatenate([combined_embedding, segment.embeddings_float])

    # Generate embeddings of other YouTube videos.
    other_video_embeddings = {}
    for video_url in presigned_urls:
        video_embedding = self.generate_new_video_embeddings(video_url, combined_embedding, video_duration)
        other_video_embeddings[video_url] = video_embedding

    # Conduct K-Nearest Neighbor Search with Euclidean Distance.
    knn_results = self.knn_search(combined_embedding, other_video_embeddings, 5)

    return knn_results
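The body of `knn_search` is not shown in this excerpt. As a rough idea of what a Euclidean-distance K-nearest neighbor search does, here is a minimal pure-Python sketch. `knn_search_sketch` is a hypothetical stand-in, not the repository's implementation, and it assumes all embeddings have the same length.

```python
import math


def knn_search_sketch(query: list[float],
                      candidates: dict[str, list[float]],
                      k: int) -> list[tuple[str, float]]:
    """Return the k candidates closest to the query, nearest first."""
    distances = [
        (math.dist(query, embedding), key)  # Euclidean distance between two points
        for key, embedding in candidates.items()
    ]
    distances.sort()  # smallest distance first
    return [(key, distance) for distance, key in distances[:k]]


if __name__ == "__main__":
    videos = {"video_a": [3.0, 4.0], "video_b": [1.0, 0.0], "video_c": [0.0, 2.0]}
    print(knn_search_sketch([0.0, 0.0], videos, k=2))
```

A smaller distance means the two embeddings, and therefore the two videos, are more similar, so the first entries in the result are the strongest recommendations.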

Notice how all of these features rely on a single unified platform: AWS. This keeps your data secure in one place and makes development extremely streamlined.

Finally, after students submit their assignments, the teacher sees the completed quiz results of each student and analyzes their classroom in their dashboard.

This entire student feedback loop enriches learning on the student side, where coursework is supplemented with AI-generated content, while giving teachers valuable insight for preparing their next lesson.

This was all possible thanks to video understanding models like TwelveLabs, which understand our video content and automate the generation of learning material that would otherwise take hours or days to produce.

Conclusion

Great, thanks for reading along! This tutorial showed you not only how powerful TwelveLabs can be in the education industry, but hopefully provided you with many learning opportunities like:

TwelveLabs AWS Integration — Explaining how TwelveLabs integrated into AWS Bedrock can be a game-changer when it comes to ease of development as well as centralized architecture.

Architecture Explanation & Design Choices — Why certain technical decisions were made either for cost-saving or performance.

Model Benchmarking — Visualizing outputs of market video-understanding models and their inference time to ensure you choose the correct model for your next project.

Check out some more in-depth resources regarding this project here:

TwelveLabs Technical Report: Google Doc

Technical Architecture Diagrams: LucidCharts

API Documentation: Google Doc

GitHub Repository: https://github.com/nathanchess/twelve-labs-education-poc

Live Application Link: https://twelve-labs-education-poc-s7sz.vercel.app/

By combining TwelveLabs with the compute and wide range of services in AWS, you unlock the power to build highly sophisticated services and software that can modernize the education industry and beyond.