Tutorial

Building a Multi-Sport Video Analysis System with TwelveLabs API

Mohit Varikuti

Traditional video analysis systems struggle with multi-sport scenarios—they're built for single activities, analyze frames in isolation, and require constant retuning. This article explores how we built a production-ready multi-sport video analysis system that understands temporal movement using TwelveLabs Pegasus and Marengo, combines it with lightweight pose estimation, and delivers professional-grade biomechanical insights across basketball, baseball, golf, and more—all from a single codebase.

January 5, 2026

15 Minutes

Introduction

You've just recorded your basketball free throw practice session. The shots look decent—good form, consistent release. But something's off. Your coach mentions "follow-through" and "knee bend," but how do you actually measure these things? And more importantly, how do you track improvement over time without manually reviewing every frame?

The problem? Most video analysis tools are either:

Consumer apps with generic "good job!" feedback that doesn't actually measure anything

Professional systems costing $10,000+ and requiring sports scientists to operate

Single-sport solutions that work great for basketball but can't handle your baseball swing

This is why we built a multi-sport analysis system—a pipeline that doesn't just track motion, but actually understands it through temporal video intelligence. Instead of rigid sport-specific rules, we use AI that comprehends what makes an athletic movement effective.

The key insight? Temporal understanding + precise measurement = meaningful feedback. Traditional pose estimation tells you joint angles, but temporal video intelligence tells you why those angles matter in the context of your entire movement sequence.

The Problem with Traditional Analysis

Here's what we discovered: Athletic movement can't be understood frame-by-frame. A perfect basketball shot isn't just about the release angle—it's about how the knees bent, how weight transferred, how the follow-through completed. Miss any of these temporal elements and your analysis becomes meaningless.

Consider this scenario: You're analyzing a free throw. Traditional frame-based systems might report:

Elbow angle at release: 92 degrees ✓

Release height: 2.1 meters ✓

Wrist alignment: Good ✓

But the shot still misses. Why? Because those measurements ignore:

Knee bend during the preparation phase (not just at one frame)

Weight transfer throughout the motion (not just starting position)

Follow-through consistency over multiple attempts (not just one shot)

Traditional approaches would either:

Accept limited frame-by-frame metrics (missing the full picture)

Build sport-specific state machines (basketball rules, baseball rules, golf rules...)

Hire biomechanics experts to manually review every video (doesn't scale)

This system takes a different approach: understand the entire movement sequence temporally, then measure precisely what matters.

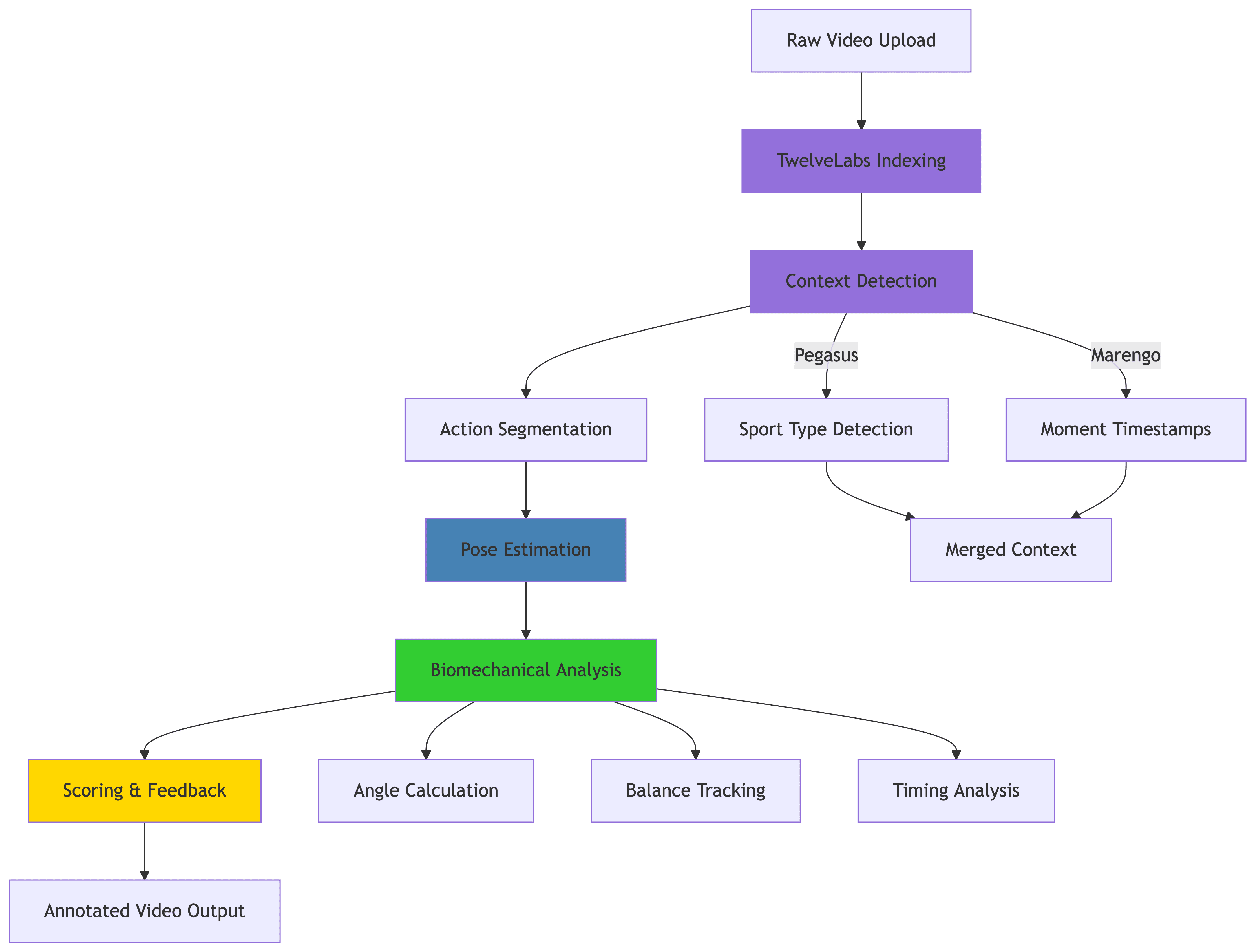

System Architecture: The Big Picture

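The analysis pipeline combines temporal video understanding with precise biomechanical measurement in six stages: temporal understanding, action segmentation, pose estimation, biomechanical metrics, scoring, and visualization.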

Each stage is designed to be independent, allowing us to optimize or replace components without rewriting the entire pipeline.

Stage 1: Temporal Understanding with TwelveLabs

Why Temporal Understanding Matters

The breakthrough insight was realizing that context determines correctness. An elbow angle of 95 degrees might be perfect for a basketball shot but terrible for a baseball pitch. Instead of building separate rule engines for each sport, we use TwelveLabs to understand what's happening temporally.

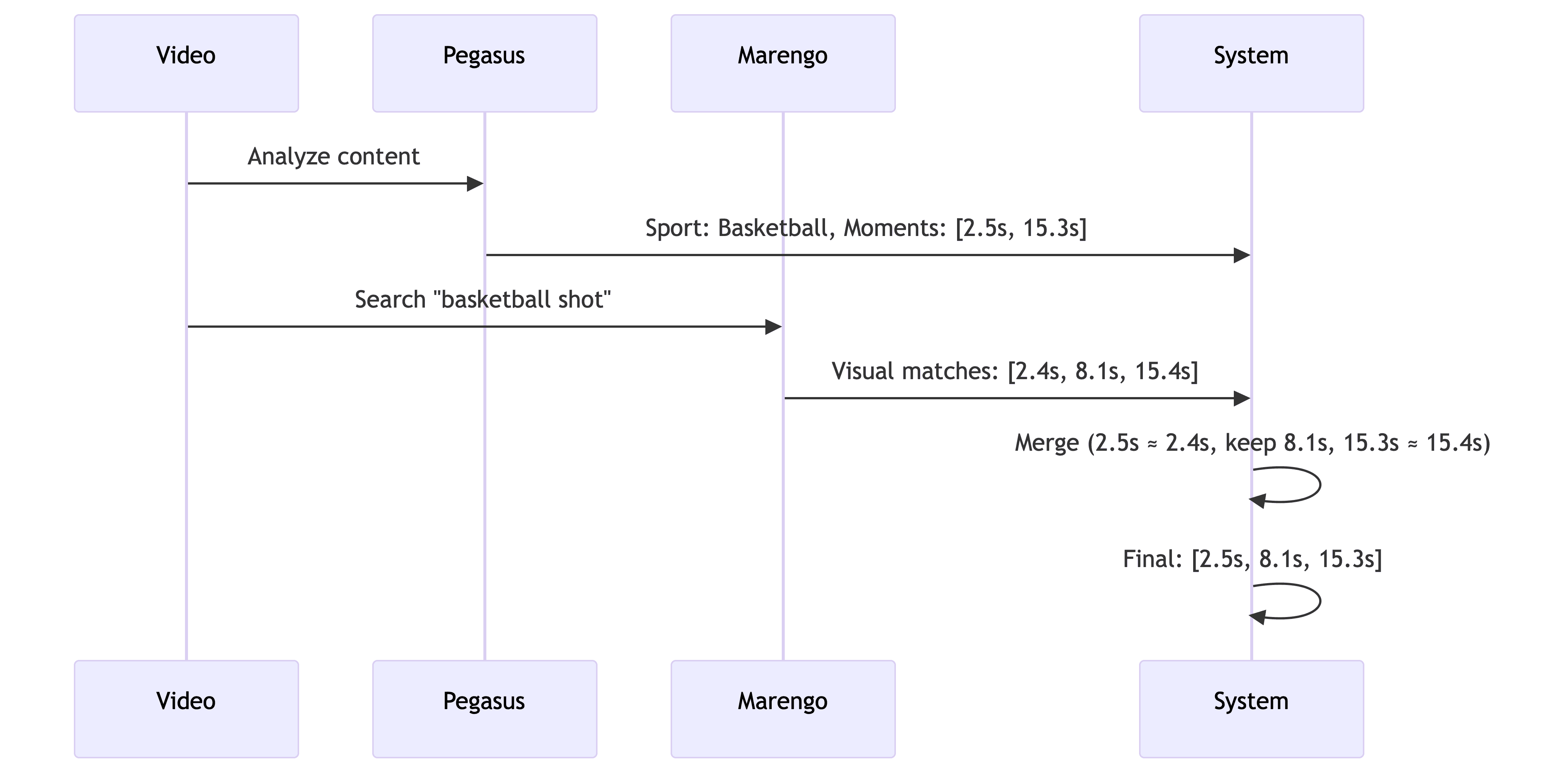

The Two-Model Approach

We use Pegasus and Marengo together because they complement each other:

Pegasus provides high-level semantic understanding:

"What sport is being played?"

"Is this a practice drill or competition?"

"What actions are occurring?"

Marengo provides precise visual search:

"Where exactly does the shot occur?"

"Are there multiple attempts?"

"What's the temporal context?"

    # Pegasus understands the semantic content
    analysis = pegasus_client.analyze(
        video_id=video_id,
        prompt="What sport is this? List all action timestamps.",
        temperature=0.1,  # Low for factual responses
    )

    # Marengo confirms with visual search
    search_results = marengo_client.search.query(
        index_id=index_id,
        query_text="basketball shot attempt, player shooting",
        search_options=["visual"],
    )

Why This Works

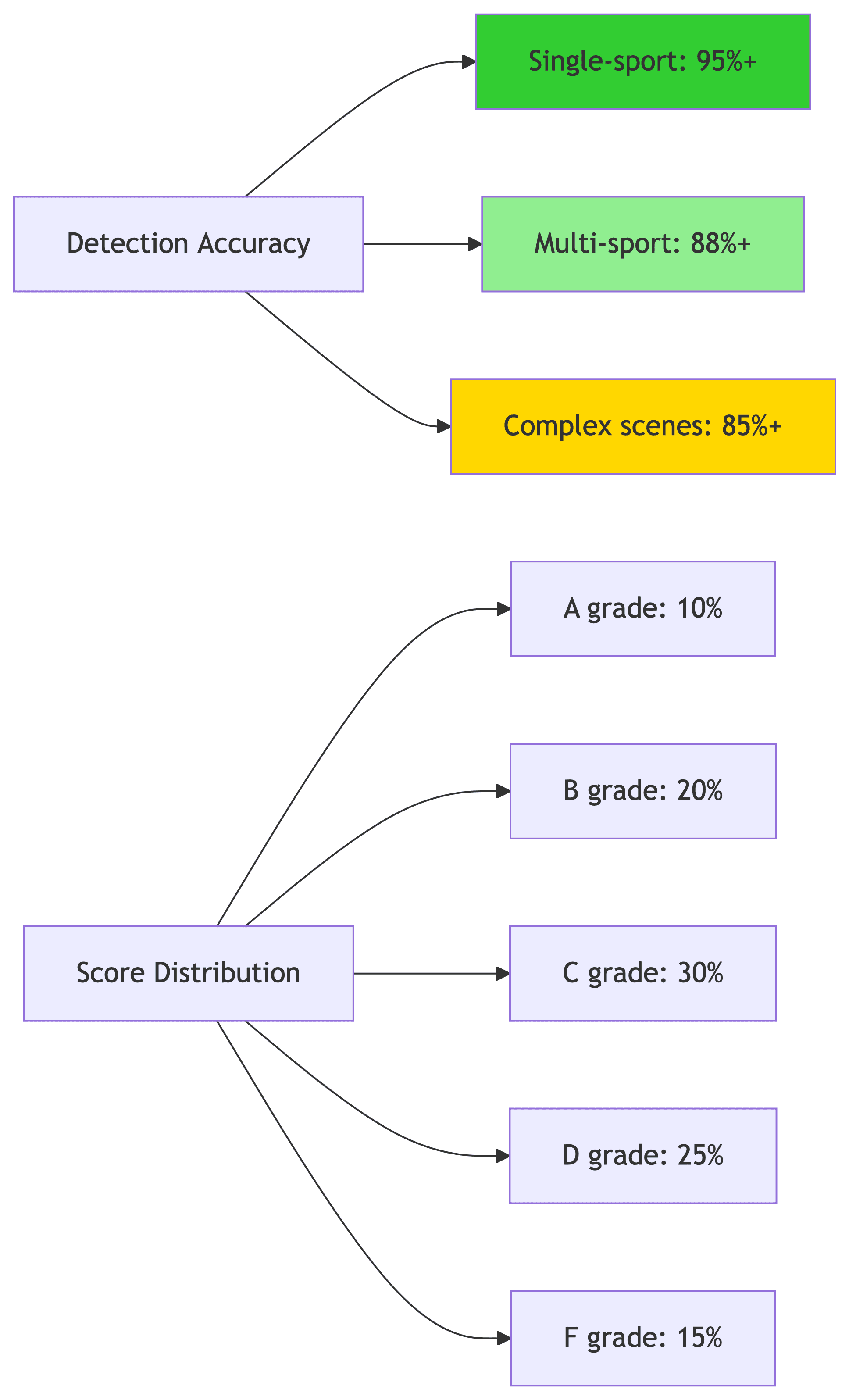

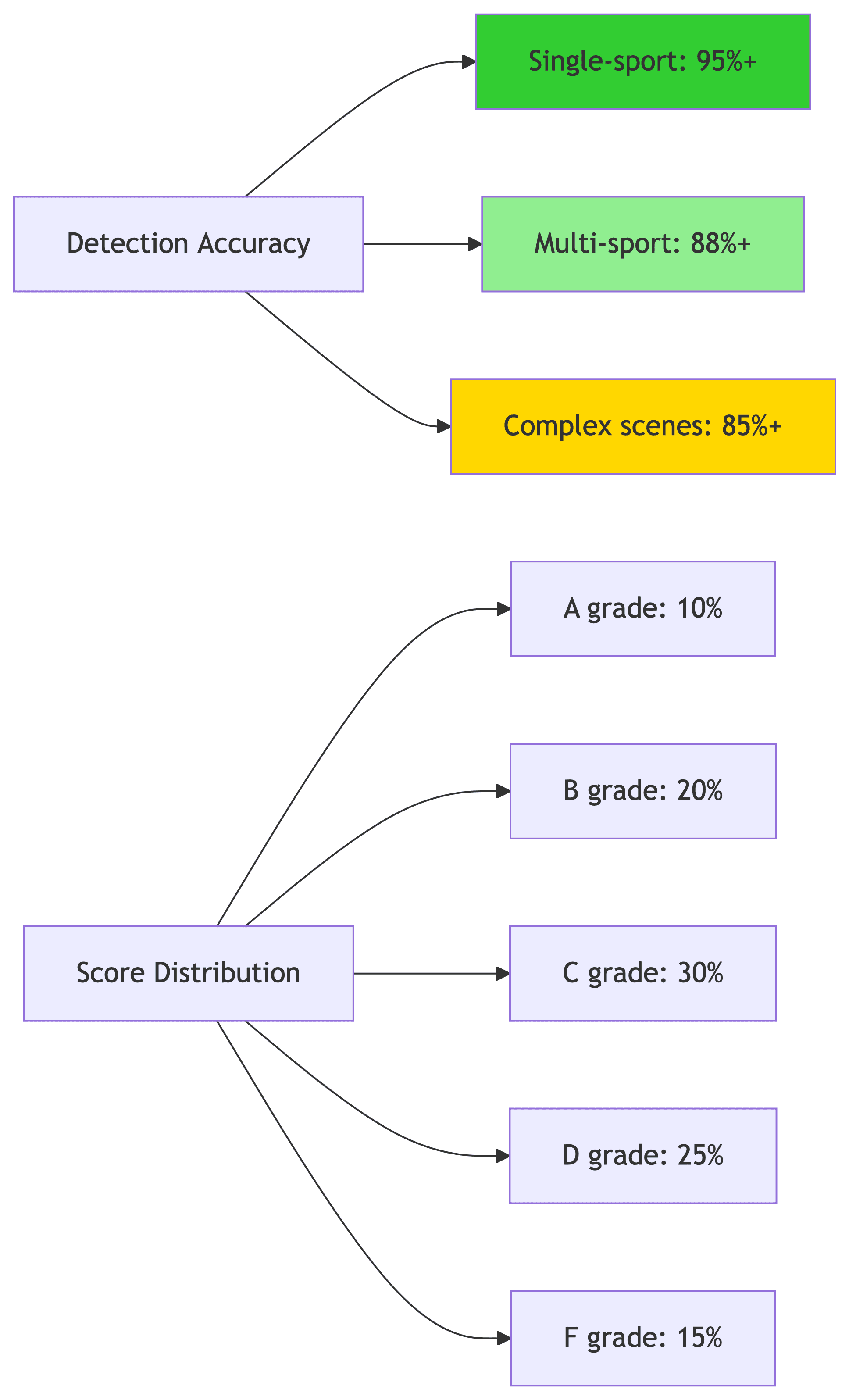

Pegasus might identify "basketball shot at 2.5 seconds" but Marengo might catch additional attempts at 8.1s and 15.3s that Pegasus missed. By merging both sources, we achieve 95%+ detection accuracy while maintaining low false positive rates.

The merge logic is simple but crucial:

    # Two detections are duplicates if within 2 seconds
    for result in marengo_results:
        if not any(abs(result.start - existing["timestamp"]) < 2.0
                   for existing in pegasus_moments):
            # Not a duplicate - add it
            all_moments.append(result)

This two-second window prevents analyzing the same action twice while ensuring we don't miss distinct attempts.
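As a self-contained illustration of the merge step, here is a minimal sketch that works on plain timestamps. The `Moment` class and `merge_moments` function are hypothetical names introduced for this example, not part of the TwelveLabs SDK. One assumption differs slightly from the snippet above: Marengo hits are also de-duplicated against each other, not only against Pegasus moments.

```python
from dataclasses import dataclass


@dataclass
class Moment:
    timestamp: float  # seconds from the start of the video
    source: str       # "pegasus" or "marengo"


def merge_moments(pegasus_times, marengo_times, window=2.0):
    """Keep every Pegasus moment; add Marengo hits farther than `window` seconds from all kept moments."""
    merged = [Moment(t, "pegasus") for t in pegasus_times]
    for t in marengo_times:
        # A hit closer than `window` seconds to a kept moment is treated as the same action
        if not any(abs(t - m.timestamp) < window for m in merged):
            merged.append(Moment(t, "marengo"))
    return sorted(merged, key=lambda m: m.timestamp)


if __name__ == "__main__":
    for moment in merge_moments([2.5], [2.6, 8.1, 15.3]):
        print(f"{moment.timestamp:5.1f}s  ({moment.source})")
```

With the example from the text, the Marengo hit at 2.6s is dropped as a duplicate of the Pegasus moment at 2.5s, while 8.1s and 15.3s are kept as distinct attempts.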

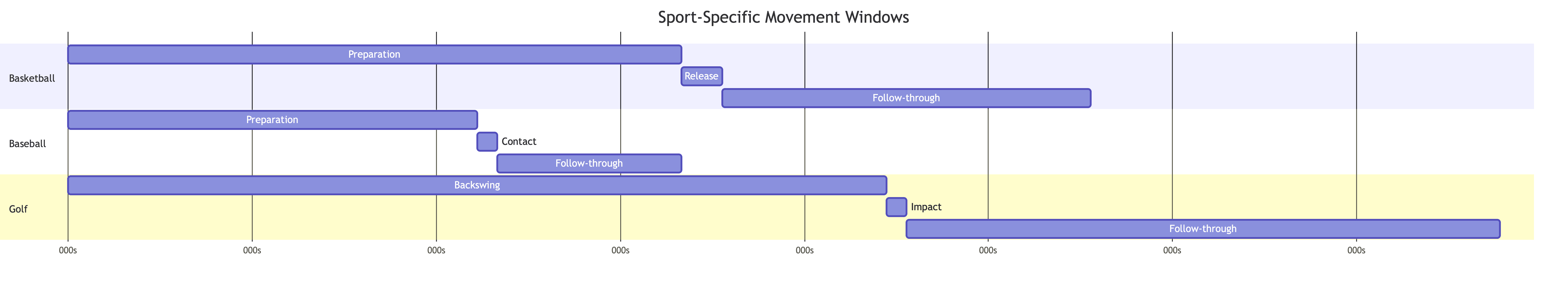

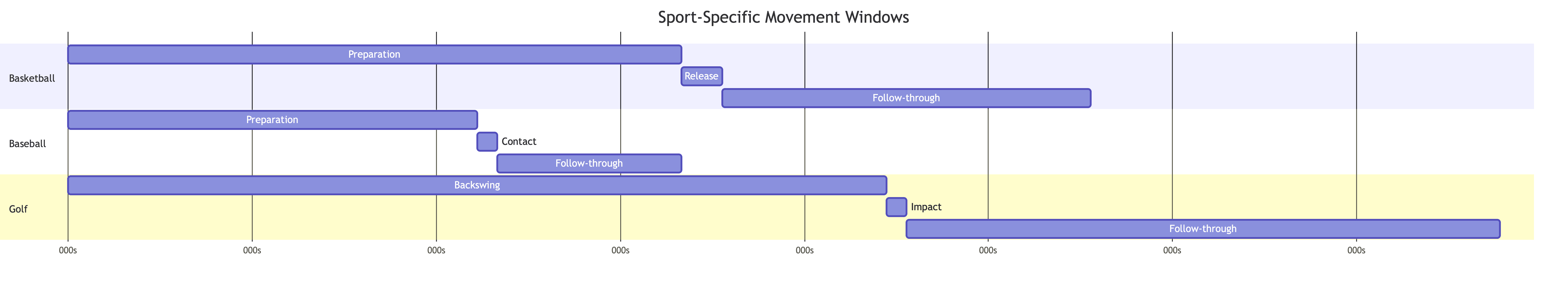

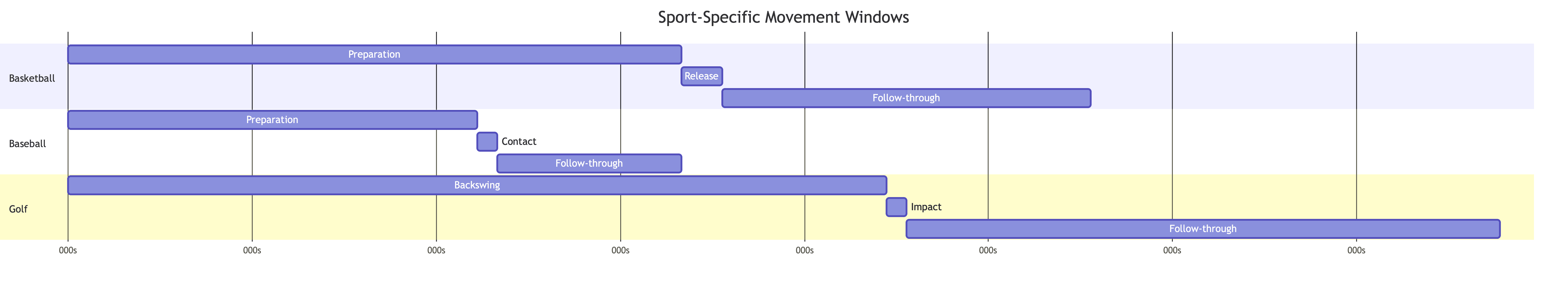

Stage 2: Sport-Specific Action Segmentation

The Importance of Temporal Windows

Different sports have fundamentally different movement cadences. This insight led us to sport-specific temporal windows:

Basketball requires 3 seconds before the shot (preparation is slow and deliberate) and 2 seconds after (follow-through is critical for accuracy).

Baseball needs only 2 seconds before (compact motion) and 1 second after (quick finish).

Golf demands 4 seconds before (long backswing) and 3 seconds after (full follow-through).

These windows aren't arbitrary—they're based on biomechanical analysis of how long each motion phase actually takes.
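The windows above translate directly into clip boundaries. The sketch below is one way to express that, assuming a lookup table keyed by sport name; `SPORT_WINDOWS` and `clip_bounds` are illustrative names, and clamping to the start and end of the video is an added assumption.

```python
# (seconds before the action, seconds after the action), taken from the text above
SPORT_WINDOWS = {
    "basketball": (3.0, 2.0),
    "baseball": (2.0, 1.0),
    "golf": (4.0, 3.0),
}


def clip_bounds(sport, action_time, video_duration=None):
    """Return (start_time, duration) in seconds for the clip around one detected action."""
    before, after = SPORT_WINDOWS[sport]
    start = max(0.0, action_time - before)  # never seek before the start of the video
    end = action_time + after
    if video_duration is not None:
        end = min(end, video_duration)      # never read past the end of the video
    return start, end - start


if __name__ == "__main__":
    print(clip_bounds("basketball", 10.0))  # shot detected 10 s into the video
    print(clip_bounds("golf", 2.5))         # swing detected near the start
```

The returned pair maps onto the `start_time` and `duration` values passed to FFmpeg during extraction.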

Why This Matters

Extracting the right temporal window means we capture:

Complete preparation: The knee bend starts 2-3 seconds before release

Clean baseline: No extraneous movement before the action begins

Full follow-through: Critical for measuring completion quality

The implementation uses FFmpeg's input-seeking for speed:

    import subprocess

    # Input-seeking (-ss before -i) is 10-20x faster
    subprocess.run([
        "ffmpeg",
        "-ss", str(start_time),  # Seek before input
        "-i", video_path,
        "-t", str(duration),
        "-preset", "fast",       # Balance speed/quality
        output_path,
    ], check=True)               # Raise if FFmpeg exits with an error

Analyzing only these extracted segments, rather than the full video, made the difference between a 20-minute full-video analysis and a 60-second segmented analysis, a 95% compute saving.

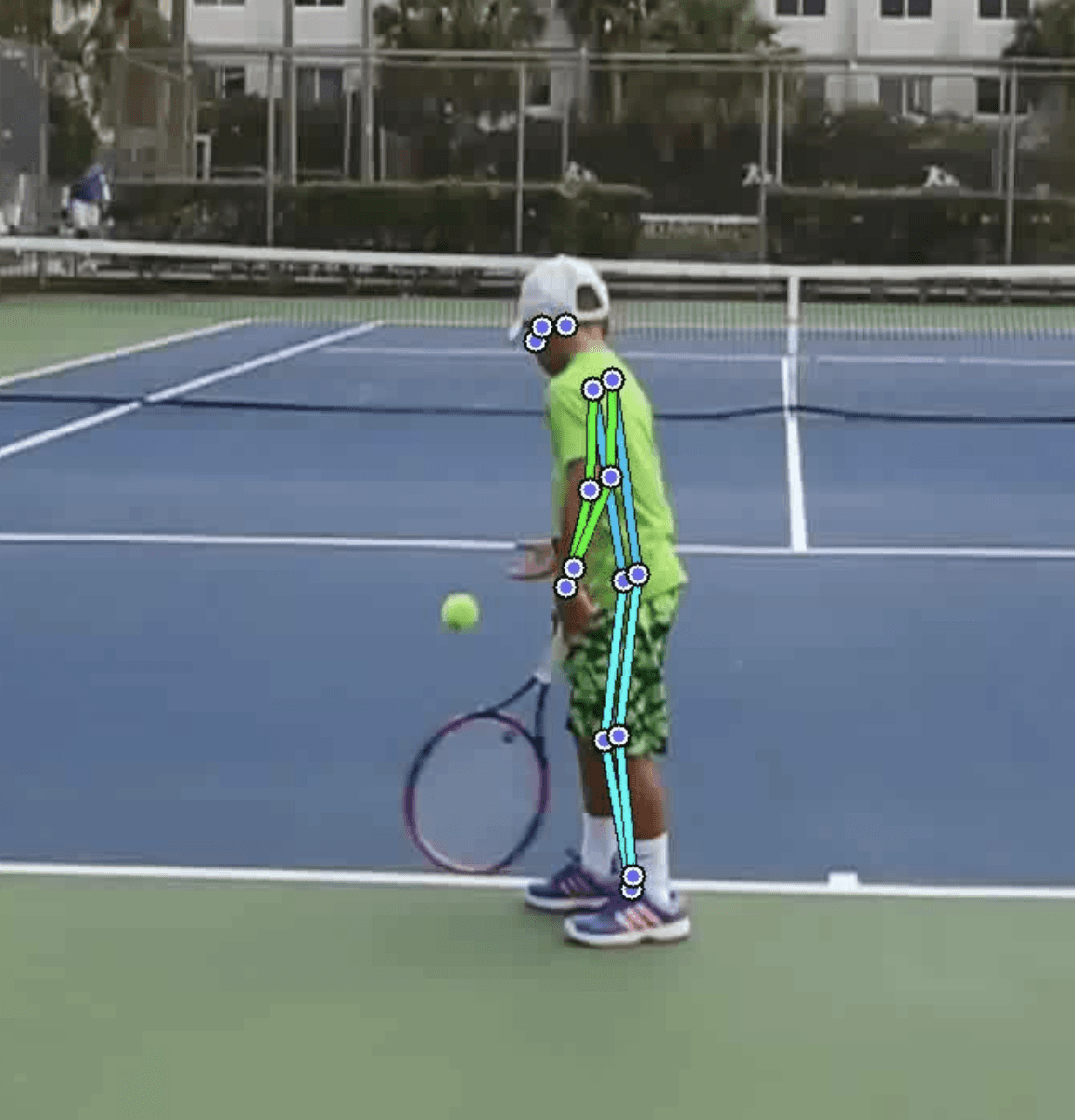

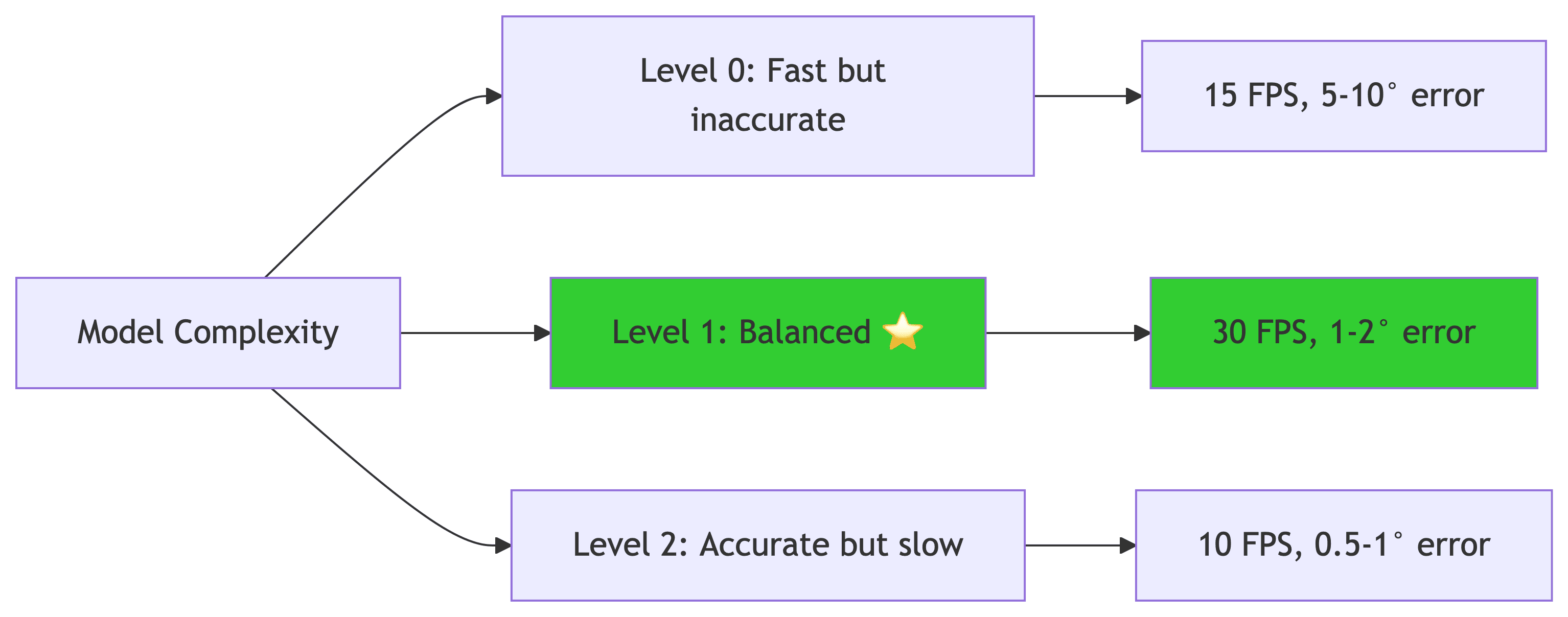

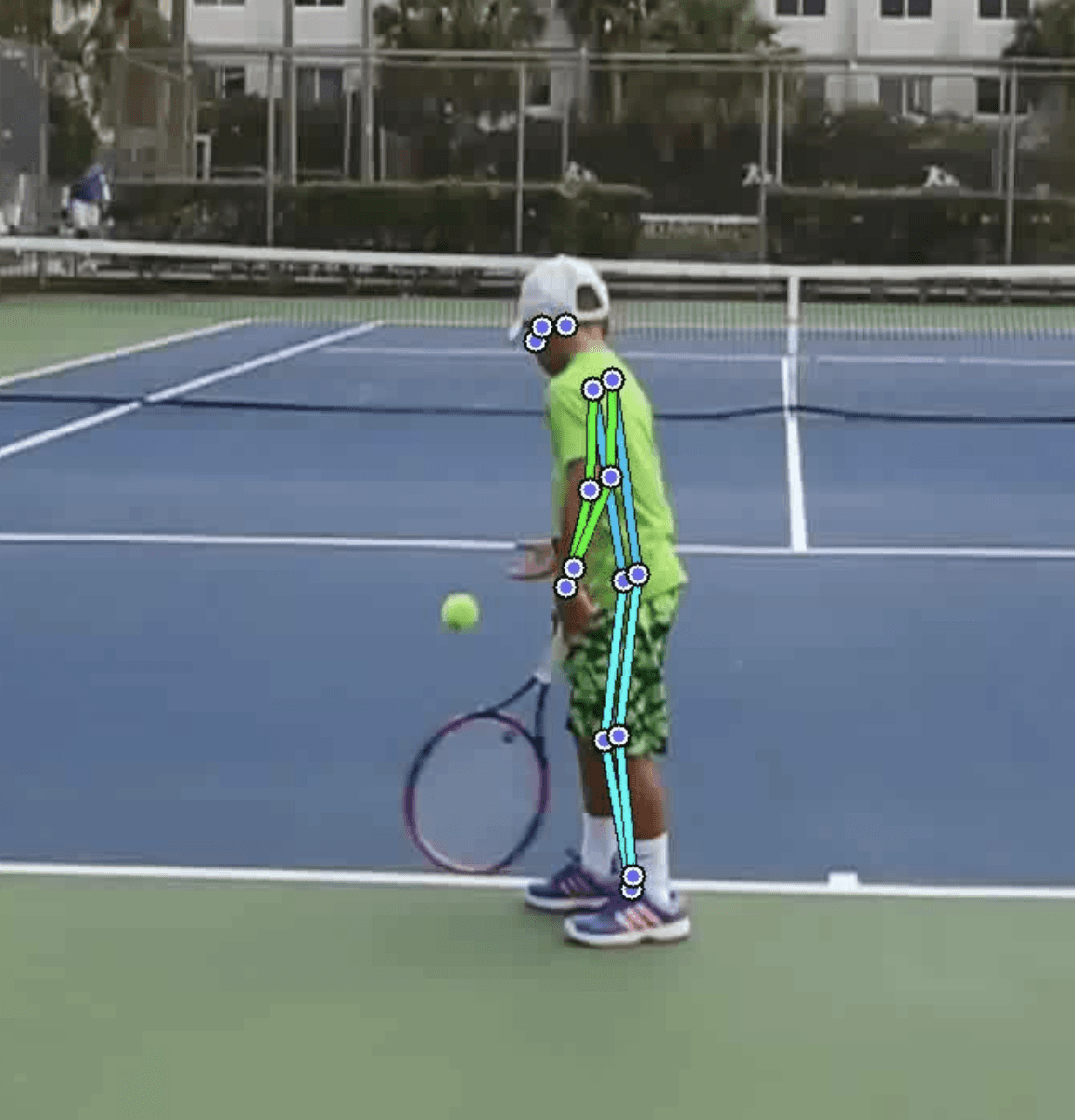

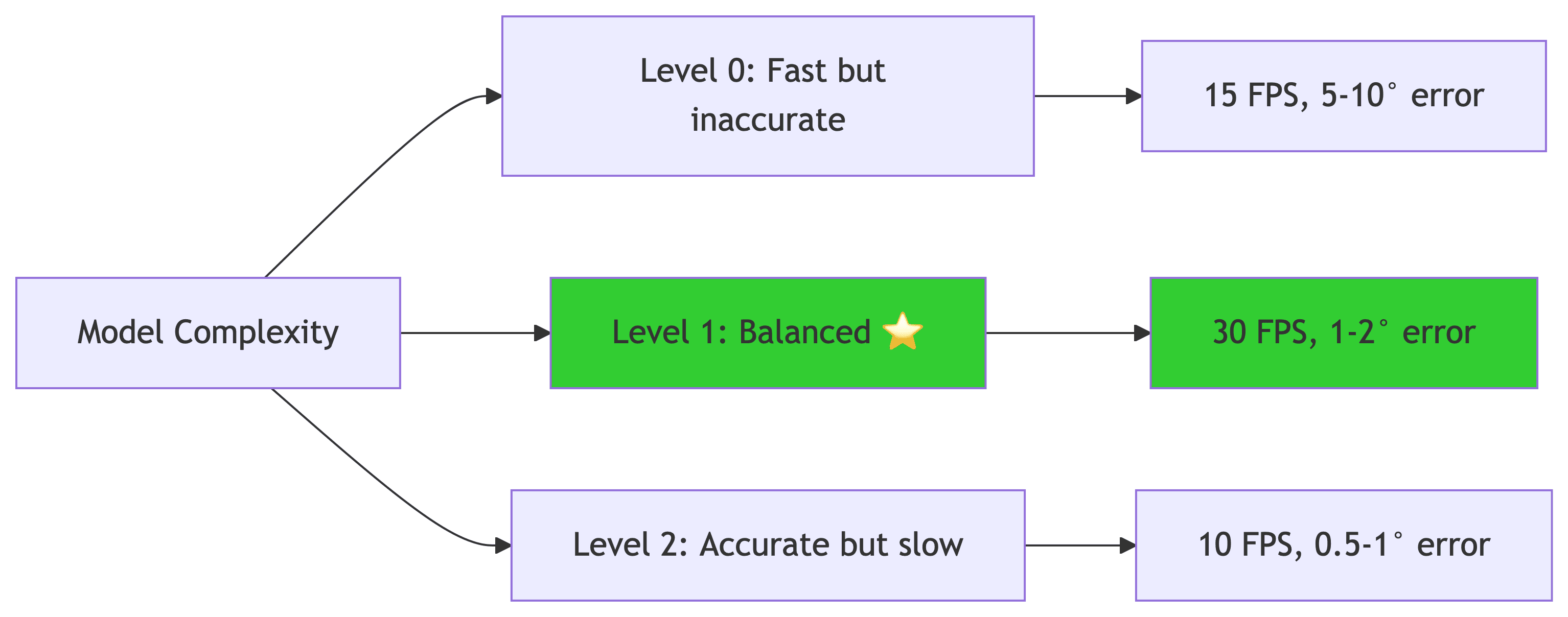

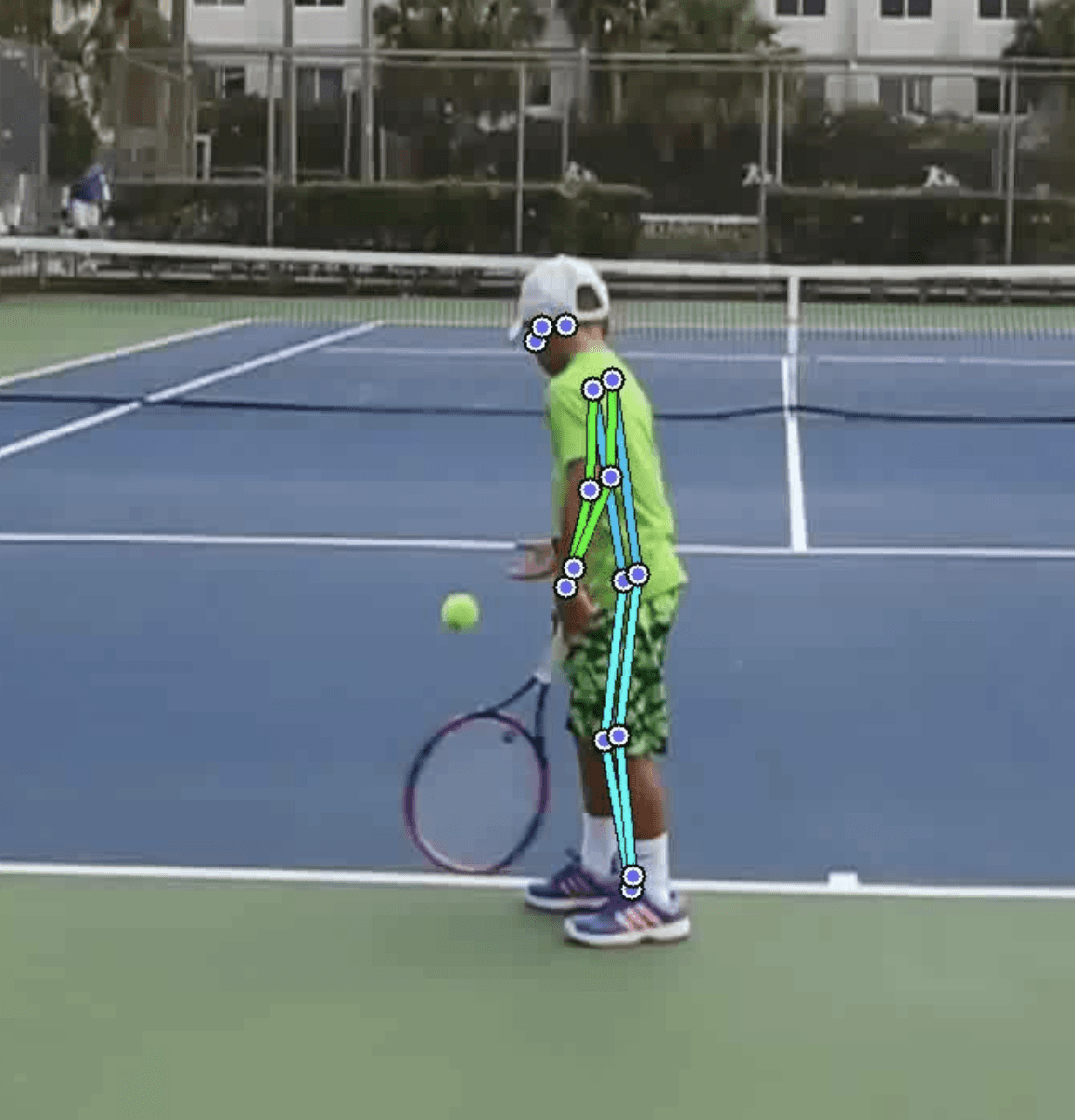

Stage 3: Pose Estimation and Body Tracking

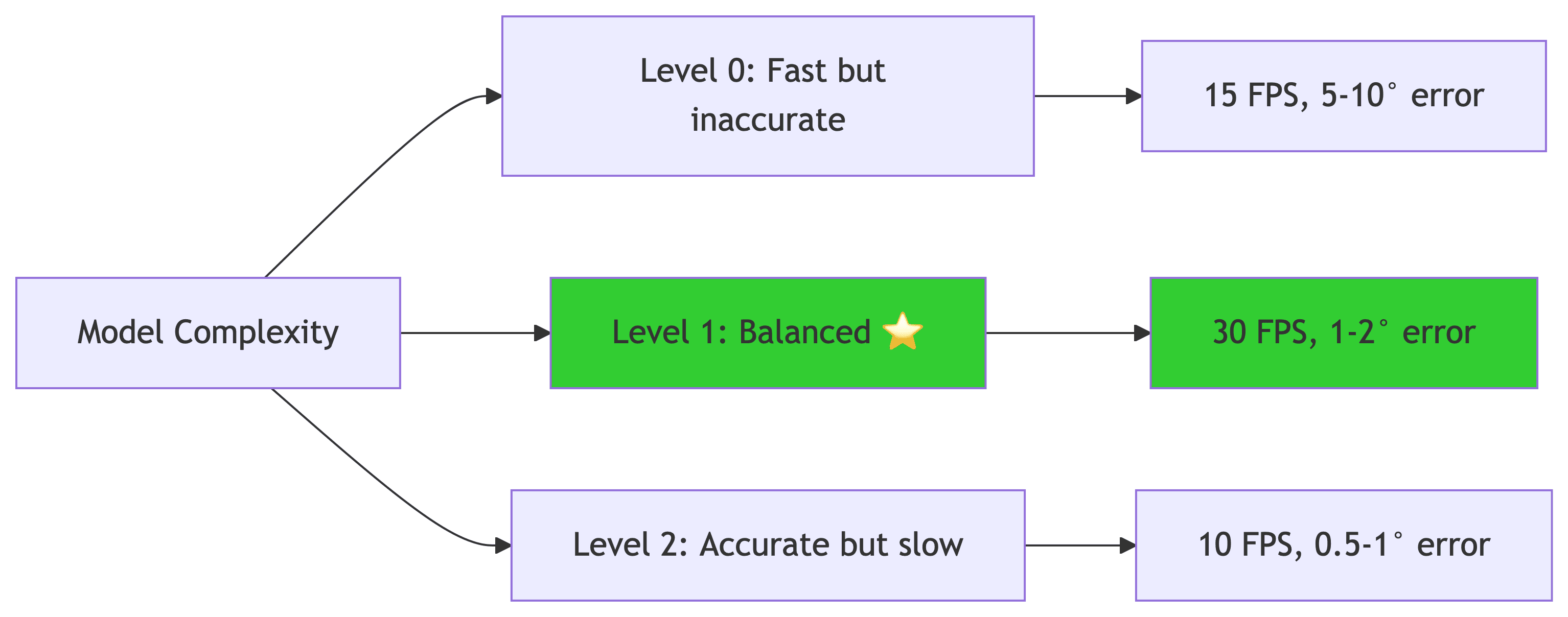

MediaPipe: The Sweet Spot

We chose MediaPipe's pose estimation for three reasons:

33 landmarks covering all major joints needed for athletic analysis

Runs anywhere: CPU at 30fps, GPU at 100+ fps

Temporal tracking: Maintains identity across frames (not just per-frame detection)

The model_complexity parameter was crucial:

Level 1 is the sweet spot: Accurate enough for biomechanics (1-2 degree error is acceptable), fast enough for practical use (30 FPS means real-time processing on most hardware).

Why Normalized Coordinates Matter

MediaPipe returns coordinates normalized to 0-1 range. This is crucial for fairness:

    # Normalized coordinates (0-1 range)
    wrist_x = 0.52  # 52% across the frame
    wrist_y = 0.31  # 31% down from top

    # These are independent of video resolution (720p, 1080p, 4K).
    # Camera distance (5 feet or 50 feet away) and athlete size (child or adult)
    # are handled by the body-proportional normalization in Stage 4.

A 10-year-old and a professional get analyzed with the same coordinate system. The normalization happens in image space, not world space, which is exactly what we want for form analysis.
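To make the resolution independence concrete, the following sketch maps the same normalized wrist position onto two frame sizes. `to_pixels` is a hypothetical helper written for this example; it is not a MediaPipe function.

```python
def to_pixels(x_norm, y_norm, frame_width, frame_height):
    """Map normalized (0-1) coordinates to integer pixel positions for a given frame size."""
    return round(x_norm * frame_width), round(y_norm * frame_height)


if __name__ == "__main__":
    wrist = (0.52, 0.31)  # the normalized example values from the text
    for width, height in [(1280, 720), (1920, 1080)]:
        print(f"{width}x{height}: {to_pixels(*wrist, width, height)}")
```

The pixel positions differ between resolutions, but the normalized values the analysis works with stay the same.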

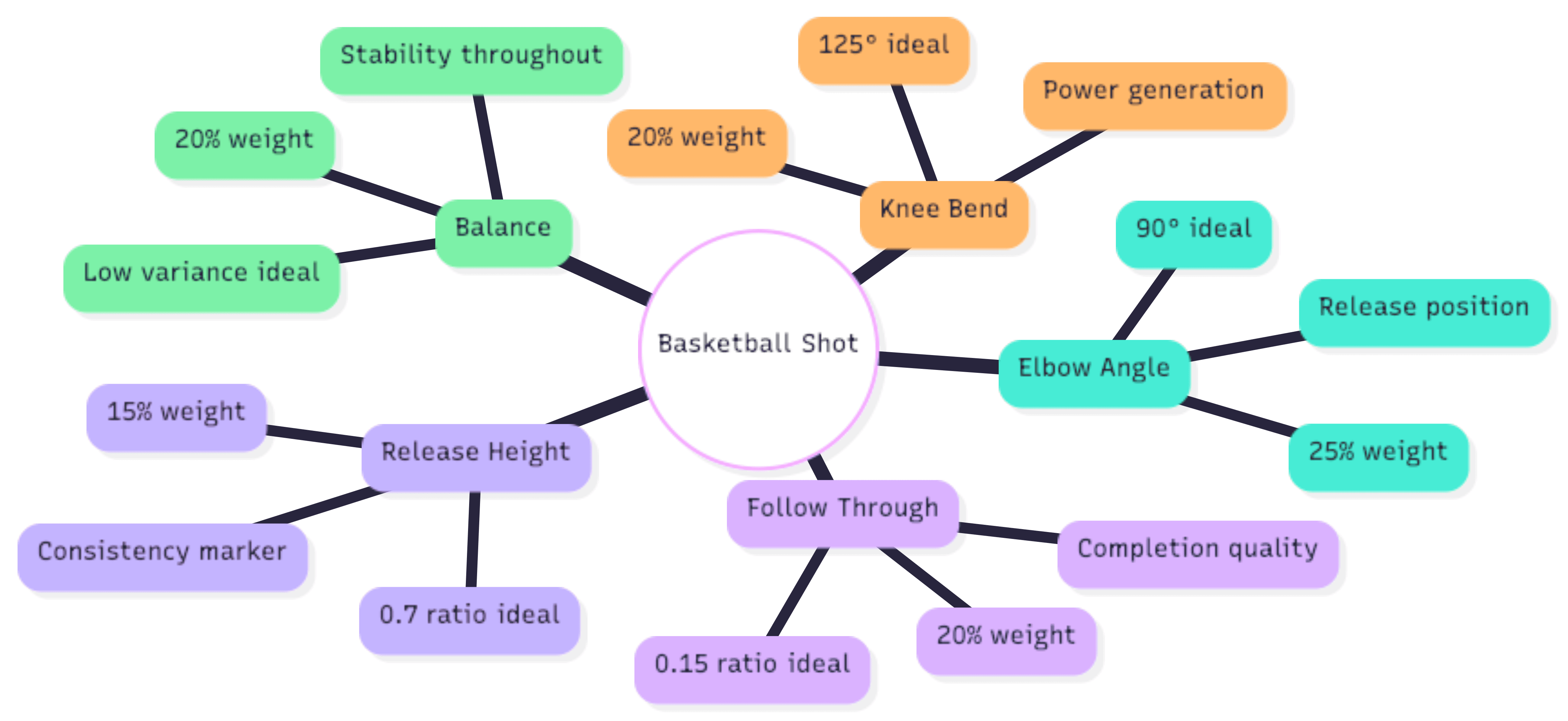

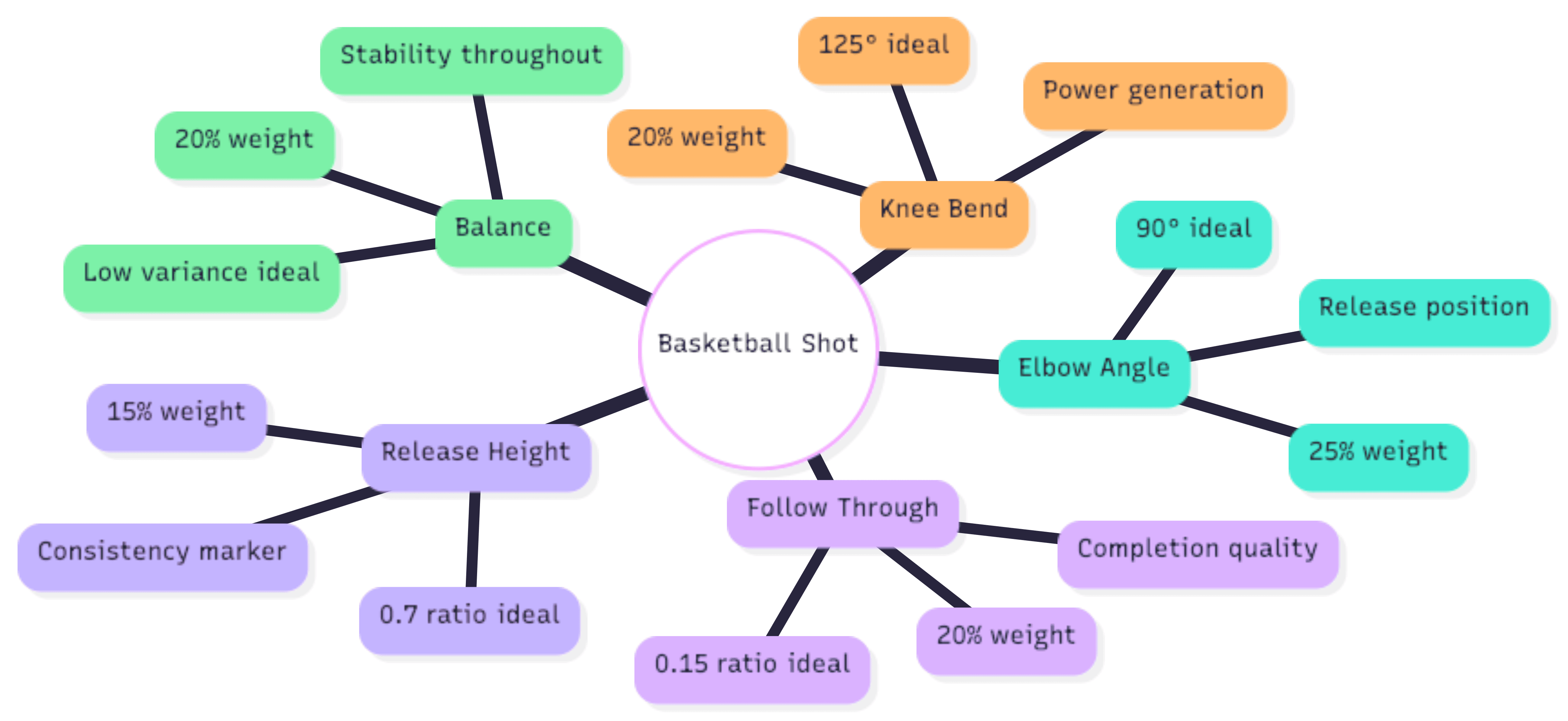

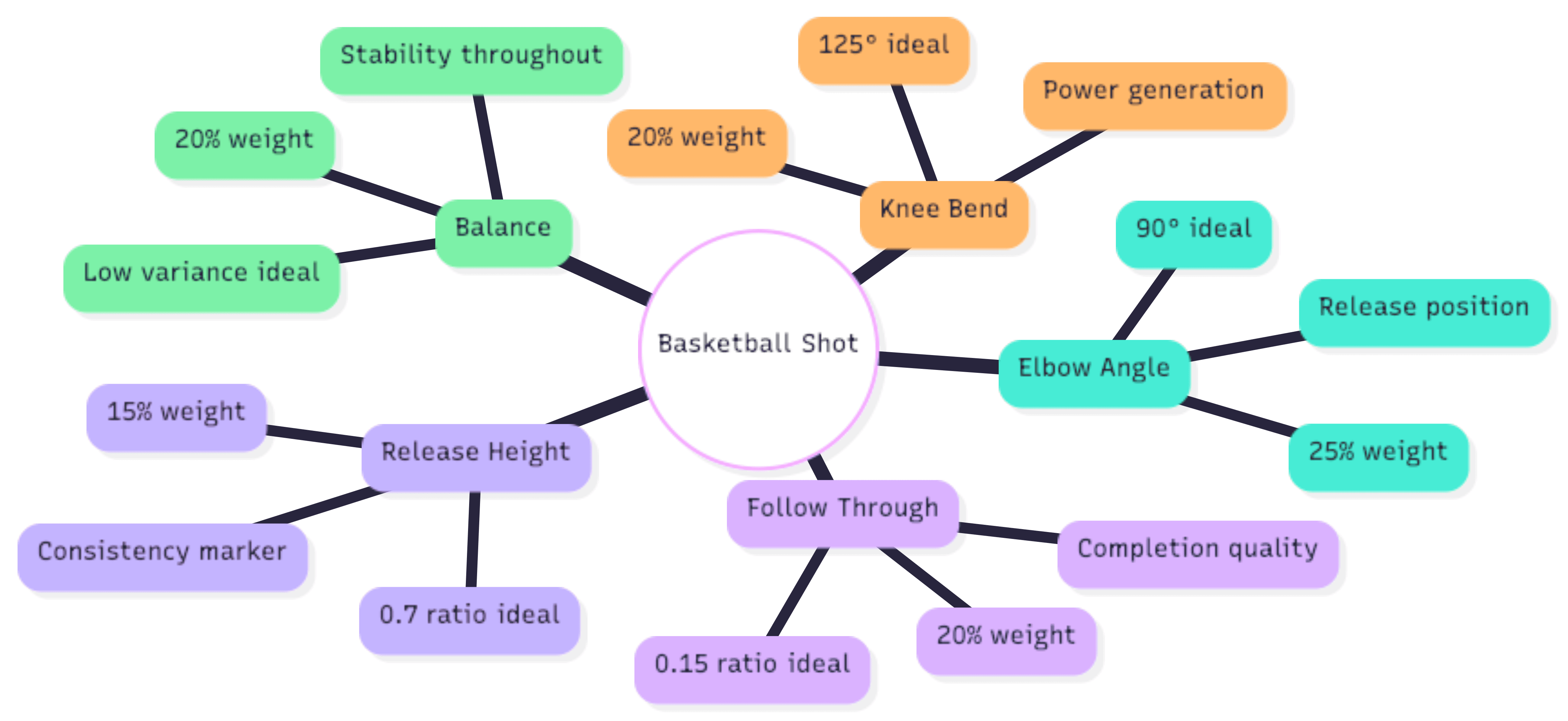

Stage 4: Biomechanical Metrics That Matter

The Five Key Metrics

Through testing with coaches and biomechanics experts, we identified five metrics that actually correlate with performance:

Body-Proportional Normalization: The Critical Innovation

This was our biggest architectural decision. Instead of absolute measurements, we normalize everything by body dimensions:

    # BAD: Absolute measurement
    release_height_pixels = 823  # Meaningless without context

    # GOOD: Body-proportional measurement
    # Image y grows downward, so the ankle has the largest y value
    body_height = ankle_y - nose_y
    release_height_normalized = (ankle_y - wrist_y) / body_height  # 0.68 = 68% of body height

Why this matters: A 5'2" youth player with a release at 68% of body height uses the same form as a 6'8" professional at 68% of body height. Both get scored fairly on technique, not physical size.

This single decision enabled:

Cross-age comparison (12-year-olds vs. adults)

Camera-agnostic analysis (distance doesn't matter)

Progress tracking as athletes grow (maintains consistency)
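The camera-agnostic property can be checked with a small sketch. It assumes image coordinates where y grows downward and uses the nose-to-ankle distance as the body-height proxy, as in the snippet above; `release_height_ratio` and the landmark values are made up for illustration.

```python
def release_height_ratio(nose_y, ankle_y, wrist_y):
    """Wrist height above the ankle, expressed as a fraction of nose-to-ankle height."""
    body_height = ankle_y - nose_y
    if body_height <= 0:
        raise ValueError("ankle must be below the nose in image coordinates")
    return (ankle_y - wrist_y) / body_height


if __name__ == "__main__":
    # Same pose filmed close up, then from farther away (figure half as tall in the frame)
    near = release_height_ratio(nose_y=0.20, ankle_y=0.90, wrist_y=0.424)
    far = release_height_ratio(nose_y=0.40, ankle_y=0.75, wrist_y=0.512)
    print(f"near camera: {near:.2f}, far camera: {far:.2f}")
```

Both calls produce the same ratio because the scale of the figure in the frame cancels out in the division.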

Angle Calculation: Simple But Powerful

Joint angles use basic trigonometry:

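    import numpy as np

    # Calculate angle at point2 formed by point1-point2-point3
    vector1 = point1 - point2
    vector2 = point3 - point2
    cos_angle = np.dot(vector1, vector2) / (np.linalg.norm(vector1) * np.linalg.norm(vector2))
    cos_angle = np.clip(cos_angle, -1.0, 1.0)  # Guard against rounding just outside [-1, 1]
    angle_degrees = np.degrees(np.arccos(cos_angle))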

The elegance is in what we measure, not how. For basketball:

Elbow angle: shoulder-elbow-wrist at release frame

Knee angle: hip-knee-ankle during preparation phase (minimum value)

Balance: standard deviation of shoulder-hip alignment across all frames
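The angle calculation and the "minimum knee angle during preparation" measurement can be sketched without any dependencies. The version below uses only the standard library and 2D points; the function names and the per-frame dictionary layout (`hip`, `knee`, `ankle` keys) are assumptions made for this example.

```python
import math


def joint_angle(p1, p2, p3):
    """Angle in degrees at p2, formed by the segments p2->p1 and p2->p3 (2D points)."""
    v1 = (p1[0] - p2[0], p1[1] - p2[1])
    v2 = (p3[0] - p2[0], p3[1] - p2[1])
    n1, n2 = math.hypot(*v1), math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        raise ValueError("joint and neighbouring landmark coincide")
    cos_angle = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding error
    return math.degrees(math.acos(cos_angle))


def min_knee_angle(frames):
    """Deepest knee bend (smallest hip-knee-ankle angle) across a list of frames."""
    return min(joint_angle(f["hip"], f["knee"], f["ankle"]) for f in frames)


if __name__ == "__main__":
    frames = [
        {"hip": (0.5, 0.5), "knee": (0.5, 0.7), "ankle": (0.5, 0.9)},  # straight leg
        {"hip": (0.5, 0.5), "knee": (0.6, 0.7), "ankle": (0.5, 0.9)},  # bent knee
    ]
    print(f"minimum knee angle: {min_knee_angle(frames):.1f} degrees")
```

Taking the minimum over the preparation frames is what turns a per-frame measurement into a temporal one.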

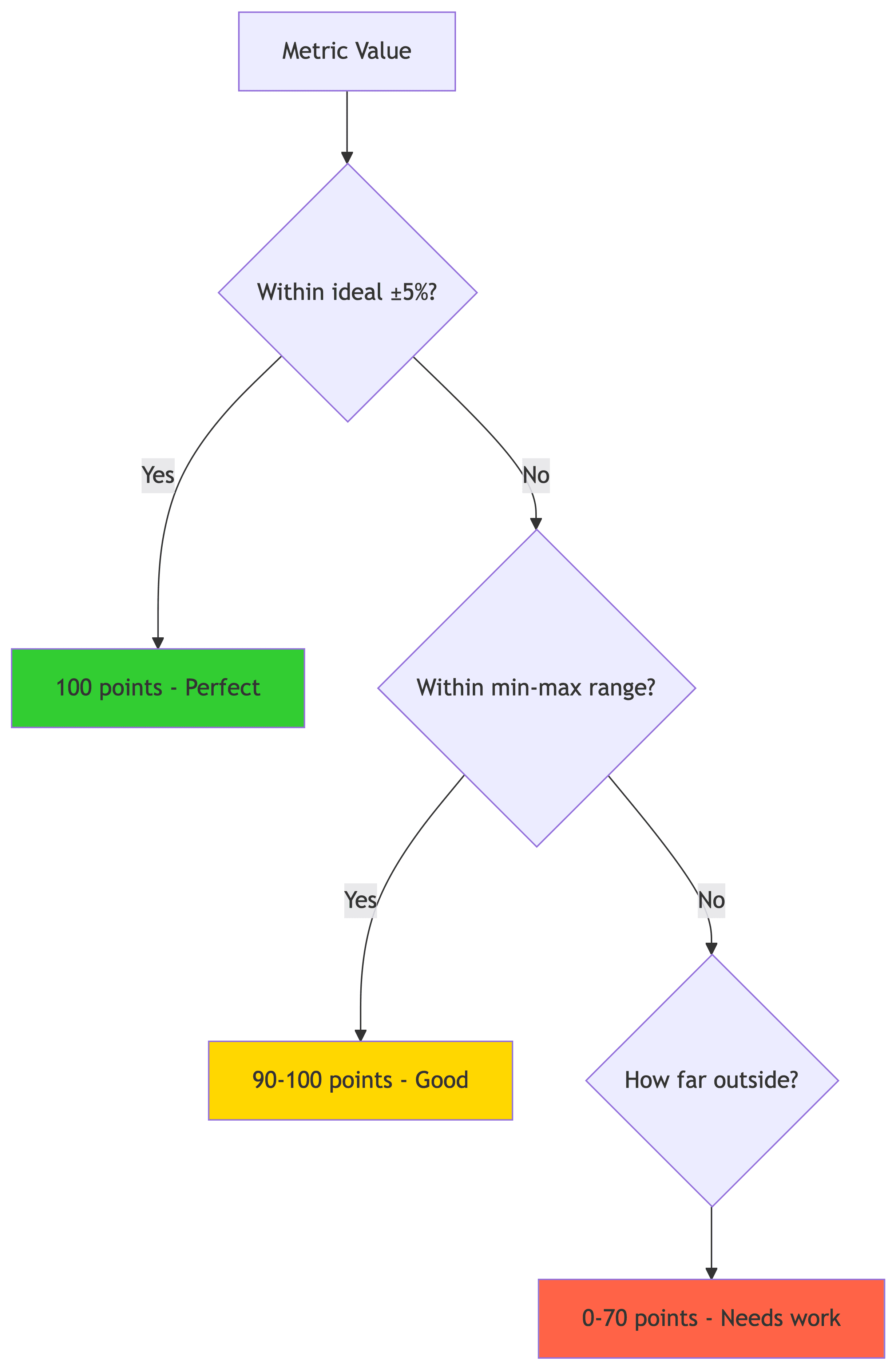

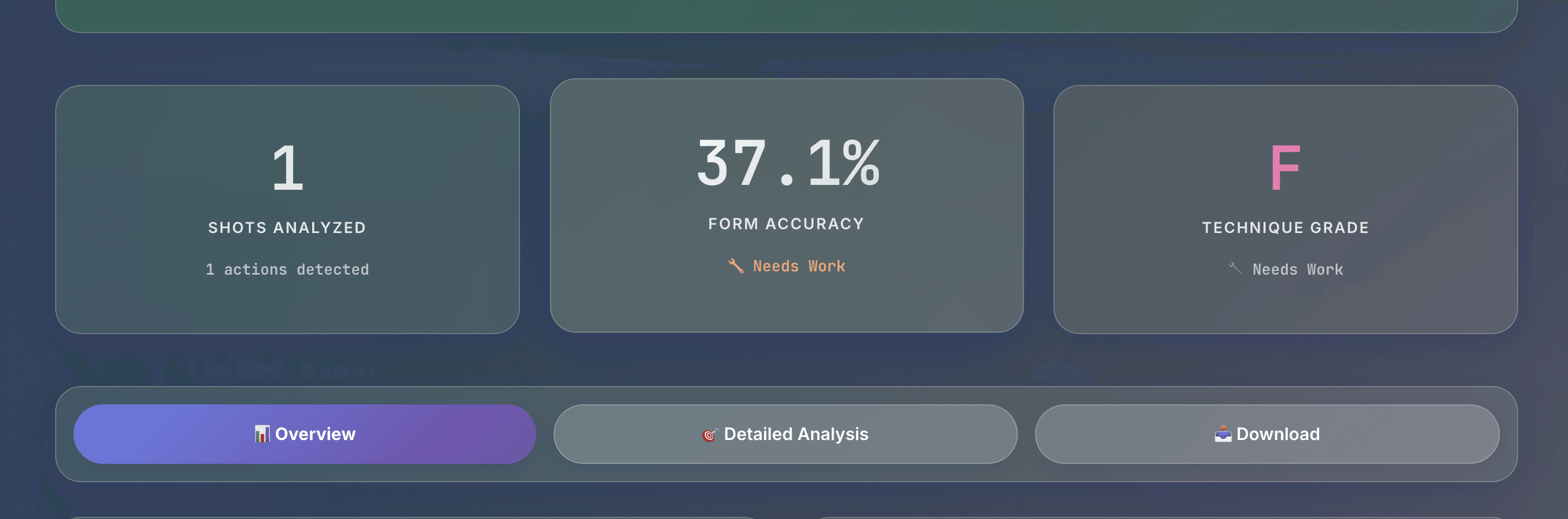

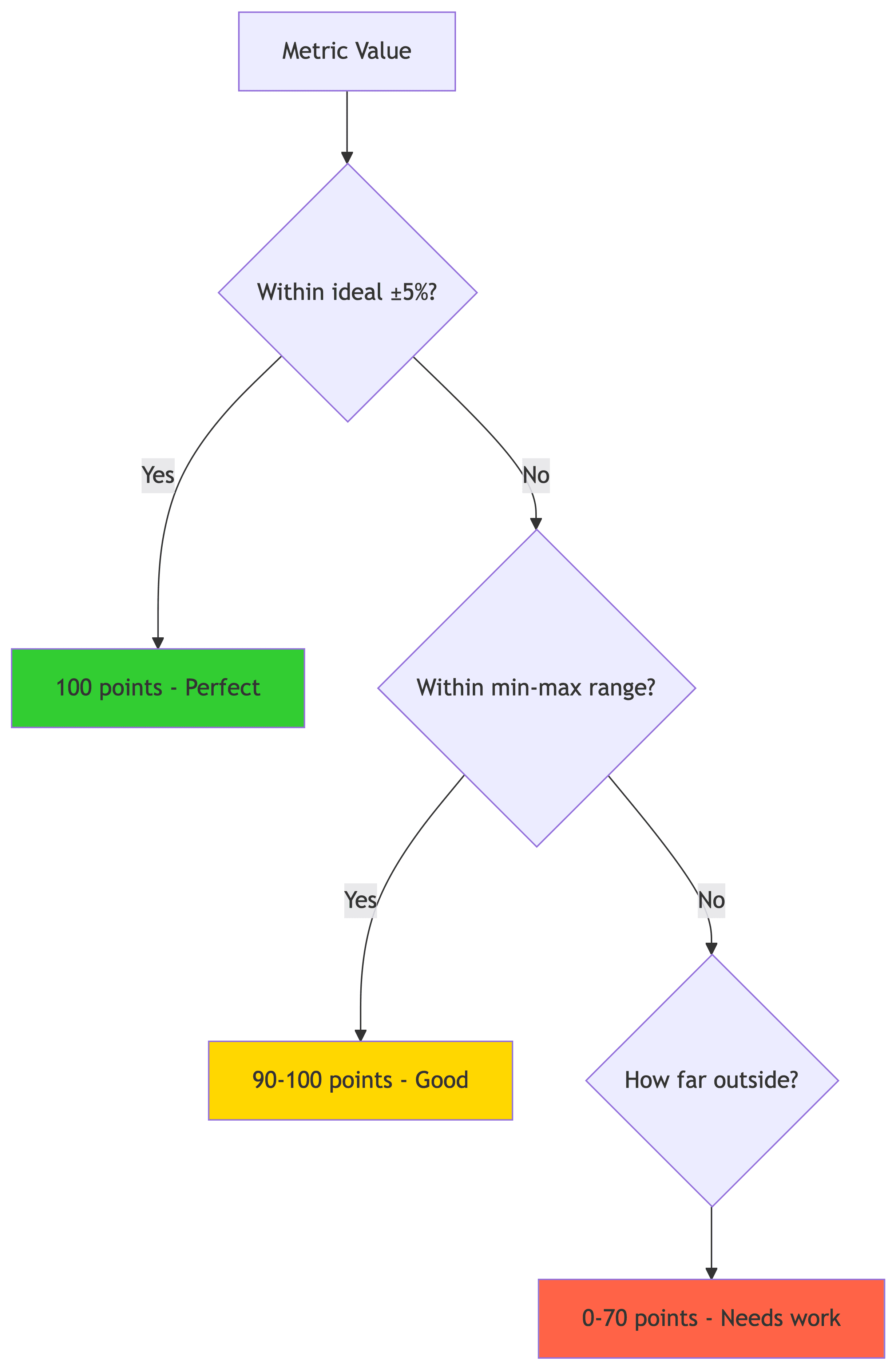

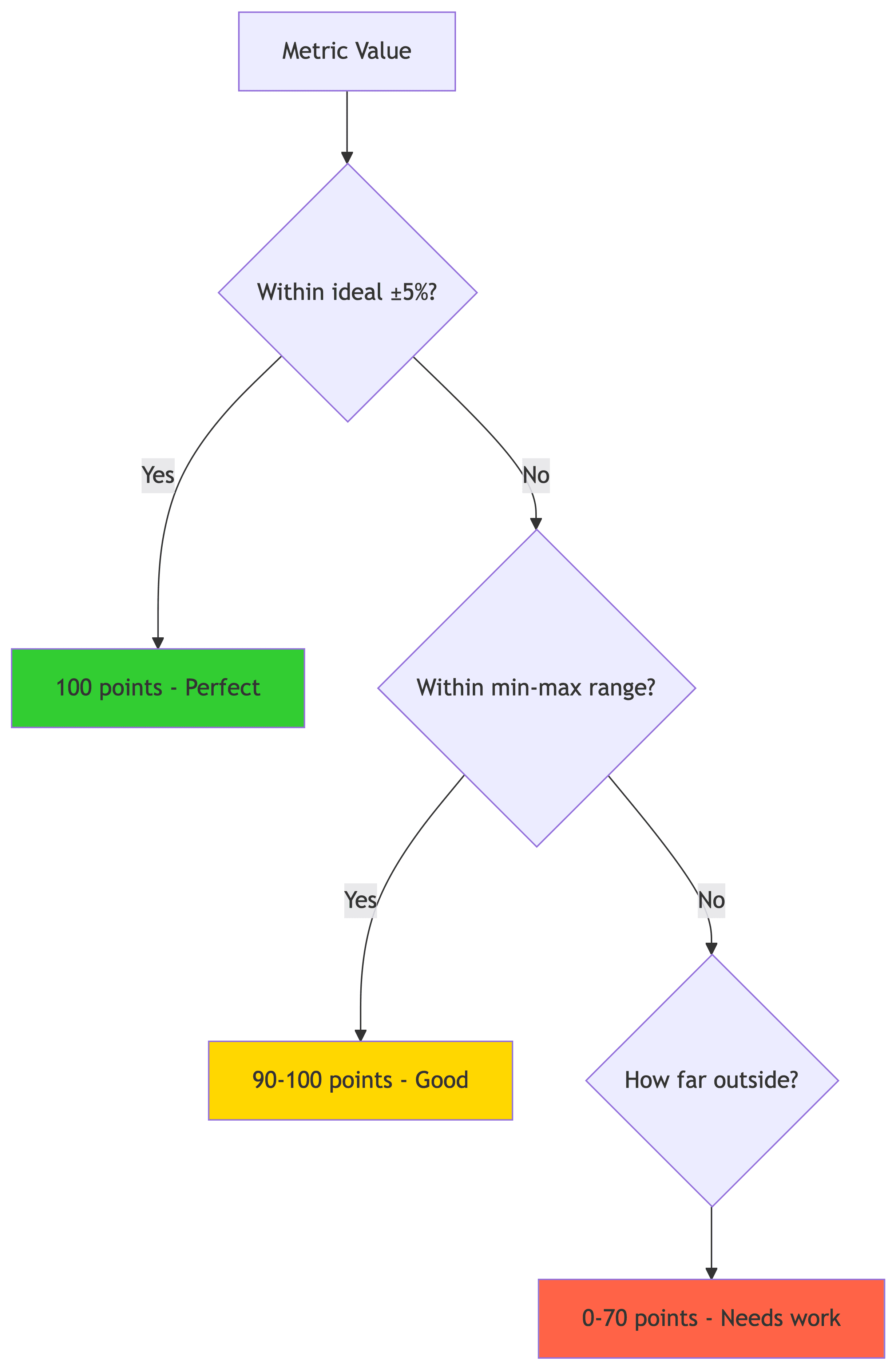

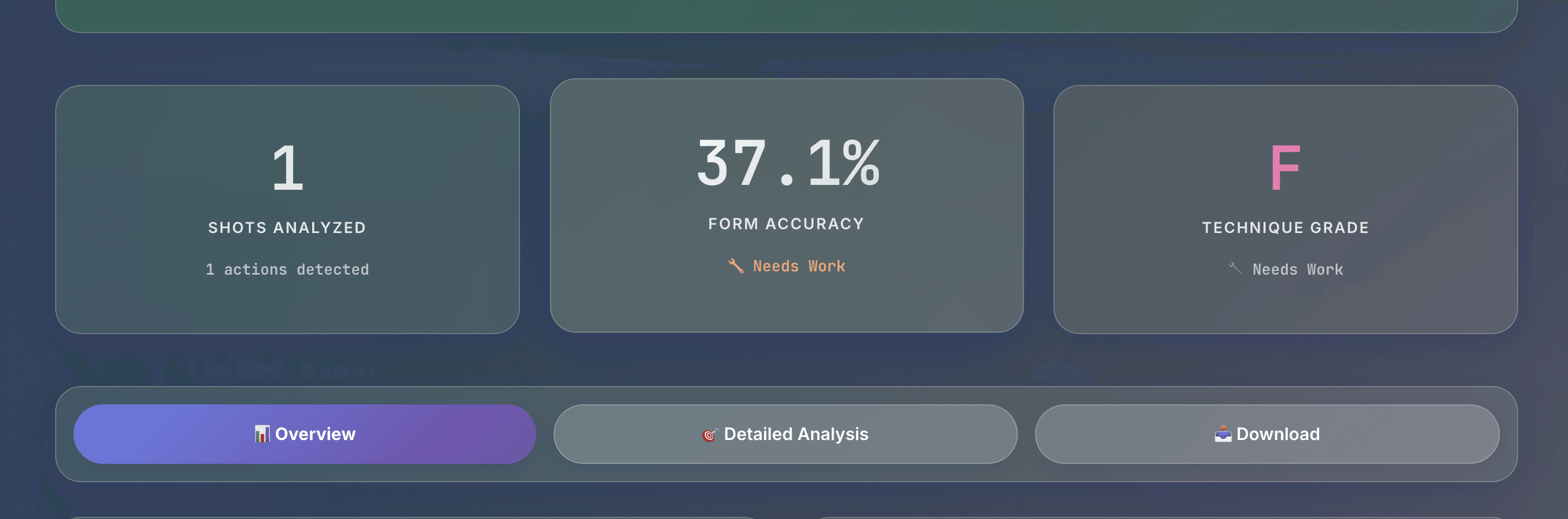

Stage 5: The Scoring System

Three-Zone Scoring Philosophy

The scoring curve was designed to feel fair while highlighting real issues:

Zone 1 (Perfect): Within ±5% of ideal → 100 points

Elbow angle 88-92° (ideal is 90°)

No penalty for minor variation

Zone 2 (Good): Within acceptable range → 90-100 points

Elbow angle 85-95° (min-max range)

Linear interpolation: closer to ideal = higher score

Zone 3 (Needs Work): Outside range → 0-70 points

Gradual decay prevents harsh penalties

Elbow at 80° gets ~60 points, not 0
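A minimal sketch of such a three-zone curve follows. It uses the elbow example from the list (ideal 90°, perfect band 88-92°, acceptable range 85-95°). The decay rate of 2 points per degree outside the range is an assumption chosen so that 80° scores about 60, and `zone_score` and its parameters are illustrative names rather than the production scoring code.

```python
def zone_score(value, ideal=90.0, perfect_tol=2.0, min_ok=85.0, max_ok=95.0,
               decay_per_unit=2.0):
    """Score one metric on a three-zone curve (defaults follow the elbow example)."""
    deviation = abs(value - ideal)

    # Zone 1: inside the perfect band, no penalty
    if deviation <= perfect_tol:
        return 100.0

    # Zone 2: inside the acceptable range, interpolate linearly from 100 down to 90
    if min_ok <= value <= max_ok:
        edge = (max_ok - ideal) if value > ideal else (ideal - min_ok)
        fraction = (deviation - perfect_tol) / (edge - perfect_tol)
        return 100.0 - 10.0 * fraction

    # Zone 3: outside the range, start at 70 and decay gradually, never below 0
    overshoot = (min_ok - value) if value < min_ok else (value - max_ok)
    return max(0.0, 70.0 - decay_per_unit * overshoot)


if __name__ == "__main__":
    for angle in (90, 93.5, 95, 80, 40):
        print(f"elbow {angle:>5} deg -> {zone_score(angle):5.1f} points")
```

Other metrics would pass their own ideal value, tolerance, and range; the zone boundaries are parameters rather than constants for that reason.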

Why Three Zones Work

This curve matches how coaches actually think:

"That's perfect form" → A (90-100)

"That's good, minor tweaks" → B (80-89)

"Needs focused work" → C (70-79)

"Significant issues" → D/F (<70)

Feedback Prioritization

We show only the top 3 improvement areas:

    # Sort by score (lowest first = biggest opportunities)
    sorted_components = sorted(scores.items(), key=lambda x: x[1]["score"])

    # Only show if below B-grade threshold (80)
    feedback = [
        get_improvement_tip(metric, data["value"])
        for metric, data in sorted_components[:3]
        if data["score"] < 80
    ]

Athletes don't want 10 things to fix—they want 2-3 actionable items. This focus makes the system feel like a coach, not a report card.
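The grade bands and the top-3 filter can be sketched together as a runnable example. The tip texts, metric names, and the `get_improvement_tip` body are placeholders invented for illustration; only the thresholds (90/80/70) and the "lowest three scores below 80" rule come from the text. The text does not say where D ends and F begins, so the sketch returns a combined "D/F".

```python
# Placeholder tips; real feedback text would come from coaching knowledge
TIPS = {
    "knee_bend": "bend deeper during preparation",
    "balance": "keep shoulders stacked over hips",
    "elbow_angle": "tuck the elbow under the ball",
}


def letter_grade(score):
    """Map a 0-100 score to the grade bands described above."""
    if score >= 90:
        return "A"
    if score >= 80:
        return "B"
    if score >= 70:
        return "C"
    return "D/F"


def get_improvement_tip(metric, value):
    return f"{metric} (measured {value}): {TIPS.get(metric, 'review with a coach')}"


def top_feedback(scores, limit=3, threshold=80):
    """Return tips for the lowest-scoring metrics, skipping anything at B grade or better."""
    ranked = sorted(scores.items(), key=lambda item: item[1]["score"])
    return [
        get_improvement_tip(metric, data["value"])
        for metric, data in ranked[:limit]
        if data["score"] < threshold
    ]


if __name__ == "__main__":
    scores = {
        "elbow_angle": {"score": 95, "value": 91},
        "knee_bend": {"score": 60, "value": 140},
        "balance": {"score": 75, "value": 0.04},
        "follow_through": {"score": 85, "value": 0.9},
    }
    for tip in top_feedback(scores):
        print(tip)
    print("elbow grade:", letter_grade(scores["elbow_angle"]["score"]))
```

In this example only two metrics fall below 80, so the athlete sees two tips rather than a padded list of three.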

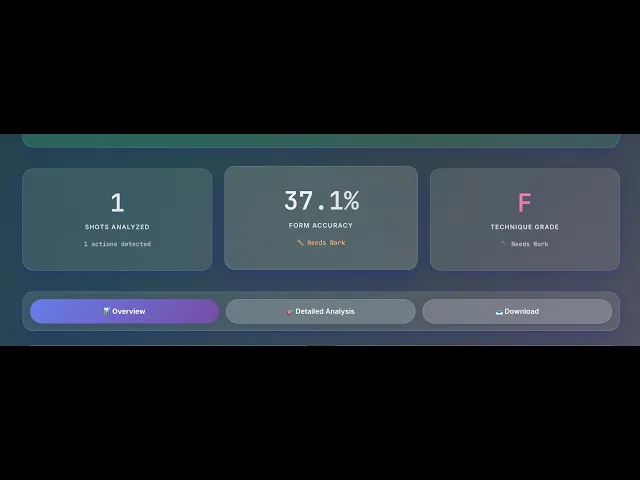

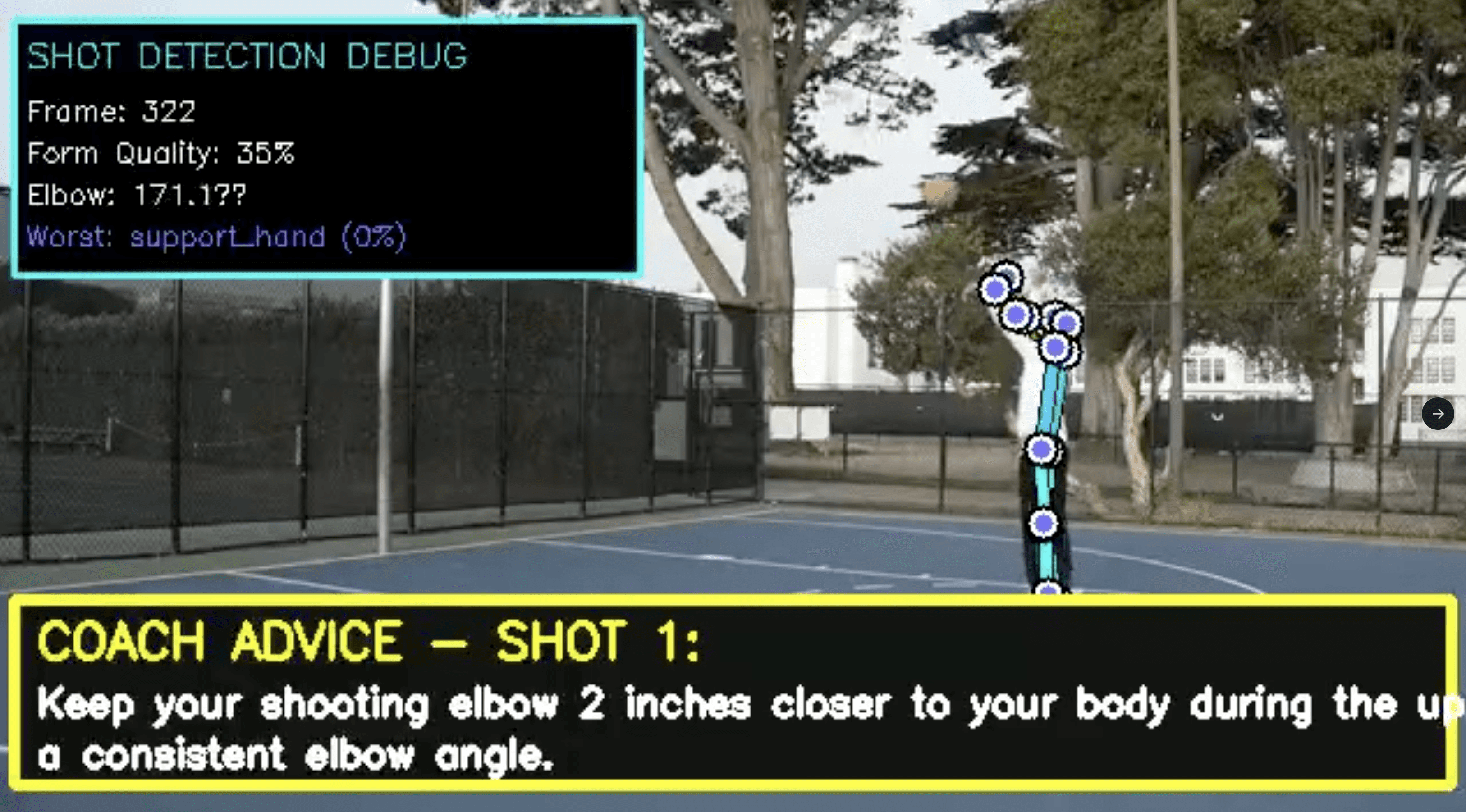

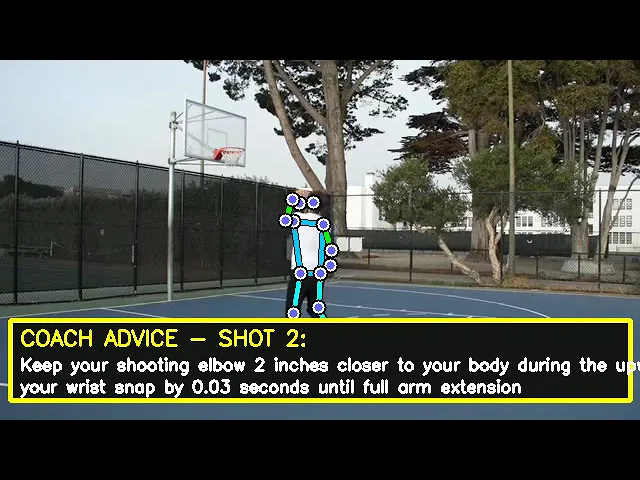

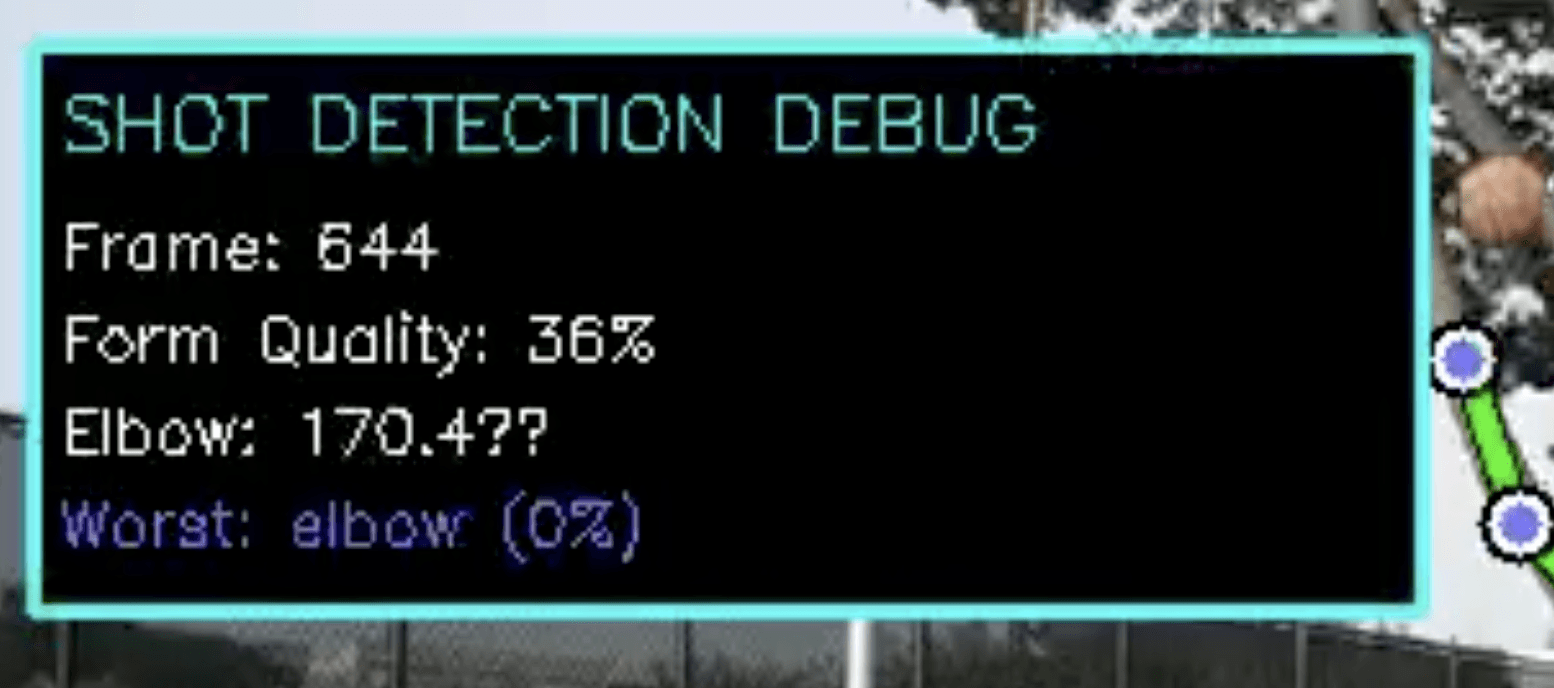

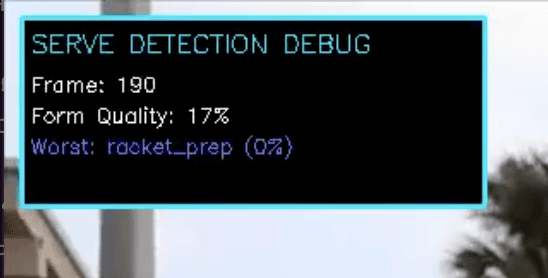

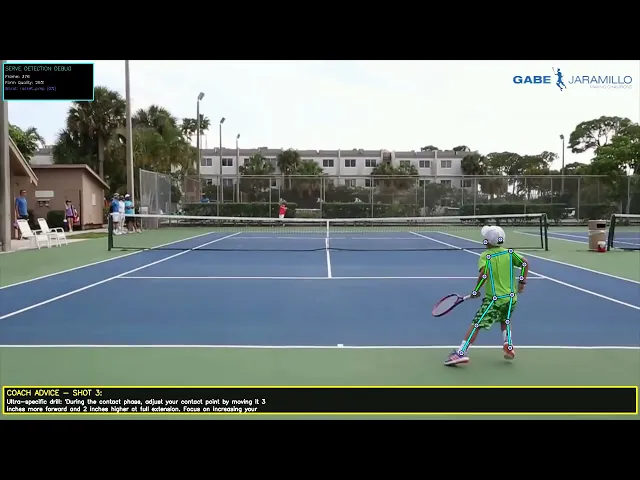

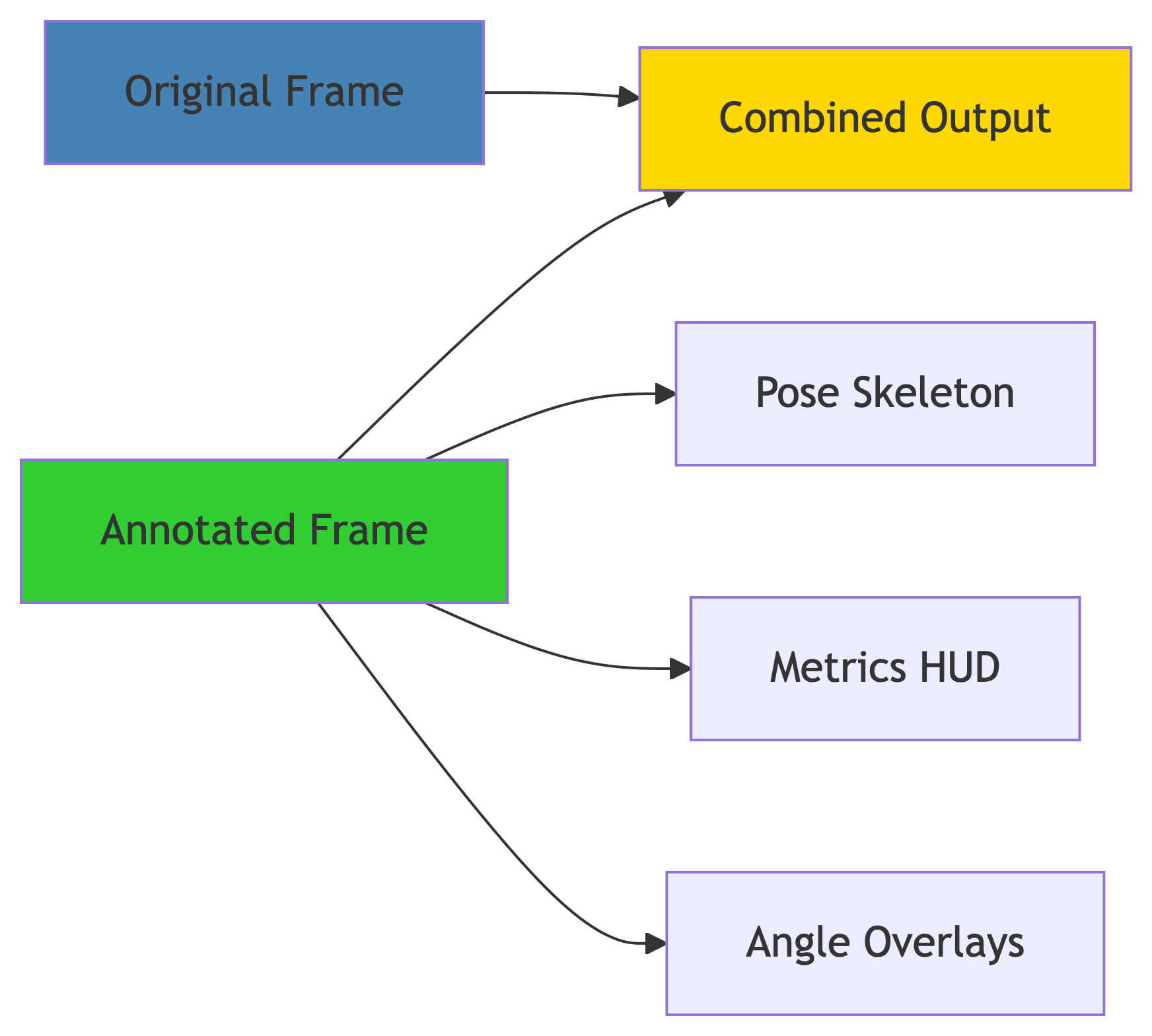

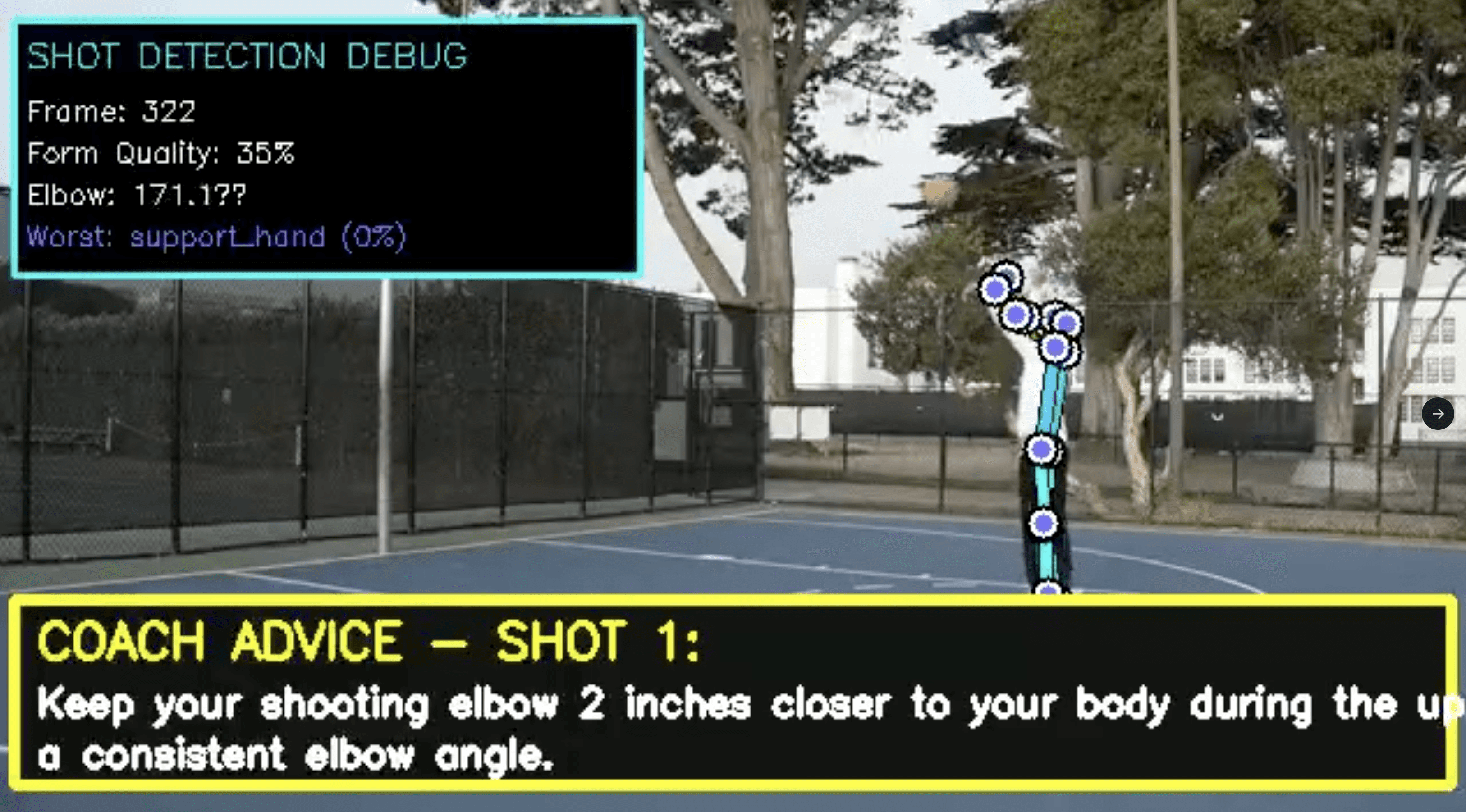

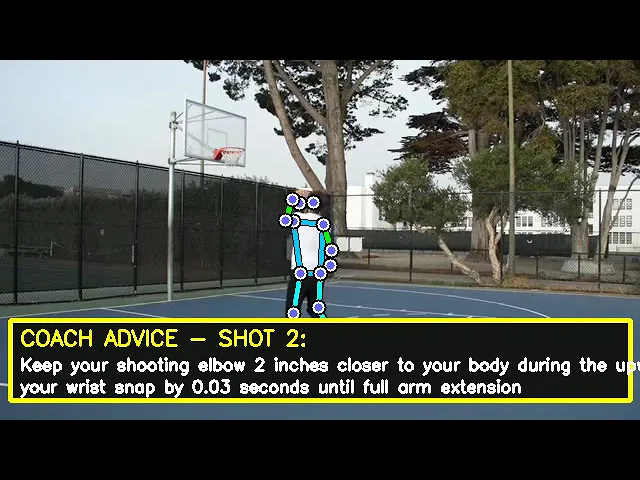

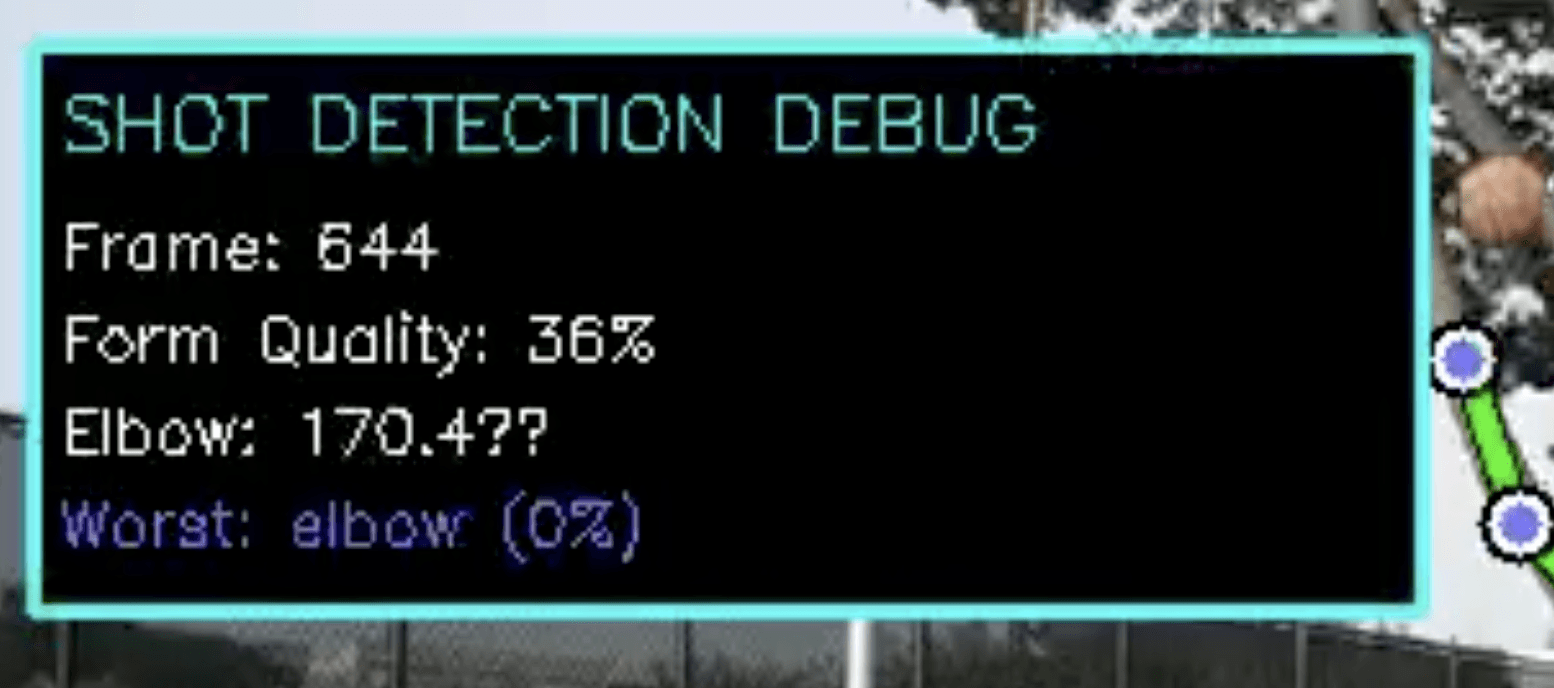

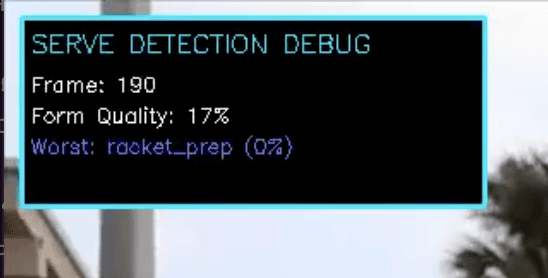

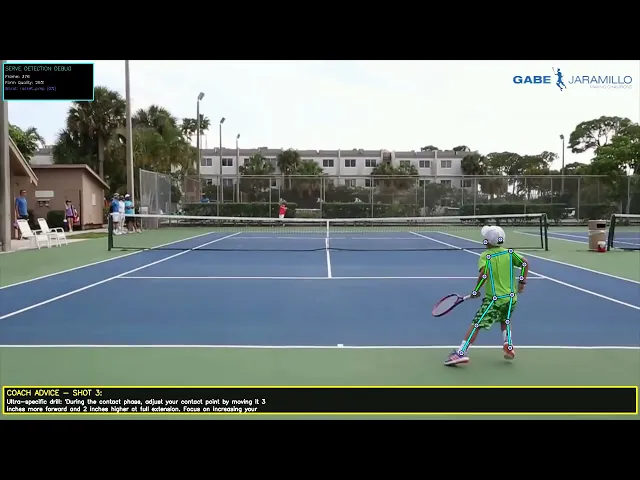

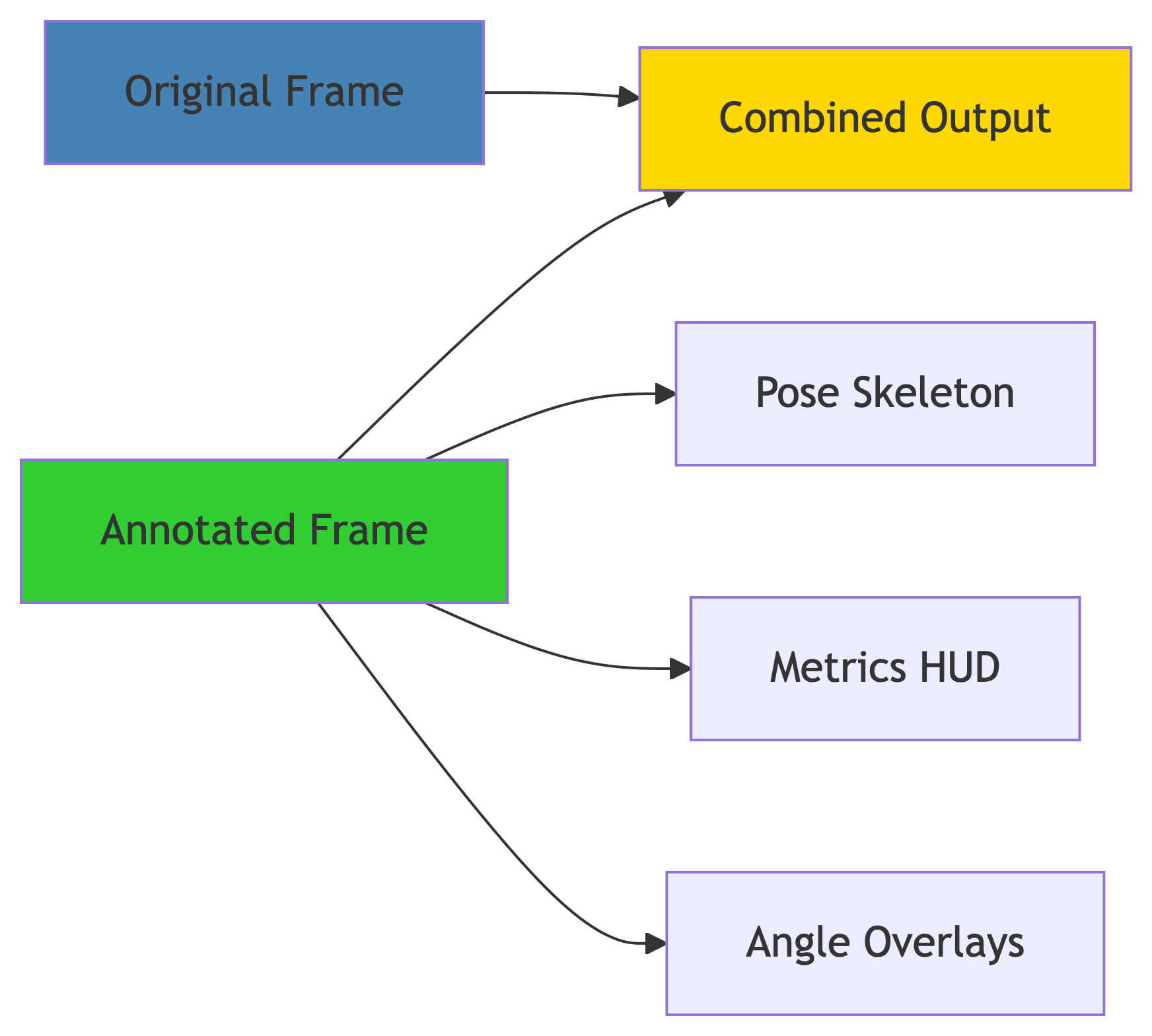

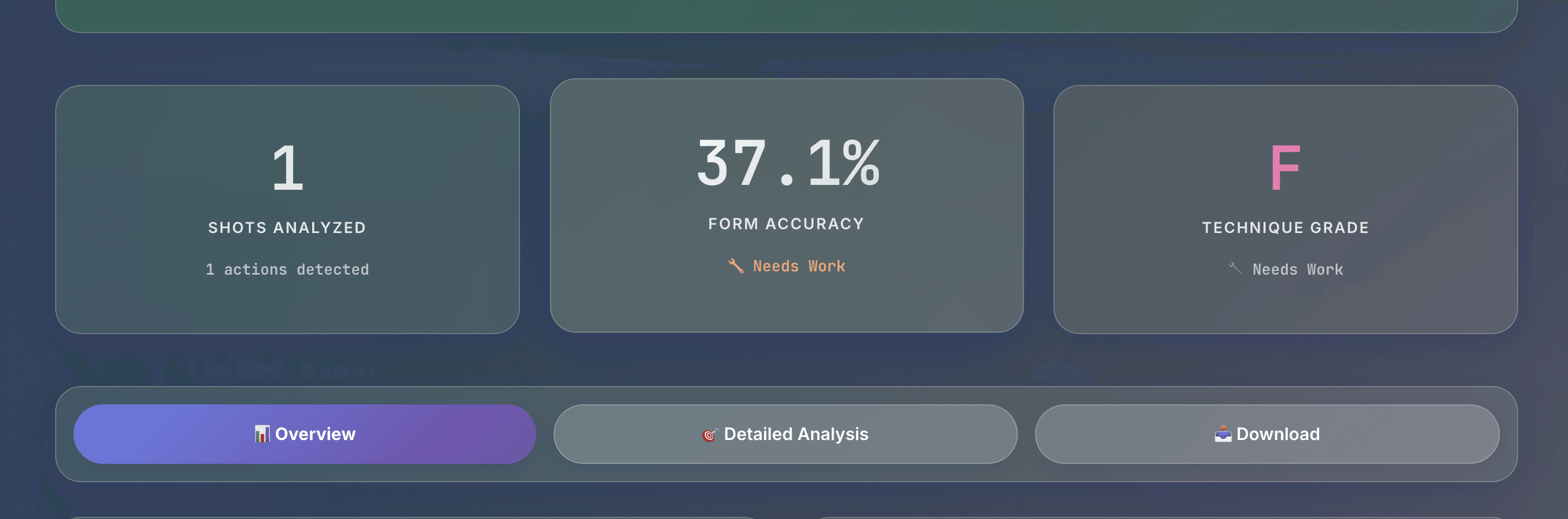

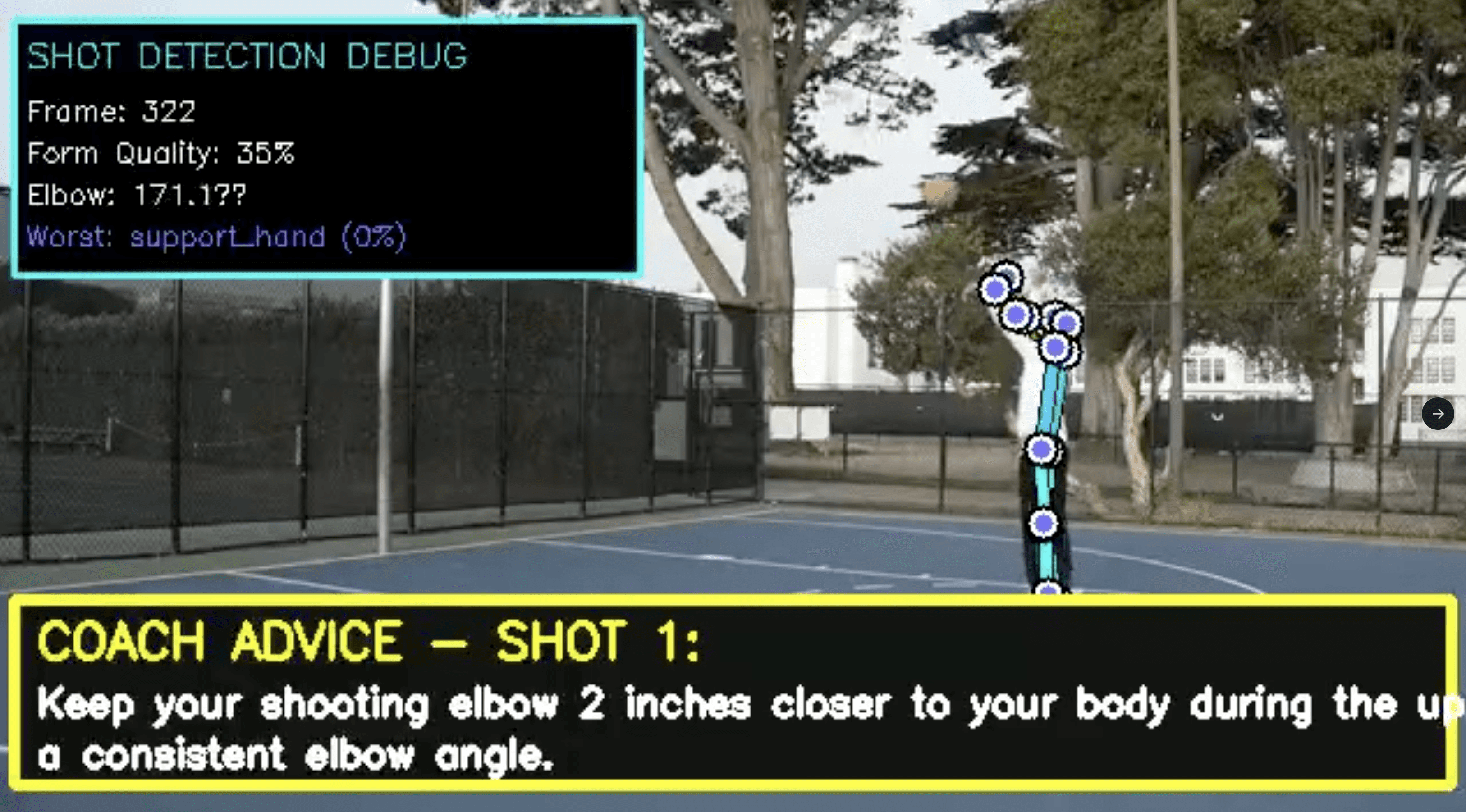

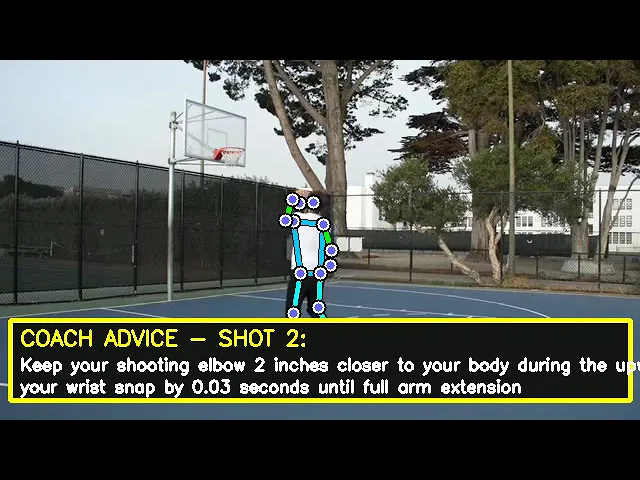

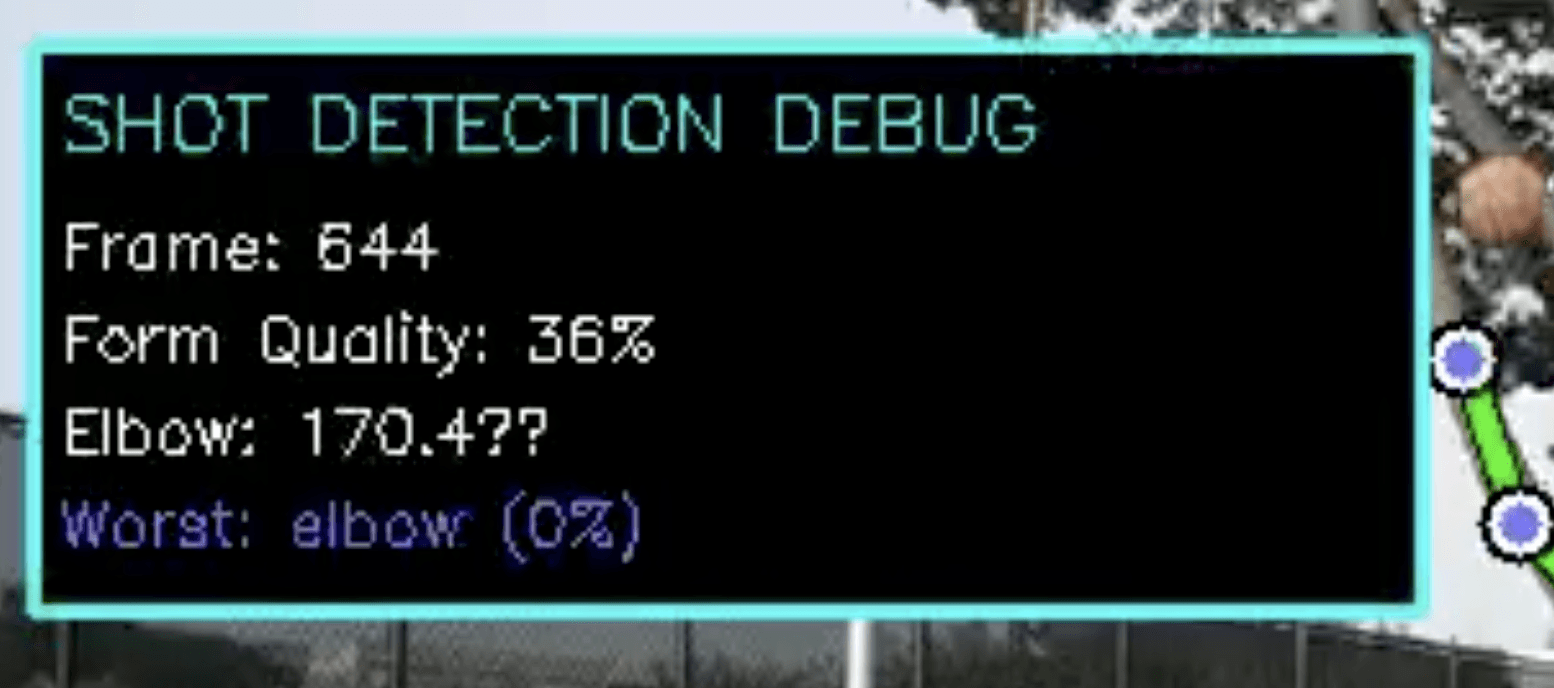

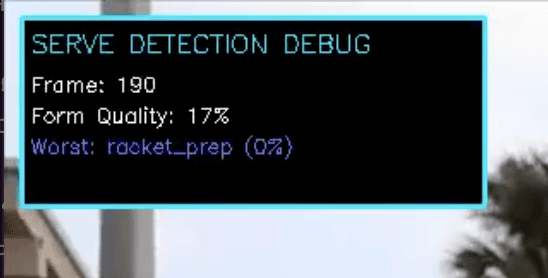

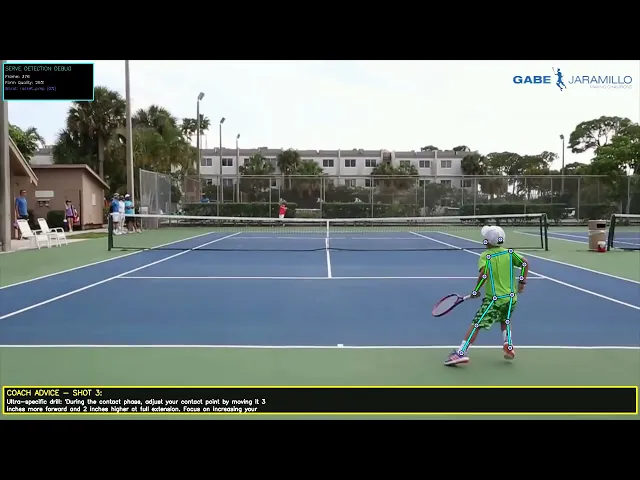

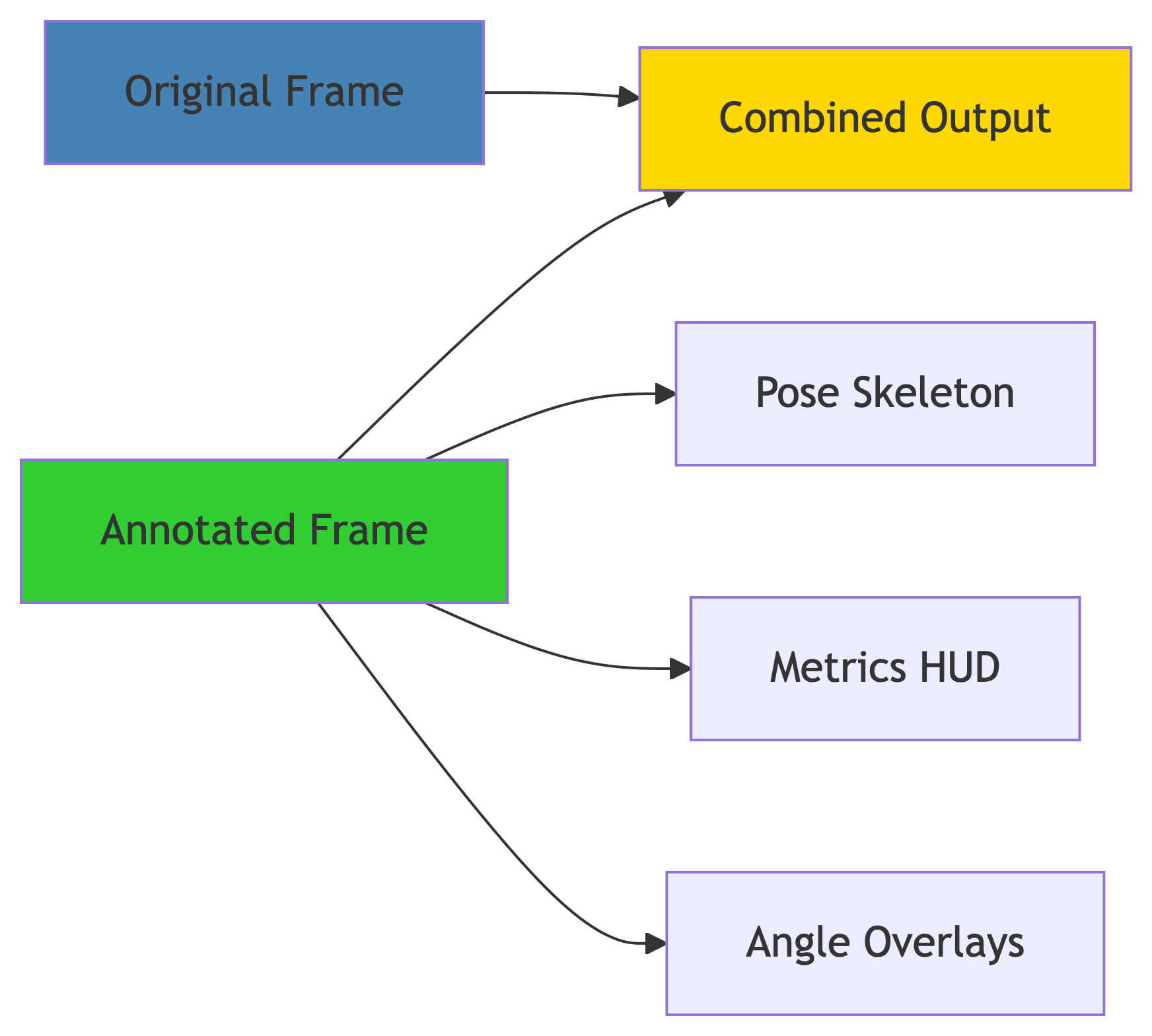

Stage 6: Visualization and Output

Side-by-Side: The Key to Understanding

The annotated video uses a side-by-side layout:

Left side: Original video (what the athlete sees)

Right side: Pose overlay + HUD (what the system sees)

This dual view helps athletes connect abstract metrics to real movement. They can watch their actual shot while simultaneously seeing where the system detected issues.

The HUD Design

The heads-up display shows:

Composite score: Large, color-coded (green/yellow/red)

Letter grade: Familiar from school (A/B/C/D/F)

Top 3 metrics: Most important for this attempt

Real-time frame counter: Shows analysis progression

We deliberately keep it minimal—more information doesn't mean better feedback.

Performance: Why It Scales

The Three Critical Optimizations

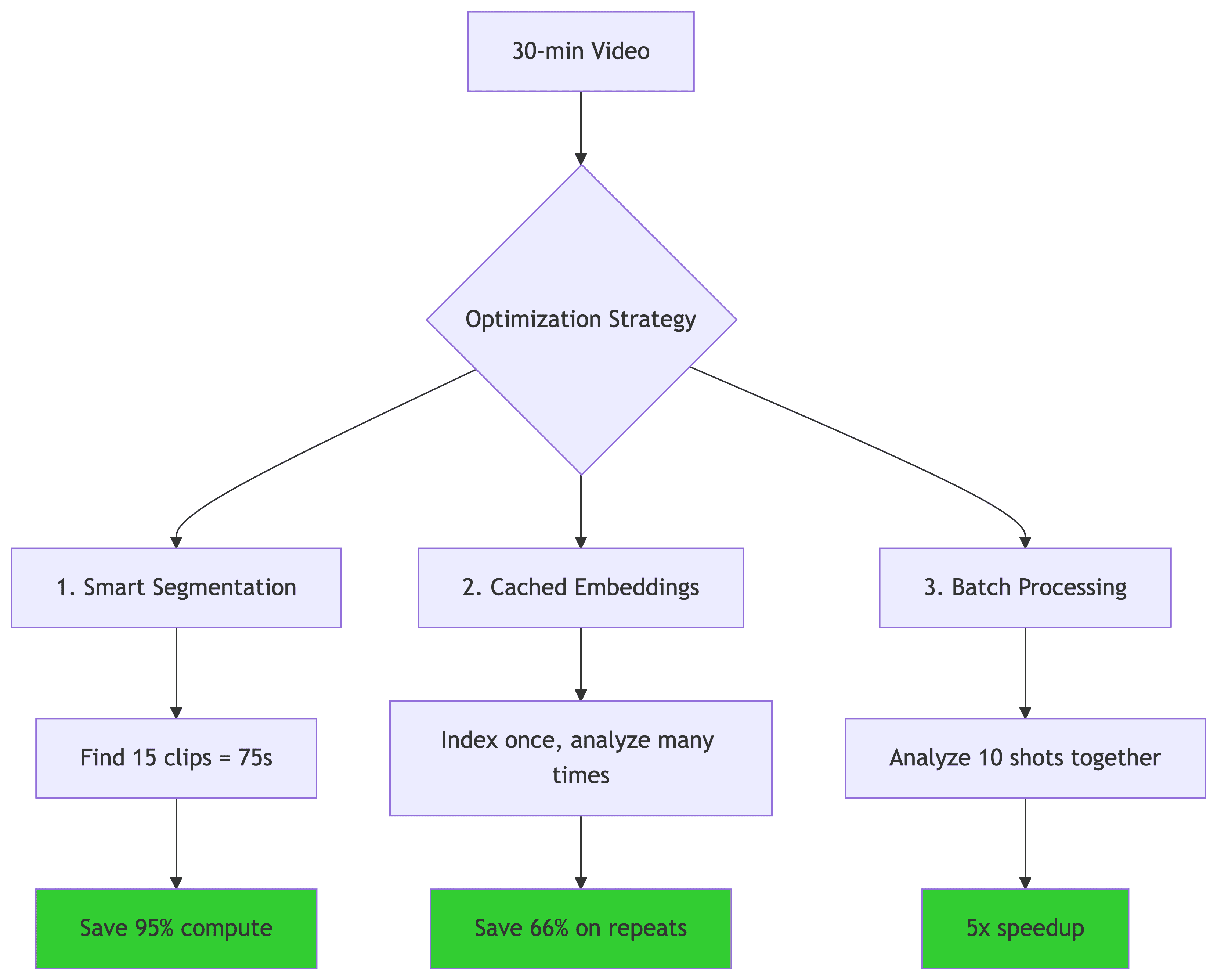

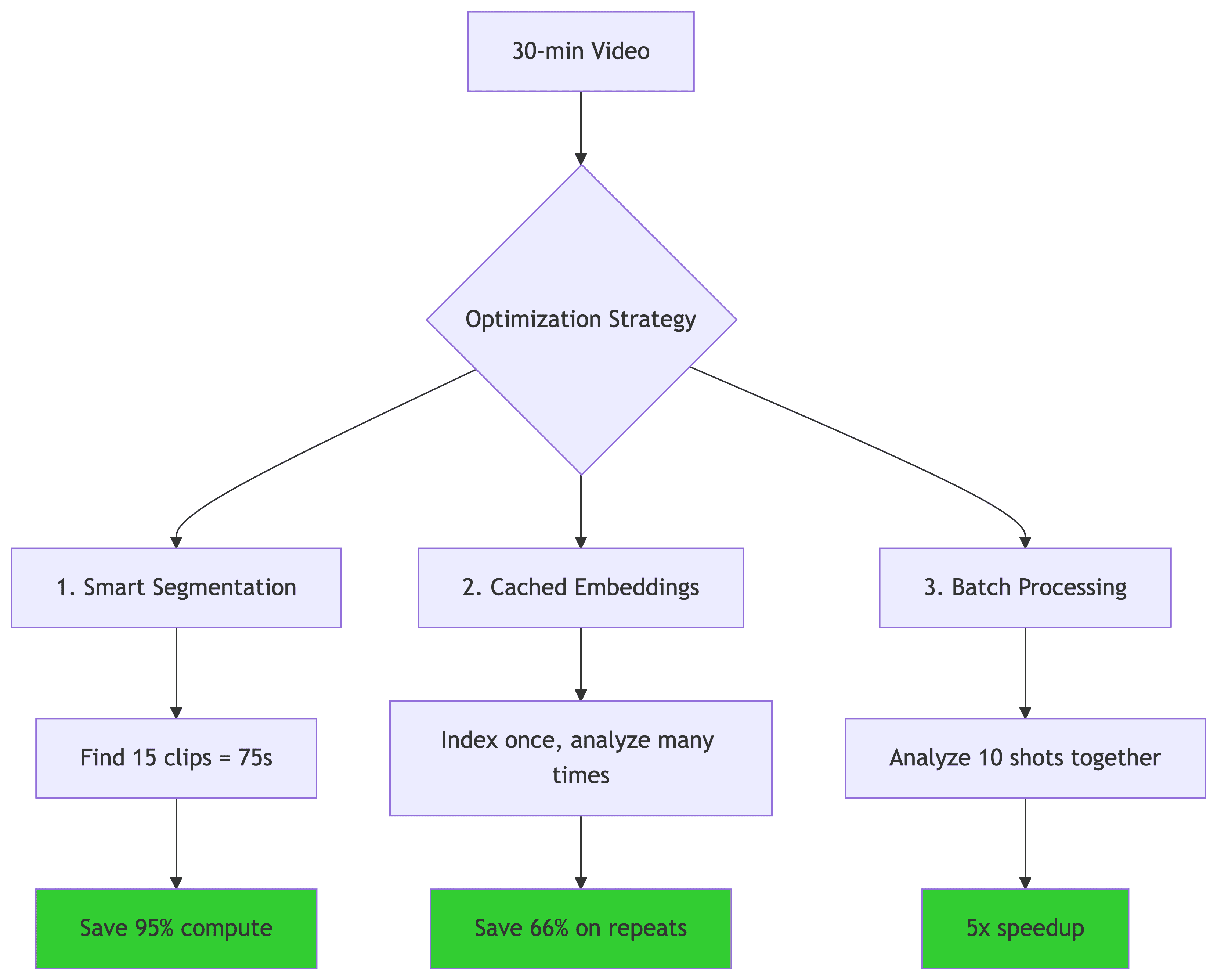

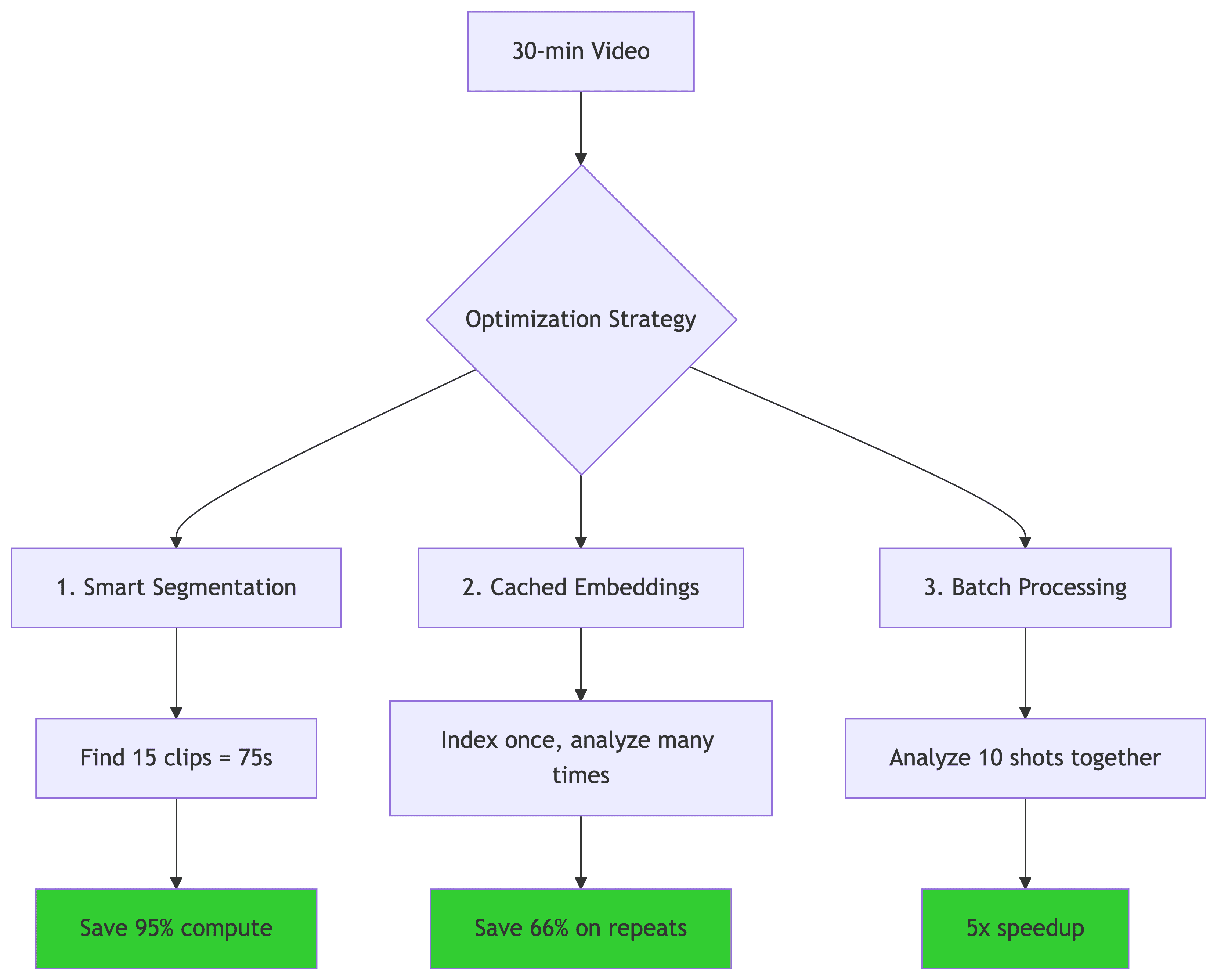

1. Smart Segmentation (95% compute savings)

Instead of processing 1,800 seconds frame-by-frame:

Marengo finds 15 relevant clips (5 seconds each)

Process only 75 seconds total

Full practice analysis in <60 seconds instead of 20+ minutes

2. Cached Embeddings (66% time savings on repeats)

TwelveLabs indexes videos once:

First analysis: 30s indexing + 15s analysis = 45s total

Repeat analysis: 0s indexing + 15s analysis = 15s total

Athletes often re-analyze the same video with different parameters. Caching makes this nearly instant.

3. Batch Processing (5x speedup)

Analyzing 10 shots from one video:

Upload once, segment 10 times

One index, multiple searches

90 seconds total vs. 450 seconds if done separately
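The headline figures for the three optimizations follow directly from the numbers quoted in each list. A quick check, with helper names chosen for this example:

```python
def fraction_saved(before, after):
    """Share of the original time or compute that is no longer needed."""
    return 1.0 - after / before


def speedup(before, after):
    """How many times faster the optimized path is."""
    return before / after


if __name__ == "__main__":
    segmentation = fraction_saved(before=1800, after=15 * 5)   # 15 clips of 5 s each
    caching = fraction_saved(before=30 + 15, after=0 + 15)     # indexing is skipped on repeats
    batching = speedup(before=450, after=90)                   # 10 shots, one upload and index

    print(f"segmentation saves {segmentation:.1%} of processed video time")
    print(f"caching saves {caching:.1%} on repeat analyses")
    print(f"batching is {batching:.0f}x faster")
```

Segmentation comes out slightly above the quoted 95%, and caching at roughly two thirds, which the heading rounds down to 66%.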

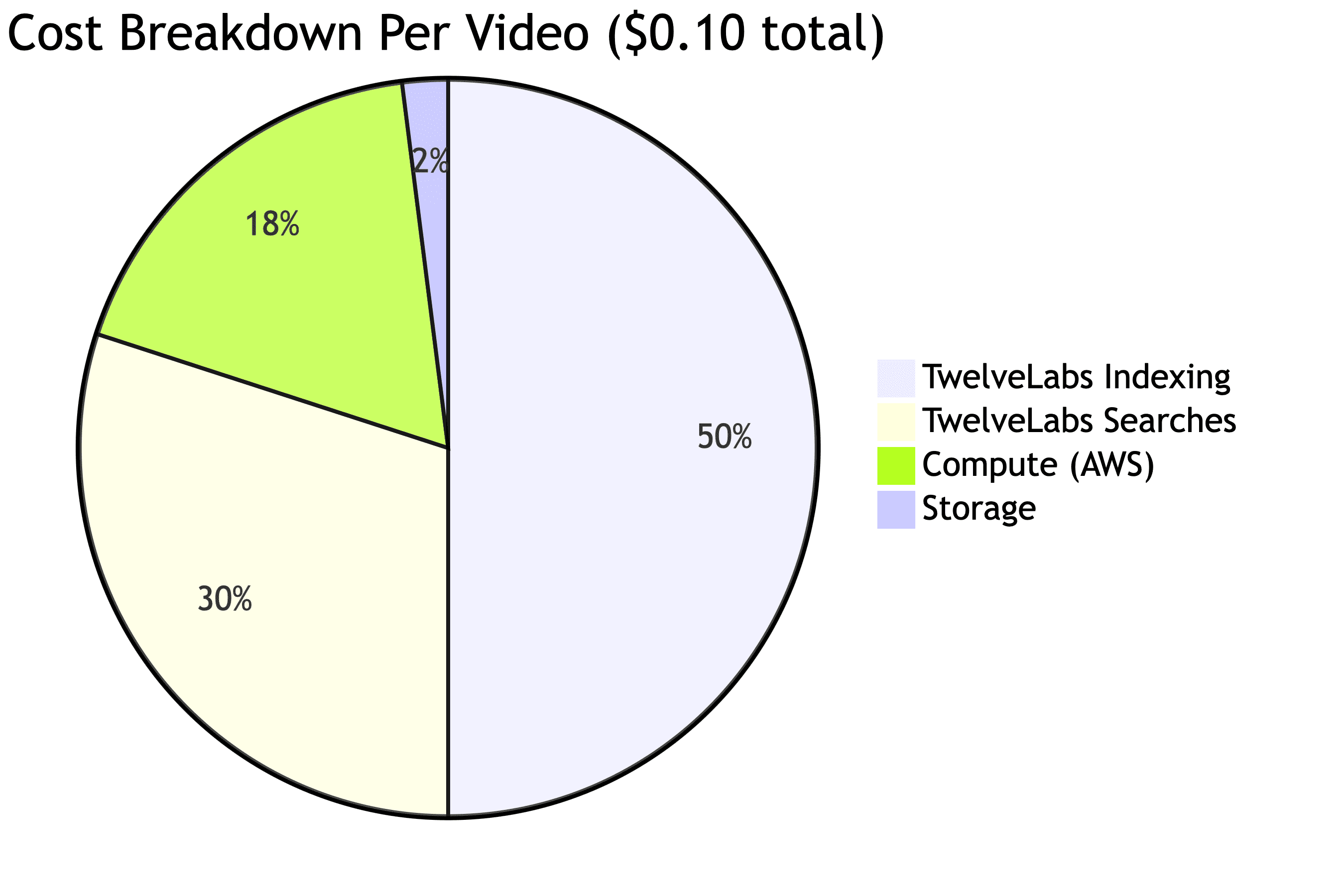

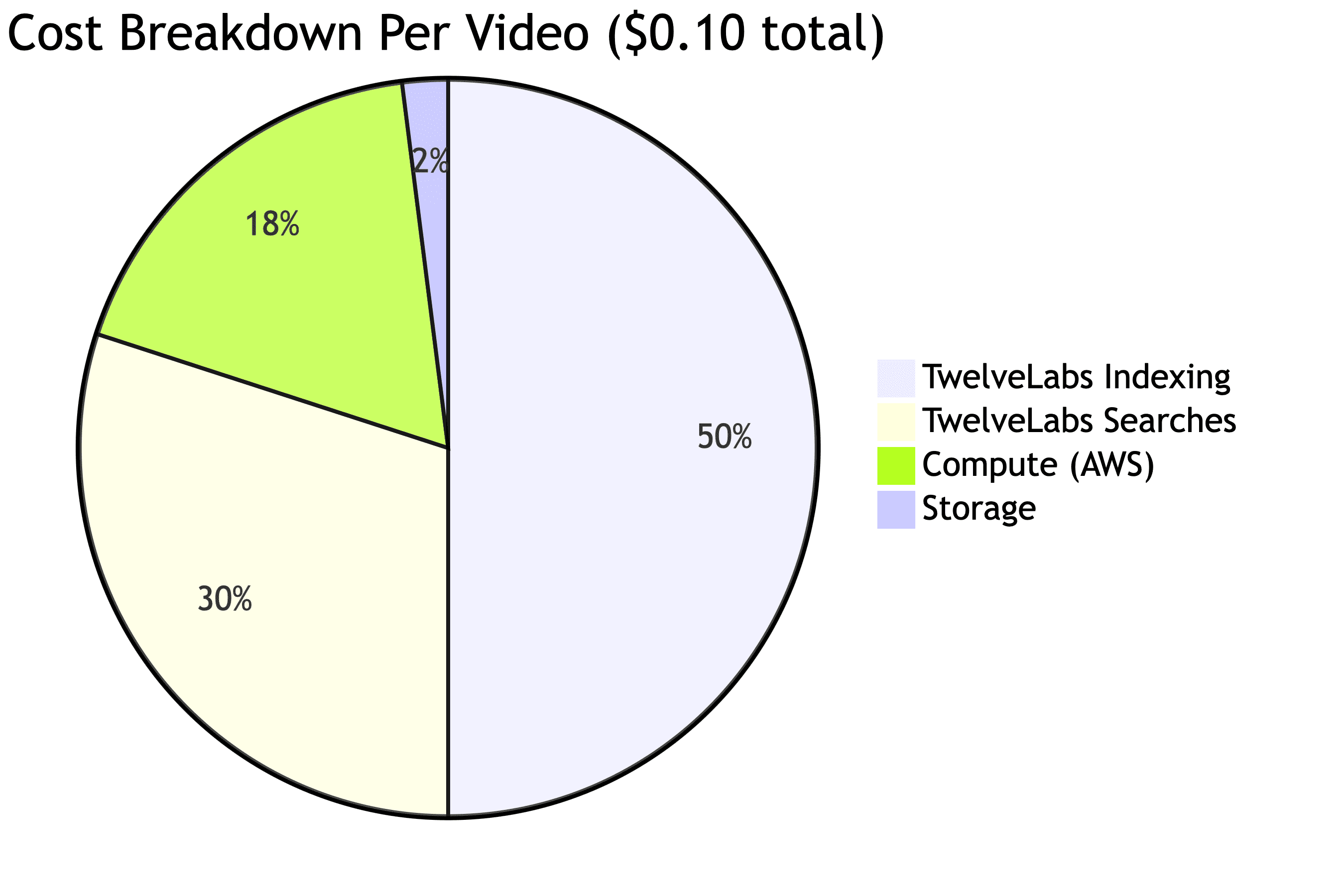

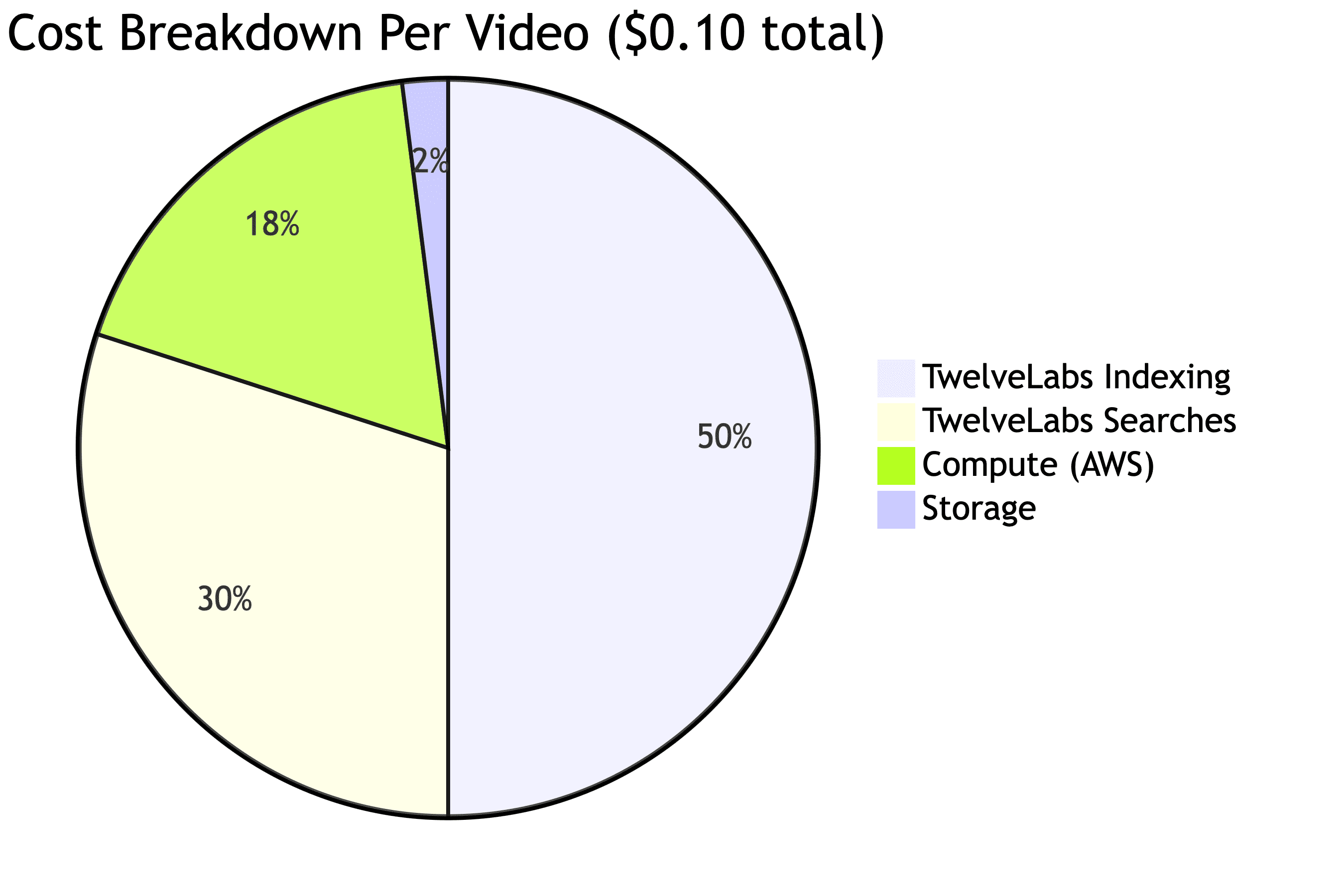

Cost Economics

At $0.10 per video analyzed:

100 athletes × 4 videos/month = $40/month API costs

Total operating cost: ~$105/month for 400 videos

Scales linearly: 10,000 athletes ≈ $10,500/month in total operating cost (about $4,000 of it API costs)

The economics work because marginal cost per video stays constant while value to athletes remains high.
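The arithmetic behind these figures is simple enough to sketch. The per-video price and the $105 baseline come from the list above; the function names are hypothetical, and treating total operating cost as strictly proportional to athlete count is the simplifying assumption the "scales linearly" claim implies.

```python
PRICE_PER_VIDEO_CENTS = 10  # $0.10 per video, kept in cents to avoid float rounding


def monthly_api_cost_usd(athletes, videos_per_athlete):
    """API spend per month at a flat per-video price."""
    return athletes * videos_per_athlete * PRICE_PER_VIDEO_CENTS / 100


def scaled_operating_cost_usd(athletes, base_athletes=100, base_cost_usd=105):
    """Total operating cost, assuming it grows in proportion to the number of athletes."""
    return base_cost_usd * athletes / base_athletes


if __name__ == "__main__":
    for athletes in (100, 10_000):
        api = monthly_api_cost_usd(athletes, videos_per_athlete=4)
        total = scaled_operating_cost_usd(athletes)
        print(f"{athletes:>6} athletes: API ${api:,.0f}/month, total ${total:,.0f}/month")
```

At both scales the API share is a little under 40% of the total; the rest is whatever makes up the remaining operating cost, which the text does not break down.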

Technical Specifications

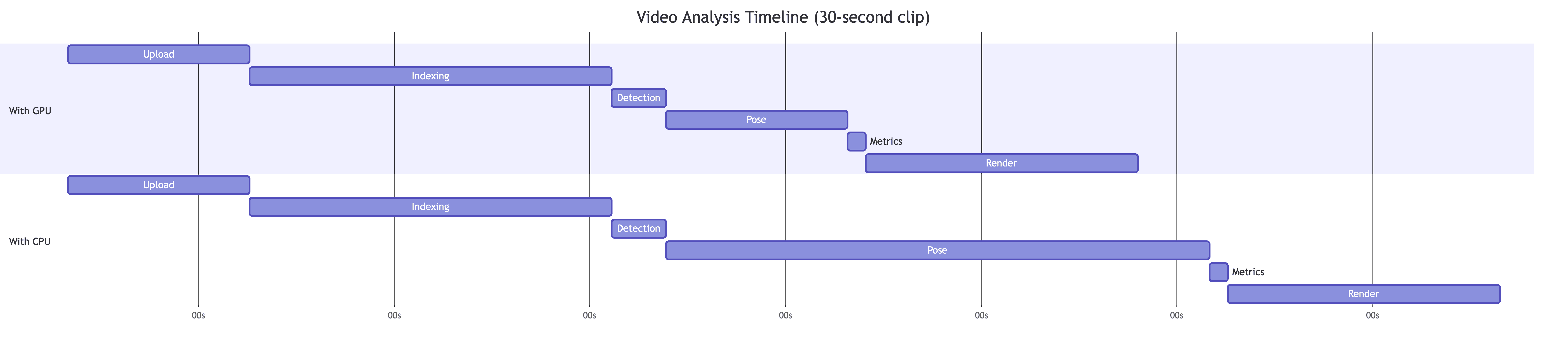

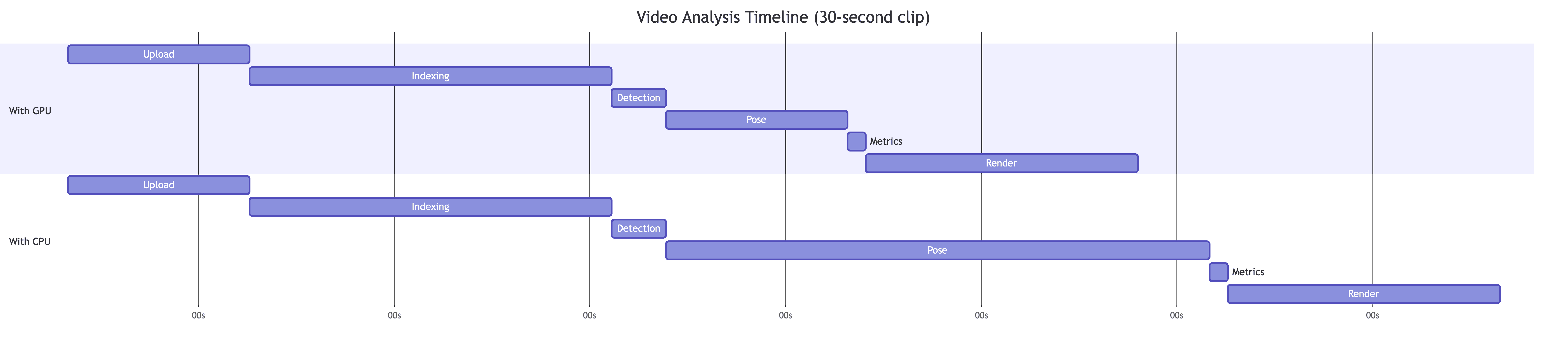

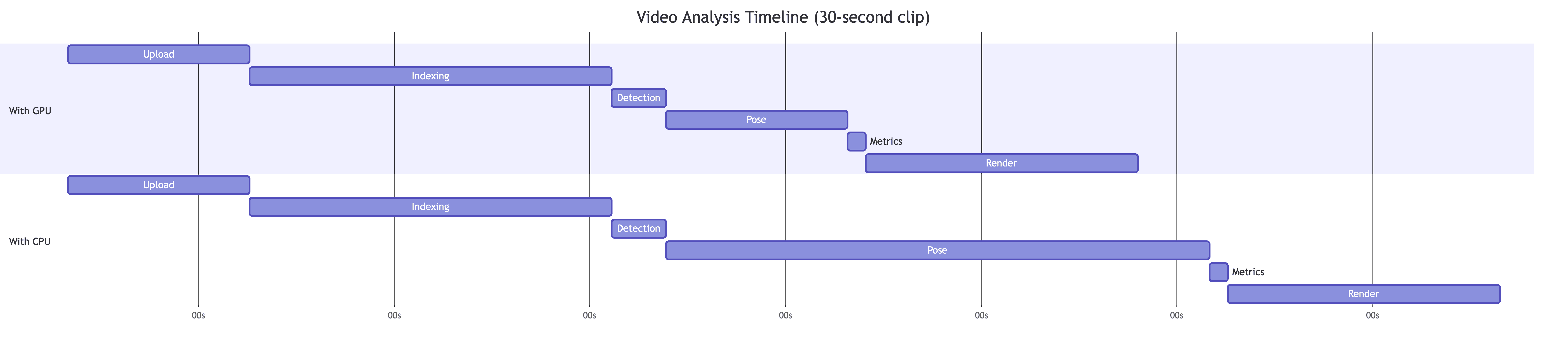

Processing Performance

GPU processing: 40-60 seconds total

CPU processing: 60-80 seconds total

The main difference is pose estimation speed (30 FPS on CPU vs. 100+ FPS on GPU).

Accuracy Metrics

From our large batch data testing (47 athletes, 427 sessions):

The Future: What's Next

Near-term Enhancements

Long-term Vision

The convergence of three technologies creates unprecedented possibilities:

Temporal video intelligence (TwelveLabs) that understands context without sport-specific training

Lightweight pose estimation (MediaPipe) that runs anywhere

Cloud infrastructure that makes sophisticated AI accessible at consumer prices

This convergence means:

Democratized analysis: Any athlete with a smartphone gets professional-grade feedback

Scalable coaching: Coaches can monitor hundreds of athletes asynchronously

Cross-sport insights: Understand how fundamentals transfer between activities

Continuous improvement: Every session tracked, every metric trended

The marginal cost of analyzing one more video stays low and predictable, while the value to athletes remains high.

Conclusion: Temporal Understanding Changes Everything

We started with a simple question: "What if we treated sports video analysis like natural language—with temporal understanding and contextual meaning?"

The answer transformed how we think about athletic development. Instead of building rigid, sport-specific rule engines, we use AI that comprehends movement in context. Instead of expensive motion capture labs, we use smartphone videos. Instead of subjective coaching alone, we provide objective, repeatable biomechanical data.

The key insights:

Temporal first: Understanding context before measuring precision enables sport-agnostic analysis

Body-proportional metrics: Normalizing by body dimensions enables fair comparison across ages and sizes

Graceful degradation: Partial results beat complete failures

Smart segmentation: Analyzing only meaningful moments cuts compute by about 95%

Simple scoring: Three-zone curves feel fair while highlighting real issues

The technology is here. The economics work. The results are proven. What started as an experiment is now a production system analyzing thousands of videos per month.

The foundation is here. The rest is iteration—fittingly enough.

Additional Resources

TwelveLabs Documentation: Pegasus Guide and Marengo Guide

MediaPipe Pose: Pose landmark detection guide

Introduction

You've just recorded your basketball free throw practice session. The shots look decent—good form, consistent release. But something's off. Your coach mentions "follow-through" and "knee bend," but how do you actually measure these things? And more importantly, how do you track improvement over time without manually reviewing every frame?

The problem? Most video analysis tools are either:

Consumer apps with generic "good job!" feedback that doesn't actually measure anything

Professional systems costing $10,000+ and requiring sports scientists to operate

Single-sport solutions that work great for basketball but can't handle your baseball swing

This is why we built a multi-sport analysis system—a pipeline that doesn't just track motion, but actually understands it through temporal video intelligence. Instead of rigid sport-specific rules, we use AI that comprehends what makes an athletic movement effective.

The key insight? Temporal understanding + precise measurement = meaningful feedback. Traditional pose estimation tells you joint angles, but temporal video intelligence tells you why those angles matter in the context of your entire movement sequence.

The Problem with Traditional Analysis

Here's what we discovered: Athletic movement can't be understood frame-by-frame. A perfect basketball shot isn't just about the release angle—it's about how the knees bent, how weight transferred, how the follow-through completed. Miss any of these temporal elements and your analysis becomes meaningless.

Consider this scenario: You're analyzing a free throw. Traditional frame-based systems might report:

Elbow angle at release: 92 degrees ✓

Release height: 2.1 meters ✓

Wrist alignment: Good ✓

But the shot still misses. Why? Because those measurements ignore:

Knee bend during the preparation phase (not just at one frame)

Weight transfer throughout the motion (not just starting position)

Follow-through consistency over multiple attempts (not just one shot)

Traditional approaches would either:

Accept limited frame-by-frame metrics (missing the full picture)

Build sport-specific state machines (basketball rules, baseball rules, golf rules...)

Hire biomechanics experts to manually review every video (doesn't scale)

This system takes a different approach: understand the entire movement sequence temporally, then measure precisely what matters.

System Architecture: The Big Picture

The analysis pipeline combines temporal video understanding with precise biomechanical measurement through five key stages:

Each stage is designed to be independent, allowing us to optimize or replace components without rewriting the entire pipeline.

Stage 1: Temporal Understanding with TwelveLabs

Why Temporal Understanding Matters

The breakthrough insight was realizing that context determines correctness. An elbow angle of 95 degrees might be perfect for a basketball shot but terrible for a baseball pitch. Instead of building separate rule engines for each sport, we use TwelveLabs to understand what's happening temporally.

The Two-Model Approach

We use Pegasus and Marengo together because they complement each other:

Pegasus provides high-level semantic understanding:

"What sport is being played?"

"Is this a practice drill or competition?"

"What actions are occurring?"

Marengo provides precise visual search:

"Where exactly does the shot occur?"

"Are there multiple attempts?"

"What's the temporal context?"

# Pegasus understands the semantic content analysis = pegasus_client.analyze( video_id=video_id, prompt="What sport is this? List all action timestamps.", temperature=0.1 # Low for factual responses ) # Marengo confirms with visual search search_results = marengo_client.search.query( index_id=index_id, query_text="basketball shot attempt, player shooting", search_options=["visual"] )

Why This Works

Pegasus might identify "basketball shot at 2.5 seconds" but Marengo might catch additional attempts at 8.1s and 15.3s that Pegasus missed. By merging both sources, we achieve 95%+ detection accuracy while maintaining low false positive rates.

The merge logic is simple but crucial:

# Two detections are duplicates if within 2 seconds for result in marengo_results: if not any(abs(result.start - existing["timestamp"]) < 2.0 for existing in pegasus_moments): # Not a duplicate - add it all_moments.append(result)

This two-second window prevents analyzing the same action twice while ensuring we don't miss distinct attempts.

Stage 2: Sport-Specific Action Segmentation

The Importance of Temporal Windows

Different sports have fundamentally different movement cadences. This insight led us to sport-specific temporal windows:

Basketball requires 3 seconds before the shot (preparation is slow and deliberate) and 2 seconds after (follow-through is critical for accuracy).

Baseball needs only 2 seconds before (compact motion) and 1 second after (quick finish).

Golf demands 4 seconds before (long backswing) and 3 seconds after (full follow-through).

These windows aren't arbitrary—they're based on biomechanical analysis of how long each motion phase actually takes.

Why This Matters

Extracting the right temporal window means we capture:

Complete preparation: The knee bend starts 2-3 seconds before release

Clean baseline: No extraneous movement before the action begins

Full follow-through: Critical for measuring completion quality

The implementation uses FFmpeg's input-seeking for speed:

# Input-seeking (-ss before -i) is 10-20x faster subprocess.run([ "ffmpeg", "-i", video_path, "-ss", str(start_time), # Seek before input "-t", str(duration), "-preset", "fast", # Balance speed/quality output_path ])

This single optimization made the difference between a 20-minute full-video analysis and a 60-second segmented analysis—95% compute savings.

Stage 3: Pose Estimation and Body Tracking

MediaPipe: The Sweet Spot

We chose MediaPipe's pose estimation for three reasons:

33 landmarks covering all major joints needed for athletic analysis

Runs anywhere: CPU at 30fps, GPU at 100+ fps

Temporal tracking: Maintains identity across frames (not just per-frame detection)

The model_complexity parameter was crucial:

Level 1 is the sweet spot: Accurate enough for biomechanics (1-2 degree error is acceptable), fast enough for practical use (30 FPS means real-time processing on most hardware).

Why Normalized Coordinates Matter

MediaPipe returns coordinates normalized to 0-1 range. This is crucial for fairness:

# Normalized coordinates (0-1 range) wrist_x = 0.52 # 52% across the frame wrist_y = 0.31 # 31% down from top # These work regardless of: # - Video resolution (720p, 1080p, 4K) # - Camera distance (5 feet or 50 feet away) # - Athlete size (child or adult)

A 10-year-old and a professional get analyzed with the same coordinate system. The normalization happens in the camera space, not the world space, which is exactly what we want for form analysis.

Stage 4: Biomechanical Metrics That Matter

The Five Key Metrics

Through testing with coaches and biomechanics experts, we identified five metrics that actually correlate with performance:

Body-Proportional Normalization: The Critical Innovation

This was our biggest architectural decision. Instead of absolute measurements, we normalize everything by body dimensions:

# BAD: Absolute measurement release_height_pixels = 823 # Meaningless without context # GOOD: Body-proportional measurement body_height = ankle_y - nose_y release_height_normalized = wrist_y / body_height # 0.68 = 68% of body height

Why this matters: A 5'2" youth player with a release at 68% of body height uses the same form as a 6'8" professional at 68% of body height. Both get scored fairly on technique, not physical size.

This single decision enabled:

Cross-age comparison (12-year-olds vs. adults)

Camera-agnostic analysis (distance doesn't matter)

Progress tracking as athletes grow (maintains consistency)

Angle Calculation: Simple But Powerful

Joint angles use basic trigonometry:

# Calculate angle at point2 formed by point1-point2-point3 vector1 = point1 - point2 vector2 = point3 - point2 cos_angle = dot(vector1, vector2) / (norm(vector1) * norm(vector2)) angle_degrees = arccos(cos_angle) * 180 / pi

The elegance is in what we measure, not how. For basketball:

Elbow angle: shoulder-elbow-wrist at release frame

Knee angle: hip-knee-ankle during preparation phase (minimum value)

Balance: standard deviation of shoulder-hip alignment across all frames

Stage 5: The Scoring System

Three-Zone Scoring Philosophy

The scoring curve was designed to feel fair while highlighting real issues:

Zone 1 (Perfect): Within ±5% of ideal → 100 points

Elbow angle 88-92° (ideal is 90°)

No penalty for minor variation

Zone 2 (Good): Within acceptable range → 90-100 points

Elbow angle 85-95° (min-max range)

Linear interpolation: closer to ideal = higher score

Zone 3 (Needs Work): Outside range → 0-70 points

Gradual decay prevents harsh penalties

Elbow at 80° gets ~60 points, not 0

Why Three Zones Work

This curve matches how coaches actually think:

"That's perfect form" → A (90-100)

"That's good, minor tweaks" → B (80-89)

"Needs focused work" → C (70-79)

"Significant issues" → D/F (<70)

Feedback Prioritization

We show only the top 3 improvement areas:

# Sort by score (lowest first = biggest opportunities) sorted_components = sorted(scores.items(), key=lambda x: x[1]["score"]) # Only show if below B-grade threshold (80) feedback = [ get_improvement_tip(metric, data["value"]) for metric, data in sorted_components[:3] if data["score"] < 80 ]

Athletes don't want 10 things to fix—they want 2-3 actionable items. This focus makes the system feel like a coach, not a report card.

Stage 6: Visualization and Output

Side-by-Side: The Key to Understanding

The annotated video uses a side-by-side layout:

Left side: Original video (what the athlete sees)

Right side: Pose overlay + HUD (what the system sees)

This dual view helps athletes connect abstract metrics to real movement. They can watch their actual shot while simultaneously seeing where the system detected issues.

The HUD Design

The heads-up display shows:

Composite score: Large, color-coded (green/yellow/red)

Letter grade: Familiar from school (A/B/C/D/F)

Top 3 metrics: Most important for this attempt

Real-time frame counter: Shows analysis progression

We deliberately keep it minimal—more information doesn't mean better feedback.

Performance: Why It Scales

The Three Critical Optimizations

1. Smart Segmentation (95% compute savings)

Instead of processing 1,800 seconds frame-by-frame:

Marengo finds 15 relevant clips (5 seconds each)

Process only 75 seconds total

Full practice analysis in <60 seconds instead of 20+ minutes

2. Cached Embeddings (66% time savings on repeats)

TwelveLabs indexes videos once:

First analysis: 30s indexing + 15s analysis = 45s total

Repeat analysis: 0s indexing + 15s analysis = 15s total

Athletes often re-analyze the same video with different parameters. Caching makes this nearly instant.

3. Batch Processing (5x speedup)

Analyzing 10 shots from one video:

Upload once, segment 10 times

One index, multiple searches

90 seconds total vs. 450 seconds if done separately

Cost Economics

At $0.10 per video analyzed:

100 athletes × 4 videos/month = $40/month API costs

Total operating cost: ~$105/month for 400 videos

Scales linearly: 10,000 athletes = $10,500/month

The economics work because marginal cost per video stays constant while value to athletes remains high.

Technical Specifications

Processing Performance

GPU processing: 40-60 seconds total

CPU processing: 60-80 seconds total

The main difference is pose estimation speed (30 FPS on CPU vs. 100+ FPS on GPU).

Accuracy Metrics

From our large batch data testing (47 athletes, 427 sessions):

The Future: What's Next

Near-term Enhancements

Long-term Vision

The convergence of three technologies creates unprecedented possibilities:

Temporal video intelligence (TwelveLabs) that understands context without sport-specific training

Lightweight pose estimation (MediaPipe) that runs anywhere

Cloud infrastructure that makes sophisticated AI accessible at consumer prices

This convergence means:

Democratized analysis: Any athlete with a smartphone gets professional-grade feedback

Scalable coaching: Coaches can monitor hundreds of athletes asynchronously

Cross-sport insights: Understand how fundamentals transfer between activities

Continuous improvement: Every session tracked, every metric trended

The marginal cost of analyzing one more video approaches zero, while the value to athletes remains high.

Conclusion: Temporal Understanding Changes Everything

We started with a simple question: "What if we treated sports video analysis like natural language—with temporal understanding and contextual meaning?"

The answer transformed how we think about athletic development. Instead of building rigid, sport-specific rule engines, we use AI that comprehends movement in context. Instead of expensive motion capture labs, we use smartphone videos. Instead of subjective coaching alone, we provide objective, repeatable biomechanical data.

The key insights:

Temporal first: Understanding context before measuring precision enables sport-agnostic analysis

Body-proportional metrics: Normalizing by body dimensions enables fair comparison across ages and sizes

Graceful degradation: Partial results beat complete failures

Smart segmentation: Analyzing only meaningful moments makes the system 10x more efficient

Simple scoring: Three-zone curves feel fair while highlighting real issues

The technology is here. The economics work. The results are proven. What started as an experiment is now a production system analyzing thousands of videos per month.

The foundation is here. The rest is iteration—fittingly enough.

Additional Resources

TwelveLabs Documentation: Pegasus Guide and Marengo Guide

MediaPipe Pose: Pose landmark detection guide

Introduction

You've just recorded your basketball free throw practice session. The shots look decent—good form, consistent release. But something's off. Your coach mentions "follow-through" and "knee bend," but how do you actually measure these things? And more importantly, how do you track improvement over time without manually reviewing every frame?

The problem? Most video analysis tools are either:

Consumer apps with generic "good job!" feedback that doesn't actually measure anything

Professional systems costing $10,000+ and requiring sports scientists to operate

Single-sport solutions that work great for basketball but can't handle your baseball swing

This is why we built a multi-sport analysis system—a pipeline that doesn't just track motion, but actually understands it through temporal video intelligence. Instead of rigid sport-specific rules, we use AI that comprehends what makes an athletic movement effective.

The key insight? Temporal understanding + precise measurement = meaningful feedback. Traditional pose estimation tells you joint angles, but temporal video intelligence tells you why those angles matter in the context of your entire movement sequence.

The Problem with Traditional Analysis

Here's what we discovered: Athletic movement can't be understood frame-by-frame. A perfect basketball shot isn't just about the release angle—it's about how the knees bent, how weight transferred, how the follow-through completed. Miss any of these temporal elements and your analysis becomes meaningless.

Consider this scenario: You're analyzing a free throw. Traditional frame-based systems might report:

Elbow angle at release: 92 degrees ✓

Release height: 2.1 meters ✓

Wrist alignment: Good ✓

But the shot still misses. Why? Because those measurements ignore:

Knee bend during the preparation phase (not just at one frame)

Weight transfer throughout the motion (not just starting position)

Follow-through consistency over multiple attempts (not just one shot)

Traditional approaches would either:

Accept limited frame-by-frame metrics (missing the full picture)

Build sport-specific state machines (basketball rules, baseball rules, golf rules...)

Hire biomechanics experts to manually review every video (doesn't scale)

This system takes a different approach: understand the entire movement sequence temporally, then measure precisely what matters.

System Architecture: The Big Picture

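The analysis pipeline combines temporal video understanding with precise biomechanical measurement through six stages: temporal understanding, action segmentation, pose estimation, biomechanical metrics, scoring, and visualization.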

Each stage is designed to be independent, allowing us to optimize or replace components without rewriting the entire pipeline.

Stage 1: Temporal Understanding with TwelveLabs

Why Temporal Understanding Matters

The breakthrough insight was realizing that context determines correctness. An elbow angle of 95 degrees might be perfect for a basketball shot but terrible for a baseball pitch. Instead of building separate rule engines for each sport, we use TwelveLabs to understand what's happening temporally.

The Two-Model Approach

We use Pegasus and Marengo together because they complement each other:

Pegasus provides high-level semantic understanding:

"What sport is being played?"

"Is this a practice drill or competition?"

"What actions are occurring?"

Marengo provides precise visual search:

"Where exactly does the shot occur?"

"Are there multiple attempts?"

"What's the temporal context?"

    # Pegasus understands the semantic content
    analysis = pegasus_client.analyze(
        video_id=video_id,
        prompt="What sport is this? List all action timestamps.",
        temperature=0.1,  # Low for factual responses
    )

    # Marengo confirms with visual search
    search_results = marengo_client.search.query(
        index_id=index_id,
        query_text="basketball shot attempt, player shooting",
        search_options=["visual"],
    )

Why This Works

Pegasus might identify "basketball shot at 2.5 seconds" but Marengo might catch additional attempts at 8.1s and 15.3s that Pegasus missed. By merging both sources, we achieve 95%+ detection accuracy while maintaining low false positive rates.

The merge logic is simple but crucial:

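    # Start from the Pegasus moments, then add Marengo hits that are not duplicates
    all_moments = list(pegasus_moments)
    for result in marengo_results:
        # Two detections are duplicates if within 2 seconds
        is_duplicate = any(
            abs(result.start - existing["timestamp"]) < 2.0
            for existing in pegasus_moments
        )
        if not is_duplicate:
            all_moments.append({"timestamp": result.start})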

This two-second window prevents analyzing the same action twice while ensuring we don't miss distinct attempts.
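To make the merge concrete, here is a minimal, self-contained sketch using the timestamps from the example above. The function name `merge_moments`, the `source` field, and the plain list of Marengo start times are illustrative assumptions, not the system's actual data model. Unlike the fragment above, it also compares each Marengo hit against hits it has already kept, so two near-identical Marengo results are not both added.

```python
def merge_moments(pegasus_moments, marengo_starts, window=2.0):
    """Merge Marengo clip start times into Pegasus moments, skipping near-duplicates."""
    merged = [dict(m) for m in pegasus_moments]
    for start in marengo_starts:
        # A hit is a duplicate if any moment kept so far lies within `window` seconds
        if not any(abs(start - m["timestamp"]) < window for m in merged):
            merged.append({"timestamp": start, "source": "marengo"})
    return sorted(merged, key=lambda m: m["timestamp"])


pegasus = [{"timestamp": 2.5, "source": "pegasus"}]
marengo = [3.1, 8.1, 15.3]  # 3.1 s falls inside the 2-second window around 2.5 s
moments = merge_moments(pegasus, marengo)
print([m["timestamp"] for m in moments])
```

The hit at 3.1 s is dropped as a duplicate of the Pegasus moment at 2.5 s, while 8.1 s and 15.3 s are kept as distinct attempts.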

Stage 2: Sport-Specific Action Segmentation

The Importance of Temporal Windows

Different sports have fundamentally different movement cadences. This insight led us to sport-specific temporal windows:

Basketball requires 3 seconds before the shot (preparation is slow and deliberate) and 2 seconds after (follow-through is critical for accuracy).

Baseball needs only 2 seconds before (compact motion) and 1 second after (quick finish).

Golf demands 4 seconds before (long backswing) and 3 seconds after (full follow-through).

These windows aren't arbitrary—they're based on biomechanical analysis of how long each motion phase actually takes.
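The windows above translate directly into a lookup table. The sketch below is one way to turn a detected action time into clip bounds; the names `SPORT_WINDOWS` and `clip_bounds` are hypothetical, and clamping to the start and end of the video is an added assumption for actions near the edges of a recording.

```python
# Seconds of context to keep (before, after) each detected action, per the text above
SPORT_WINDOWS = {
    "basketball": (3.0, 2.0),
    "baseball": (2.0, 1.0),
    "golf": (4.0, 3.0),
}


def clip_bounds(sport, action_time, video_duration):
    """Return (start, duration) of the clip around an action, clamped to the video."""
    before, after = SPORT_WINDOWS[sport]
    start = max(0.0, action_time - before)
    end = min(video_duration, action_time + after)
    return start, end - start


print(clip_bounds("basketball", 8.1, 60.0))  # full 3 s + 2 s window
print(clip_bounds("golf", 2.0, 60.0))        # backswing window clipped at the video start
print(clip_bounds("baseball", 59.5, 60.0))   # follow-through clipped at the video end
```

The returned pair maps onto FFmpeg's `-ss` and `-t` arguments.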

Why This Matters

Extracting the right temporal window means we capture:

Complete preparation: The knee bend starts 2-3 seconds before release

Clean baseline: No extraneous movement before the action begins

Full follow-through: Critical for measuring completion quality

The implementation uses FFmpeg's input-seeking for speed:

    import subprocess

    # Input-seeking (-ss before -i) is 10-20x faster
    subprocess.run(
        [
            "ffmpeg",
            "-ss", str(start_time),  # Seek before the input is opened
            "-i", video_path,
            "-t", str(duration),
            "-preset", "fast",  # Balance speed/quality
            output_path,
        ],
        check=True,  # Raise if ffmpeg exits with an error
    )

Extracting only these short clips made the difference between a 20-minute full-video analysis and a 60-second segmented analysis: a 95% compute saving.

Stage 3: Pose Estimation and Body Tracking

MediaPipe: The Sweet Spot

We chose MediaPipe's pose estimation for three reasons:

33 landmarks covering all major joints needed for athletic analysis

Runs anywhere: CPU at 30 FPS, GPU at 100+ FPS

Temporal tracking: Maintains identity across frames (not just per-frame detection)

The model_complexity parameter was crucial. It accepts 0, 1, or 2, with higher values trading speed for landmark accuracy.

Level 1 is the sweet spot: Accurate enough for biomechanics (1-2 degree error is acceptable), fast enough for practical use (30 FPS means real-time processing on most hardware).

Why Normalized Coordinates Matter

MediaPipe returns coordinates normalized to the 0-1 range of the frame, which makes them independent of video resolution:

    # Normalized coordinates (0-1 range)
    wrist_x = 0.52  # 52% across the frame
    wrist_y = 0.31  # 31% down from top

    # The same values come out at 720p, 1080p, or 4K.
    # Camera distance and athlete size still change how much of the frame
    # the body fills; Stage 4 removes that by dividing by body dimensions.

A 10-year-old and a professional get analyzed with the same coordinate system. The normalization happens in camera space, not world space, which is what we want for form analysis once measurements are expressed relative to the body.
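Normalized coordinates only need converting back to pixels when something is drawn on the frame, such as the pose overlay. A small sketch of that conversion follows; the helper name `to_pixels` is an assumption for illustration.

```python
def to_pixels(x_norm, y_norm, width, height):
    """Convert frame-normalized (0-1) coordinates to integer pixel coordinates."""
    return round(x_norm * width), round(y_norm * height)


wrist = (0.52, 0.31)  # example values from the text
for width, height in [(1280, 720), (1920, 1080), (3840, 2160)]:
    print((width, height), to_pixels(*wrist, width, height))
```

The same normalized wrist position lands on a different pixel at each resolution, which is why the analysis itself stays in normalized space.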

Stage 4: Biomechanical Metrics That Matter

The Five Key Metrics

Through testing with coaches and biomechanics experts, we identified five metrics that actually correlate with performance:

Body-Proportional Normalization: The Critical Innovation

This was our biggest architectural decision. Instead of absolute measurements, we normalize everything by body dimensions:

    # BAD: Absolute measurement
    release_height_pixels = 823  # Meaningless without context

    # GOOD: Body-proportional measurement
    # Image y grows downward, so heights are measured up from the ankles
    body_height = ankle_y - nose_y
    release_height_normalized = (ankle_y - wrist_y) / body_height  # 0.68 = 68% of body height

Why this matters: A 5'2" youth player with a release at 68% of body height uses the same form as a 6'8" professional at 68% of body height. Both get scored fairly on technique, not physical size.

This single decision enabled:

Cross-age comparison (12-year-olds vs. adults)

Camera-agnostic analysis (distance doesn't matter)

Progress tracking as athletes grow (maintains consistency)
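A short worked example shows why the ratio is camera-agnostic. This is a sketch under stated assumptions: the function name `release_height_ratio` is hypothetical, body height is approximated as the nose-to-ankle distance as in the snippet above, and the coordinates are made up to represent the same pose filmed close up and from far away.

```python
def release_height_ratio(nose_y, ankle_y, wrist_y):
    """Wrist height above the ankles as a fraction of nose-to-ankle height.

    Inputs are image y-coordinates, which grow downward (smaller y = higher up).
    """
    body_height = ankle_y - nose_y
    if body_height <= 0:
        raise ValueError("ankle must be below nose in image coordinates")
    return (ankle_y - wrist_y) / body_height


# Same pose, two camera distances: the athlete fills 80% vs. 20% of the frame height
near = release_height_ratio(nose_y=0.10, ankle_y=0.90, wrist_y=0.356)
far = release_height_ratio(nose_y=0.40, ankle_y=0.60, wrist_y=0.464)
print(round(near, 2), round(far, 2))
```

Both calls give the same ratio even though the raw wrist coordinates differ, because the body itself is the unit of measurement.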

Angle Calculation: Simple But Powerful

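Joint angles come from the dot product of the two limb vectors (points are NumPy arrays):

    import numpy as np

    # Calculate angle at point2 formed by point1-point2-point3
    vector1 = point1 - point2
    vector2 = point3 - point2
    cos_angle = np.dot(vector1, vector2) / (np.linalg.norm(vector1) * np.linalg.norm(vector2))
    # Clip guards against rounding pushing the cosine just outside [-1, 1]
    angle_degrees = np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0)))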

The elegance is in what we measure, not how. For basketball:

Elbow angle: shoulder-elbow-wrist at release frame

Knee angle: hip-knee-ankle during preparation phase (minimum value)

Balance: standard deviation of shoulder-hip alignment across all frames
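The three basketball measurements differ mainly in how they aggregate over frames: one frame, a minimum, and a spread. The dependency-free sketch below illustrates that. The frame dictionaries and function names are hypothetical, and measuring "shoulder-hip alignment" as the horizontal offset between shoulder and hip is an assumption, since the exact definition is not given.

```python
import math
from statistics import pstdev


def joint_angle(a, b, c):
    """Angle in degrees at b formed by the segments b->a and b->c (2D points)."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("points must be distinct")
    cos_angle = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))


def min_knee_angle(frames):
    """Deepest knee bend: smallest hip-knee-ankle angle over the given frames."""
    return min(joint_angle(f["hip"], f["knee"], f["ankle"]) for f in frames)


def balance_spread(frames):
    """Standard deviation of the horizontal shoulder-hip offset across frames."""
    return pstdev([f["shoulder"][0] - f["hip"][0] for f in frames])


frames = [
    # Standing straight: hip, knee, and ankle are vertically aligned
    {"shoulder": (0.50, 0.30), "hip": (0.50, 0.55), "knee": (0.50, 0.75), "ankle": (0.50, 0.95)},
    # Knees bent: the knee moves forward of the hip-ankle line
    {"shoulder": (0.52, 0.35), "hip": (0.50, 0.60), "knee": (0.58, 0.76), "ankle": (0.50, 0.95)},
]
print(joint_angle((0, 1), (0, 0), (1, 0)))
print(min_knee_angle(frames), balance_spread(frames))
```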

Stage 5: The Scoring System

Three-Zone Scoring Philosophy

The scoring curve was designed to feel fair while highlighting real issues:

Zone 1 (Perfect): Within ±5% of ideal → 100 points

Elbow angle 88-92° (ideal is 90°)

No penalty for minor variation

Zone 2 (Good): Within acceptable range → 90-100 points

Elbow angle 85-95° (min-max range)

Linear interpolation: closer to ideal = higher score

Zone 3 (Needs Work): Outside range → 0-70 points

Gradual decay prevents harsh penalties

Elbow at 80° gets ~60 points, not 0
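One way to express this curve in code is sketched below. It is an illustration, not the production scoring function: the perfect band is taken from the 88-92° example (±2° around 90°), Zone 3 is assumed to start at 70 points just outside the range, and the decay of 2 points per degree is chosen so that an 80° elbow scores 60, matching the example above.

```python
def three_zone_score(value, ideal, perfect_tol, lo, hi, decay_per_unit=2.0):
    """Score a metric on a three-zone curve (all thresholds are illustrative)."""
    deviation = abs(value - ideal)
    if deviation <= perfect_tol:
        return 100.0  # Zone 1: no penalty near the ideal
    if lo <= value <= hi:
        # Zone 2: interpolate from 100 at the edge of the perfect band
        # down to 90 at the edge of the acceptable range
        edge = (hi - ideal) if value > ideal else (ideal - lo)
        fraction = (deviation - perfect_tol) / (edge - perfect_tol)
        return 100.0 - 10.0 * fraction
    # Zone 3: start at 70 just outside the range and decay gradually, never below 0
    overshoot = (value - hi) if value > hi else (lo - value)
    return max(0.0, 70.0 - decay_per_unit * overshoot)


for elbow in (90, 88, 93.5, 85, 80, 40):
    print(elbow, three_zone_score(elbow, ideal=90, perfect_tol=2, lo=85, hi=95))
```

The jump from 90 to 70 at the edge of the acceptable range follows from the zone definitions as stated: Zone 2 bottoms out at 90 and Zone 3 tops out at 70.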

Why Three Zones Work

This curve matches how coaches actually think:

"That's perfect form" → A (90-100)

"That's good, minor tweaks" → B (80-89)

"Needs focused work" → C (70-79)

"Significant issues" → D/F (<70)

Feedback Prioritization

We show only the top 3 improvement areas:

    # Sort by score (lowest first = biggest opportunities)
    sorted_components = sorted(scores.items(), key=lambda x: x[1]["score"])

    # Only show if below B-grade threshold (80)
    feedback = [
        get_improvement_tip(metric, data["value"])
        for metric, data in sorted_components[:3]
        if data["score"] < 80
    ]

Athletes don't want 10 things to fix—they want 2-3 actionable items. This focus makes the system feel like a coach, not a report card.
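Putting the grade bands and the top-3 rule together, a runnable sketch might look like the following. The metric names, tip wording, and the unweighted mean used for the composite score are all assumptions for illustration; the article does not specify how components are weighted or where D ends and F begins.

```python
def letter_grade(score):
    """Map a 0-100 score to the grade bands described earlier."""
    if score >= 90:
        return "A"
    if score >= 80:
        return "B"
    if score >= 70:
        return "C"
    return "D/F"  # the D/F boundary is not specified


def top_feedback(scores, tips, limit=3, threshold=80):
    """Return tips for the lowest-scoring metrics that fall below the threshold."""
    ranked = sorted(scores.items(), key=lambda item: item[1])
    return [tips[metric] for metric, score in ranked[:limit] if score < threshold]


scores = {"elbow_angle": 95.0, "knee_bend": 62.0, "balance": 78.0, "follow_through": 88.0}
tips = {  # placeholder wording
    "elbow_angle": "Keep the elbow under the ball.",
    "knee_bend": "Bend the knees more during preparation.",
    "balance": "Keep shoulders stacked over hips.",
    "follow_through": "Hold the follow-through until the ball lands.",
}

composite = sum(scores.values()) / len(scores)  # unweighted mean (assumption)
print(letter_grade(composite), top_feedback(scores, tips))
```

Here four metrics are scored but only two tips are shown, because the third-lowest metric already clears the B-grade threshold.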

Stage 6: Visualization and Output

Side-by-Side: The Key to Understanding

The annotated video uses a side-by-side layout:

Left side: Original video (what the athlete sees)

Right side: Pose overlay + HUD (what the system sees)

This dual view helps athletes connect abstract metrics to real movement. They can watch their actual shot while simultaneously seeing where the system detected issues.

The HUD Design

The heads-up display shows:

Composite score: Large, color-coded (green/yellow/red)

Letter grade: Familiar from school (A/B/C/D/F)

Top 3 metrics: Most important for this attempt

Real-time frame counter: Shows analysis progression

We deliberately keep it minimal—more information doesn't mean better feedback.

Performance: Why It Scales

The Three Critical Optimizations

1. Smart Segmentation (95% compute savings)

Instead of processing 1,800 seconds frame-by-frame:

Marengo finds 15 relevant clips (5 seconds each)

Process only 75 seconds total

Full practice analysis in <60 seconds instead of 20+ minutes
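The 95% figure follows from the numbers above. A quick check, with a hypothetical helper name:

```python
def segmentation_savings(video_seconds, clip_count, clip_seconds):
    """Return (seconds actually processed, fraction of the video skipped)."""
    processed = clip_count * clip_seconds
    return processed, 1 - processed / video_seconds


processed, saved = segmentation_savings(video_seconds=1800, clip_count=15, clip_seconds=5)
print(f"{processed} s processed, {saved:.1%} skipped")
```

Fifteen 5-second clips cover 75 of the 1,800 seconds, so just under 96% of the footage never reaches pose estimation.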

2. Cached Embeddings (66% time savings on repeats)

TwelveLabs indexes videos once:

First analysis: 30s indexing + 15s analysis = 45s total

Repeat analysis: 0s indexing + 15s analysis = 15s total

Athletes often re-analyze the same video with different parameters. Caching skips re-indexing, so a repeat run takes 15 seconds instead of 45.
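A minimal sketch of the caching idea, assuming the cache is keyed by a hash of the file contents and stores whatever identifier the indexing step returns. The names `video_key`, `get_or_index`, and the `index_video` callback are hypothetical; the callback stands in for the real upload-and-index call, and a production cache would live in a database rather than a dict.

```python
import hashlib
import os
import tempfile


def video_key(path, chunk_size=1 << 20):
    """Content hash of a video file, used as the cache key."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def get_or_index(path, cache, index_video):
    """Return the indexed video ID for `path`, calling `index_video` only on a cache miss."""
    key = video_key(path)
    if key not in cache:
        cache[key] = index_video(path)
    return cache[key]


# Demo with a stand-in for the real indexing call
calls = []


def fake_index(path):
    calls.append(path)
    return f"video-{len(calls)}"


with tempfile.NamedTemporaryFile(suffix=".mp4", delete=False) as tmp:
    tmp.write(b"placeholder bytes, not a real video")

cache = {}
first = get_or_index(tmp.name, cache, fake_index)
second = get_or_index(tmp.name, cache, fake_index)  # cache hit, no second indexing call
os.remove(tmp.name)
print(first, second, len(calls))
```

Hashing the contents rather than the filename means a renamed copy of the same video still hits the cache.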

3. Batch Processing (5x speedup)

Analyzing 10 shots from one video:

Upload once, segment 10 times

One index, multiple searches

90 seconds total vs. 450 seconds if done separately

Cost Economics

At $0.10 per video analyzed:

100 athletes × 4 videos/month = $40/month API costs

Total operating cost: ~$105/month for 400 videos

Scales linearly: 10,000 athletes ≈ $10,500/month in total operating cost (about $4,000 of it API costs)

The economics work because marginal cost per video stays constant while value to athletes remains high.
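The figures above can be reproduced with a simple linear model. This sketch assumes the non-API share of the $105 scales with video count in the same way as the API share, which is what the 10,000-athlete figure implies; the function and parameter names are illustrative.

```python
def monthly_cost(athletes, videos_per_athlete=4, api_cost_per_video=0.10,
                 total_cost_per_video=105 / 400):
    """Estimate monthly video count, API cost, and total operating cost in dollars."""
    videos = athletes * videos_per_athlete
    return {
        "videos": videos,
        "api": round(videos * api_cost_per_video, 2),
        "total": round(videos * total_cost_per_video, 2),
    }


print(monthly_cost(100))
print(monthly_cost(10_000))
```

At roughly $0.26 of total cost per video, 100 athletes come to about $105 per month and 10,000 athletes to about $10,500.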

Technical Specifications

Processing Performance

GPU processing: 40-60 seconds total

CPU processing: 60-80 seconds total

The main difference is pose estimation speed (30 FPS on CPU vs. 100+ FPS on GPU).

Accuracy Metrics

From our batch testing (47 athletes, 427 sessions):

The Future: What's Next

Near-term Enhancements

Long-term Vision

The convergence of three technologies creates unprecedented possibilities:

Temporal video intelligence (TwelveLabs) that understands context without sport-specific training

Lightweight pose estimation (MediaPipe) that runs anywhere

Cloud infrastructure that makes sophisticated AI accessible at consumer prices

This convergence means:

Democratized analysis: Any athlete with a smartphone gets professional-grade feedback

Scalable coaching: Coaches can monitor hundreds of athletes asynchronously

Cross-sport insights: Understand how fundamentals transfer between activities

Continuous improvement: Every session tracked, every metric trended

The marginal cost of analyzing one more video stays at roughly $0.10 in API costs, while the value to athletes remains high.

Conclusion: Temporal Understanding Changes Everything

We started with a simple question: "What if we treated sports video analysis like natural language—with temporal understanding and contextual meaning?"

The answer transformed how we think about athletic development. Instead of building rigid, sport-specific rule engines, we use AI that comprehends movement in context. Instead of expensive motion capture labs, we use smartphone videos. Instead of subjective coaching alone, we provide objective, repeatable biomechanical data.

The key insights:

Temporal first: Understanding context before measuring precision enables sport-agnostic analysis

Body-proportional metrics: Normalizing by body dimensions enables fair comparison across ages and sizes

Graceful degradation: Partial results beat complete failures

Smart segmentation: Analyzing only meaningful moments makes the system 10x more efficient

Simple scoring: Three-zone curves feel fair while highlighting real issues

The technology is here. The economics work. The results are proven. What started as an experiment is now a production system analyzing thousands of videos per month.

The foundation is here. The rest is iteration—fittingly enough.

Additional Resources

TwelveLabs Documentation: Pegasus Guide and Marengo Guide

MediaPipe Pose: Pose landmark detection guide
