Tutorial

From Manual Review to Automated Intelligence: Building a Surgical Video Analysis Platform with YOLO and Twelve Labs

Meeran Kim

Surgeons spend 3–5 hours per procedure manually reviewing laparoscopic footage — logging instruments, identifying phases, writing reports by hand. This tutorial walks through building a platform that pairs YOLO's pixel-precise tool detection with Twelve Labs' multimodal video understanding to automate operative documentation, segment surgical phases, and make entire video libraries searchable by natural language. The result: a hybrid architecture pattern that converts passive surgical recordings into structured, queryable clinical intelligence.

Feb 20, 2026

9 Minutes

Surgeons and residents routinely spend 3–5 hours per procedure reviewing laparoscopic footage frame-by-frame — logging instruments, identifying surgical phases, and writing operative reports by hand. Across a teaching hospital processing hundreds of procedures monthly, that manual review adds up to thousands of hours of clinical time diverted from patient care.

In this tutorial, we build Surgical Video Intelligence, a platform that treats surgical video as a rich, queryable dataset. The core architectural insight: pair YOLO (You Only Look Once) for precise object detection with Twelve Labs' multimodal video understanding API to create a system that can both see which tools are on screen and understand the surgical context around them.

⭐️ What We're Building: A Next.js application that automatically:

Detects surgical tools in real-time with bounding boxes and timestamps

Generates SOAP operative notes (Subjective, Objective, Assessment, Plan) from video evidence

Segments surgeries into chapters (e.g., "Dissection," "Closure")

Enables semantic search across video libraries (e.g., "find all cauterization moments")

Provides Dr. Sage, a conversational AI assistant for follow-up clinical Q&A

📌 Try the live demo | View the GitHub repository

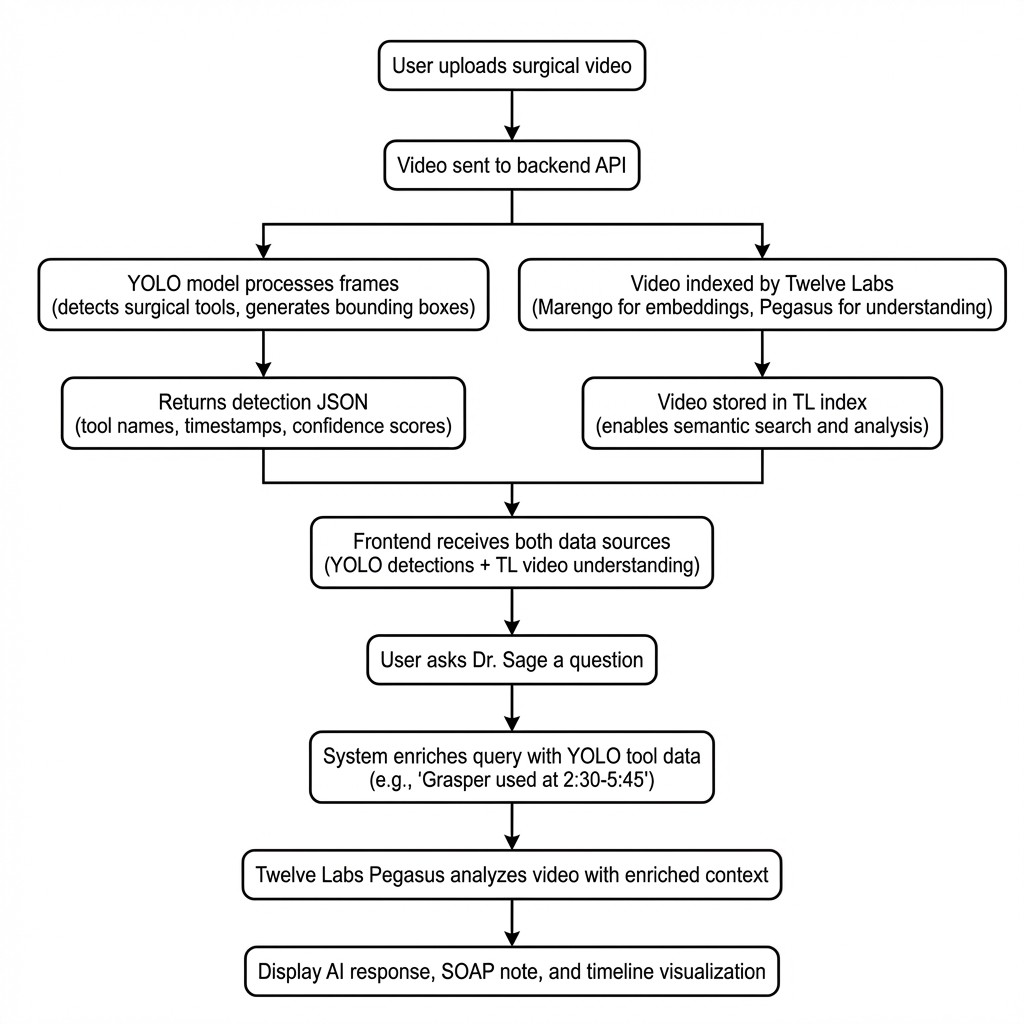

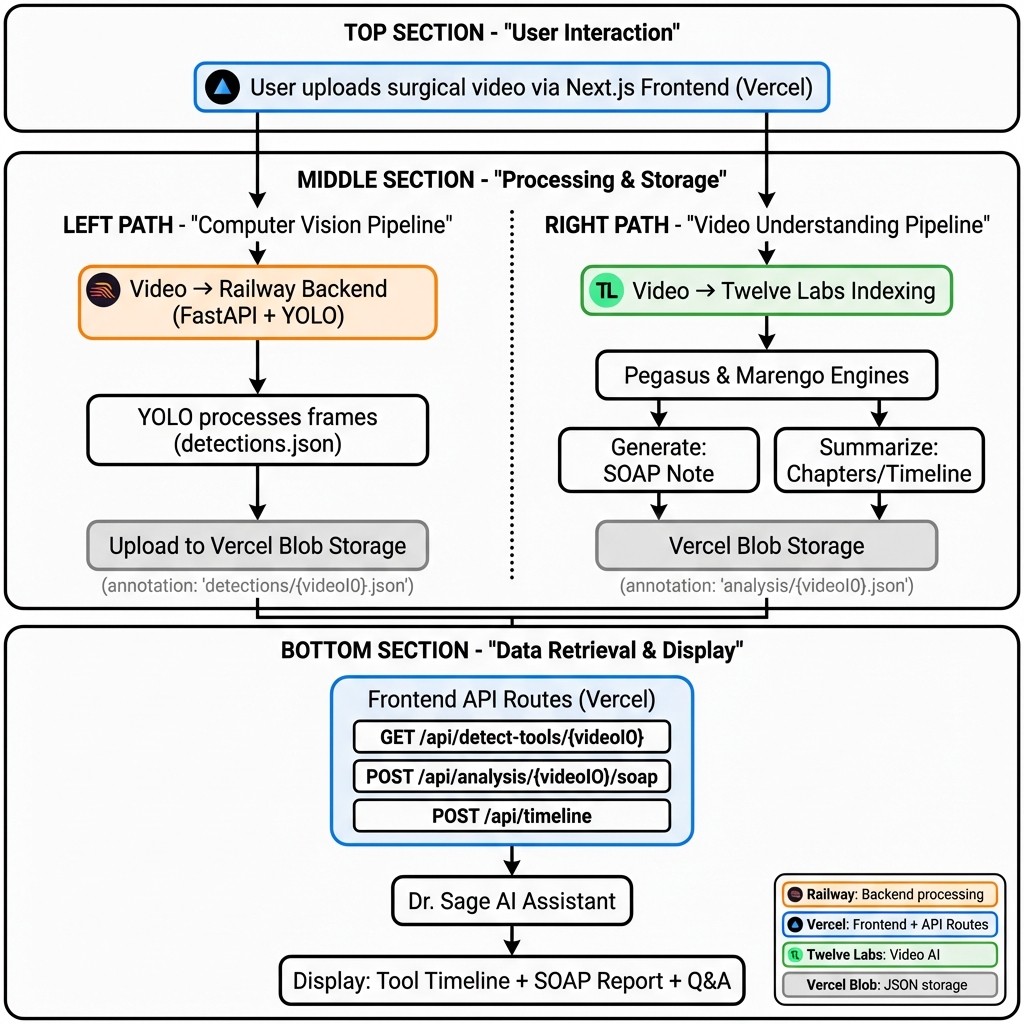

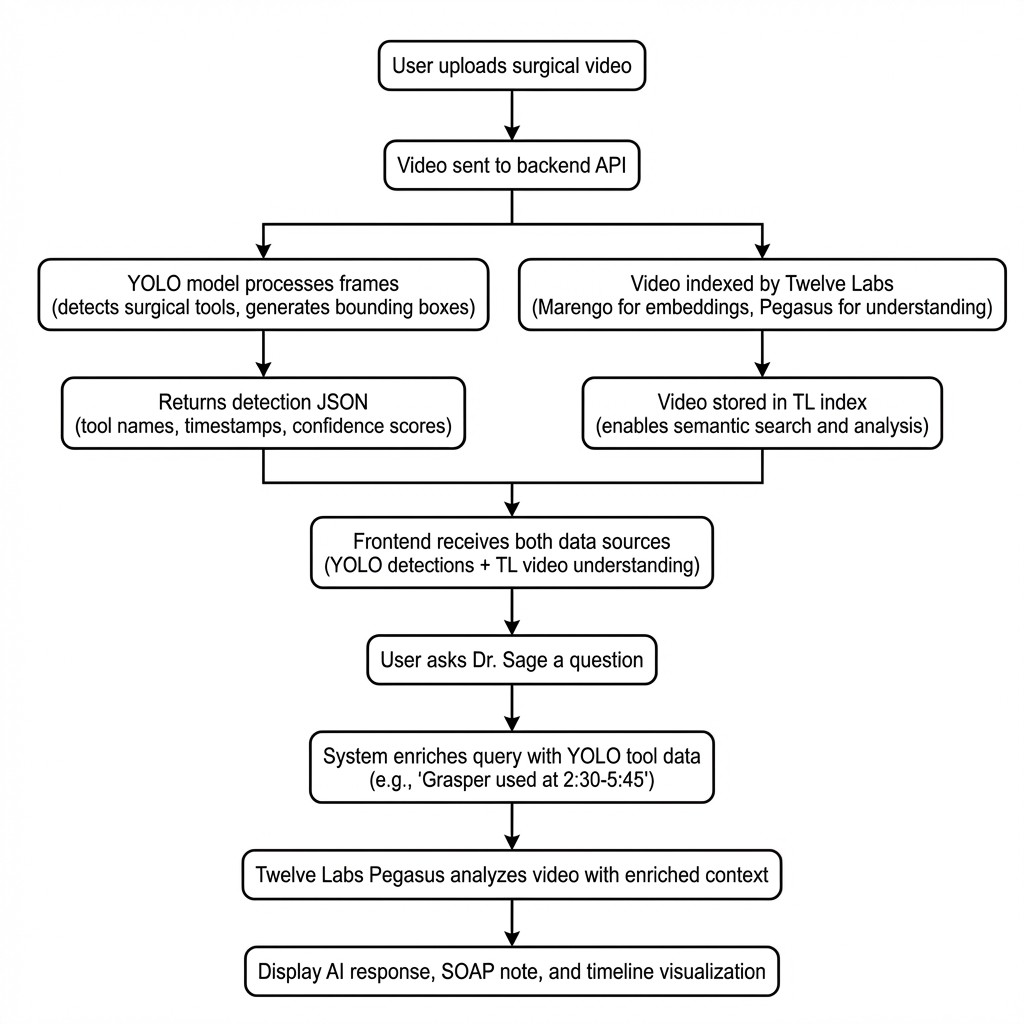

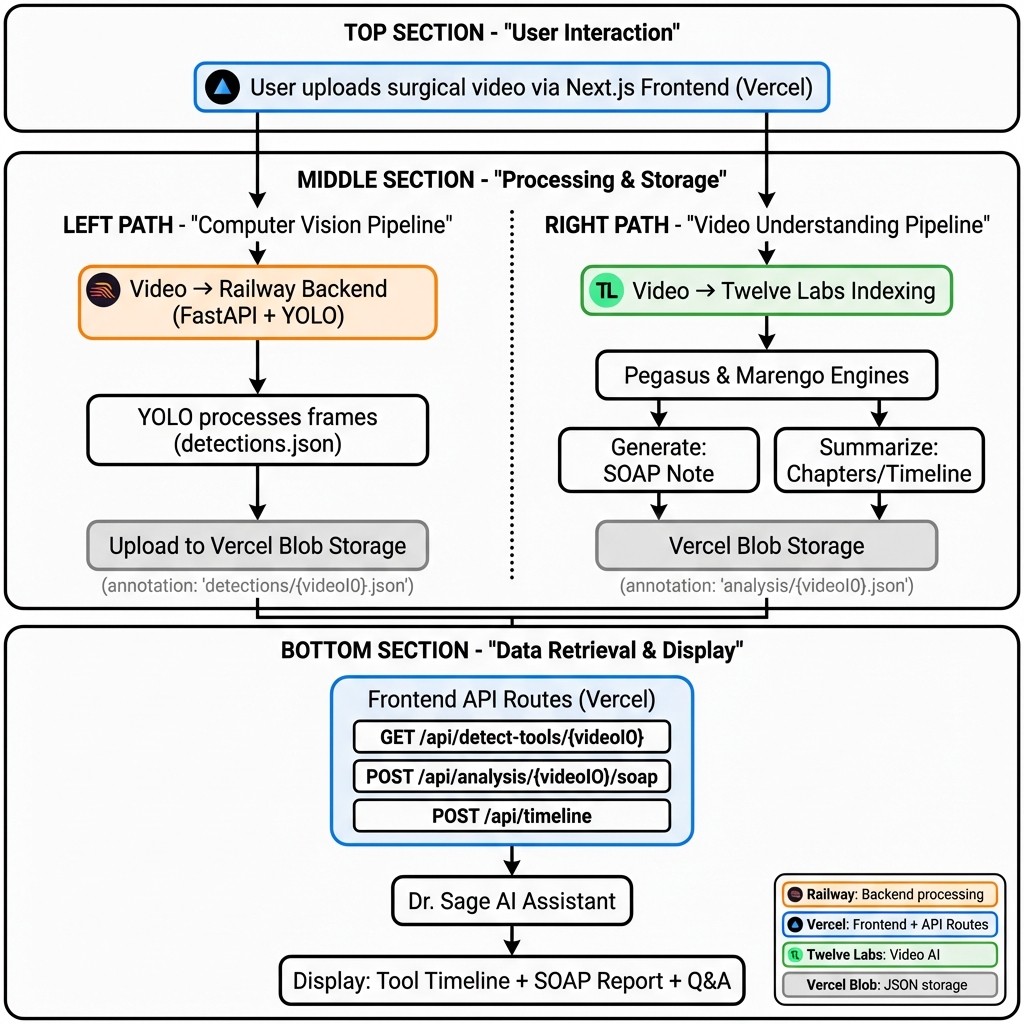

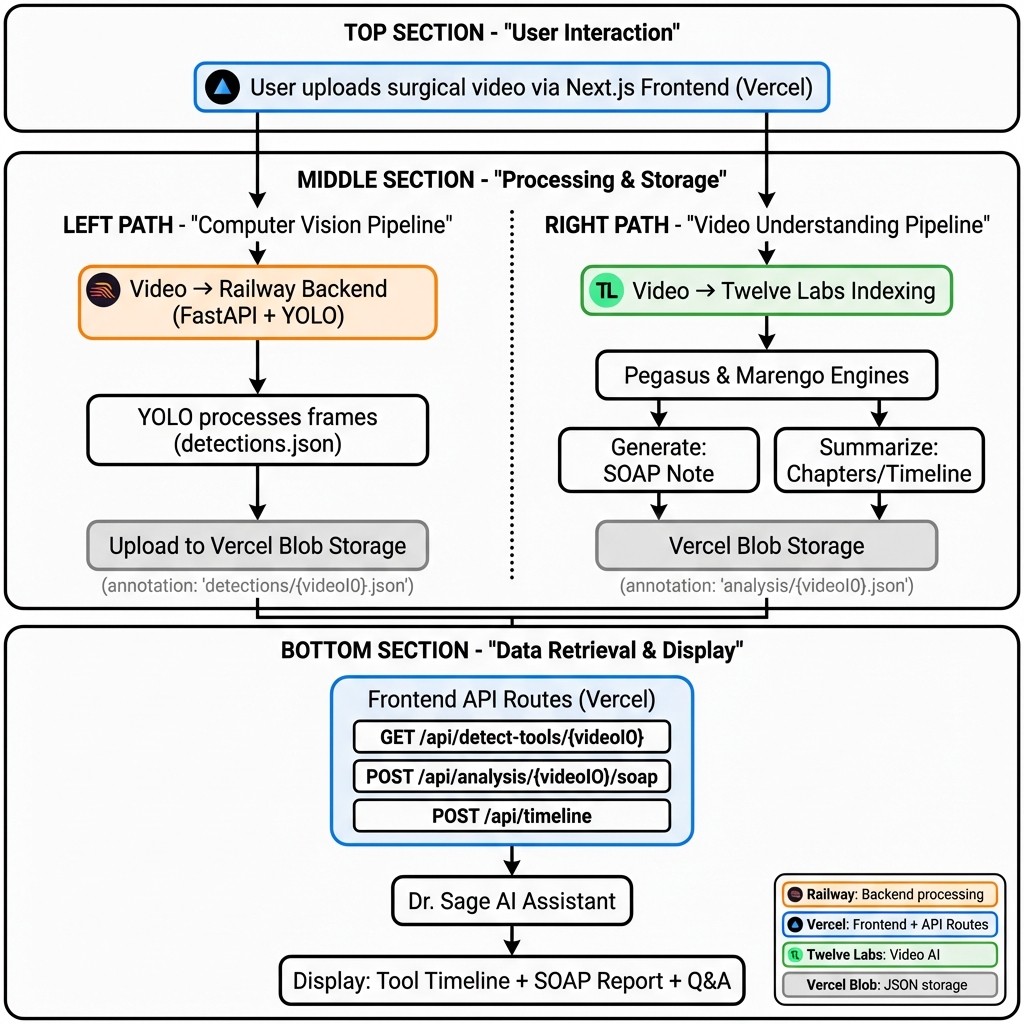

System Architecture

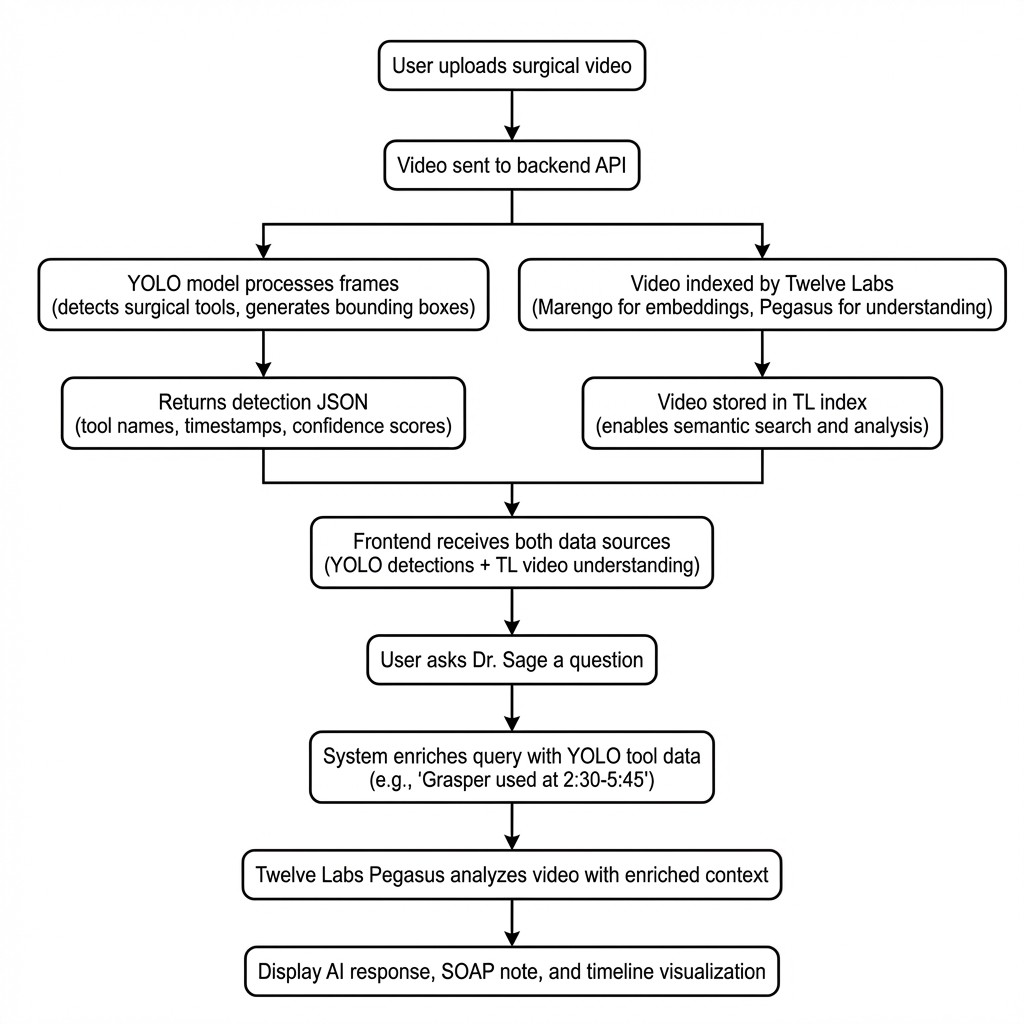

Surgical video analysis requires two fundamentally different capabilities: pixel-precise instrument identification and contextual understanding of what those instruments are doing within a procedure. No single model handles both well.

Our architecture separates these concerns into two parallel pipelines that converge at inference time.

Video Ingestion: The user uploads a laparoscopic surgery video.

Tool Detection: A YOLOv8 model (fine-tuned on 7 distinct surgical instrument classes) scans the video and outputs a structured JSON file of detections with timestamps and bounding boxes.

Video Indexing: The video is indexed by Twelve Labs to enable semantic search (Marengo embeddings) and contextual analysis (Pegasus reasoning).

Context Fusion: When the system generates notes or answers questions, YOLO's detection data is injected into the prompt alongside Twelve Labs' video understanding — grounding the AI's reasoning in verified visual evidence.

This hybrid approach matters because each technology compensates for the other's blind spots. YOLO can identify a grasper with 95% confidence at frame 120, but it cannot tell you whether that grasper is being used for retraction or dissection. Twelve Labs can reason about surgical technique and phase progression, but benefits from hard detection data to prevent hallucinating instrument usage that never occurred.

Application Demo

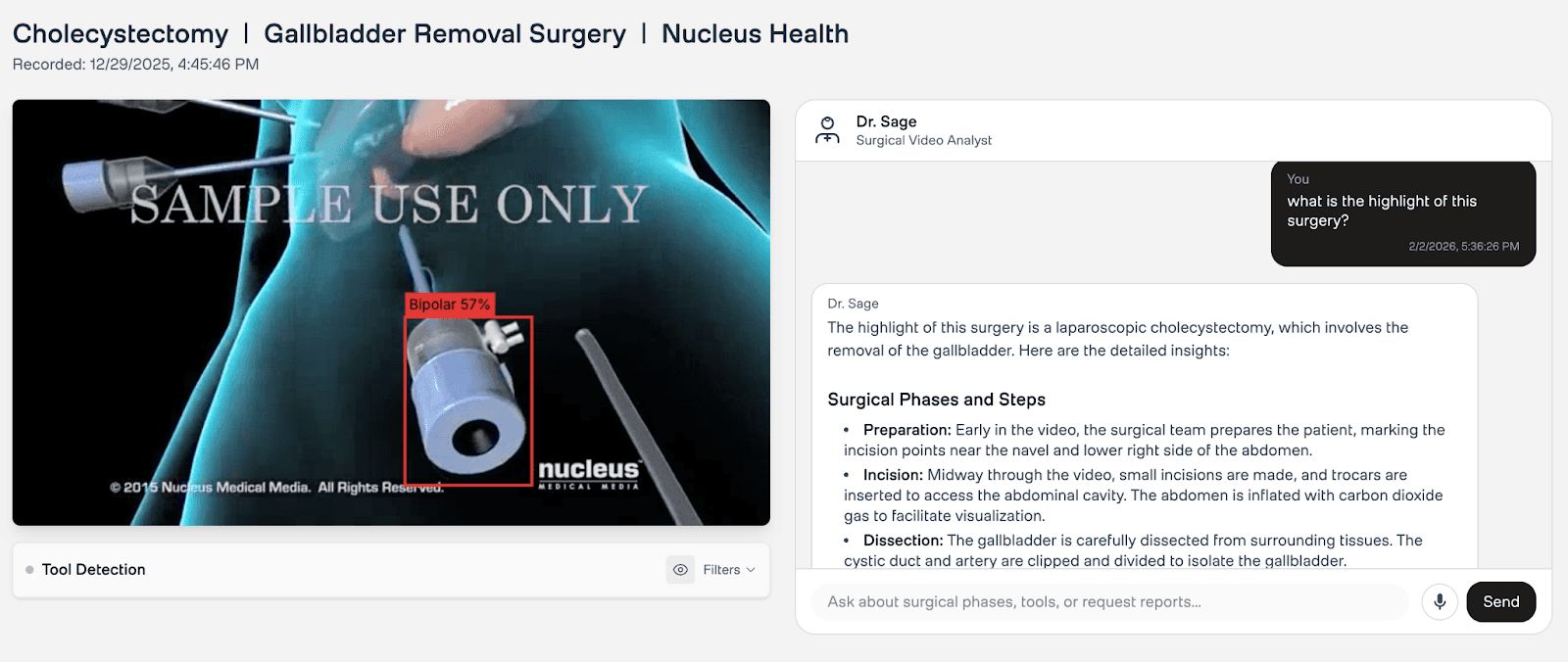

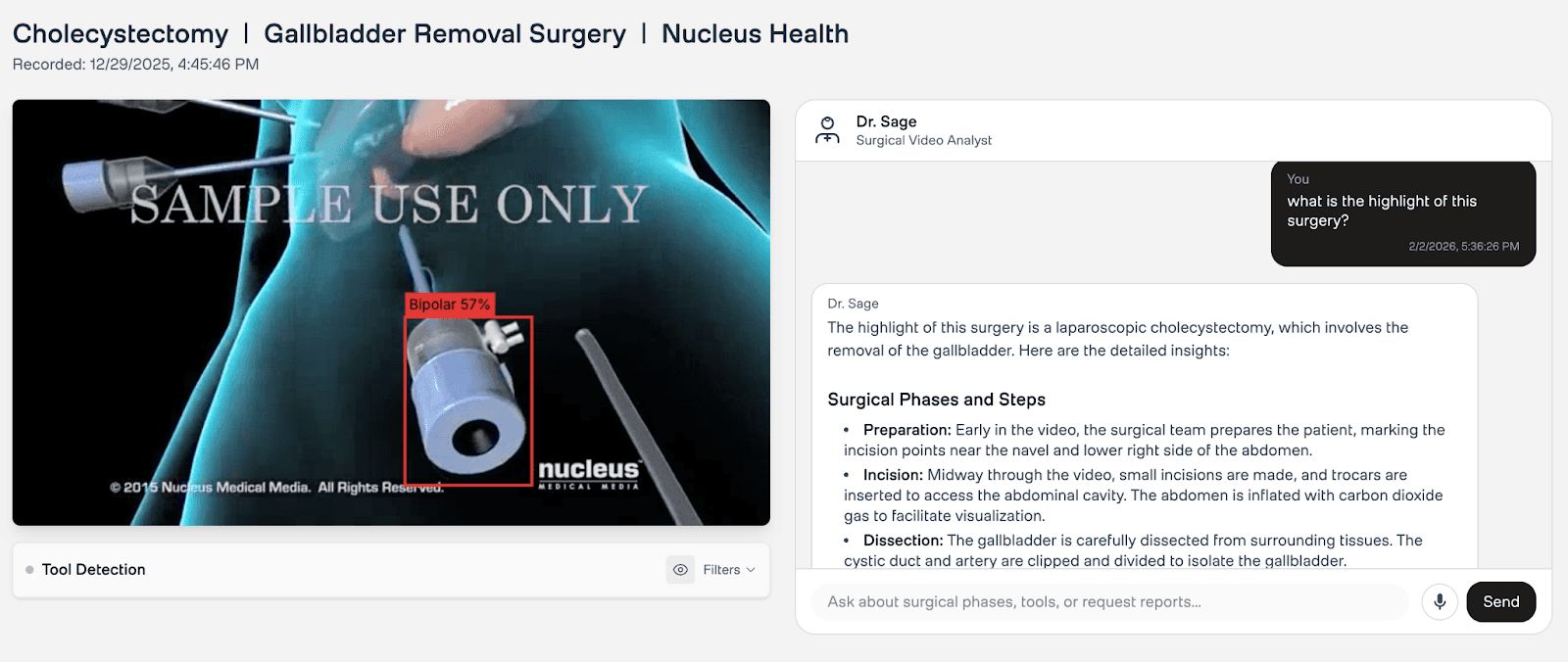

1. Real-Time Tool Detection

The main analysis view provides a synchronized experience. On the left, the video player overlays real-time tool bounding boxes detected by YOLO. Below, a "swimlane" timeline visualizes exactly when each instrument appears — giving educators an instant, scannable map of instrument usage across the entire procedure.

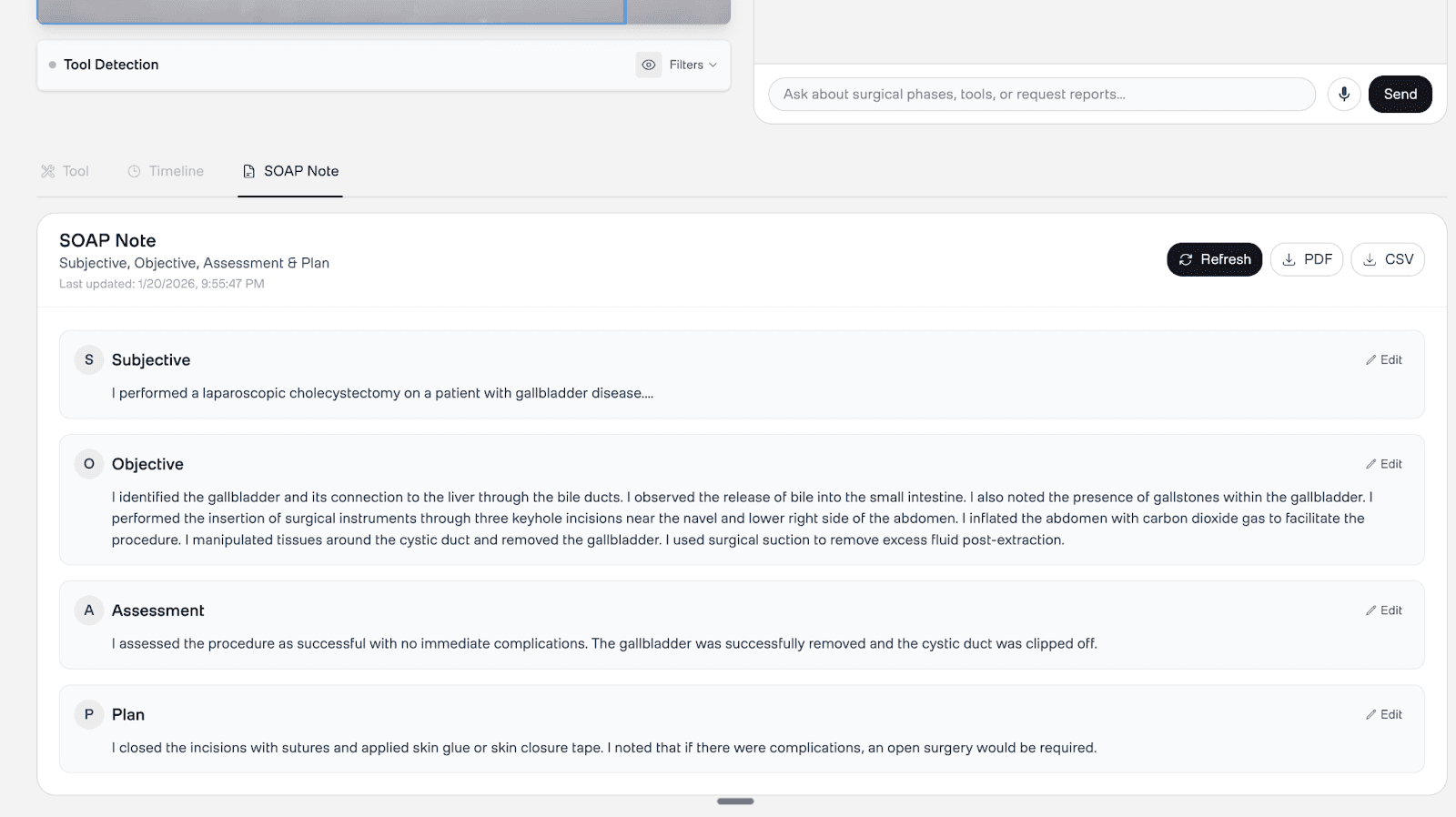

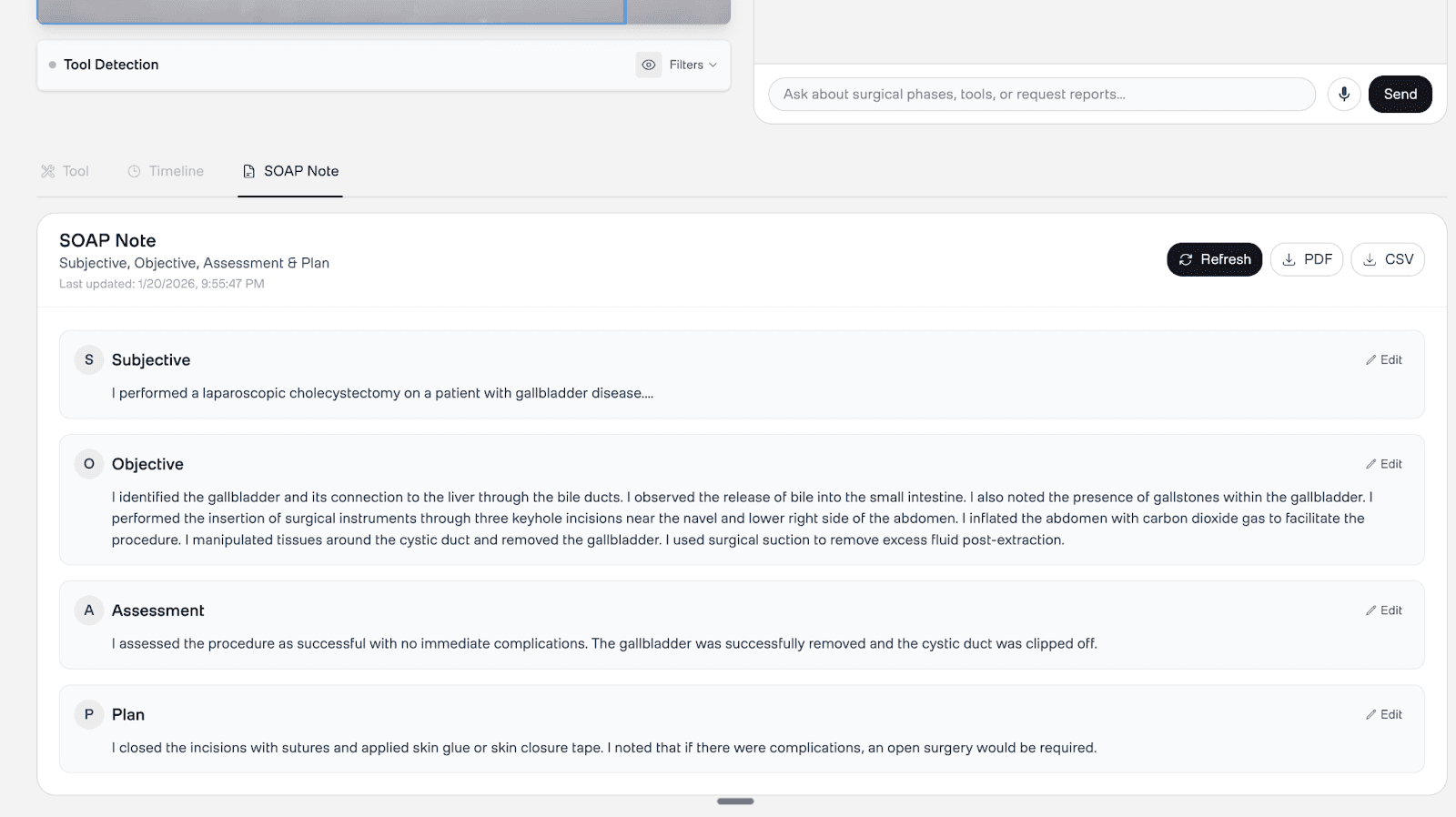

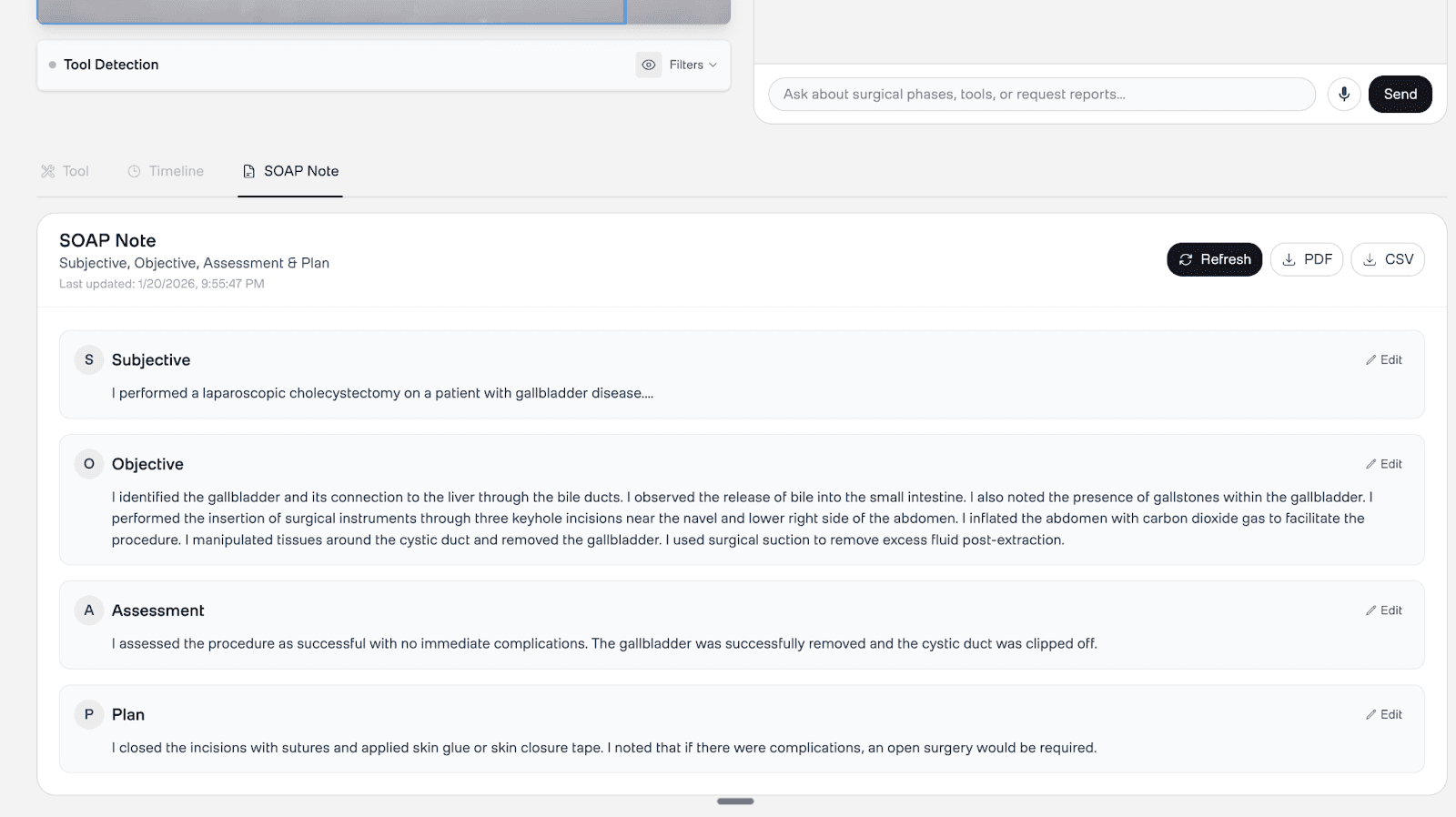

2. Automatic SOAP Note Generation

Upon video upload and indexing, the system generates a first-person operative note using the Twelve Labs Analyze API, enriched with YOLO tool detection data. No manual input required. The resulting SOAP note includes accurate instrument references anchored to specific timestamps, reducing documentation time from hours to minutes.

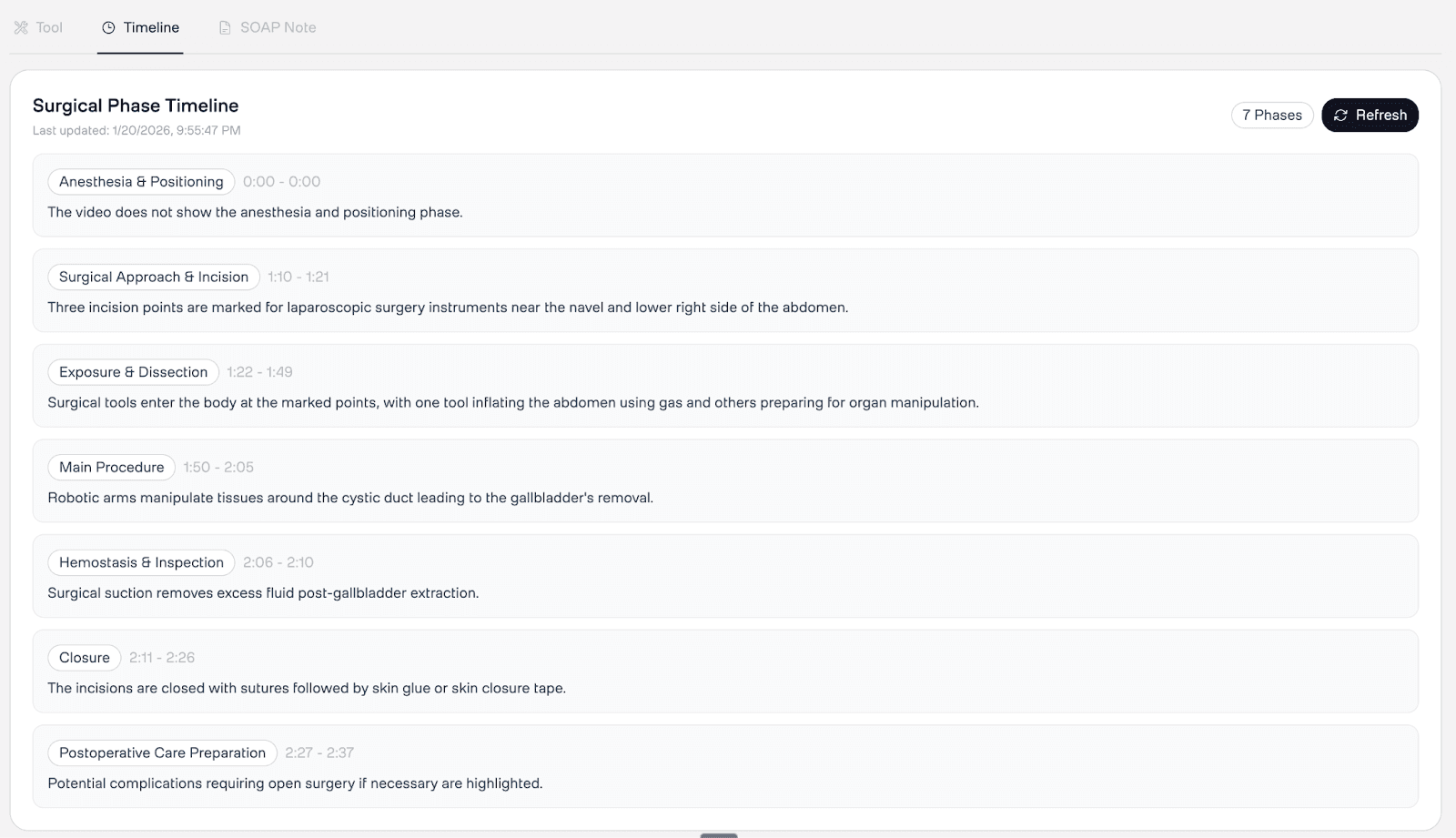

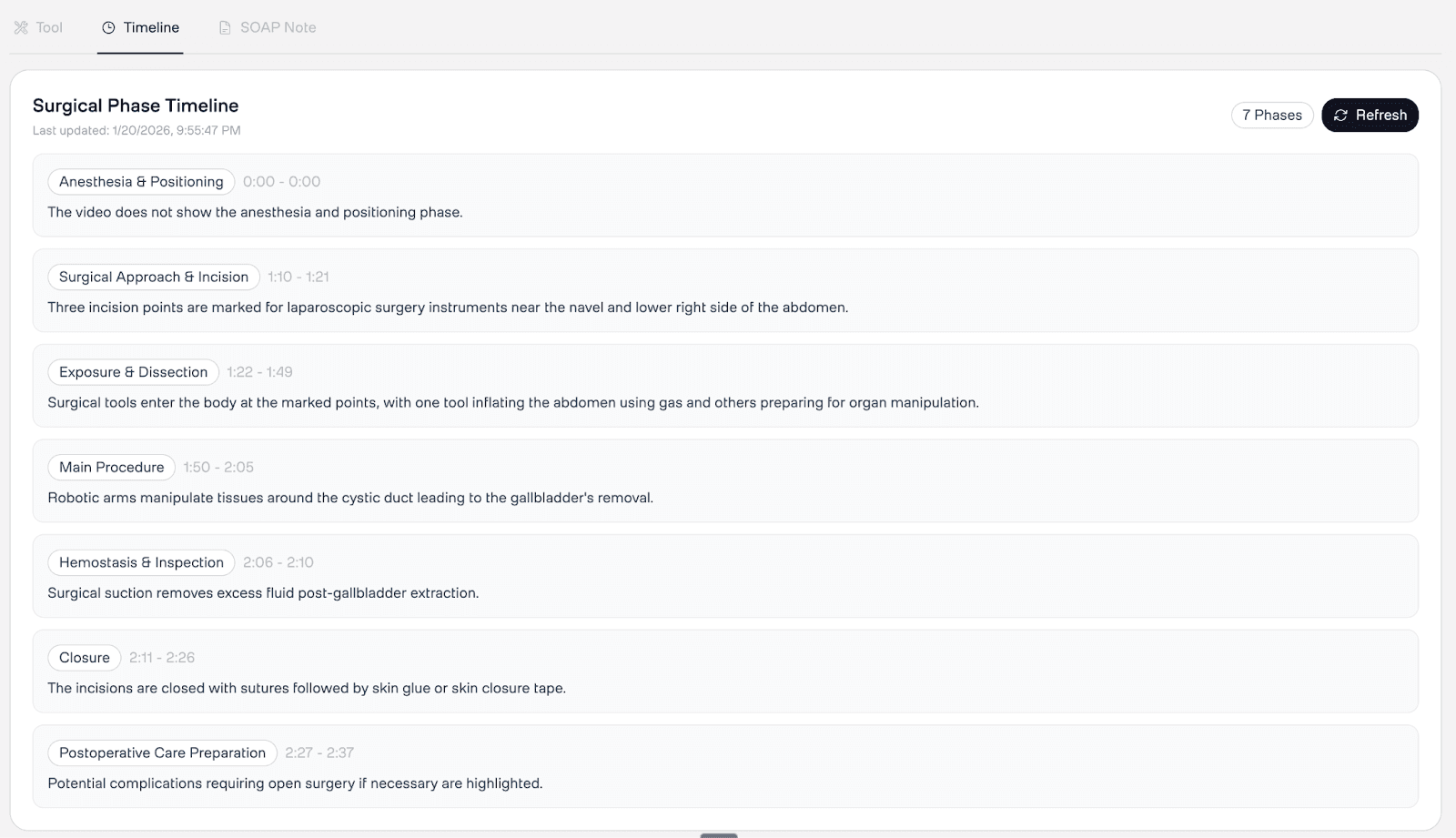

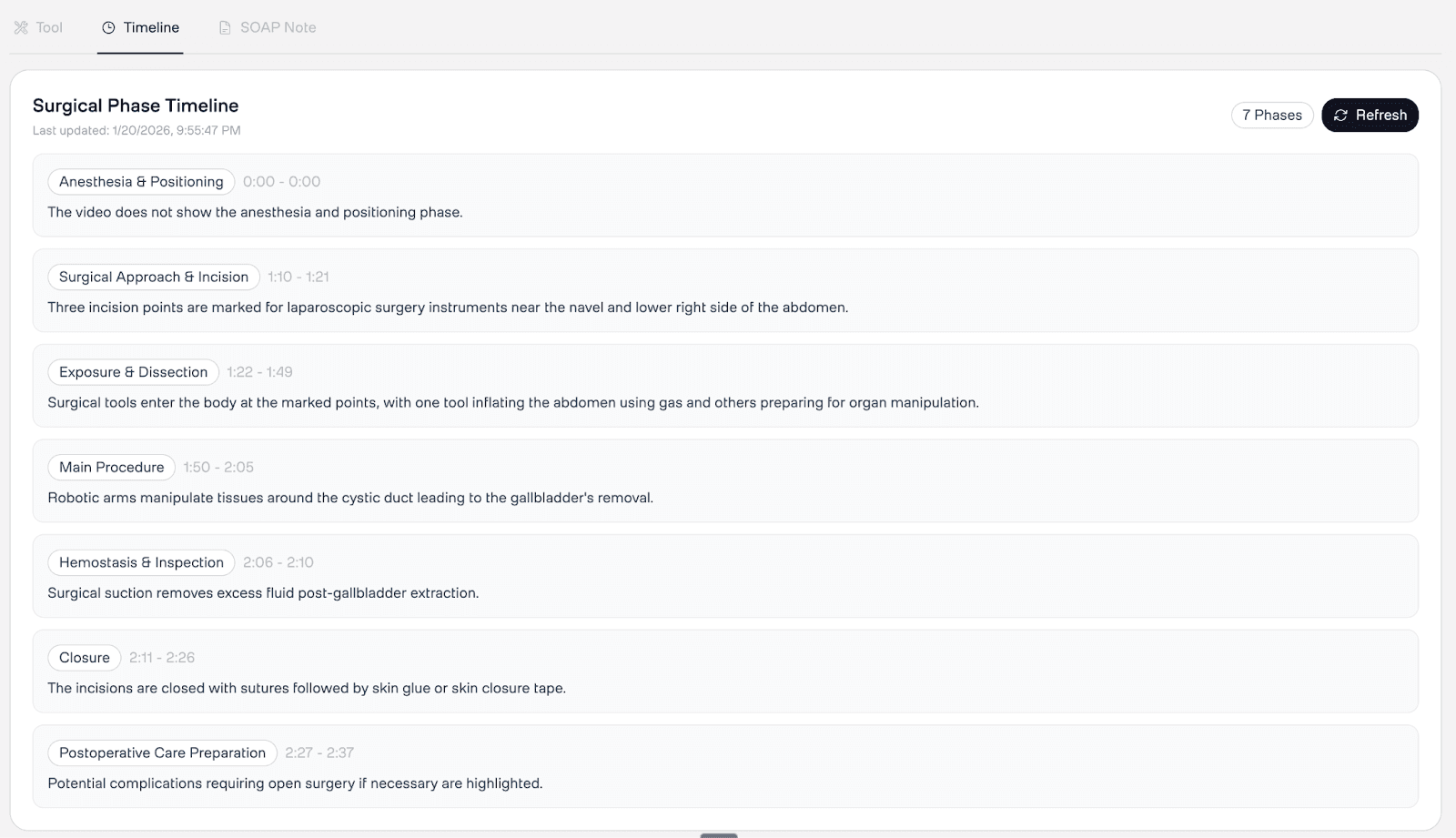

3. Surgical Phase Segmentation

The Twelve Labs Analyze API automatically breaks the surgery into distinct phases — "Preparation," "Dissection," "Closure" — using structured JSON output. These chapters appear on an interactive timeline, enabling quick navigation to specific surgical stages. For training programs, this transforms a 90-minute recording into a navigable curriculum.

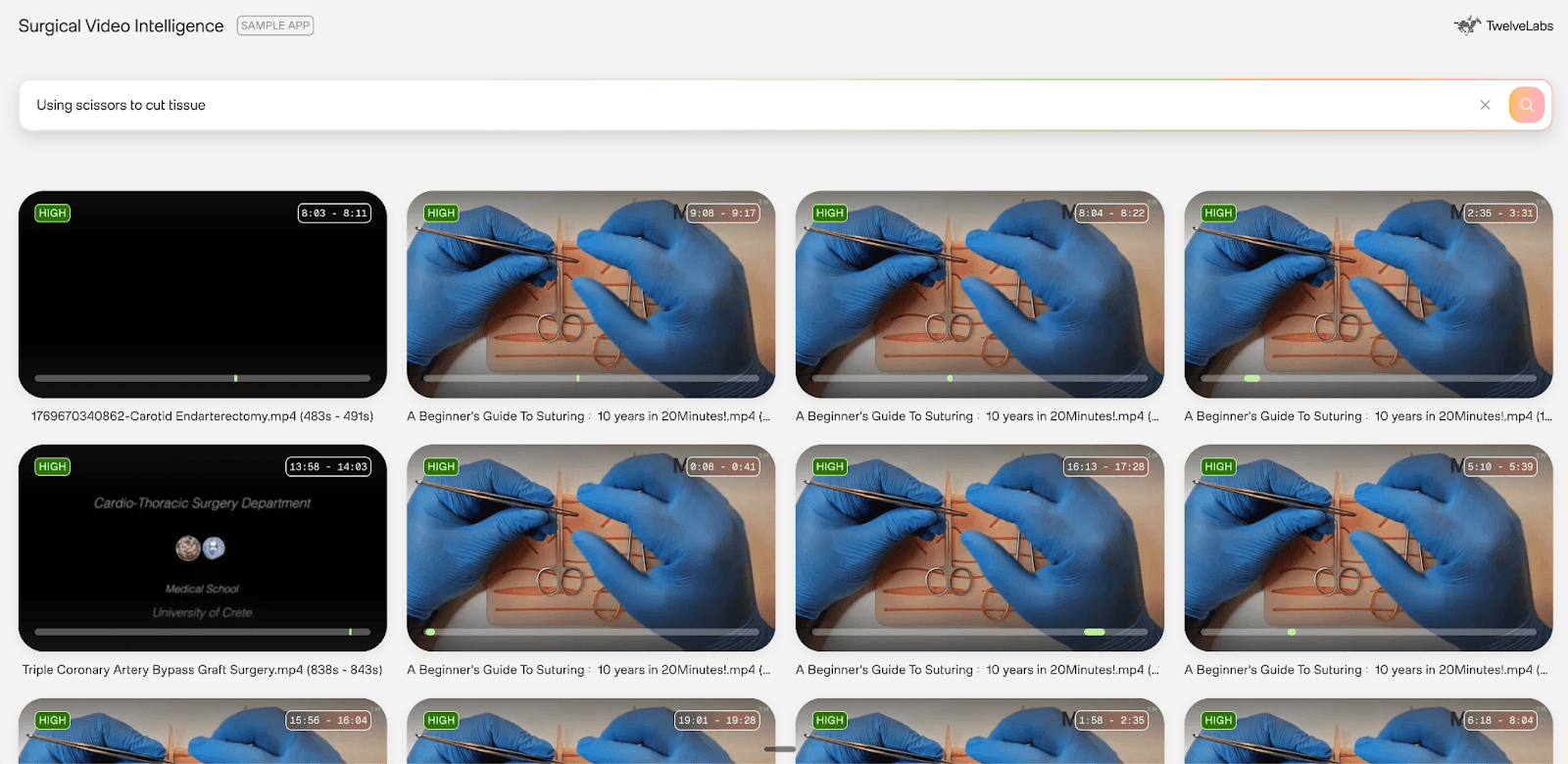

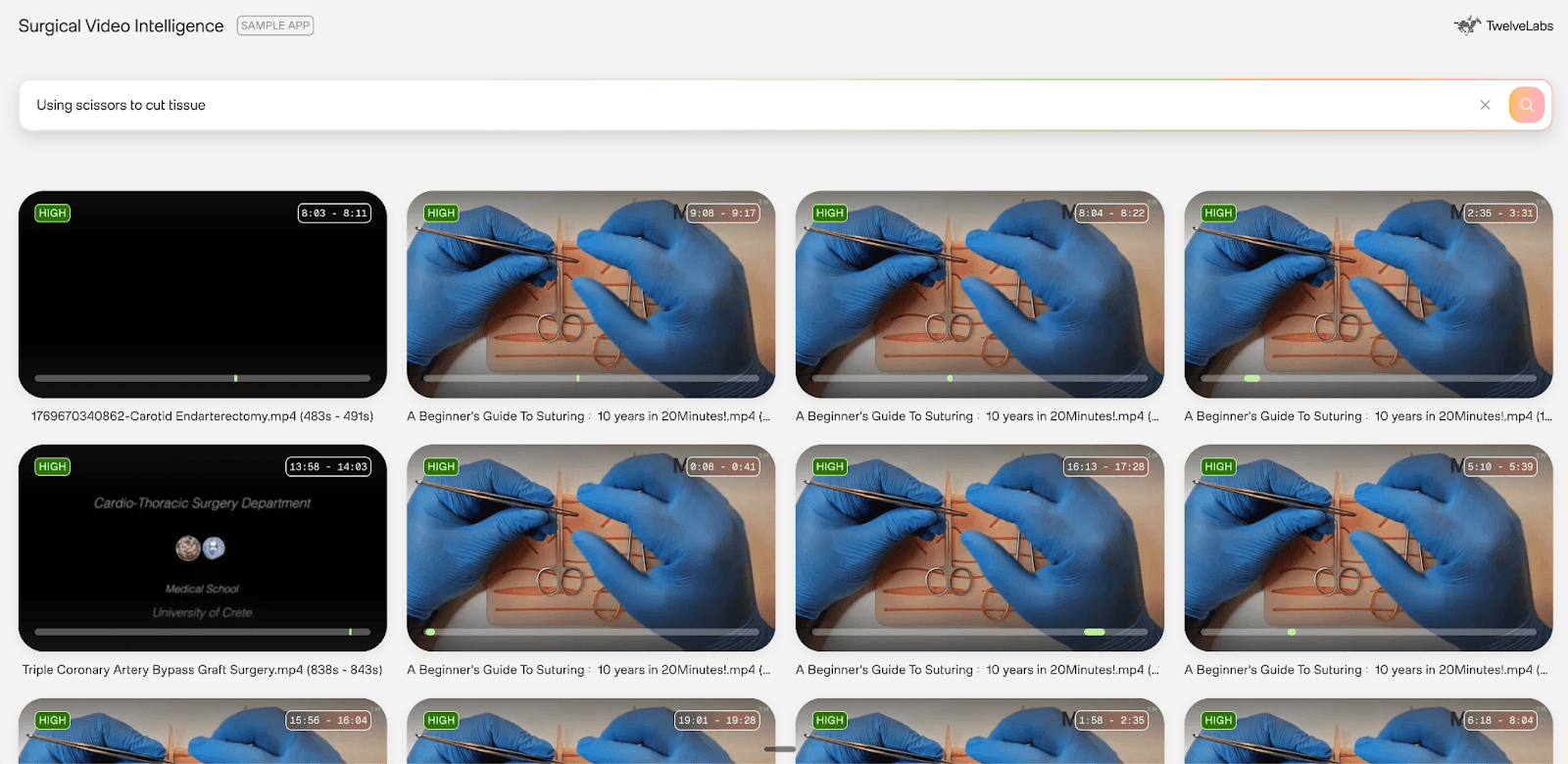

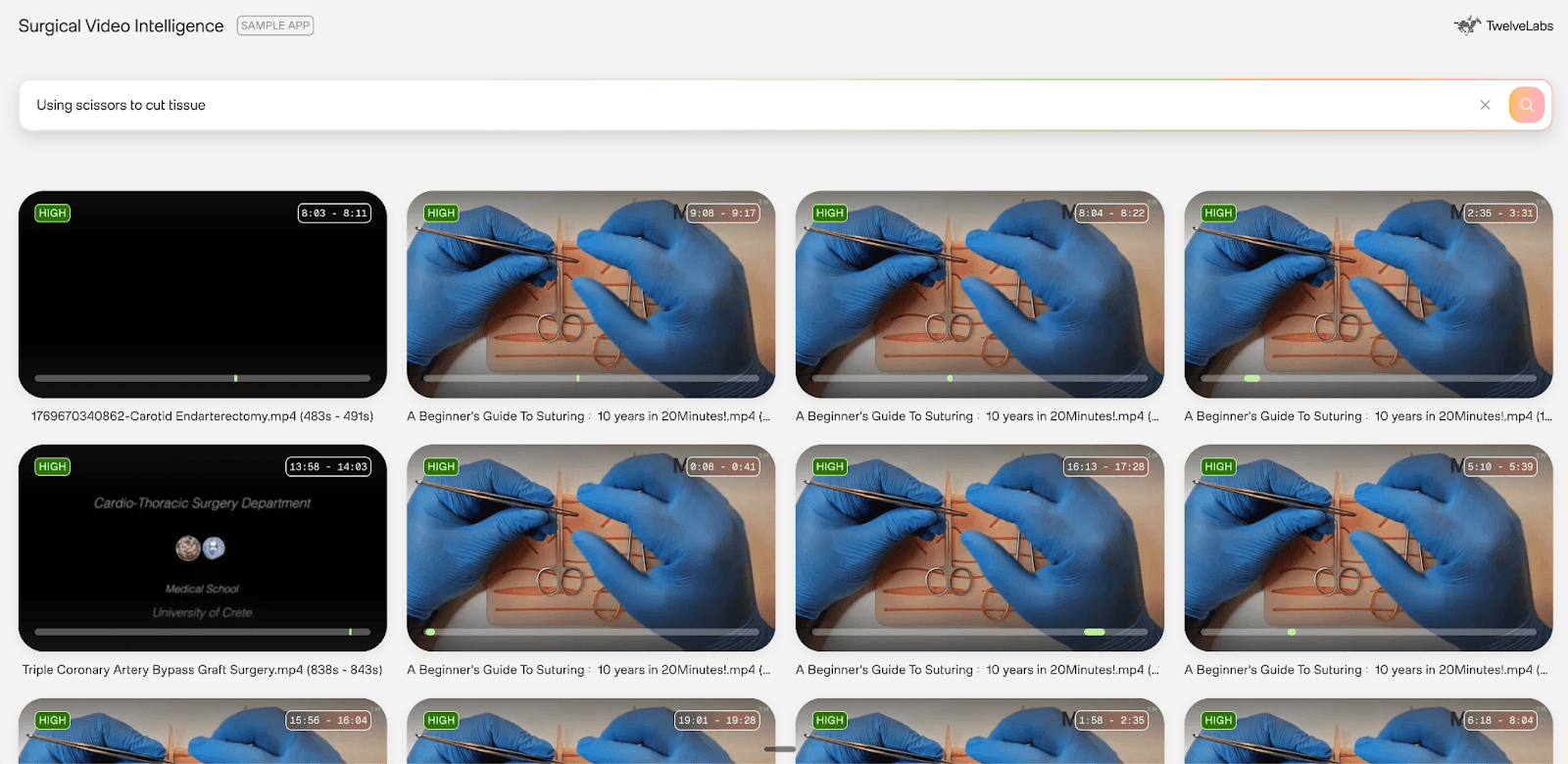

4. Semantic Search

The platform supports natural language search across surgical video libraries, powered by Twelve Labs Marengo's multimodal embeddings. Unlike keyword matching, the search matches on the meaning of the query rather than on exact words.

Example query: "Show me when the surgeon used electrocautery"

Result: Clips at 2:34–2:58 and 5:12–5:45 with 0.92 confidence

For a surgical educator searching "use of monopolar coagulation" across 50+ training videos, Marengo returns all instances with timestamps — enabling rapid compilation of technique montages without watching a single full recording.

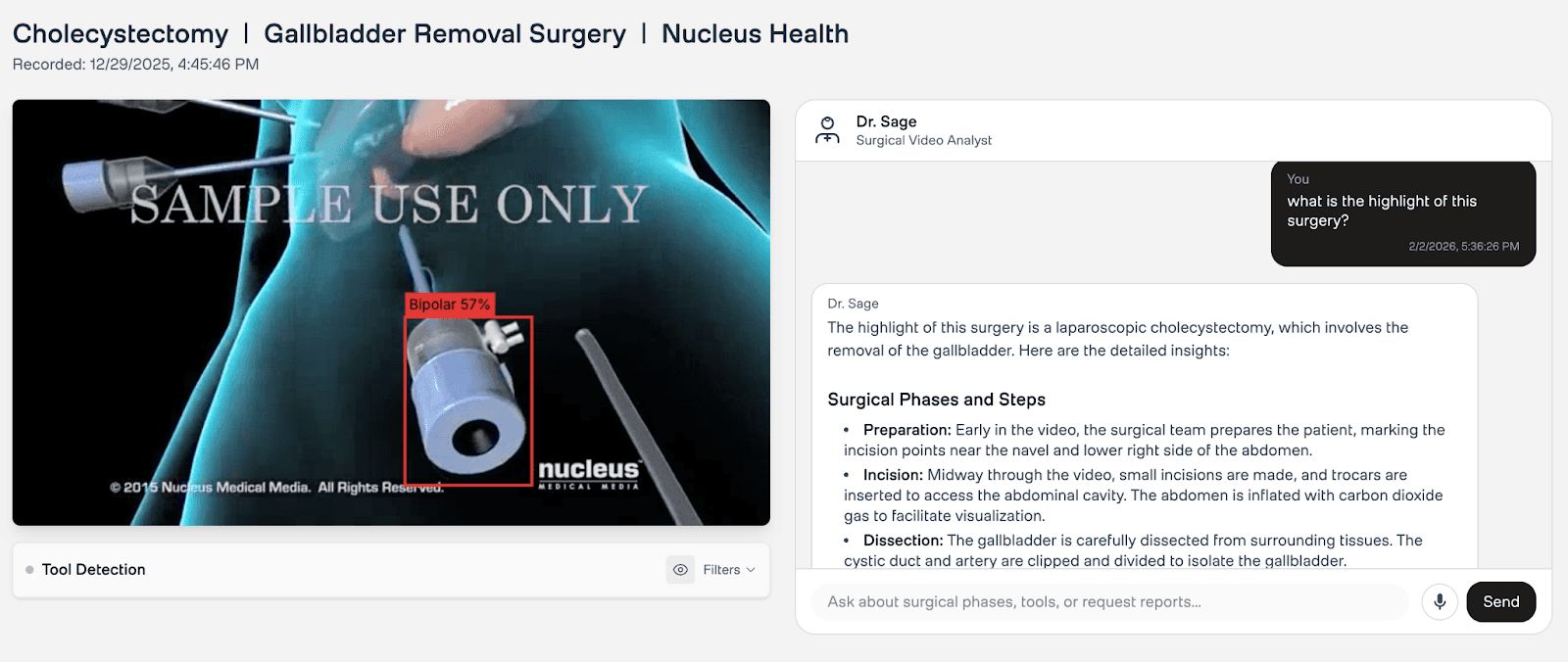

5. Dr. Sage: Conversational Clinical Q&A

After automated analysis completes, Dr. Sage provides a conversational interface for follow-up questions. It has access to both YOLO detections and Twelve Labs video understanding, enabling queries like "What tools were used during dissection?" or "Explain the technique used at 3:45." This bridges the gap between automated reports and the ad-hoc questions that arise during surgical review and training sessions.
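A question such as "What tools were used during dissection?" combines two data sources: the chapter boundaries from the Analyze API and the timestamped YOLO detections. As a rough sketch of that join (the function name `toolsDuringChapter` is hypothetical and not taken from the repository; the field names follow the JSON shapes shown in this article), the detections that fall inside a chapter can be counted per instrument:

```javascript
// Hypothetical helper: counts detections per instrument inside one chapter.
// `detections` follows the detections.json shape ({ timestamp, tools: [{ class_name }] }),
// `chapter` follows the chapter shape ({ startSec, endSec }).
function toolsDuringChapter(detections, chapter) {
  const counts = {};
  for (const frame of detections) {
    // Keep frames in the half-open range [startSec, endSec).
    if (frame.timestamp < chapter.startSec || frame.timestamp >= chapter.endSec) continue;
    for (const tool of frame.tools || []) {
      counts[tool.class_name] = (counts[tool.class_name] || 0) + 1;
    }
  }
  return counts;
}
```

A summary like this could be added to the injected context so the model sees per-phase instrument counts rather than only whole-video totals.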

Deployment Architecture

The application is designed as a distributed system with clear separation between compute-intensive inference and user-facing operations.

Infrastructure Overview

Frontend (Vercel): Next.js application serving the UI and API routes

Backend (Railway): FastAPI server running YOLO inference

Storage (Vercel Blob): Centralized JSON storage for all analysis results

AI Processing (Twelve Labs): Cloud-based video understanding

Data Flow

When a user uploads a surgical video, two parallel pipelines activate:

Computer Vision Pipeline (Railway)

User uploads video → Frontend sends to Railway backend

YOLO samples the video, running inference on every 120th frame for efficiency

Backend generates detections.json:

    {
      "detections": [
        {
          "frame": 120,
          "timestamp": 5.0,
          "tools": [
            {"class_name": "Grasper", "confidence": 0.95, "bbox": {...}}
          ]
        }
      ]
    }

Results upload to Vercel Blob at path:

detections/{videoId}.json

Video Intelligence Pipeline (Twelve Labs + Vercel)

Video is indexed by Twelve Labs (Marengo for embeddings, Pegasus for understanding)

Frontend API routes trigger AI generation:

SOAP Note: POST /api/analysis/{videoId}/soap

Chapters/Timeline: POST /api/timeline

Results are saved to Vercel Blob at:

analysis/{videoId}.json

Vercel Blob: Centralized State

Both pipelines converge at Vercel Blob, a key-value store for JSON files. This eliminates database complexity while maintaining fast lookups.

Storage schema:
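The only storage details stated in this article are the two path patterns, `detections/{videoId}.json` and `analysis/{videoId}.json`. The sketch below shows one way to keep those keys in a single place. The helper names and the merge step are assumptions for illustration, not the repository's code.

```javascript
// Hypothetical helpers: only the two path patterns come from the article.
function detectionsKey(videoId) {
  return `detections/${videoId}.json`;
}

function analysisKey(videoId) {
  return `analysis/${videoId}.json`;
}

// Assumed behavior: merge a partial result (e.g. { operative_note } or { chapters })
// into the existing analysis document so one route does not overwrite another's fields.
function mergeAnalysis(existing, partial) {
  return { ...(existing || {}), ...partial };
}
```

Keeping key construction in one module means the backend upload and the frontend reads cannot drift apart on path format.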

How Twelve Labs Powers Intelligence

YOLO gives us the what and when: specific tools, exact timestamps. Twelve Labs gives us the why and how: phase recognition, technique analysis, contextual reasoning. Here is how we use two Twelve Labs APIs across three features: Analyze (for SOAP generation and chapter segmentation) and Search (for semantic retrieval).

1. Analyze API: SOAP Note Generation

The Analyze API is Twelve Labs' multimodal generation endpoint. We use it to create first-person operative notes grounded in YOLO detection data.

Source: frontend/src/app/api/analysis/[videoId]/soap/route.js

    // 1. Fetch YOLO detection data from Vercel Blob
    const toolData = await fetchToolDetection(videoId);

    // 2. Format detections as readable text for the prompt
    const toolContext = formatToolDetectionForPrompt(toolData);
    // Example output: "DETECTED TOOLS: Grasper at 0:45, 1:20, 2:30..."

    // 3. Enrich the SOAP generation prompt with hard detection data
    const enrichedPrompt = `${soapPrompt}

    ## REFERENCE DATA
    ${toolContext}

    Use this tool detection data to provide accurate tool names and usage times.`;

    // 4. Call Twelve Labs Analyze API
    const response = await getTwelveLabsClient().analyze({
      videoId: videoId,
      prompt: enrichedPrompt,
      temperature: 0.2 // Low temperature for factual, not creative, output
    });

    // 5. Parse JSON response and save to Vercel Blob
    const parsedData = JSON.parse(response.data);
    await saveToBlob(videoId, { operative_note: parsedData.operative_note });

Why this works: By injecting YOLO detections into the prompt, we ground the model's reasoning in verified visual evidence. The model is far less likely to claim "I used a clipper at 5:30" when the reference data shows no clipper was detected, although prompt grounding reduces hallucination rather than ruling it out. This is the key architectural pattern: using computer vision outputs as factual constraints on multimodal generation.
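The article does not show the body of `formatToolDetectionForPrompt`. A minimal sketch that produces the example output format above could look like the following. The confidence cutoff (`minConfidence`) and the de-duplication of repeated times are assumptions, not confirmed behavior of the original helper.

```javascript
// Format seconds as m:ss, e.g. 45 -> "0:45".
function formatTime(totalSeconds) {
  const whole = Math.floor(totalSeconds);
  const minutes = Math.floor(whole / 60);
  const seconds = String(whole % 60).padStart(2, "0");
  return `${minutes}:${seconds}`;
}

// Sketch of the helper named in the article; the internals here are assumed.
function formatToolDetectionForPrompt(toolData, minConfidence = 0.5) {
  if (!toolData || !Array.isArray(toolData.detections)) return "";

  // class_name -> ordered set of m:ss strings
  const timesByTool = new Map();
  for (const frame of toolData.detections) {
    for (const tool of frame.tools || []) {
      if (tool.confidence < minConfidence) continue; // drop weak detections
      if (!timesByTool.has(tool.class_name)) timesByTool.set(tool.class_name, new Set());
      timesByTool.get(tool.class_name).add(formatTime(frame.timestamp));
    }
  }
  if (timesByTool.size === 0) return "";

  const parts = [...timesByTool].map(
    ([name, times]) => `${name} at ${[...times].join(", ")}`
  );
  return `DETECTED TOOLS: ${parts.join("; ")}`;
}
```

Returning an empty string when nothing is detected lets the caller skip the reference-data section entirely instead of injecting an empty heading.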

2. Analyze API: Timeline Generation (Structured Output)

We also use the Analyze API for chapter generation by leveraging the responseFormat parameter to request structured JSON output. This provides schema-validated responses, eliminating fragile string parsing.

Source: frontend/src/app/api/timeline/route.js

    const response = await getTwelveLabsClient().analyze({
      videoId: videoId,
      prompt: "Divide this surgery into distinct phases with medical terminology. For each phase, provide a title, summary, start time, and end time.",
      responseFormat: {
        type: "json_schema",
        jsonSchema: {
          type: "object",
          properties: {
            chapters: {
              type: "array",
              items: {
                type: "object",
                properties: {
                  chapterNumber: { type: "number" },
                  chapterTitle: { type: "string" },
                  chapterSummary: { type: "string" },
                  startSec: { type: "number" },
                  endSec: { type: "number" }
                },
                required: ["chapterNumber", "chapterTitle", "startSec", "endSec"]
              }
            }
          }
        }
      }
    });

    // Parse the structured JSON response
    const parsedData = JSON.parse(response.data);
    // parsedData.chapters contains the chapter array

Example output:

    {
      "chapters": [
        {
          "chapterNumber": 1,
          "chapterTitle": "Preoperative Setup and Positioning",
          "chapterSummary": "Patient positioned and prepped for procedure",
          "startSec": 0,
          "endSec": 163
        },
        {
          "chapterNumber": 2,
          "chapterTitle": "Dissection and Control of Carotid Arteries",
          "startSec": 163,
          "endSec": 326
        }
      ]
    }

Why this works: The responseFormat parameter ensures the model returns valid, parseable JSON matching an exact schema. No regex, no post-processing heuristics — the structured output is ready for direct use in the timeline UI.
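A schema constrains the shape of the response, not whether the times are plausible. As an optional extra, and not something the article describes, a small sanity check can flag chapters whose times are inverted, overlapping, or outside the video before they reach the timeline UI. The function name `checkChapters` and the `videoDurationSec` argument are hypothetical.

```javascript
// Returns a list of human-readable problems; an empty list means the chapters look sane.
function checkChapters(chapters, videoDurationSec) {
  const problems = [];
  // Sort a copy by start time so overlap checks compare neighbours.
  const sorted = [...chapters].sort((a, b) => a.startSec - b.startSec);

  sorted.forEach((chapter, index) => {
    const label = `Chapter ${chapter.chapterNumber}`;
    if (!(chapter.startSec < chapter.endSec)) {
      problems.push(`${label}: startSec must be before endSec`);
    }
    if (chapter.startSec < 0 || chapter.endSec > videoDurationSec) {
      problems.push(`${label}: outside video bounds`);
    }
    const previous = sorted[index - 1];
    if (previous && chapter.startSec < previous.endSec) {
      problems.push(`${label}: overlaps chapter ${previous.chapterNumber}`);
    }
  });
  return problems;
}
```

Applied to the example output above with a 326-second video, this returns an empty list, since the two chapters are contiguous and in bounds.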

3. Search API: Semantic Retrieval with Marengo

The Search API uses Marengo's multimodal embeddings to find specific moments in video based on natural language queries.

Source: frontend/src/app/api/search/route.js

    const response = await getTwelveLabsClient().search.query({
      indexId: process.env.NEXT_PUBLIC_TWELVELABS_MARENGO_INDEX_ID,
      searchOptions: ['visual', 'audio'], // Search both modalities
      queryText: query, // e.g., "clipping of cystic artery"
      groupBy: "clip",
      threshold: "low" // Balance precision vs. recall
    });

    // Iterate through results
    for await (const clip of response) {
      console.log(`Found at ${clip.start}s - ${clip.end}s (confidence: ${clip.confidence})`);
      console.log(`Thumbnail: ${clip.thumbnailUrl}`);
    }

Why this works: Unlike keyword matching, Marengo understands surgical context. A search for "clipping of cystic artery" returns results even when no one says those exact words — the model recognizes the visual and procedural pattern. This enables building searchable surgical video archives without manual tagging.
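For display, raw second offsets are easier to read as m:ss ranges in playback order. The sketch below assumes the clips have already been collected into a plain array of objects with the `start`, `end`, and `confidence` fields read in the loop above. The function name `summarizeClips` is hypothetical, and `confidence` is passed through as-is without assuming its type.

```javascript
// Format seconds as m:ss, e.g. 154 -> "2:34".
function formatTime(totalSeconds) {
  const whole = Math.floor(totalSeconds);
  const minutes = Math.floor(whole / 60);
  const seconds = String(whole % 60).padStart(2, "0");
  return `${minutes}:${seconds}`;
}

// Sort clips chronologically (without mutating the input) and render one line per clip.
function summarizeClips(clips) {
  return [...clips]
    .sort((a, b) => a.start - b.start)
    .map(
      (clip) =>
        `${formatTime(clip.start)}-${formatTime(clip.end)} (confidence: ${clip.confidence})`
    );
}
```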

Step-by-Step Implementation

Prerequisites

Node.js 18+ and Python 3.10+

Twelve Labs API Key: Get one from the Playground

Computer Vision Model: We use a YOLOv8 model fine-tuned on the Cholec80 dataset, which contains 80 cholecystectomy surgeries annotated with 7 instrument classes

Step 1: Setting Up the Backend (Railway)

The YOLO detection backend is a FastAPI server that accepts video uploads and returns JSON detections.

    # backend/main.py
    from fastapi import FastAPI, UploadFile

    app = FastAPI()

    @app.post("/detect/upload")
    async def detect_tools_upload(video_id: str, video: UploadFile):
        # (Elided in this excerpt: saving the upload to temp_video_path
        # and loading blob_token from configuration.)

        # Process video with YOLO
        results_data = run_inference(temp_video_path, video_id)

        # Upload results to Vercel Blob via Frontend API
        await upload_results_to_blob(video_id, results_data, blob_token)

        return {"status": "completed", "data": results_data}

The backend processes every 120th frame (~1 frame per 5 seconds at 24fps), balancing detection accuracy against processing speed. For a 30-minute surgical video, this produces approximately 360 analyzed frames — enough to build a comprehensive instrument usage timeline without the cost of full frame-by-frame inference.
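The sampling arithmetic above can be checked with a few lines. This sketch assumes sampling starts at frame 0 and proceeds in steps of 120. The function names are illustrative only.

```javascript
const FRAME_STRIDE = 120; // from the article: every 120th frame is analyzed

// Timestamp in seconds of a given frame index.
function frameToTimestamp(frameIndex, fps) {
  return frameIndex / fps;
}

// Number of frames analyzed when sampling indices 0, stride, 2*stride, ...
function sampledFrameCount(durationSec, fps, stride = FRAME_STRIDE) {
  const totalFrames = Math.floor(durationSec * fps);
  return Math.ceil(totalFrames / stride);
}

// Seconds of video represented by each sampled frame.
function sampleIntervalSec(fps, stride = FRAME_STRIDE) {
  return stride / fps;
}
```

At 24 fps a 30-minute video (1,800 seconds) has 43,200 frames, giving 360 samples at 5-second spacing. At 30 fps the same stride would give 450 samples at 4-second spacing, so the interval depends on the source frame rate.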

Step 2: Frontend API Routes (Vercel)

The Next.js API routes orchestrate the intelligence layer. Three key endpoints:

GET /api/detect-tools/{videoId}: Retrieves YOLO detections from Vercel Blob

POST /api/analysis/{videoId}/soap: Generates SOAP notes using Twelve Labs Analyze API

POST /api/timeline: Creates chapter segmentation using Twelve Labs Analyze API

Each route follows a consistent three-step pattern: fetch YOLO data from Blob (if available), call the Twelve Labs API with enriched prompts, and save results back to Blob for caching.
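The three-step pattern can be sketched as one function with its collaborators passed in, which also makes it testable without network access. Everything here is an illustrative assumption: `runEnrichedAnalysis` and the `deps` object are hypothetical names, and the four injected functions stand in for the Blob fetch, the prompt formatter, the Twelve Labs call, and the Blob save.

```javascript
// deps = { fetchDetections, formatContext, callModel, saveResult } (all hypothetical).
async function runEnrichedAnalysis(videoId, basePrompt, deps) {
  // Step 1: fetch YOLO data if available; a missing file should not block generation.
  let toolContext = "";
  try {
    const toolData = await deps.fetchDetections(videoId);
    toolContext = toolData ? deps.formatContext(toolData) : "";
  } catch {
    toolContext = "";
  }

  // Step 2: call the model, enriching the prompt only when there is context to add.
  const prompt = toolContext
    ? `${basePrompt}\n\n## REFERENCE DATA\n${toolContext}`
    : basePrompt;
  const result = await deps.callModel(videoId, prompt);

  // Step 3: save the result so later requests can reuse it.
  await deps.saveResult(videoId, result);
  return result;
}
```

Treating a failed detection fetch as "no context" mirrors the "(if available)" wording above: the route degrades to an un-enriched prompt instead of failing.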

Step 3: Dr. Sage Chat Integration

The conversational interface enriches user queries with visual evidence before sending them to Twelve Labs.

Source: frontend/src/app/api/analysis/route.js

    import { TwelveLabs } from 'twelvelabs-js';

    // Helper: Convert raw JSON detections to a chat-friendly summary
    function formatToolDetectionForChat(toolData) {
      // ... logic to aggregate frames into time ranges ...
      // Output example:
      // "- Grasper: 450 detections (0:05 - 12:30)"
      // "- Hook: 120 detections (4:15 - 8:00)"
      return summary;
    }

    export async function POST(request) {
      const { userQuery, videoId } = await request.json();

      // 1. Fetch the JSON data produced by our YOLO backend
      const toolData = await fetchToolDetection(videoId);

      // 2. Format it into natural language context
      // This turns thousands of data points into a readable summary for the AI
      const toolContext = formatToolDetectionForChat(toolData);

      // 3. Enrich the user's query
      // We tag this as system-injected data so the model treats it as ground truth
      const enrichedQuery = toolContext
        ? `${userQuery}\n\n[SYSTEM INJECTED DATA - DO NOT IGNORE]\n${toolContext}`
        : userQuery;

      // 4. Call Twelve Labs Pegasus
      const client = new TwelveLabs({ apiKey: process.env.TWELVELABS_API_KEY });
      const response = await client.analyze({
        videoId: videoId,
        prompt: enrichedQuery,
        temperature: 0.2 // Low temperature for factual accuracy
      });

      return new Response(JSON.stringify(response));
    }
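The aggregation logic inside `formatToolDetectionForChat` is elided in the listing. One plausible way to fill it in, matching the output example in the comments, is to track a count and the first and last timestamp per instrument. This is a sketch under that assumption, not the repository's implementation.

```javascript
// Format seconds as m:ss, e.g. 750 -> "12:30".
function formatTime(totalSeconds) {
  const whole = Math.floor(totalSeconds);
  const minutes = Math.floor(whole / 60);
  const seconds = String(whole % 60).padStart(2, "0");
  return `${minutes}:${seconds}`;
}

// Assumed aggregation: per instrument, count detections and record first/last timestamps.
function formatToolDetectionForChat(toolData) {
  if (!toolData || !Array.isArray(toolData.detections)) return "";

  const stats = new Map(); // class_name -> { count, first, last }
  for (const frame of toolData.detections) {
    for (const tool of frame.tools || []) {
      const entry = stats.get(tool.class_name) ?? {
        count: 0,
        first: frame.timestamp,
        last: frame.timestamp,
      };
      entry.count += 1;
      entry.first = Math.min(entry.first, frame.timestamp);
      entry.last = Math.max(entry.last, frame.timestamp);
      stats.set(tool.class_name, entry);
    }
  }

  // Most frequently detected instruments first.
  return [...stats]
    .sort((a, b) => b[1].count - a[1].count)
    .map(
      ([name, s]) =>
        `- ${name}: ${s.count} detections (${formatTime(s.first)} - ${formatTime(s.last)})`
    )
    .join("\n");
}
```

An empty string is returned when there is nothing to report, which is falsy and so triggers the plain `userQuery` fallback in the route above.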

Step 4: Visualizing the Timeline

Raw detection data is useful for the AI, but clinicians need visuals. We map detection timestamps to a React timeline component where each "swimlane" represents a surgical instrument class:

Red: Bipolar (Cautery)

Green: Hook (Dissection)

Yellow: Grasper (Manipulation)

A surgical educator reviewing a trainee's cholecystectomy can instantly spot patterns — prolonged cautery before adequate dissection, or unusual instrument sequencing — and click any moment to verify in the video. The frontend maps the JSON detections array to CSS-positioned elements on the timeline bar.
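The mapping from detections to positioned timeline elements can be sketched as follows. Consecutive sampled frames containing the same instrument are merged into one segment, and each segment is expressed as `left` and `width` percentages of the video duration. The function name, the 5-second default sample interval, and the 1.5x gap tolerance are assumptions for illustration.

```javascript
// Build per-instrument segments with CSS-ready percentages.
// `detections` follows the detections.json shape: [{ timestamp, tools: [{ class_name }] }].
function buildSwimlanes(detections, videoDurationSec, sampleIntervalSec = 5) {
  const segmentsByTool = {};
  const frames = [...detections].sort((a, b) => a.timestamp - b.timestamp);

  for (const frame of frames) {
    // De-duplicate so two graspers in one frame still produce one lane entry.
    const names = new Set((frame.tools || []).map((tool) => tool.class_name));
    for (const name of names) {
      if (!segmentsByTool[name]) segmentsByTool[name] = [];
      const segments = segmentsByTool[name];
      const last = segments[segments.length - 1];
      // Extend the current segment if this sample directly follows the previous one.
      if (last && frame.timestamp - last.end <= sampleIntervalSec * 1.5) {
        last.end = frame.timestamp;
      } else {
        segments.push({ start: frame.timestamp, end: frame.timestamp });
      }
    }
  }

  const lanes = {};
  for (const [name, segments] of Object.entries(segmentsByTool)) {
    lanes[name] = segments.map(({ start, end }) => {
      // Each sample stands for one interval of video, clamped to the video's end.
      const visibleUntil = Math.min(end + sampleIntervalSec, videoDurationSec);
      return {
        start,
        end,
        leftPct: (start * 100) / videoDurationSec,
        widthPct: ((visibleUntil - start) * 100) / videoDurationSec,
      };
    });
  }
  return lanes;
}
```

In a React component, each segment could then be rendered as an absolutely positioned element with `style={{ left: leftPct + '%', width: widthPct + '%' }}` inside its instrument's lane.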

Conclusion

By pairing precise computer vision with contextual multimodal reasoning, this platform converts surgical video from passive recordings into structured, searchable clinical data.

YOLO delivers the what and when: specific instruments localized with bounding boxes and confidence scores at exact timestamps.

Twelve Labs delivers the why and how: phase recognition, technique analysis, and operative note generation grounded in full-procedure context.

The critical design pattern — injecting verified CV detections as factual constraints on multimodal generation — is applicable well beyond surgery. Any domain where you need both precise object identification and contextual reasoning (manufacturing QA, sports analytics, security review) can benefit from this hybrid architecture.

Next steps to explore:

Multi-procedure analytics across a department's full surgical library

Real-time intraoperative assistance with streaming YOLO detection

Automated training curriculum generation from annotated case libraries

Get started:

Surgeons and residents routinely spend 3–5 hours per procedure reviewing laparoscopic footage frame-by-frame — logging instruments, identifying surgical phases, and writing operative reports by hand. Across a teaching hospital processing hundreds of procedures monthly, that manual review adds up to thousands of hours of clinical time diverted from patient care.

In this tutorial, we build Surgical Video Intelligence, a platform that treats surgical video as a rich, queryable dataset. The core architectural insight: pair YOLO (You Only Look Once) for precise object detection with Twelve Labs' multimodal video understanding API to create a system that can both see which tools are on screen and understand the surgical context around them.

⭐️ What We're Building: A Next.js application that automatically:

Detects surgical tools in real-time with bounding boxes and timestamps

Generates SOAP operative notes (Subjective, Objective, Assessment, Plan) from video evidence

Segments surgeries into chapters (e.g., "Dissection," "Closure")

Enables semantic search across video libraries (e.g., "find all cauterization moments")

Provides Dr. Sage, a conversational AI assistant for follow-up clinical Q&A

📌 Try the live demo | View the GitHub repository

System Architecture

Surgical video analysis requires two fundamentally different capabilities: pixel-precise instrument identification and contextual understanding of what those instruments are doing within a procedure. No single model handles both well.

Our architecture separates these concerns into two parallel pipelines that converge at inference time.

Video Ingestion: The user uploads a laparoscopic surgery video.

Tool Detection: A YOLOv8 model (fine-tuned on 7 distinct surgical instrument classes) scans the video and outputs a structured JSON file of detections with timestamps and bounding boxes.

Video Indexing: The video is indexed by Twelve Labs to enable semantic search (Marengo embeddings) and contextual analysis (Pegasus reasoning).

Context Fusion: When the system generates notes or answers questions, YOLO's detection data is injected into the prompt alongside Twelve Labs' video understanding — grounding the AI's reasoning in verified visual evidence.

This hybrid approach matters because each technology compensates for the other's blind spots. YOLO can identify a grasper with 95% confidence at frame 120, but it cannot tell you whether that grasper is being used for retraction or dissection. Twelve Labs can reason about surgical technique and phase progression, but benefits from hard detection data to prevent hallucinating instrument usage that never occurred.

Application Demo

1. Real-Time Tool Detection

The main analysis view provides a synchronized experience. On the left, the video player overlays real-time tool bounding boxes detected by YOLO. Below, a "swimlane" timeline visualizes exactly when each instrument appears — giving educators an instant, scannable map of instrument usage across the entire procedure.

2. Automatic SOAP Note Generation

Upon video upload and indexing, the system generates a first-person operative note using the Twelve Labs Analyze API, enriched with YOLO tool detection data. No manual input required. The resulting SOAP note includes accurate instrument references anchored to specific timestamps, reducing documentation time from hours to minutes.

3. Surgical Phase Segmentation

The Twelve Labs Analyze API automatically breaks the surgery into distinct phases — "Preparation," "Dissection," "Closure" — using structured JSON output. These chapters appear on an interactive timeline, enabling quick navigation to specific surgical stages. For training programs, this transforms a 90-minute recording into a navigable curriculum.

4. Semantic Search

Natural language search across surgical video libraries, powered by Twelve Labs Marengo's multimodal embeddings. Unlike keyword matching, this understands surgical context semantically.

Example query: "Show me when the surgeon used electrocautery" Result: Clips at 2:34–2:58 and 5:12–5:45 with 0.92 confidence

For a surgical educator searching "use of monopolar coagulation" across 50+ training videos, Marengo returns all instances with timestamps — enabling rapid compilation of technique montages without watching a single full recording.

5. Dr. Sage: Conversational Clinical Q&A

After automated analysis completes, Dr. Sage provides a conversational interface for follow-up questions. It has access to both YOLO detections and Twelve Labs video understanding, enabling queries like "What tools were used during dissection?" or "Explain the technique used at 3:45." This bridges the gap between automated reports and the ad-hoc questions that arise during surgical review and training sessions.

Deployment Architecture

The application is designed as a distributed system with clear separation between compute-intensive inference and user-facing operations.

Infrastructure Overview

Frontend (Vercel): Next.js application serving the UI and API routes

Backend (Railway): FastAPI server running YOLO inference

Storage (Vercel Blob): Centralized JSON storage for all analysis results

AI Processing (Twelve Labs): Cloud-based video understanding

Data Flow

When a user uploads a surgical video, two parallel pipelines activate:

Computer Vision Pipeline (Railway)

User uploads video → Frontend sends to Railway backend

YOLO processes the video frame-by-frame (every 120th frame for efficiency)

Backend generates

detections.json:

{ "detections": [ { "frame": 120, "timestamp": 5.0, "tools": [ {"class_name": "Grasper", "confidence": 0.95, "bbox": {...}} ] } ] }

Results upload to Vercel Blob at path:

detections/{videoId}.json

Video Intelligence Pipeline (Twelve Labs + Vercel)

Video is indexed by Twelve Labs (Marengo for embeddings, Pegasus for understanding)

Frontend API routes trigger AI generation:

SOAP Note:

POST /api/analysis/{videoId}/soapChapters/Timeline:

POST /api/timeline

Results are saved to Vercel Blob at:

analysis/{videoId}.json

Vercel Blob: Centralized State

Both pipelines converge at Vercel Blob, a key-value store for JSON files. This eliminates database complexity while maintaining fast lookups.

Storage schema:

How Twelve Labs Powers Intelligence

YOLO gives us the what and when — specific tools, exact timestamps. Twelve Labs gives us the why and how — phase recognition, technique analysis, contextual reasoning. Here is how we use three primary API capabilities: Analyze (for SOAP generation and chapter segmentation) and Search (for semantic retrieval).

1. Analyze API: SOAP Note Generation

The Analyze API is Twelve Labs' multimodal generation endpoint. We use it to create first-person operative notes grounded in YOLO detection data.

Source: frontend/src/app/api/analysis/[videoId]/soap/route.js

// 1. Fetch YOLO detection data from Vercel Blob const toolData = await fetchToolDetection(videoId); // 2. Format detections as readable text for the prompt const toolContext = formatToolDetectionForPrompt(toolData); // Example output: "DETECTED TOOLS: Grasper at 0:45, 1:20, 2:30..." // 3. Enrich the SOAP generation prompt with hard detection data const enrichedPrompt = `${soapPrompt} ## REFERENCE DATA ${toolContext} Use this tool detection data to provide accurate tool names and usage times.`; // 4. Call Twelve Labs Analyze API const response = await getTwelveLabsClient().analyze({ videoId: videoId, prompt: enrichedPrompt, temperature: 0.2 // Low temperature for factual, not creative, output }); // 5. Parse JSON response and save to Vercel Blob const parsedData = JSON.parse(response.data); await saveToBlob(videoId, { operative_note: parsedData.operative_note });

Why this works: By injecting YOLO detections into the prompt, we ground the model's reasoning in verified visual evidence. The AI cannot claim "I used a clipper at 5:30" if YOLO never detected one. This is the key architectural pattern — using computer vision outputs as factual constraints on multimodal generation.

2. Analyze API: Timeline Generation (Structured Output)

We also use the Analyze API for chapter generation by leveraging the responseFormat parameter to request structured JSON output. This provides schema-validated responses, eliminating fragile string parsing.

Source: frontend/src/app/api/timeline/route.js

const response = await getTwelveLabsClient().analyze({ videoId: videoId, prompt: "Divide this surgery into distinct phases with medical terminology. For each phase, provide a title, summary, start time, and end time.", responseFormat: { type: "json_schema", jsonSchema: { type: "object", properties: { chapters: { type: "array", items: { type: "object", properties: { chapterNumber: { type: "number" }, chapterTitle: { type: "string" }, chapterSummary: { type: "string" }, startSec: { type: "number" }, endSec: { type: "number" } }, required: ["chapterNumber", "chapterTitle", "startSec", "endSec"] } } } } } }); // Parse the structured JSON response const parsedData = JSON.parse(response.data); // parsedData.chapters contains the chapter array

Example output:

{ "chapters": [ { "chapterNumber": 1, "chapterTitle": "Preoperative Setup and Positioning", "chapterSummary": "Patient positioned and prepped for procedure", "startSec": 0, "endSec": 163 }, { "chapterNumber": 2, "chapterTitle": "Dissection and Control of Carotid Arteries", "startSec": 163, "endSec": 326 } ] }

Why this works: The responseFormat parameter ensures the model returns valid, parseable JSON matching an exact schema. No regex, no post-processing heuristics — the structured output is ready for direct use in the timeline UI.

3. Search API: Semantic Retrieval with Marengo

The Search API uses Marengo's multimodal embeddings to find specific moments in video based on natural language queries.

Source: frontend/src/app/api/search/route.js

const response = await getTwelveLabsClient().search.query({ indexId: process.env.NEXT_PUBLIC_TWELVELABS_MARENGO_INDEX_ID, searchOptions: ['visual', 'audio'], // Search both modalities queryText: query, // e.g., "clipping of cystic artery" groupBy: "clip", threshold: "low" // Balance precision vs. recall }); // Iterate through results for await (const clip of response) { console.log(`Found at ${clip.start}s - ${clip.end}s (confidence: ${clip.confidence})`); console.log(`Thumbnail: ${clip.thumbnailUrl}`); }

Why this works: Unlike keyword matching, Marengo understands surgical context. A search for "clipping of cystic artery" returns results even when no one says those exact words — the model recognizes the visual and procedural pattern. This enables building searchable surgical video archives without manual tagging.

Step-by-Step Implementation

Prerequisites

Node.js 18+ and Python 3.10+

Twelve Labs API Key: Get one from the Playground

Computer Vision Model: We use a YOLOv8 model fine-tuned on the Cholec80 dataset, which contains 80 cholecystectomy surgeries annotated with 7 instrument classes

Step 1: Setting Up the Backend (Railway)

The YOLO detection backend is a FastAPI server that accepts video uploads and returns JSON detections.

# backend/main.py @app.post("/detect/upload") async def detect_tools_upload(video_id: str, video: UploadFile): # Process video with YOLO results_data = run_inference(temp_video_path, video_id) # Upload results to Vercel Blob via Frontend API await upload_results_to_blob(video_id, results_data, blob_token) return {"status": "completed", "data": results_data}

The backend processes every 120th frame (~1 frame per 5 seconds at 24fps), balancing detection accuracy against processing speed. For a 30-minute surgical video, this produces approximately 360 analyzed frames — enough to build a comprehensive instrument usage timeline without the cost of full frame-by-frame inference.

Step 2: Frontend API Routes (Vercel)

The Next.js API routes orchestrate the intelligence layer. Three key endpoints:

GET /api/detect-tools/{videoId}: Retrieves YOLO detections from Vercel BlobPOST /api/analysis/{videoId}/soap: Generates SOAP notes using Twelve Labs Analyze APIPOST /api/timeline: Creates chapter segmentation using Twelve Labs Analyze API

Each route follows a consistent three-step pattern: fetch YOLO data from Blob (if available), call the Twelve Labs API with enriched prompts, and save results back to Blob for caching.

Step 3: Dr. Sage Chat Integration

The conversational interface enriches user queries with visual evidence before sending them to Twelve Labs.

Source: frontend/src/app/api/analysis/route.js

import { TwelveLabs } from 'twelvelabs-js'; // Helper: Convert raw JSON detections to a chat-friendly summary function formatToolDetectionForChat(toolData) { // ... logic to aggregate frames into time ranges ... // Output example: // "- Grasper: 450 detections (0:05 - 12:30)" // "- Hook: 120 detections (4:15 - 8:00)" return summary; } export async function POST(request) { const { userQuery, videoId } = await request.json(); // 1. Fetch the JSON data produced by our YOLO backend const toolData = await fetchToolDetection(videoId); // 2. Format it into natural language context // This turns thousands of data points into a readable summary for the AI const toolContext = formatToolDetectionForChat(toolData); // 3. Enrich the user's query // We tag this as system-injected data so the model treats it as ground truth const enrichedQuery = toolContext ? `${userQuery}\n\n[SYSTEM INJECTED DATA - DO NOT IGNORE]\n${toolContext}` : userQuery; // 4. Call Twelve Labs Pegasus const client = new TwelveLabs({ apiKey: process.env.TWELVELABS_API_KEY }); const response = await client.analyze({ videoId: videoId, prompt: enrichedQuery, temperature: 0.2 // Low temperature for factual accuracy }); return new Response(JSON.stringify(response)); }

Step 4: Visualizing the Timeline

Raw detection data is useful for the AI, but clinicians need visuals. We map detection timestamps to a React timeline component where each "swimlane" represents a surgical instrument class:

Red: Bipolar (Cautery)

Green: Hook (Dissection)

Yellow: Grasper (Manipulation)

A surgical educator reviewing a trainee's cholecystectomy can instantly spot patterns — prolonged cautery before adequate dissection, or unusual instrument sequencing — and click any moment to verify in the video. The frontend maps the JSON detections array to CSS-positioned elements on the timeline bar.

Conclusion

By pairing precise computer vision with contextual multimodal reasoning, this platform converts surgical video from passive recordings into structured, searchable clinical data.

YOLO delivers the what and when: specific instruments localized with bounding boxes and confidence scores at sampled timestamps.

Twelve Labs delivers the why and how: phase recognition, technique analysis, and operative note generation grounded in full-procedure context.

The critical design pattern — injecting verified CV detections as factual constraints on multimodal generation — is applicable well beyond surgery. Any domain where you need both precise object identification and contextual reasoning (manufacturing QA, sports analytics, security review) can benefit from this hybrid architecture.

Next steps to explore:

Multi-procedure analytics across a department's full surgical library

Real-time intraoperative assistance with streaming YOLO detection

Automated training curriculum generation from annotated case libraries

Get started:



© 2021 - 2026 TwelveLabs, Inc. All Rights Reserved
