Tutorial

Building SAGE: Semantic Video Comparison with TwelveLabs Embeddings

Aahil Shaikh

How do you reliably compare two videos frame-by-frame when traditional pixel-level methods fail with different resolutions, encodings, or camera angles? This comprehensive tutorial walks through building SAGE (Streaming Analysis and Generation Engine), a production-ready video comparison system that leverages TwelveLabs Marengo-2.7 embeddings to detect semantic differences between videos at the segment level. Our system analyzes videos using temporal embeddings that understand content meaning rather than pixel values, enabling accurate comparison across different video formats, resolutions, and shooting conditions. By the end of this tutorial, you'll have built a complete video comparison platform that can identify what's actually different between two videos—not just what looks different.

Jan 6, 2026

17 Minutes

Introduction

You've shot two versions of a training video. Same content, same script, but different takes. One has better lighting, the other has clearer audio. You need to quickly identify exactly where they differ—not just frame-by-frame pixel differences, but actual semantic changes in content, scene composition, or visual elements.

Traditional video comparison tools have a fundamental limitation: they compare pixels, not meaning. This breaks down when videos have:

Different resolutions or aspect ratios

Different encoding settings or compression

Different camera angles or positions

Lighting or color grading differences

Temporal shifts (one video starts a few seconds later)

This is why we built SAGE—a system that understands what's in videos, not just what pixels they contain. Instead of comparing raw video data, SAGE uses TwelveLabs Marengo embeddings to generate semantic representations of video segments, then compares those representations to find meaningful differences.

The key insight? Semantic embeddings capture what matters. A shot of a person walking doesn't need identical pixels—it needs to represent the same action. By comparing embeddings, we can detect when videos differ in content even when pixels differ for technical reasons.

SAGE creates a complete comparison workflow:

Upload videos to S3 using streaming multipart uploads (handles large files efficiently)

Generate embeddings using TwelveLabs Marengo-retrieval-2.7 (2-second segments)

Compare embeddings using cosine distance (finds semantic differences)

Visualize differences on a synchronized timeline with side-by-side playback

Analyze differences with optional AI-powered insights (OpenAI integration)

The result? A system that tells you what changed, not just what looks different.

Prerequisites

Before starting, ensure you have:

Python 3.12+ installed

Node.js 18+ or Bun installed

API Keys:

TwelveLabs API Key (for embedding generation)

OpenAI API Key (optional, for AI analysis)

AWS Account with S3 access configured (for video storage)

Git for cloning the repository

Basic familiarity with Python, FastAPI, Next.js, and AWS S3

The Problem with Pixel-Level Comparison

Here's what we discovered: pixel-level comparison breaks down in real-world scenarios. Consider comparing these two videos:

Video A: 1080p MP4, shot at 30fps, H.264 encoding, natural lighting

Video B: 720p MP4, shot at 24fps, H.265 encoding, studio lighting

A pixel-level comparison would flag almost every frame as "different" even though both videos show the same content. The fundamental issue? Pixels don't represent meaning.
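To make the failure mode concrete, here is a minimal sketch using a tiny synthetic grayscale frame built from plain Python lists rather than real video data. The `brighten` and `fraction_changed` helpers and the tolerance of 10 intensity levels are illustrative assumptions, not part of SAGE.

```python
# Illustrative only: a tiny synthetic "frame" stands in for real video data.

def brighten(frame, amount):
    """Simulate a lighting change by adding a constant to every pixel (clamped to 255)."""
    return [[min(255, px + amount) for px in row] for row in frame]

def fraction_changed(frame_a, frame_b, tolerance=10):
    """Fraction of pixels whose absolute difference exceeds `tolerance`."""
    total = 0
    changed = 0
    for row_a, row_b in zip(frame_a, frame_b):
        for a, b in zip(row_a, row_b):
            total += 1
            if abs(a - b) > tolerance:
                changed += 1
    return changed / total

# Same scene under two lighting conditions: a gradient and a brighter copy of it.
frame_natural = [[(x * 8 + y * 4) % 200 for x in range(16)] for y in range(16)]
frame_studio = brighten(frame_natural, 30)

print(fraction_changed(frame_natural, frame_natural))  # identical frames
print(fraction_changed(frame_natural, frame_studio))   # same content, brighter
```

Every pixel in the brighter copy shifts by 30 levels, so a pixel diff reports the whole frame as changed even though the content is identical.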

Why Traditional Methods Fail

Traditional video comparison approaches suffer from three critical limitations:

Format Sensitivity: Different resolutions, codecs, or frame rates produce false positives

No Temporal Understanding: Frame-by-frame comparison misses temporal context

No Semantic Awareness: Can't distinguish between "different pixels" and "different content"

The Embedding Solution

TwelveLabs Marengo embeddings solve this by representing what's in the video, not what pixels it contains. Each 2-second segment gets converted into a high-dimensional vector that captures:

Visual content (objects, scenes, actions)

Temporal patterns (movement, transitions)

Semantic meaning (what's happening, not how it looks)

Comparing these embeddings tells us when videos differ in content, not just pixels.

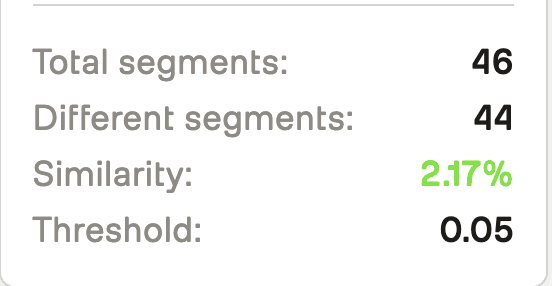

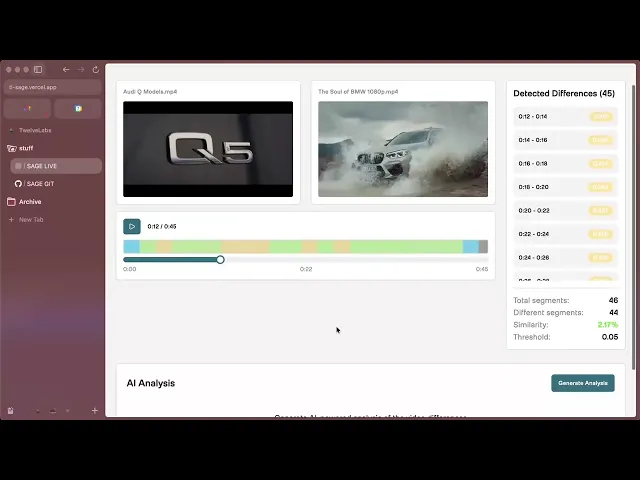

Demo Application

SAGE provides a streamlined video comparison workflow:

Upload Videos: Upload two videos and watch as they're processed with real-time status updates, from S3 upload to embedding generation completion.

Automatic Comparison: Once both videos are ready, SAGE automatically compares them using semantic embeddings, identifying differences at the segment level without manual frame-by-frame review.

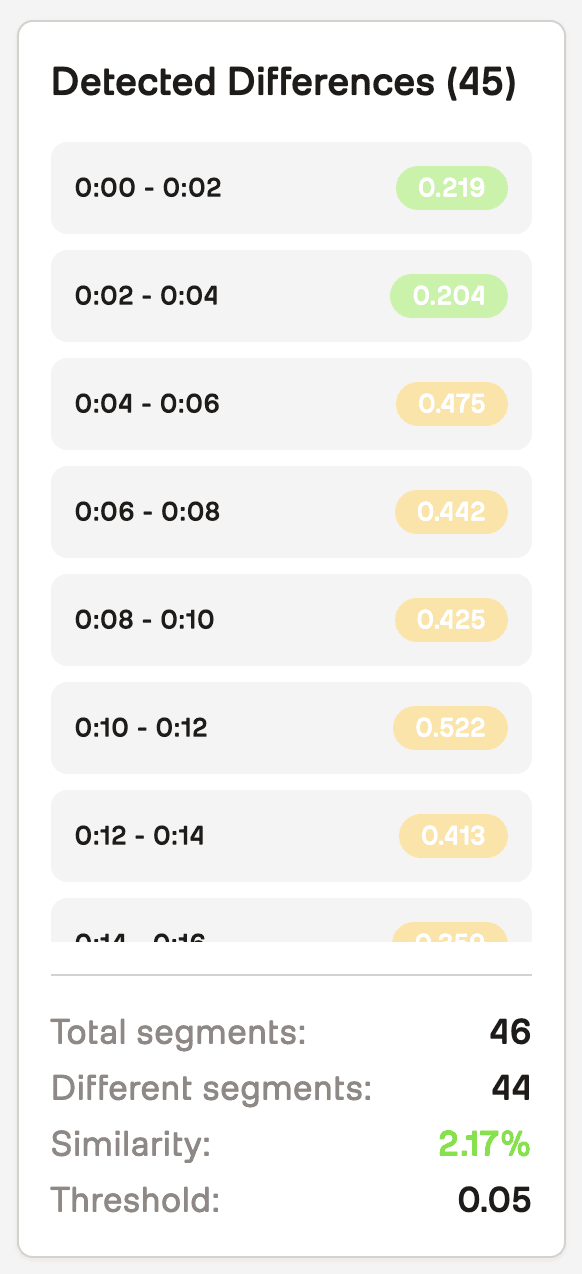

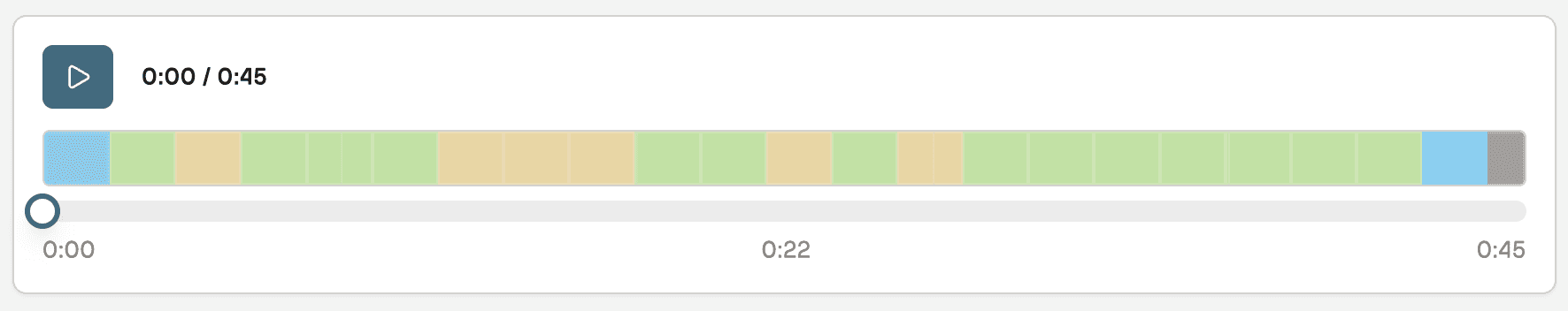

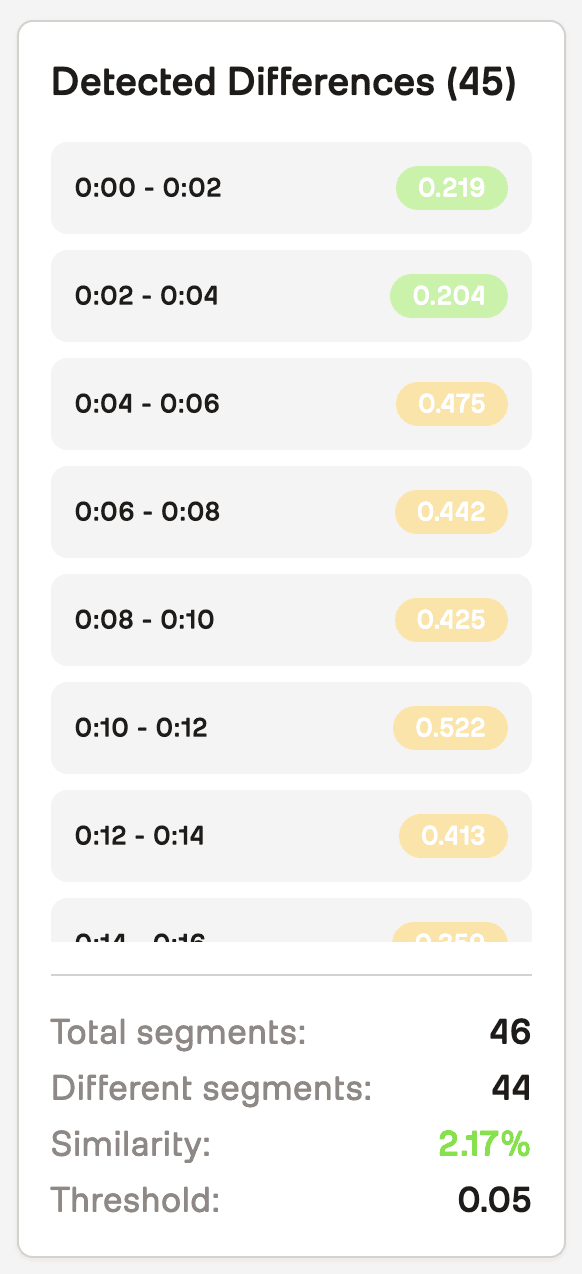

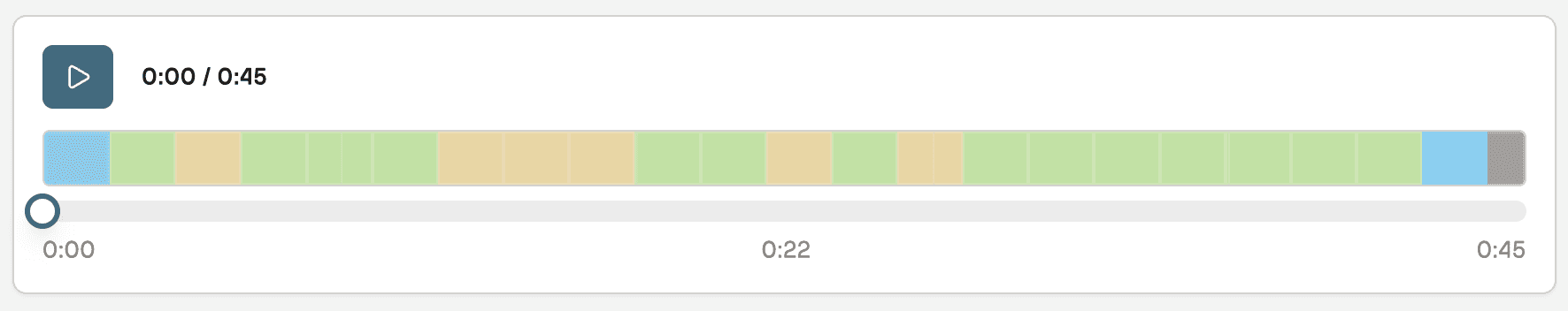

Interactive Analysis: Explore differences through synchronized side-by-side playback, a color-coded timeline showing where videos differ, and detailed segment-by-segment breakdowns with similarity scores.

The magic happens in real-time: watch embedding generation progress, see similarity percentages calculated instantly, and track differences across the timeline with precise timestamps. Jump to any difference marker to see exactly what changed between your videos.

You can explore the complete demo application and find the full source code on GitHub, or view a tutorial video demonstrating how the system works.

How SAGE Works

SAGE implements a sophisticated video comparison pipeline that combines AWS S3 storage, TwelveLabs embeddings, and intelligent visualization:

System Architecture

Preparation Steps

1. Clone the Repository

The code is publicly available here: https://github.com/aahilshaikh-twlbs/SAGE

```bash
git clone https://github.com/aahilshaikh-twlbs/SAGE.git
cd
```

2. Set up Backend

```bash
cd backend
python3 -m venv .venv
source .venv/bin/activate  # Windows: .venv\Scripts\activate
pip install -r requirements.txt
cp env.example .env
# Add your API keys to .env
```

3. Set up Frontend

```bash
cd ../frontend
npm install  # or bun install
cp .env.local.example .env.local
# Set NEXT_PUBLIC_API_URL=http://localhost:8000
```

4. Configure AWS S3

```bash
# Configure AWS credentials (using AWS SSO or IAM)
aws configure --profile dev

# Or set environment variables:
export AWS_ACCESS_KEY_ID=your_access_key
export AWS_SECRET_ACCESS_KEY=your_secret_key
export AWS_REGION
```

5. Start the Application

```bash
# Terminal 1: Backend
cd backend
python app.py

# Terminal 2: Frontend
cd frontend
npm run dev  # or bun dev
```

Once you've completed these steps, navigate to http://localhost:3000 to access SAGE!

Implementation Walkthrough

Let's walk through the core components that power SAGE's video comparison system.

1. Streaming Video Upload to S3

SAGE handles large video files efficiently using streaming multipart uploads:

```python
import uuid

from fastapi import UploadFile

# s3_client (a boto3 S3 client) and S3_BUCKET_NAME are configured elsewhere in the backend.

async def upload_to_s3_streaming(file: UploadFile) -> str:
    """Upload a file to S3 using streaming to avoid memory issues."""
    file_key = f"videos/{uuid.uuid4()}_{file.filename}"

    # Use multipart upload for large files
    response = s3_client.create_multipart_upload(
        Bucket=S3_BUCKET_NAME,
        Key=file_key,
        ContentType=file.content_type
    )
    upload_id = response['UploadId']

    parts = []
    part_number = 1
    chunk_size = 10 * 1024 * 1024  # 10MB chunks

    try:
        while True:
            chunk = await file.read(chunk_size)
            if not chunk:
                break

            part_response = s3_client.upload_part(
                Bucket=S3_BUCKET_NAME,
                Key=file_key,
                PartNumber=part_number,
                UploadId=upload_id,
                Body=chunk
            )
            parts.append({
                'ETag': part_response['ETag'],
                'PartNumber': part_number
            })
            part_number += 1

        # Complete multipart upload
        s3_client.complete_multipart_upload(
            Bucket=S3_BUCKET_NAME,
            Key=file_key,
            UploadId=upload_id,
            MultipartUpload={'Parts': parts}
        )
    except Exception:
        # Abort on failure so S3 does not keep the already-uploaded parts around
        s3_client.abort_multipart_upload(
            Bucket=S3_BUCKET_NAME, Key=file_key, UploadId=upload_id
        )
        raise

    return f"s3://{S3_BUCKET_NAME}/{file_key}"
```

Key Design Decisions:

10MB Chunks: Balances upload efficiency with memory usage

Streaming: Processes file in chunks, never loads entire file into memory

Multipart Upload: Required for files >5GB, recommended for files >100MB

Presigned URLs: Generate temporary URLs for TwelveLabs to access videos securely
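The presigned-URL step is not shown in this walkthrough, so here is a minimal sketch of how an `s3://` location could be turned into a temporary HTTPS URL. It assumes a boto3 S3 client is passed in explicitly (SAGE's own `get_s3_presigned_url` takes only the URL) and a one-hour default expiry; `split_s3_url` is a hypothetical helper.

```python
def split_s3_url(s3_url: str) -> tuple[str, str]:
    """Split 's3://bucket/key' into (bucket, key)."""
    prefix = "s3://"
    if not s3_url.startswith(prefix):
        raise ValueError(f"Not an S3 URL: {s3_url!r}")
    bucket, _, key = s3_url[len(prefix):].partition("/")
    if not bucket or not key:
        raise ValueError(f"S3 URL is missing a bucket or key: {s3_url!r}")
    return bucket, key

def get_s3_presigned_url(s3_client, s3_url: str, expires_in: int = 3600) -> str:
    """Return a temporary HTTPS URL for the object (valid for `expires_in` seconds)."""
    bucket, key = split_s3_url(s3_url)
    # generate_presigned_url is the boto3 S3 client method that signs a GET request.
    return s3_client.generate_presigned_url(
        "get_object",
        Params={"Bucket": bucket, "Key": key},
        ExpiresIn=expires_in,
    )
```

The expiry only needs to outlast the time TwelveLabs takes to fetch the file, not the whole embedding job, so a short-lived URL is usually enough.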

2. Embedding Generation with TwelveLabs

SAGE generates embeddings asynchronously using TwelveLabs Marengo-retrieval-2.7:

```python
async def generate_embeddings_async(embedding_id: str, s3_url: str, api_key: str):
    """Asynchronously generate embeddings for a video from S3."""
    # Get TwelveLabs client
    tl = get_twelve_labs_client(api_key)

    # Generate presigned URL for TwelveLabs to access the video
    presigned_url = get_s3_presigned_url(s3_url)

    # Create embedding task using presigned HTTPS URL
    task = tl.embed.task.create(
        model_name="Marengo-retrieval-2.7",
        video_url=presigned_url,
        video_clip_length=2,  # 2-second segments
        video_embedding_scopes=["clip", "video"]
    )

    # Wait for completion with timeout
    task.wait_for_done(sleep_interval=5, timeout=1800)  # 30 minutes

    # Get completed task
    completed_task = tl.embed.task.retrieve(task.id)

    # Validate embeddings were generated
    if not completed_task.video_embedding or not completed_task.video_embedding.segments:
        raise Exception("Embedding generation failed")

    # last_segment was not defined in the original excerpt; taking the final
    # returned segment is an assumption about how the duration is derived.
    last_segment = completed_task.video_embedding.segments[-1]

    # Store embeddings and duration
    embedding_storage[embedding_id].update({
        "status": "completed",
        "embeddings": completed_task.video_embedding,
        "duration": last_segment.end_offset_sec,
        "task_id": task.id
    })
```

Key Features:

2-Second Segments: Balances granularity with processing time

Async Processing: Non-blocking, handles multiple videos via queue

Timeout Handling: 30-minute timeout prevents hanging on problematic videos

Validation: Ensures embeddings cover full video duration
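The function above only checks that some segments came back. As a sketch of what a fuller coverage check might look like, the hypothetical helper below looks for a late start, gaps between segments, and a missing tail. It assumes segments are dicts with `start_offset_sec` and `end_offset_sec`, that the expected duration is known from another source, and that a final partial clip may be dropped.

```python
def validate_segment_coverage(segments, expected_duration, clip_length=2.0, tolerance=0.5):
    """Return a list of problems found; an empty list means coverage looks complete."""
    if not segments:
        return ["no segments returned"]

    problems = []
    ordered = sorted(segments, key=lambda s: s["start_offset_sec"])

    # The first segment should start at (or very near) the beginning of the video.
    if ordered[0]["start_offset_sec"] > tolerance:
        problems.append(f"first segment starts at {ordered[0]['start_offset_sec']}s")

    # Consecutive segments should be contiguous.
    for prev, cur in zip(ordered, ordered[1:]):
        gap = cur["start_offset_sec"] - prev["end_offset_sec"]
        if gap > tolerance:
            problems.append(f"gap of {gap:.1f}s after {prev['end_offset_sec']}s")

    # Allow up to one clip of slack at the end for a dropped partial segment.
    missing = expected_duration - ordered[-1]["end_offset_sec"]
    if missing > clip_length + tolerance:
        problems.append(f"last {missing:.1f}s of video not covered")

    return problems
```

Returning a list of problems rather than a boolean makes it easy to surface a specific error message instead of a silent failure.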

3. Semantic Video Comparison

SAGE compares videos using cosine distance on embeddings:

```python
import numpy as np

async def compare_local_videos(
    embedding_id1: str,
    embedding_id2: str,
    threshold: float = 0.1,
    distance_metric: str = "cosine"
):
    """Compare two videos using their embedding IDs."""
    # Get embedding segments
    segments1 = extract_segments(embedding_storage[embedding_id1])
    segments2 = extract_segments(embedding_storage[embedding_id2])

    differing_segments = []
    min_segments = min(len(segments1), len(segments2))

    # Compare corresponding segments
    for i in range(min_segments):
        seg1 = segments1[i]
        seg2 = segments2[i]

        # Calculate cosine distance
        v1 = np.array(seg1["embedding"], dtype=np.float32)
        v2 = np.array(seg2["embedding"], dtype=np.float32)

        dot = np.dot(v1, v2)
        norm1 = np.linalg.norm(v1)
        norm2 = np.linalg.norm(v2)

        # Cast to a plain float so the result serializes cleanly to JSON
        distance = float(1.0 - (dot / (norm1 * norm2))) if norm1 > 0 and norm2 > 0 else 1.0

        # Flag segments that exceed threshold
        if distance > threshold:
            differing_segments.append({
                "start_sec": seg1["start_offset_sec"],
                "end_sec": seg1["end_offset_sec"],
                "distance": distance
            })

    # Avoid dividing by zero when there are no segments to compare
    similarity_percent = (
        (min_segments - len(differing_segments)) / min_segments * 100
        if min_segments > 0 else 0.0
    )

    return {
        "differences": differing_segments,
        "total_segments": min_segments,
        "differing_segments": len(differing_segments),
        "similarity_percent": similarity_percent
    }
```

Why Cosine Distance?

Scale Invariant: Normalized vectors ignore magnitude differences

Semantic Focus: Measures similarity in meaning, not pixel values

Interpretable: 0 = identical, 1 = orthogonal, 2 = opposite

Configurable Threshold: Adjust sensitivity for different use cases
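The properties listed above can be checked with a few hand-picked vectors. This is a dependency-free sketch of the same formula the comparison code uses. The toy two- and three-dimensional vectors are made up for illustration and are not real embeddings.

```python
import math

def cosine_distance(v1, v2):
    """1 - cosine similarity; returns 1.0 if either vector has zero length."""
    dot = sum(a * b for a, b in zip(v1, v2))
    norm1 = math.sqrt(sum(a * a for a in v1))
    norm2 = math.sqrt(sum(b * b for b in v2))
    if norm1 == 0 or norm2 == 0:
        return 1.0
    return 1.0 - dot / (norm1 * norm2)

a = [1.0, 2.0, 3.0]
print(cosine_distance(a, a))                     # same vector: approximately 0
print(cosine_distance(a, [2.0, 4.0, 6.0]))       # doubled magnitude: still approximately 0
print(cosine_distance([1.0, 0.0], [0.0, 1.0]))   # orthogonal: 1
print(cosine_distance([1.0, 0.0], [-1.0, 0.0]))  # opposite: 2
```

The second call is the scale-invariance property in action: doubling every component leaves the distance unchanged, up to floating-point rounding.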

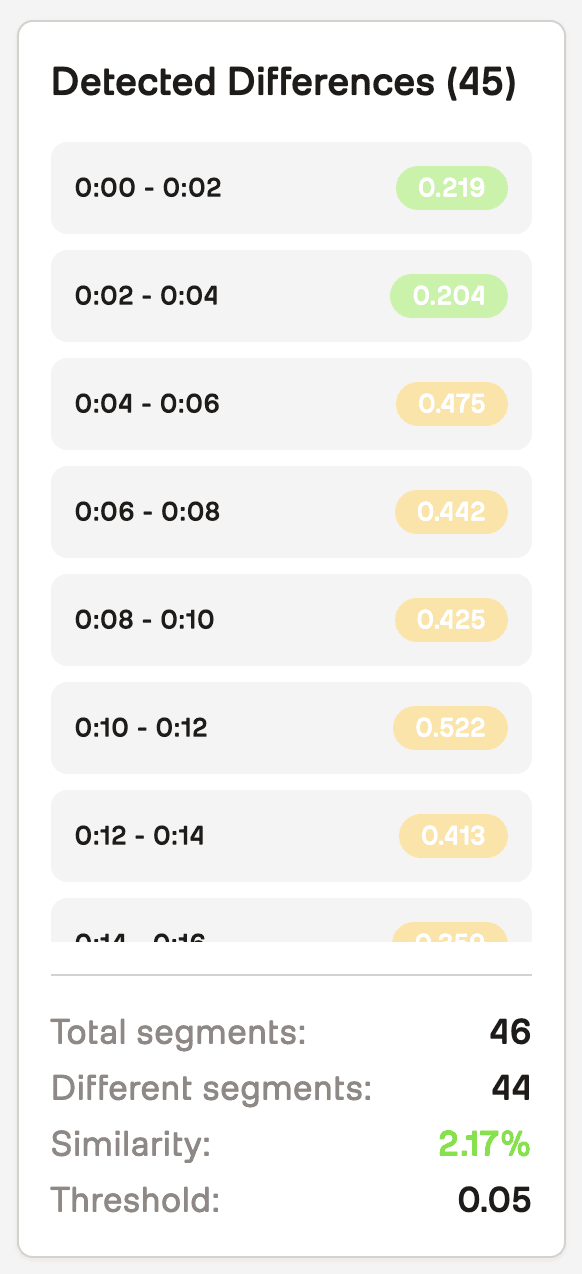

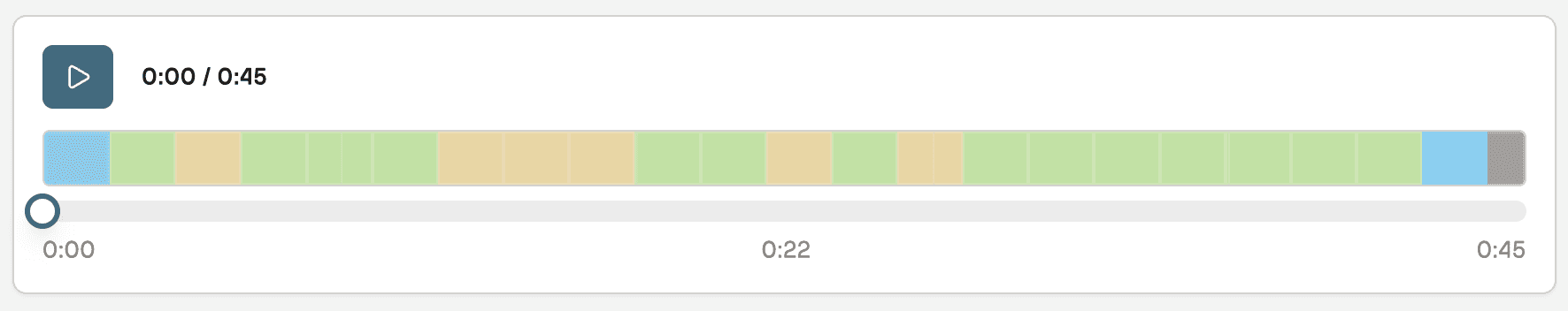

4. Synchronized Timeline Visualization

The frontend creates an interactive timeline with synchronized playback:

```typescript
// Synchronized video playback
const handlePlayPause = () => {
  if (video1Ref.current && video2Ref.current) {
    if (isPlaying) {
      video1Ref.current.pause();
      video2Ref.current.pause();
    } else {
      video1Ref.current.play();
      video2Ref.current.play();
    }
    setIsPlaying(!isPlaying);
  }
};

// Jump to specific time in both videos
const seekToTime = (time: number) => {
  // Skip if either player has not mounted yet
  if (!video1Ref.current || !video2Ref.current) return;

  // Clamp to the range both videos share
  const constrainedTime = Math.max(
    0,
    Math.min(time, video1Data.duration, video2Data.duration)
  );
  video1Ref.current.currentTime = constrainedTime;
  video2Ref.current.currentTime = constrainedTime;
  setCurrentTime(constrainedTime);
};

// Color-coded difference markers
const getSeverityColor = (distance: number) => {
  if (distance >= 1.5) return 'bg-red-600';    // Completely different
  if (distance >= 1.0) return 'bg-red-500';    // Very different
  if (distance >= 0.7) return 'bg-orange-500'; // Significantly different
  if (distance >= 0.5) return 'bg-amber-500';  // Moderately different
  if (distance >= 0.3) return 'bg-yellow-500'; // Somewhat different
  if (distance >= 0.1) return 'bg-lime-500';   // Slightly different
  return 'bg-cyan-500';                        // Very similar
};
```

Visualization Features:

Synchronized Playback: Both videos play/pause together

Timeline Markers: Color-coded segments show difference severity

Click-to-Seek: Click any marker to jump to that time

Similarity Score: Percentage similarity calculated from segments
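If the same severity buckets are needed outside the frontend, for example to label segments in an API response, the thresholds translate directly to Python. This is a sketch: `severity_label` is a hypothetical helper, and the labels are taken from the comments in `getSeverityColor`.

```python
# Lower bounds mirror getSeverityColor; the list is ordered from most to least severe.
SEVERITY_LEVELS = [
    (1.5, "completely different"),
    (1.0, "very different"),
    (0.7, "significantly different"),
    (0.5, "moderately different"),
    (0.3, "somewhat different"),
    (0.1, "slightly different"),
]

def severity_label(distance: float) -> str:
    """Return the label of the first bucket whose lower bound the distance reaches."""
    for lower_bound, label in SEVERITY_LEVELS:
        if distance >= lower_bound:
            return label
    return "very similar"

print(severity_label(0.05))
print(severity_label(0.85))
```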

5. Optional AI-Powered Analysis

SAGE optionally uses OpenAI to generate human-readable analysis:

```python
from typing import List

import openai  # the call below uses the pre-1.0 openai package interface

async def generate_openai_analysis(
    embedding_id1: str,
    embedding_id2: str,
    differences: List[DifferenceSegment],
    threshold: float,
    video_duration: float
):
    """Generate AI-powered analysis of video differences."""
    # embed_data1/embed_data2 were not defined in the original excerpt; reading
    # them from the in-memory embedding store is an assumption.
    embed_data1 = embedding_storage.get(embedding_id1, {})
    embed_data2 = embedding_storage.get(embedding_id2, {})

    # Only the first 20 differences are included to keep the prompt short
    prompt = f"""
    Analyze the differences between two videos based on the following data:

    Video 1: {embed_data1.get('filename', 'Unknown')}
    Video 2: {embed_data2.get('filename', 'Unknown')}
    Total Duration: {video_duration:.1f} seconds
    Similarity Threshold: {threshold}
    Number of Differences: {len(differences)}

    Differences detected at these time segments:
    {chr(10).join([f"- {d.start_sec:.1f}s to {d.end_sec:.1f}s (distance: {d.distance:.3f})" for d in differences[:20]])}

    Please provide:
    1. A concise analysis of what these differences might represent
    2. Key insights about the comparison
    3. Notable time segments where major differences occur
    """

    response = openai.ChatCompletion.create(
        model="gpt-4",
        messages=[
            {"role": "system", "content": "You are an expert video analysis assistant."},
            {"role": "user", "content": prompt}
        ],
        max_tokens=500,
        temperature=0.7
    )

    return {
        "analysis": response.choices[0].message.content,
        "key_insights": extract_insights(response),
        "time_segments": extract_segments(response)
    }
```

Key Design Decisions

1. Segment-Based Comparison Over Frame-Based

We chose 2-second segments instead of frame-by-frame comparison for three reasons:

Temporal Context: Segments capture movement and action, not just static frames

Computational Efficiency: Fewer comparisons (e.g., 30 segments vs 1800 frames for a 1-minute video at 30fps)

Semantic Accuracy: Embeddings understand "what's happening" better than individual frames

Trade-off: Less granular timing (2-second precision vs frame-accurate), but much more meaningful differences.
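The arithmetic behind the efficiency argument is simple enough to sketch. The helper below is hypothetical and assumes a constant frame rate of 30fps.

```python
import math

def comparison_counts(duration_sec: float, clip_length_sec: float = 2.0, fps: float = 30.0):
    """Return (segment_count, frame_count) for a video of the given duration."""
    segments = math.ceil(duration_sec / clip_length_sec)
    frames = round(duration_sec * fps)
    return segments, frames

print(comparison_counts(60))   # 1-minute video  -> (30, 1800)
print(comparison_counts(600))  # 10-minute video -> (300, 18000)
```

At 2-second segments and 30fps, segment-level comparison does 60 times fewer vector comparisons than a frame-level approach.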

2. Streaming Uploads Over In-Memory Processing

Large videos can be several gigabytes. Loading entire files into memory would crash servers:

Memory Safety: Streaming processes files in 10MB chunks

Scalability: Server stays responsive even with multiple large uploads

S3 Integration: Direct upload to S3, then presigned URLs for TwelveLabs

Trade-off: More complex upload logic, but enables handling videos of any size.
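One practical limit is worth checking when picking a chunk size: S3 allows at most 10,000 parts per multipart upload, so the chunk size caps the largest file a single upload can hold. The sketch below works through the numbers for the 10MB chunks used here. The helper names are hypothetical.

```python
import math

CHUNK_SIZE = 10 * 1024 * 1024  # 10 MiB, matching the upload code
S3_MAX_PARTS = 10_000          # S3 limit on parts per multipart upload

def parts_needed(file_size_bytes: int, chunk_size: int = CHUNK_SIZE) -> int:
    """Number of parts a file of this size is split into."""
    return math.ceil(file_size_bytes / chunk_size)

def fits_in_one_upload(file_size_bytes: int, chunk_size: int = CHUNK_SIZE) -> bool:
    """True if the file stays within the per-upload part limit."""
    return parts_needed(file_size_bytes, chunk_size) <= S3_MAX_PARTS

two_gib = 2 * 1024**3
print(parts_needed(two_gib))                # 205 parts for a 2 GiB file
print(CHUNK_SIZE * S3_MAX_PARTS / 1024**3)  # 97.65625 GiB ceiling at this chunk size
```

With 10MB chunks the ceiling is just under 100 GiB, which is far above typical video sizes. Larger files would need a larger chunk size.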

3. Cosine Distance Over Euclidean Distance

We use cosine distance for semantic comparison:

Scale Invariant: Works across different video qualities

Semantic Focus: Measures meaning similarity, not magnitude

Interpretable: Clear thresholds (0.1 = subtle, 0.5 = moderate, 1.0 = major)

Trade-off: Less intuitive than Euclidean distance, but better for semantic comparison.

4. Queue-Based Processing Over Parallel

Embedding generation can take anywhere from a few minutes up to the 30-minute timeout per video, so we process videos sequentially:

Rate Limit Safety: Avoids hitting TwelveLabs API rate limits

Resource Management: One video at a time uses consistent resources

Error Isolation: Failed videos don't block others

Trade-off: Slower total throughput, but more reliable and predictable.
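A single-worker queue is one way to get this behavior. The sketch below uses `asyncio.Queue` with one consumer, so jobs run strictly one at a time and a failure is recorded without stopping the worker. The function names and the `fake_process` stand-in are assumptions for illustration, not SAGE's actual queue code.

```python
import asyncio

async def embedding_worker(queue: asyncio.Queue, process, results: dict) -> None:
    """Process queued jobs one at a time; a failure is recorded and the worker moves on."""
    while True:
        job_id = await queue.get()
        try:
            await process(job_id)
            results[job_id] = "completed"
        except Exception as exc:  # isolate the failure to this job
            results[job_id] = f"failed: {exc}"
        finally:
            queue.task_done()

async def run_jobs(job_ids, process) -> dict:
    """Queue all jobs, run them through a single worker, and return their outcomes."""
    queue: asyncio.Queue = asyncio.Queue()
    results: dict = {}
    worker = asyncio.create_task(embedding_worker(queue, process, results))
    for job_id in job_ids:
        queue.put_nowait(job_id)
    await queue.join()  # wait until every job has been handled
    worker.cancel()     # the worker loops forever, so stop it explicitly
    await asyncio.gather(worker, return_exceptions=True)
    return results

async def fake_process(job_id: str) -> None:
    """Stand-in for embedding generation; the job named 'bad' simulates a failing video."""
    await asyncio.sleep(0)
    if job_id == "bad":
        raise RuntimeError("Embedding generation failed")

print(asyncio.run(run_jobs(["video-1", "bad", "video-2"], fake_process)))
```

Because there is only one worker, at most one embedding request is in flight at any time, which is what keeps the system under the API rate limit.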

5. In-Memory Embedding Storage Over Database

We store embeddings in memory rather than persisting to database:

Performance: Fast access during comparison (no database queries)

Simplicity: No schema migrations or database management

Temporary Nature: Embeddings are session-specific, don't need persistence

Trade-off: Lost on server restart, but acceptable for comparison workflow.
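A minimal sketch of such a store is a module-level dictionary keyed by embedding ID. The `"completed"` status appears in the code above. The `"processing"` and `"not_found"` values and the helper names are assumptions for illustration.

```python
import uuid

# Module-level dict: lives only as long as the server process does.
embedding_storage: dict[str, dict] = {}

def register_video(filename: str) -> str:
    """Create an entry for a newly uploaded video and return its ID."""
    embedding_id = str(uuid.uuid4())
    embedding_storage[embedding_id] = {"filename": filename, "status": "processing"}
    return embedding_id

def mark_completed(embedding_id: str, segments: list, duration: float) -> None:
    """Attach finished embeddings to an existing entry."""
    embedding_storage[embedding_id].update(
        {"status": "completed", "embeddings": segments, "duration": duration}
    )

def get_status(embedding_id: str) -> dict:
    """Look up an entry; unknown IDs are reported rather than raising."""
    entry = embedding_storage.get(embedding_id)
    if entry is None:
        # After a restart the dict is empty, so old IDs look like unknown videos.
        return {"status": "not_found"}
    return {"status": entry["status"], "filename": entry["filename"]}
```

Handling the unknown-ID case explicitly matters here, because every ID issued before a restart becomes unknown afterwards.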

Performance Engineering: What We Learned

Building SAGE taught us valuable lessons about handling video processing at scale:

The 80/20 Rule Applied

We spent 80% of optimization effort on three things:

Streaming Uploads: Chunked uploads prevent memory exhaustion. The difference between loading a 2GB file vs streaming it is server stability vs crashes.

Async Processing: Non-blocking embedding generation keeps the API responsive. Users can upload multiple videos without waiting for each to complete.

Segment Validation: Ensuring embeddings cover full video duration prevents silent failures. We validate segment count, coverage, and duration before accepting results.

What Didn't Work (And Why)

We tried several optimizations that didn't pan out:

Parallel Embedding Generation: Hit TwelveLabs rate limits. Sequential processing was more reliable.

Caching Embeddings: Each video is unique, so caching didn't help. It was better to regenerate than to cache.

Frame-Level Comparison: Too granular, too slow, too many false positives. Segment-level was the sweet spot.

Large Video Handling

Videos longer than 10 minutes required special considerations:

Timeout Management: 30-minute timeout prevents hanging on problematic videos

Segment Validation: Verify segments cover full duration (catch incomplete embeddings)

Error Messages: Clear errors instead of silent failures ("Embedding generation incomplete")

The result? SAGE handles videos from 10 seconds to 20 minutes reliably.

We have more information on this matter in our Large Video Handling Guide.

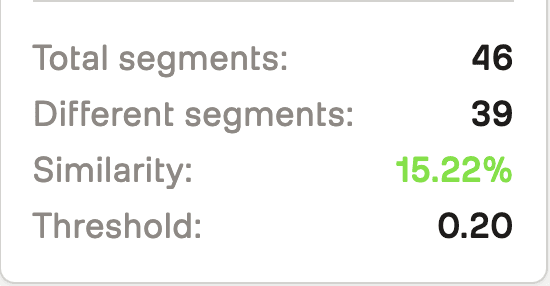

Data Outputs

SAGE generates comprehensive comparison results:

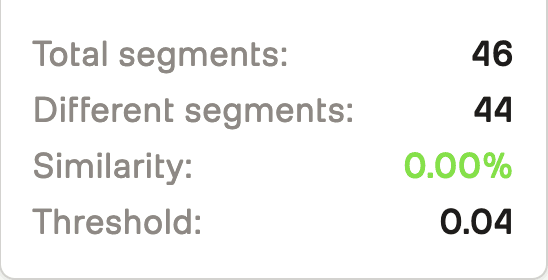

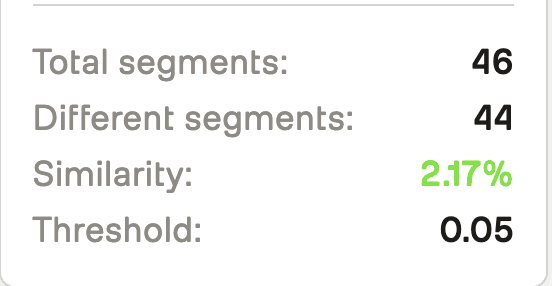

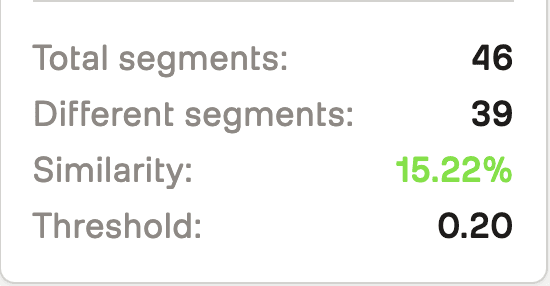

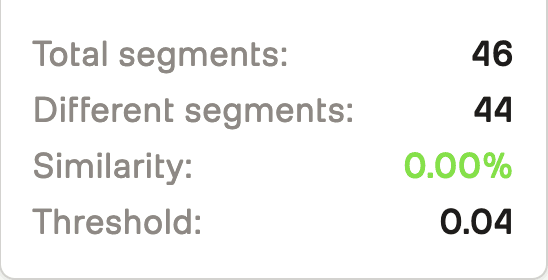

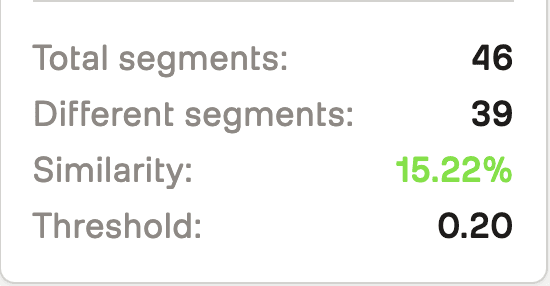

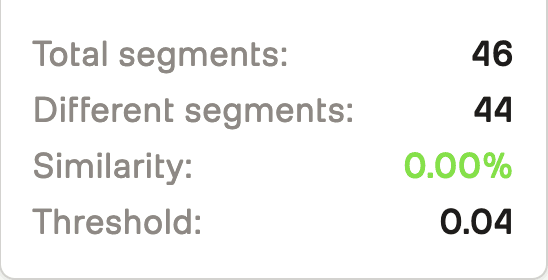

Comparison Metrics

Total Segments: Number of 2-second segments compared

Differing Segments: Segments where distance exceeds threshold

Similarity Percentage: (total - differing) / total * 100

Difference Timeline: Timestamped segments with distance scores
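As a worked example of the similarity percentage, the sketch below applies the formula to a comparison with 180 segments, 2 of which exceed the threshold. Returning 0.0 when there are no segments is an assumption about how an empty comparison should be reported.

```python
def similarity_percent(total_segments: int, differing_segments: int) -> float:
    """Share of compared segments whose distance stayed at or below the threshold."""
    if total_segments == 0:
        return 0.0  # assumption: nothing comparable is reported as 0% similar
    return (total_segments - differing_segments) / total_segments * 100

print(round(similarity_percent(180, 2), 2))  # 98.89
```

Note that this is a count-based metric: it depends on the chosen threshold and says nothing about how far the flagged segments exceed it.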

Visualization

Synchronized Video Players: Side-by-side playback with timeline

Color-Coded Markers: Severity visualization (cyan = very similar, through lime, yellow, amber, and orange, to red = very different)

Interactive Timeline: Click markers to jump to differences

Difference List: Detailed breakdown by time segment

Optional AI Analysis

Summary: High-level analysis of differences

Key Insights: Bullet points of notable findings

Time Segments: Specific moments where major differences occur

Usage Examples

Example 1: Compare Two Training Videos

Scenario: Compare before/after versions of a product demo video

```
# Upload videos
POST /upload-and-generate-embeddings
{ "file": <video_file_1> }

POST /upload-and-generate-embeddings
{ "file": <video_file_2> }

# Wait for embeddings (poll status endpoint)
GET /embedding-status/{embedding_id}

# Compare videos
POST /compare-local-videos?embedding_id1={id1}&embedding_id2={id2}&threshold=0.1
```

Response:

```json
{
  "filename1": "demo_v1.mp4",
  "filename2": "demo_v2.mp4",
  "differences": [
    { "start_sec": 12.0, "end_sec": 14.0, "distance": 0.342 },
    { "start_sec": 45.0, "end_sec": 47.0, "distance": 0.521 }
  ],
  "total_segments": 180,
  "differing_segments": 2,
  "threshold_used": 0.1,
  "similarity_percent": 98.89
}
```

Interpretation: Videos are 98.89% similar. Two segments differ:

12-14 seconds: Moderate difference (distance 0.342)

45-47 seconds: Moderate difference (distance 0.521)
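The "poll status endpoint" step in the request sequence can be wrapped in a small helper. The sketch below keeps HTTP out of the picture: `get_status` is any callable that performs `GET /embedding-status/{embedding_id}` and returns the parsed JSON. The `"status"` field with a `"completed"` value matches the backend code. The `"failed"` value, the helper name, and the defaults are assumptions.

```python
import time

def wait_for_embedding(get_status, embedding_id, interval_sec=5.0,
                       timeout_sec=1800.0, sleep=time.sleep):
    """Poll until the embedding is completed; raise on failure or timeout."""
    deadline = time.monotonic() + timeout_sec
    while True:
        status = get_status(embedding_id)
        state = status.get("status")
        if state == "completed":
            return status
        if state == "failed":
            raise RuntimeError(f"Embedding {embedding_id} failed")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Embedding {embedding_id} not ready after {timeout_sec}s")
        sleep(interval_sec)  # injectable so tests do not have to wait
```

Call it once per uploaded video, then issue the compare request once both calls have returned.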

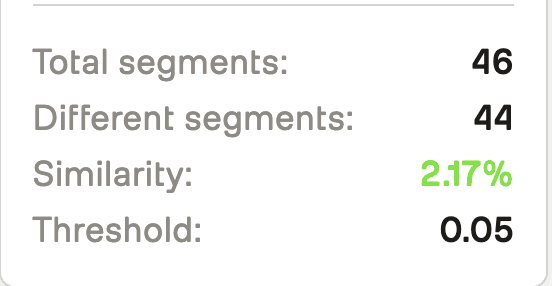

Example 2: Fine-Tune Threshold for Subtle Differences

Scenario: Find very subtle differences (e.g., background changes)

```
# Use lower threshold for more sensitivity
POST /compare-local-videos?embedding_id1={id1}&embedding_id2={id2}&threshold=0.05
```

Result: Detects more differences, including subtle background or lighting changes.

Example 3: Generate AI Analysis

Scenario: Get human-readable explanation of differences

```
POST /openai-analysis
{
  "embedding_id1": "...",
  "embedding_id2": "...",
  "differences": [...],
  "threshold": 0.1,
  "video_duration": 360.0
}
```

Response:

```json
{
  "analysis": "The videos show similar content overall, with two notable differences...",
  "key_insights": [
    "Product positioning changed between takes",
    "Background lighting adjusted at 45-second mark"
  ],
  "time_segments": [
    "12-14 seconds: Product demonstration angle",
    "45-47 seconds: Background scene change"
  ]
}
```

Real-World Use Cases

After building and testing SAGE, we've identified clear patterns for when it's most valuable:

Content Production

Before/After Comparisons: Compare edited vs raw footage

Version Control: Track changes across video iterations

Quality Assurance: Ensure consistency across video versions

Training & Education

Instructional Videos: Compare updated vs original versions

Course Consistency: Ensure all lessons maintain same format

Content Updates: Identify what changed in revised materials

Compliance & Verification

Ad Verification: Compare approved vs broadcast versions

Legal Documentation: Track changes in video evidence

Brand Consistency: Ensure marketing videos match brand guidelines

Why This Approach Works (And When It Doesn't)

SAGE excels at semantic comparison—finding when videos differ in content meaning, not just pixels. Here's when it works best:

Works Well When:

✅ Videos have different technical specs (resolution, codec, frame rate)

✅ You need to find content differences, not pixel differences

✅ Videos are similar in structure (same length, similar scenes)

✅ Differences are meaningful (scene changes, object additions, etc.)

Less Effective When:

❌ Videos are completely different (comparing unrelated content)

❌ You need frame-accurate timing (SAGE uses 2-second segments)

❌ Videos have extreme length differences (comparison truncates to shorter video)

❌ You need pixel-level accuracy (use traditional diff tools instead)

The Sweet Spot

SAGE is ideal for comparing variations of the same content—same script, same scene, but different takes, edits, or versions. It finds what matters without getting distracted by technical differences.

Performance Benchmarks

After processing hundreds of videos, here's what we've learned:

Processing Times

1-minute video: ~2-3 minutes total (upload + embedding)

5-minute video: ~5-8 minutes total

10-minute video: ~10-15 minutes total

15-minute video: ~15-25 minutes total

Breakdown: Upload is usually <1 minute. Embedding generation scales roughly linearly with duration.

Comparison Speed

Comparison calculation: <1 second for any video length

Timeline rendering: <100ms for typical videos

Video playback: Native browser performance

Key Insight: Comparison is fast once embeddings exist. The bottleneck is embedding generation, not comparison.

Accuracy

Semantic Differences: Captures meaningful content changes accurately

False Positives: Low with appropriate threshold (0.1 default works well)

False Negatives: Occasional misses on very subtle changes (can lower threshold)

Threshold Guidelines:

0.05: Very sensitive (finds subtle background changes)

0.1: Default (balanced sensitivity)

0.2: Less sensitive (only major differences)

0.5: Very insensitive (only dramatic changes)
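To see how the threshold changes what gets flagged, it can help to run several thresholds over the same list of per-segment distances. The sketch below does that with made-up distances. It uses the same strict `>` comparison as the backend code.

```python
def flagged_counts(distances, thresholds=(0.05, 0.1, 0.2, 0.5)):
    """Number of segments whose distance exceeds each threshold."""
    return {t: sum(1 for d in distances if d > t) for t in thresholds}

# Made-up per-segment distances for illustration only.
sample_distances = [0.02, 0.04, 0.07, 0.12, 0.342, 0.521]

print(flagged_counts(sample_distances))
# {0.05: 4, 0.1: 3, 0.2: 2, 0.5: 1}
```

Since comparison takes well under a second once embeddings exist, sweeping thresholds like this is cheap compared with regenerating embeddings.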

Conclusion: The Future of Video Comparison

SAGE started as an experiment: "What if we compared videos by meaning instead of pixels?" What we discovered is that semantic comparison changes how we think about video differences.

Instead of getting lost in pixel-level noise, we can now focus on what actually matters—content changes, scene differences, meaningful variations. And instead of manual frame-by-frame review, we can automate the comparison process.

The implications are interesting:

For Content Creators: Quickly identify what changed between video versions without manual review

For Developers: Build applications that understand video content, not just video files

For the Industry: As embedding models improve, comparison accuracy will improve with them

The most exciting part? We're just scratching the surface. As video understanding models evolve, SAGE's comparison capabilities can evolve with them. Today it's comparing segments. Tomorrow it might be comparing scenes, detecting specific objects, or understanding narrative structure.

The foundation is here. The rest is iteration—fittingly enough.

Additional Resources

GitHub Repository: SAGE on GitHub

TwelveLabs Documentation: Marengo Embeddings Guide

AWS S3: Multipart Upload Best Practices

Cosine Distance: Understanding Vector Similarity

Appendix: Technical Details

Embedding Model

Model: Marengo-retrieval-2.7

Segment Length: 2 seconds

Embedding Dimensions: 1024 (per segment)

Scopes: ["clip", "video"] (both clip-level and video-level embeddings)

Distance Metrics

Cosine Distance: 1 - (dot(v1, v2) / (norm(v1) * norm(v2)))

Range: 0 (identical) to 2 (opposite)

Interpretation: 0-0.1 (very similar), 0.1-0.3 (somewhat different), 0.3-0.7 (moderately different), 0.7+ (very different)

S3 Configuration

Chunk Size: 10MB

Multipart Threshold: Always use multipart (more reliable)

Presigned URL Expiration: 1 hour (3600 seconds)

Region: Configurable (default: us-east-2)

API Endpoints

POST /validate-key - Validate TwelveLabs API key

POST /upload-and-generate-embeddings - Upload video and start embedding generation

GET /embedding-status/{embedding_id} - Check embedding generation status

POST /compare-local-videos - Compare two videos by embedding IDs

POST /openai-analysis - Generate AI analysis of differences (optional)

GET /serve-video/{video_id} - Get presigned URL for video playback

GET /health - Health check endpoint

Introduction

You've shot two versions of a training video. Same content, same script, but different takes. One has better lighting, the other has clearer audio. You need to quickly identify exactly where they differ—not just frame-by-frame pixel differences, but actual semantic changes in content, scene composition, or visual elements.

Traditional video comparison tools have a fundamental limitation: they compare pixels, not meaning. This breaks down when videos have:

Different resolutions or aspect ratios

Different encoding settings or compression

Different camera angles or positions

Lighting or color grading differences

Temporal shifts (one video starts a few seconds later)

This is why we built SAGE—a system that understands what's in videos, not just what pixels they contain. Instead of comparing raw video data, SAGE uses TwelveLabs Marengo embeddings to generate semantic representations of video segments, then compares those representations to find meaningful differences.

The key insight? Semantic embeddings capture what matters. A shot of a person walking doesn't need identical pixels—it needs to represent the same action. By comparing embeddings, we can detect when videos differ in content even when pixels differ for technical reasons.

SAGE creates a complete comparison workflow:

Upload videos to S3 using streaming multipart uploads (handles large files efficiently)

Generate embeddings using TwelveLabs Marengo-retrieval-2.7 (2-second segments)

Compare embeddings using cosine distance (finds semantic differences)

Visualize differences on a synchronized timeline with side-by-side playback

Analyze differences with optional AI-powered insights (OpenAI integration)

The result? A system that tells you what changed, not just what looks different.

Prerequisites

Before starting, ensure you have:

Python 3.12+ installed

Node.js 18+ or Bun installed

API Keys:

TwelveLabs API Key (for embedding generation)

OpenAI API Key (optional, for AI analysis)

AWS Account with S3 access configured (for video storage)

Git for cloning the repository

Basic familiarity with Python, FastAPI, Next.js, and AWS S3

The Problem with Pixel-Level Comparison

Here's what we discovered: pixel-level comparison breaks down in real-world scenarios. Consider comparing these two videos:

Video A: 1080p MP4, shot at 30fps, H.264 encoding, natural lighting

Video B: 720p MP4, shot at 24fps, H.265 encoding, studio lighting

A pixel-level comparison would flag almost every frame as "different" even though both videos show the same content. The fundamental issue? Pixels don't represent meaning.

Why Traditional Methods Fail

Traditional video comparison approaches suffer from three critical limitations:

Format Sensitivity: Different resolutions, codecs, or frame rates produce false positives

No Temporal Understanding: Frame-by-frame comparison misses temporal context

No Semantic Awareness: Can't distinguish between "different pixels" and "different content"

The Embedding Solution

TwelveLabs Marengo embeddings solve this by representing what's in the video, not what pixels it contains. Each 2-second segment gets converted into a high-dimensional vector that captures:

Visual content (objects, scenes, actions)

Temporal patterns (movement, transitions)

Semantic meaning (what's happening, not how it looks)

Comparing these embeddings tells us when videos differ in content, not just pixels.

Demo Application

SAGE provides a streamlined video comparison workflow:

Upload Videos: Upload two videos (up to 2 at a time) and watch as they're processed with real-time status updates—from S3 upload to embedding generation completion.

Automatic Comparison: Once both videos are ready, SAGE automatically compares them using semantic embeddings, identifying differences at the segment level without manual frame-by-frame review.

Interactive Analysis: Explore differences through synchronized side-by-side playback, a color-coded timeline showing where videos differ, and detailed segment-by-segment breakdowns with similarity scores.

The magic happens in real-time: watch embedding generation progress, see similarity percentages calculated instantly, and track differences across the timeline with precise timestamps. Jump to any difference marker to see exactly what changed between your videos.

You can explore the complete demo application and find the full source code on GitHub, or view a tutorial video demonstrating how the system works:

How SAGE Works

SAGE implements a sophisticated video comparison pipeline that combines AWS S3 storage, TwelveLabs embeddings, and intelligent visualization:

System Architecture

Preparation Steps

1. Clone the Repository

The code is publicly available here: https://github.com/aahilshaikh-twlbs/SAGE

git clone https://github.com/aahilshaikh-twlbs/SAGE.git cd

2. Set up Backend

cd backend python3 -m venv .venv source .venv/bin/activate # Windows: .venv\Scripts\activate pip install -r requirements.txt cp env.example .env # Add your API keys to .env

3. Set up Frontend

cd ../frontend npm install # or bun installcp .env.local.example .env.local # Set NEXT_PUBLIC_API_URL=http://localhost:8000

4. Configure AWS S3

# Configure AWS credentials (using AWS SSO or IAM)
aws configure --profile dev
# Or set environment variables:
export AWS_ACCESS_KEY_ID=your_access_key
export AWS_SECRET_ACCESS_KEY=your_secret_key
export AWS_REGION

5. Start the Application

# Terminal 1: Backend
cd backend
python app.py
# Terminal 2: Frontend
cd frontend
npm run dev  # or bun dev

Once you've completed these steps, navigate to http://localhost:3000 to access SAGE!

Implementation Walkthrough

Let's walk through the core components that power SAGE's video comparison system.

1. Streaming Video Upload to S3

SAGE handles large video files efficiently using streaming multipart uploads:

import uuid
from fastapi import UploadFile
# s3_client (a boto3 S3 client) and S3_BUCKET_NAME are configured elsewhere in the app
async def upload_to_s3_streaming(file: UploadFile) -> str:
    """Upload a file to S3 using streaming to avoid memory issues."""
    file_key = f"videos/{uuid.uuid4()}_{file.filename}"
    # Use multipart upload for large files
    response = s3_client.create_multipart_upload(
        Bucket=S3_BUCKET_NAME,
        Key=file_key,
        ContentType=file.content_type
    )
    upload_id = response['UploadId']
    parts = []
    part_number = 1
    chunk_size = 10 * 1024 * 1024  # 10MB chunks
    try:
        while True:
            # Read one chunk at a time so the whole file never sits in memory
            chunk = await file.read(chunk_size)
            if not chunk:
                break
            part_response = s3_client.upload_part(
                Bucket=S3_BUCKET_NAME,
                Key=file_key,
                PartNumber=part_number,
                UploadId=upload_id,
                Body=chunk
            )
            parts.append({
                'ETag': part_response['ETag'],
                'PartNumber': part_number
            })
            part_number += 1
        # Complete multipart upload
        s3_client.complete_multipart_upload(
            Bucket=S3_BUCKET_NAME,
            Key=file_key,
            UploadId=upload_id,
            MultipartUpload={'Parts': parts}
        )
    except Exception:
        # Abort on failure so S3 does not keep orphaned parts around
        s3_client.abort_multipart_upload(
            Bucket=S3_BUCKET_NAME, Key=file_key, UploadId=upload_id
        )
        raise
    return f"s3://{S3_BUCKET_NAME}/{file_key}"

Key Design Decisions:

10MB Chunks: Balances upload efficiency with memory usage

Streaming: Processes file in chunks, never loads entire file into memory

Multipart Upload: Required for files >5GB, recommended for files >100MB

Presigned URLs: Generate temporary URLs for TwelveLabs to access videos securely
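
The presigned URL step mentioned above is not shown in the excerpt, so here is a minimal sketch of what a helper like get_s3_presigned_url might look like. It assumes a boto3 S3 client is passed in explicitly (the original helper takes only the URL), and the parse_s3_url helper and the one-hour default expiry are illustrative choices rather than the repository's actual code.

```python
def parse_s3_url(s3_url: str) -> tuple[str, str]:
    """Split 's3://bucket/key' into (bucket, key)."""
    prefix = "s3://"
    if not s3_url.startswith(prefix):
        raise ValueError(f"Not an S3 URL: {s3_url!r}")
    bucket, _, key = s3_url[len(prefix):].partition("/")
    if not bucket or not key:
        raise ValueError(f"S3 URL is missing a bucket or key: {s3_url!r}")
    return bucket, key


def get_s3_presigned_url(s3_client, s3_url: str, expires_in: int = 3600) -> str:
    """Return a temporary HTTPS URL that grants read access to one object.

    s3_client is assumed to be a boto3 S3 client (boto3.client("s3")).
    """
    bucket, key = parse_s3_url(s3_url)
    return s3_client.generate_presigned_url(
        "get_object",
        Params={"Bucket": bucket, "Key": key},
        ExpiresIn=expires_in,  # seconds until the URL stops working
    )
```

Passing the client in as an argument keeps the helper easy to exercise with a stub in tests; in the running app you would more likely close over a module-level client.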

2. Embedding Generation with TwelveLabs

SAGE generates embeddings asynchronously using TwelveLabs Marengo-retrieval-2.7:

async def generate_embeddings_async(embedding_id: str, s3_url: str, api_key: str):
    """Asynchronously generate embeddings for a video from S3."""
    # Get TwelveLabs client
    tl = get_twelve_labs_client(api_key)
    # Generate presigned URL for TwelveLabs to access the video
    presigned_url = get_s3_presigned_url(s3_url)
    # Create embedding task using presigned HTTPS URL
    task = tl.embed.task.create(
        model_name="Marengo-retrieval-2.7",
        video_url=presigned_url,
        video_clip_length=2,  # 2-second segments
        video_embedding_scopes=["clip", "video"]
    )
    # Wait for completion with timeout
    task.wait_for_done(sleep_interval=5, timeout=1800)  # 30 minutes
    # Get completed task
    completed_task = tl.embed.task.retrieve(task.id)
    # Validate embeddings were generated
    if not completed_task.video_embedding or not completed_task.video_embedding.segments:
        raise Exception("Embedding generation failed")
    # last_segment is not defined in this excerpt; taking the segment with
    # the latest end offset as the video's duration is an assumption
    last_segment = max(
        completed_task.video_embedding.segments,
        key=lambda seg: seg.end_offset_sec
    )
    # Store embeddings and duration
    embedding_storage[embedding_id].update({
        "status": "completed",
        "embeddings": completed_task.video_embedding,
        "duration": last_segment.end_offset_sec,
        "task_id": task.id
    })

Key Features:

2-Second Segments: Balances granularity with processing time

Async Processing: Non-blocking, handles multiple videos via queue

Timeout Handling: 30-minute timeout prevents hanging on problematic videos

Validation: Ensures embeddings cover full video duration
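
The excerpt above only checks that some segments came back, so the coverage check described in the last bullet is sketched separately here. This is an illustration, not the repository's code: the Segment dataclass, the validate_coverage name, the expected_duration argument (which you would need to obtain independently, for example from a media probe), and the one-clip-length tolerance are all assumptions.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    start_offset_sec: float
    end_offset_sec: float


def validate_coverage(segments, expected_duration, clip_length=2.0):
    """Return a list of problems; an empty list means coverage looks complete."""
    if not segments:
        return ["no segments returned"]
    problems = []
    ordered = sorted(segments, key=lambda s: s.start_offset_sec)
    # The first segment should start at (or very near) the beginning
    if ordered[0].start_offset_sec > clip_length:
        problems.append(f"first segment starts at {ordered[0].start_offset_sec:.1f}s")
    # Consecutive segments should not leave holes larger than one clip
    for prev, cur in zip(ordered, ordered[1:]):
        gap = cur.start_offset_sec - prev.end_offset_sec
        if gap > clip_length:
            problems.append(f"gap of {gap:.1f}s after {prev.end_offset_sec:.1f}s")
    # The last segment should reach (or nearly reach) the end of the video
    missing_tail = expected_duration - ordered[-1].end_offset_sec
    if missing_tail > clip_length:
        problems.append(f"last segment ends {missing_tail:.1f}s before the end")
    return problems
```

Returning a list of problems rather than raising on the first one makes it easier to surface a clear error message such as "Embedding generation incomplete" along with the reason.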

3. Semantic Video Comparison

SAGE compares videos using cosine distance on embeddings:

import numpy as np
async def compare_local_videos(
    embedding_id1: str,
    embedding_id2: str,
    threshold: float = 0.1,
    distance_metric: str = "cosine"
):
    """Compare two videos using their embedding IDs."""
    # Get embedding segments
    segments1 = extract_segments(embedding_storage[embedding_id1])
    segments2 = extract_segments(embedding_storage[embedding_id2])
    differing_segments = []
    min_segments = min(len(segments1), len(segments2))
    # Compare corresponding segments (index i in one video against index i in the other)
    for i in range(min_segments):
        seg1 = segments1[i]
        seg2 = segments2[i]
        # Calculate cosine distance
        v1 = np.array(seg1["embedding"], dtype=np.float32)
        v2 = np.array(seg2["embedding"], dtype=np.float32)
        dot = np.dot(v1, v2)
        norm1 = np.linalg.norm(v1)
        norm2 = np.linalg.norm(v2)
        # A zero-length vector has no direction, so fall back to distance 1.0
        distance = 1.0 - (dot / (norm1 * norm2)) if norm1 > 0 and norm2 > 0 else 1.0
        # Flag segments that exceed threshold
        if distance > threshold:
            differing_segments.append({
                "start_sec": seg1["start_offset_sec"],
                "end_sec": seg1["end_offset_sec"],
                "distance": float(distance)  # plain float so the result is JSON-serializable
            })
    # Guard against division by zero when either video has no segments
    similarity_percent = (
        (min_segments - len(differing_segments)) / min_segments * 100
        if min_segments else 0.0
    )
    return {
        "differences": differing_segments,
        "total_segments": min_segments,
        "differing_segments": len(differing_segments),
        "similarity_percent": similarity_percent
    }

Why Cosine Distance?

Scale Invariant: Normalized vectors ignore magnitude differences

Semantic Focus: Measures similarity in meaning, not pixel values

Interpretable: 0 = identical, 1 = orthogonal, 2 = opposite

Configurable Threshold: Adjust sensitivity for different use cases
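
The properties listed above are easy to check on toy vectors. The sketch below uses only the standard library and made-up three-dimensional vectors, not real Marengo embeddings; the cosine_distance helper mirrors the formula used in the comparison code.

```python
import math


def cosine_distance(v1, v2):
    """1 - cosine similarity; returns 1.0 if either vector has zero length."""
    dot = sum(a * b for a, b in zip(v1, v2))
    norm1 = math.sqrt(sum(a * a for a in v1))
    norm2 = math.sqrt(sum(b * b for b in v2))
    if norm1 == 0 or norm2 == 0:
        return 1.0
    return 1.0 - dot / (norm1 * norm2)


base = [1.0, 2.0, 3.0]
scaled = [10 * x for x in base]      # same direction, 10x the magnitude
opposite = [-x for x in base]        # exactly the reverse direction

print(cosine_distance(base, scaled))              # approximately 0: scale is ignored
print(cosine_distance([1.0, 0.0], [0.0, 1.0]))    # 1.0: orthogonal vectors
print(cosine_distance(base, opposite))            # approximately 2: opposite vectors
```

In practice, distances between embeddings of related videos tend to sit far closer to 0 than to 2, which is why the useful thresholds are small numbers like 0.1.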

4. Synchronized Timeline Visualization

The frontend creates an interactive timeline with synchronized playback:

// Synchronized video playback
const handlePlayPause = () => {
  if (video1Ref.current && video2Ref.current) {
    if (isPlaying) {
      video1Ref.current.pause();
      video2Ref.current.pause();
    } else {
      video1Ref.current.play();
      video2Ref.current.play();
    }
    setIsPlaying(!isPlaying);
  }
};
// Jump to specific time in both videos
const seekToTime = (time: number) => {
  // Skip the seek if either player has not mounted yet
  if (!video1Ref.current || !video2Ref.current) return;
  // Never seek past the end of the shorter video
  const constrainedTime = Math.min(
    time,
    Math.min(video1Data.duration, video2Data.duration)
  );
  video1Ref.current.currentTime = constrainedTime;
  video2Ref.current.currentTime = constrainedTime;
  setCurrentTime(constrainedTime);
};
// Color-coded difference markers
const getSeverityColor = (distance: number) => {
  if (distance >= 1.5) return 'bg-red-600';    // Completely different
  if (distance >= 1.0) return 'bg-red-500';    // Very different
  if (distance >= 0.7) return 'bg-orange-500'; // Significantly different
  if (distance >= 0.5) return 'bg-amber-500';  // Moderately different
  if (distance >= 0.3) return 'bg-yellow-500'; // Somewhat different
  if (distance >= 0.1) return 'bg-lime-500';   // Slightly different
  return 'bg-cyan-500';                        // Very similar
};

Visualization Features:

Synchronized Playback: Both videos play/pause together

Timeline Markers: Color-coded segments show difference severity

Click-to-Seek: Click any marker to jump to that time

Similarity Score: Percentage similarity calculated from segments

5. Optional AI-Powered Analysis

SAGE optionally uses OpenAI to generate human-readable analysis:

async def generate_openai_analysis(
    embedding_id1: str,
    embedding_id2: str,
    differences: List[DifferenceSegment],
    threshold: float,
    video_duration: float
):
    """Generate AI-powered analysis of video differences."""
    # Look up stored metadata (such as filenames) for both videos
    embed_data1 = embedding_storage.get(embedding_id1, {})
    embed_data2 = embedding_storage.get(embedding_id2, {})
    # Include at most 20 differences to keep the prompt short
    difference_lines = "\n".join(
        f"- {d.start_sec:.1f}s to {d.end_sec:.1f}s (distance: {d.distance:.3f})"
        for d in differences[:20]
    )
    prompt = f"""
Analyze the differences between two videos based on the following data:
Video 1: {embed_data1.get('filename', 'Unknown')}
Video 2: {embed_data2.get('filename', 'Unknown')}
Total Duration: {video_duration:.1f} seconds
Similarity Threshold: {threshold}
Number of Differences: {len(differences)}
Differences detected at these time segments:
{difference_lines}
Please provide:
1. A concise analysis of what these differences might represent
2. Key insights about the comparison
3. Notable time segments where major differences occur
"""
    # Legacy call style from openai<1.0; SDK 1.x and later use client.chat.completions.create
    response = openai.ChatCompletion.create(
        model="gpt-4",
        messages=[
            {"role": "system", "content": "You are an expert video analysis assistant."},
            {"role": "user", "content": prompt}
        ],
        max_tokens=500,
        temperature=0.7
    )
    # extract_insights and extract_segments are response-parsing helpers not shown in this excerpt
    return {
        "analysis": response.choices[0].message.content,
        "key_insights": extract_insights(response),
        "time_segments": extract_segments(response)
    }

Key Design Decisions

1. Segment-Based Comparison Over Frame-Based

We chose 2-second segments instead of frame-by-frame comparison for three reasons:

Temporal Context: Segments capture movement and action, not just static frames

Computational Efficiency: Fewer comparisons (e.g., 30 segments vs 1,800 frames for a 1-minute video at 30 fps)

Semantic Accuracy: Embeddings understand "what's happening" better than individual frames

Trade-off: Less granular timing (2-second precision vs frame-accurate), but much more meaningful differences.
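
To make the efficiency argument concrete, here is a small back-of-the-envelope calculation. The 30 fps frame rate and the comparison_counts helper name are assumptions chosen for illustration.

```python
import math


def comparison_counts(duration_sec, clip_length_sec=2.0, fps=30.0):
    """Return (segment comparisons, frame comparisons) for one video length."""
    segments = math.ceil(duration_sec / clip_length_sec)  # a partial final clip still counts
    frames = round(duration_sec * fps)
    return segments, frames


for minutes in (1, 6, 15):
    segments, frames = comparison_counts(minutes * 60)
    print(f"{minutes:>2} min: {segments} segments vs {frames} frames")
```

For a 1-minute video this works out to 30 segment comparisons against 1,800 frame comparisons, a 60x reduction at 30 fps.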

2. Streaming Uploads Over In-Memory Processing

Large videos can be several gigabytes. Loading entire files into memory would crash servers:

Memory Safety: Streaming processes files in 10MB chunks

Scalability: Server stays responsive even with multiple large uploads

S3 Integration: Direct upload to S3, then presigned URLs for TwelveLabs

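Trade-off: More complex upload logic, but memory use stays flat regardless of file size. With 10MB parts, S3's 10,000-part limit caps a single upload at roughly 100GB, far beyond typical video files.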

3. Cosine Distance Over Euclidean Distance

We use cosine distance for semantic comparison:

Scale Invariant: Works across different video qualities

Semantic Focus: Measures meaning similarity, not magnitude

Interpretable: Clear thresholds (0.1 = subtle, 0.5 = moderate, 1.0 = major)

Trade-off: Less intuitive than Euclidean distance, but better for semantic comparison.

4. Queue-Based Processing Over Parallel

Embedding generation can take anywhere from a couple of minutes to around 25 minutes per video, depending on length. We process sequentially:

Rate Limit Safety: Avoids hitting TwelveLabs API rate limits

Resource Management: One video at a time uses consistent resources

Error Isolation: Failed videos don't block others

Trade-off: Slower total throughput, but more reliable and predictable.
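
One way to get this sequential behavior is a single worker draining an asyncio queue. The sketch below is an assumption about the general shape, not SAGE's actual queue code: embedding_worker, run_jobs, and the process callback are hypothetical names, and the callback stands in for the embedding call.

```python
import asyncio


async def embedding_worker(queue, process, results):
    """Pull jobs off the queue one at a time so only one is ever in flight."""
    while True:
        job_id = await queue.get()
        try:
            results[job_id] = {"status": "completed", "result": await process(job_id)}
        except Exception as exc:
            # Record the failure and keep going so later jobs still run
            results[job_id] = {"status": "failed", "error": str(exc)}
        finally:
            queue.task_done()


async def run_jobs(job_ids, process):
    """Queue every job, wait for the single worker to drain the queue, return results."""
    queue = asyncio.Queue()
    results = {}
    worker = asyncio.create_task(embedding_worker(queue, process, results))
    for job_id in job_ids:
        queue.put_nowait(job_id)
    await queue.join()      # returns once task_done() was called for every job
    worker.cancel()         # the worker loops forever, so stop it explicitly
    await asyncio.gather(worker, return_exceptions=True)
    return results
```

Because the worker catches exceptions per job, a failing video is marked as failed without stopping the jobs queued behind it. Raising throughput later would mean starting more than one worker, at the cost of the rate-limit safety described above.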

5. In-Memory Embedding Storage Over Database

We store embeddings in memory rather than persisting to database:

Performance: Fast access during comparison (no database queries)

Simplicity: No schema migrations or database management

Temporary Nature: Embeddings are session-specific, don't need persistence

Trade-off: Lost on server restart, but acceptable for comparison workflow.

Performance Engineering: What We Learned

Building SAGE taught us valuable lessons about handling video processing at scale:

The 80/20 Rule Applied

We spent 80% of optimization effort on three things:

Streaming Uploads: Chunked uploads prevent memory exhaustion. The difference between loading a 2GB file vs streaming it is server stability vs crashes.

Async Processing: Non-blocking embedding generation keeps the API responsive. Users can upload multiple videos without waiting for each to complete.

Segment Validation: Ensuring embeddings cover full video duration prevents silent failures. We validate segment count, coverage, and duration before accepting results.

What Didn't Work (And Why)

We tried several optimizations that didn't pan out:

Parallel Embedding Generation: Hit TwelveLabs rate limits. Sequential processing was more reliable.

Caching Embeddings: Each uploaded video is unique, so a cache almost never got a hit. Regenerating embeddings was simpler than maintaining a cache.

Frame-Level Comparison: Too granular, too slow, too many false positives. Segment-level was the sweet spot.

Large Video Handling

Videos longer than 10 minutes required special considerations:

Timeout Management: 30-minute timeout prevents hanging on problematic videos

Segment Validation: Verify segments cover full duration (catch incomplete embeddings)

Error Messages: Clear errors instead of silent failures ("Embedding generation incomplete")

The result? SAGE handles videos from 10 seconds to 20 minutes reliably.

We have more information on this matter in our Large Video Handling Guide.

Data Outputs

SAGE generates comprehensive comparison results:

Comparison Metrics

Total Segments: Number of 2-second segments compared

Differing Segments: Segments where distance exceeds threshold

Similarity Percentage: (total - differing) / total * 100

Difference Timeline: Timestamped segments with distance scores

Visualization

Synchronized Video Players: Side-by-side playback with timeline

Color-Coded Markers: Severity visualization (cyan and lime = similar, orange and red = different)

Interactive Timeline: Click markers to jump to differences

Difference List: Detailed breakdown by time segment

Optional AI-Analysis

Summary: High-level analysis of differences

Key Insights: Bullet points of notable findings

Time Segments: Specific moments where major differences occur

Usage Examples

Example 1: Compare Two Training Videos

Scenario: Compare before/after versions of a product demo video

# Upload videos
POST /upload-and-generate-embeddings
{ "file": <video_file_1> }
POST /upload-and-generate-embeddings
{ "file": <video_file_2> }
# Wait for embeddings (poll status endpoint)
GET /embedding-status/{embedding_id}
# Compare videos
POST /compare-local-videos?embedding_id1={id1}&embedding_id2={id2}&threshold=0.1

Response:

{
  "filename1": "demo_v1.mp4",
  "filename2": "demo_v2.mp4",
  "differences": [
    { "start_sec": 12.0, "end_sec": 14.0, "distance": 0.342 },
    { "start_sec": 45.0, "end_sec": 47.0, "distance": 0.521 }
  ],
  "total_segments": 180,
  "differing_segments": 2,
  "threshold_used": 0.1,
  "similarity_percent": 98.89
}

Interpretation: Videos are 98.89% similar. Two segments differ:

12-14 seconds: Moderate difference (distance 0.342)

45-47 seconds: Moderate difference (distance 0.521)
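
The "poll status endpoint" step in this example can be sketched as a small loop. This is a hedged illustration: wait_for_embedding is a hypothetical helper, get_status stands in for whatever function performs GET /embedding-status/{embedding_id} and returns the parsed JSON, and the "failed" status value and "error" field are assumptions about the payload (only "completed" appears in the code shown earlier).

```python
import time


def wait_for_embedding(get_status, interval_sec=5.0, timeout_sec=1800.0,
                       sleep=time.sleep, clock=time.monotonic):
    """Call get_status() until it reports completion, failure, or the timeout passes."""
    deadline = clock() + timeout_sec
    while True:
        status = get_status()
        state = status.get("status")
        if state == "completed":
            return status
        if state == "failed":  # assumed status value
            raise RuntimeError(status.get("error", "embedding generation failed"))
        if clock() >= deadline:
            raise TimeoutError("embedding was not ready before the timeout")
        sleep(interval_sec)
```

The 5-second interval and 30-minute timeout mirror the values used on the backend; once both videos report completion, the comparison request can be sent.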

Example 2: Fine-Tune Threshold for Subtle Differences

Scenario: Find very subtle differences (e.g., background changes)

# Use lower threshold for more sensitivity
POST /compare-local-videos?embedding_id1={id1}&embedding_id2={id2}&threshold=0.05

Result: Detects more differences, including subtle background or lighting changes.

Example 3: Generate AI Analysis

Scenario: Get human-readable explanation of differences

POST /openai-analysis
{
  "embedding_id1": "...",
  "embedding_id2": "...",
  "differences": [...],
  "threshold": 0.1,
  "video_duration": 360.0
}

Response:

{
  "analysis": "The videos show similar content overall, with two notable differences...",
  "key_insights": [
    "Product positioning changed between takes",
    "Background lighting adjusted at 45-second mark"
  ],
  "time_segments": [
    "12-14 seconds: Product demonstration angle",
    "45-47 seconds: Background scene change"
  ]
}

Real-World Use Cases

After building and testing SAGE, we've identified clear patterns for when it's most valuable:

Content Production

Before/After Comparisons: Compare edited vs raw footage

Version Control: Track changes across video iterations

Quality Assurance: Ensure consistency across video versions

Training & Education

Instructional Videos: Compare updated vs original versions

Course Consistency: Ensure all lessons maintain same format

Content Updates: Identify what changed in revised materials

Compliance & Verification

Ad Verification: Compare approved vs broadcast versions

Legal Documentation: Track changes in video evidence

Brand Consistency: Ensure marketing videos match brand guidelines

Why This Approach Works (And When It Doesn't)

SAGE excels at semantic comparison—finding when videos differ in content meaning, not just pixels. Here's when it works best:

Works Well When:

✅ Videos have different technical specs (resolution, codec, frame rate)

✅ You need to find content differences, not pixel differences

✅ Videos are similar in structure (same length, similar scenes)

✅ Differences are meaningful (scene changes, object additions, etc.)

Less Effective When:

❌ Videos are completely different (comparing unrelated content)

❌ You need frame-accurate timing (SAGE uses 2-second segments)

❌ Videos have extreme length differences (comparison truncates to shorter video)

❌ You need pixel-level accuracy (use traditional diff tools instead)

The Sweet Spot

SAGE is ideal for comparing variations of the same content—same script, same scene, but different takes, edits, or versions. It finds what matters without getting distracted by technical differences.

Performance Benchmarks

After processing hundreds of videos, here's what we've learned:

Processing Times

1-minute video: ~2-3 minutes total (upload + embedding)

5-minute video: ~5-8 minutes total

10-minute video: ~10-15 minutes total

15-minute video: ~15-25 minutes total

Breakdown: Upload is usually <1 minute. Embedding generation scales roughly linearly with duration.

Comparison Speed

Comparison calculation: <1 second for any video length

Timeline rendering: <100ms for typical videos

Video playback: Native browser performance

Key Insight: Comparison is fast once embeddings exist. The bottleneck is embedding generation, not comparison.

Accuracy

Semantic Differences: Captures meaningful content changes accurately

False Positives: Low with appropriate threshold (0.1 default works well)

False Negatives: Occasional misses on very subtle changes (can lower threshold)

Threshold Guidelines:

0.05: Very sensitive (finds subtle background changes)

0.1: Default (balanced sensitivity)

0.2: Less sensitive (only major differences)

0.5: Very insensitive (only dramatic changes)
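
A quick way to pick a threshold is to sweep the guideline values over the per-segment distances you already have. The sketch below assumes you kept the raw distances for every compared segment; the sweep_thresholds helper and the sample distances are made up for illustration.

```python
def sweep_thresholds(distances, thresholds=(0.05, 0.1, 0.2, 0.5)):
    """Count how many segments each threshold would flag as differing."""
    total = len(distances)
    rows = []
    for threshold in thresholds:
        differing = sum(1 for d in distances if d > threshold)
        similarity = (total - differing) / total * 100 if total else 0.0
        rows.append({
            "threshold": threshold,
            "differing_segments": differing,
            "similarity_percent": round(similarity, 2),
        })
    return rows


# Made-up per-segment distances for a short clip
sample_distances = [0.02, 0.04, 0.07, 0.12, 0.342, 0.521]
for row in sweep_thresholds(sample_distances):
    print(row)
```

Since the comparison itself takes under a second, re-running it at several thresholds is cheap; only embedding generation is slow.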

Conclusion: The Future of Video Comparison

SAGE started as an experiment: "What if we compared videos by meaning instead of pixels?" What we discovered is that semantic comparison changes how we think about video differences.

Instead of getting lost in pixel-level noise, we can now focus on what actually matters—content changes, scene differences, meaningful variations. And instead of manual frame-by-frame review, we can automate the comparison process.

The implications are interesting:

For Content Creators: Quickly identify what changed between video versions without manual review

For Developers: Build applications that understand video content, not just video files

For the Industry: As embedding models improve, comparison accuracy will improve with them

The most exciting part? We're just scratching the surface. As video understanding models evolve, SAGE's comparison capabilities can evolve with them. Today it's comparing segments. Tomorrow it might be comparing scenes, detecting specific objects, or understanding narrative structure.

The foundation is here. The rest is iteration—fittingly enough.

Additional Resources

GitHub Repository: SAGE on GitHub

TwelveLabs Documentation: Marengo Embeddings Guide

AWS S3: Multipart Upload Best Practices

Cosine Distance: Understanding Vector Similarity

Appendix: Technical Details

Embedding Model

Model: Marengo-retrieval-2.7

Segment Length: 2 seconds

Embedding Dimensions: 1024 (per segment)

Scopes: ["clip", "video"] (both clip-level and video-level embeddings)

Distance Metrics

Cosine Distance: 1 - (dot(v1, v2) / (norm(v1) * norm(v2)))

Range: 0 (identical) to 2 (opposite)

Interpretation: 0-0.1 (very similar), 0.1-0.3 (somewhat different), 0.3-0.7 (moderately different), 0.7+ (very different)
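
The interpretation bands above translate directly into a lookup. In this sketch the describe_distance name is hypothetical, and treating each boundary value (0.1, 0.3, 0.7) as belonging to the higher band is an assumption, since the ranges as written overlap at their edges.

```python
import bisect

BAND_EDGES = [0.1, 0.3, 0.7]
BAND_LABELS = [
    "very similar",
    "somewhat different",
    "moderately different",
    "very different",
]


def describe_distance(distance):
    """Map a cosine distance to one of the interpretation bands."""
    # Clamp to the valid range to absorb tiny floating-point overshoot
    clamped = min(max(distance, 0.0), 2.0)
    # bisect_right puts a value equal to an edge into the higher band
    return BAND_LABELS[bisect.bisect_right(BAND_EDGES, clamped)]


for d in (0.05, 0.342, 0.521, 1.2):
    print(f"{d}: {describe_distance(d)}")
```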

S3 Configuration

Chunk Size: 10MB

Multipart Threshold: Always use multipart (more reliable)

Presigned URL Expiration: 1 hour (3600 seconds)

Region: Configurable (default: us-east-2)

API Endpoints

POST /validate-key - Validate TwelveLabs API key

POST /upload-and-generate-embeddings - Upload video and start embedding generation

GET /embedding-status/{embedding_id} - Check embedding generation status

POST /compare-local-videos - Compare two videos by embedding IDs

POST /openai-analysis - Generate AI analysis of differences (optional)

GET /serve-video/{video_id} - Get presigned URL for video playback

GET /health - Health check endpoint

The Embedding Solution

TwelveLabs Marengo embeddings solve this by representing what's in the video, not what pixels it contains. Each 2-second segment gets converted into a high-dimensional vector that captures:

Visual content (objects, scenes, actions)

Temporal patterns (movement, transitions)

Semantic meaning (what's happening, not how it looks)

Comparing these embeddings tells us when videos differ in content, not just pixels.

Demo Application

SAGE provides a streamlined video comparison workflow:

Upload Videos: Upload two videos (up to 2 at a time) and watch as they're processed with real-time status updates—from S3 upload to embedding generation completion.

Automatic Comparison: Once both videos are ready, SAGE automatically compares them using semantic embeddings, identifying differences at the segment level without manual frame-by-frame review.

Interactive Analysis: Explore differences through synchronized side-by-side playback, a color-coded timeline showing where videos differ, and detailed segment-by-segment breakdowns with similarity scores.

The magic happens in real-time: watch embedding generation progress, see similarity percentages calculated instantly, and track differences across the timeline with precise timestamps. Jump to any difference marker to see exactly what changed between your videos.

You can explore the complete demo application and find the full source code on GitHub, or view a tutorial video demonstrating how the system works:

How SAGE Works

SAGE implements a sophisticated video comparison pipeline that combines AWS S3 storage, TwelveLabs embeddings, and intelligent visualization:

System Architecture

Preparation Steps

1. Clone the Repository

The code is publicly available here: https://github.com/aahilshaikh-twlbs/SAGE

git clone https://github.com/aahilshaikh-twlbs/SAGE.git cd

2. Set up Backend

cd backend python3 -m venv .venv source .venv/bin/activate # Windows: .venv\Scripts\activate pip install -r requirements.txt cp env.example .env # Add your API keys to .env

3. Set up Frontend

cd ../frontend npm install # or bun installcp .env.local.example .env.local # Set NEXT_PUBLIC_API_URL=http://localhost:8000

4. Configure AWS S3

# Configure AWS credentials (using AWS SSO or IAM) aws configure --profile dev # Or set environment variables:export AWS_ACCESS_KEY_ID=your_access_key export AWS_SECRET_ACCESS_KEY=your_secret_key export AWS_REGION

5. Start the Application

# Terminal 1: Backendcd backend python app.py # Terminal 2: Frontendcd frontend npm run dev# or bun dev

Once you've completed these steps, navigate to http://localhost:3000to access SAGE!

Implementation Walkthrough

Let's walk through the core components that power SAGE's video comparison system.

1. Streaming Video Upload to S3

SAGE handles large video files efficiently using streaming multipart uploads:

async def upload_to_s3_streaming(file: UploadFile) -> str: """Upload a file to S3 using streaming to avoid memory issues.""" file_key = f"videos/{uuid.uuid4()}_{file.filename}" # Use multipart upload for large files response = s3_client.create_multipart_upload( Bucket=S3_BUCKET_NAME, Key=file_key, ContentType=file.content_type ) upload_id = response['UploadId'] parts = [] part_number = 1 chunk_size = 10 * 1024 * 1024# 10MB chunks while True: chunk = await file.read(chunk_size) if not chunk: break part_response = s3_client.upload_part( Bucket=S3_BUCKET_NAME, Key=file_key, PartNumber=part_number, UploadId=upload_id, Body=chunk ) parts.append({ 'ETag': part_response['ETag'], 'PartNumber': part_number }) part_number += 1 # Complete multipart upload s3_client.complete_multipart_upload( Bucket=S3_BUCKET_NAME, Key=file_key, UploadId=upload_id, MultipartUpload={'Parts': parts} ) return f"s3://{S3_BUCKET_NAME}/{file_key}"

Key Design Decisions:

10MB Chunks: Balances upload efficiency with memory usage

Streaming: Processes file in chunks, never loads entire file into memory

Multipart Upload: Required for files >5GB, recommended for files >100MB

Presigned URLs: Generate temporary URLs for TwelveLabs to access videos securely

2. Embedding Generation with TwelveLabs

SAGE generates embeddings asynchronously using TwelveLabs Marengo-retrieval-2.7:

async def generate_embeddings_async(embedding_id: str, s3_url: str, api_key: str): """Asynchronously generate embeddings for a video from S3.""" # Get TwelveLabs client tl = get_twelve_labs_client(api_key) # Generate presigned URL for TwelveLabs to access the video presigned_url = get_s3_presigned_url(s3_url) # Create embedding task using presigned HTTPS URL task = tl.embed.task.create( model_name="Marengo-retrieval-2.7", video_url=presigned_url, video_clip_length=2,# 2-second segments video_embedding_scopes=["clip", "video"] ) # Wait for completion with timeout task.wait_for_done(sleep_interval=5, timeout=1800)# 30 minutes # Get completed task completed_task = tl.embed.task.retrieve(task.id) # Validate embeddings were generatedif not completed_task.video_embedding or not completed_task.video_embedding.segments: raise Exception("Embedding generation failed") # Store embeddings and duration embedding_storage[embedding_id].update({ "status": "completed", "embeddings": completed_task.video_embedding, "duration": last_segment.end_offset_sec, "task_id": task.id })

Key Features:

2-Second Segments: Balances granularity with processing time

Async Processing: Non-blocking, handles multiple videos via queue

Timeout Handling: 30-minute timeout prevents hanging on problematic videos

Validation: Ensures embeddings cover full video duration

3. Semantic Video Comparison

SAGE compares videos using cosine distance on embeddings:

async def compare_local_videos( embedding_id1: str, embedding_id2: str, threshold: float = 0.1, distance_metric: str = "cosine" ): """Compare two videos using their embedding IDs.""" # Get embedding segments segments1 = extract_segments(embedding_storage[embedding_id1]) segments2 = extract_segments(embedding_storage[embedding_id2]) differing_segments = [] min_segments = min(len(segments1), len(segments2)) # Compare corresponding segmentsfor i in range(min_segments): seg1 = segments1[i] seg2 = segments2[i] # Calculate cosine distance v1 = np.array(seg1["embedding"], dtype=np.float32) v2 = np.array(seg2["embedding"], dtype=np.float32) dot = np.dot(v1, v2) norm1 = np.linalg.norm(v1) norm2 = np.linalg.norm(v2) distance = 1.0 - (dot / (norm1 * norm2)) if norm1 > 0 and norm2 > 0 else 1.0 # Flag segments that exceed thresholdif distance > threshold: differing_segments.append({ "start_sec": seg1["start_offset_sec"], "end_sec": seg1["end_offset_sec"], "distance": distance }) return { "differences": differing_segments, "total_segments": min_segments, "differing_segments": len(differing_segments), "similarity_percent": ((min_segments - len(differing_segments)) / min_segments * 100) }

Why Cosine Distance?

Scale Invariant: Normalized vectors ignore magnitude differences

Semantic Focus: Measures similarity in meaning, not pixel values

Interpretable: 0 = identical, 1 = orthogonal, 2 = opposite

Configurable Threshold: Adjust sensitivity for different use cases

4. Synchronized Timeline Visualization

The frontend creates an interactive timeline with synchronized playback:

// Synchronized video playbackconst handlePlayPause = () => { if (video1Ref.current && video2Ref.current) { if (isPlaying) { video1Ref.current.pause(); video2Ref.current.pause(); } else { video1Ref.current.play(); video2Ref.current.play(); } setIsPlaying(!isPlaying); } }; // Jump to specific time in both videosconst seekToTime = (time: number) => { const constrainedTime = Math.min( time, Math.min(video1Data.duration, video2Data.duration) ); video1Ref.current.currentTime = constrainedTime; video2Ref.current.currentTime = constrainedTime; setCurrentTime(constrainedTime); }; // Color-coded difference markersconst getSeverityColor = (distance: number) => { if (distance >= 1.5) return 'bg-red-600';// Completely differentif (distance >= 1.0) return 'bg-red-500';// Very differentif (distance >= 0.7) return 'bg-orange-500';// Significantly differentif (distance >= 0.5) return 'bg-amber-500';// Moderately differentif (distance >= 0.3) return 'bg-yellow-500';// Somewhat differentif (distance >= 0.1) return 'bg-lime-500';// Slightly differentreturn 'bg-cyan-500';// Very similar };

Visualization Features:

Synchronized Playback: Both videos play/pause together

Timeline Markers: Color-coded segments show difference severity

Click-to-Seek: Click any marker to jump to that time

Similarity Score: Percentage similarity calculated from segments

5. Optional AI-Powered Analysis

SAGE optionally uses OpenAI to generate human-readable analysis:

async def generate_openai_analysis( embedding_id1: str, embedding_id2: str, differences: List[DifferenceSegment], threshold: float, video_duration: float ): """Generate AI-powered analysis of video differences.""" prompt = f""" Analyze the differences between two videos based on the following data: Video 1: {embed_data1.get('filename', 'Unknown')} Video 2: {embed_data2.get('filename', 'Unknown')} Total Duration: {video_duration:.1f} seconds Similarity Threshold: {threshold} Number of Differences: {len(differences)} Differences detected at these time segments: {chr(10).join([f"- {d.start_sec:.1f}s to {d.end_sec:.1f}s (distance: {d.distance:.3f})" for d in differences[:20]])} Please provide: 1. A concise analysis of what these differences might represent 2. Key insights about the comparison 3. Notable time segments where major differences occur """ response = openai.ChatCompletion.create( model="gpt-4", messages=[ {"role": "system", "content": "You are an expert video analysis assistant."}, {"role": "user", "content": prompt} ], max_tokens=500, temperature=0.7 ) return { "analysis": response.choices[0].message.content, "key_insights": extract_insights(response), "time_segments": extract_segments(response) }

Key Design Decisions

1. Segment-Based Comparison Over Frame-Based

We chose 2-second segments instead of frame-by-frame comparison for three reasons:

Temporal Context: Segments capture movement and action, not just static frames

Computational Efficiency: Fewer comparisons (e.g., 30 segments vs 1,800 frames for a 1-minute video at 30 fps)

Semantic Accuracy: Embeddings understand "what's happening" better than individual frames

Trade-off: Less granular timing (2-second precision vs frame-accurate), but much more meaningful differences.
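To make the efficiency argument concrete, here is a minimal sketch of the arithmetic. The function name and the 30 fps frame rate are assumptions for illustration; only the 2-second segment length comes from the text above.

```python
import math

def comparison_counts(duration_sec: float, segment_sec: float = 2.0, fps: float = 30.0) -> tuple[int, int]:
    """Return (segment_count, frame_count) for a video of the given duration."""
    # A trailing partial segment still counts as one segment
    segments = math.ceil(duration_sec / segment_sec)
    # fps is an assumed frame rate, not something SAGE prescribes
    frames = round(duration_sec * fps)
    return segments, frames

segments, frames = comparison_counts(60.0)
print(segments, frames)  # 30 1800
```

For a 1-minute video that is a 60x reduction in the number of pairwise comparisons.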

2. Streaming Uploads Over In-Memory Processing

Large videos can be several gigabytes. Loading entire files into memory would crash servers:

Memory Safety: Streaming processes files in 10MB chunks

Scalability: Server stays responsive even with multiple large uploads

S3 Integration: Direct upload to S3, then presigned URLs for TwelveLabs

Trade-off: More complex upload logic, but enables handling videos of any size.
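The core of chunked streaming can be sketched with a small generator. This is an illustrative stand-in rather than SAGE's actual upload code: `iter_chunks` is a hypothetical name, and a real upload would hand each chunk to an S3 multipart upload instead of collecting sizes.

```python
import io
from typing import BinaryIO, Iterator

CHUNK_SIZE = 10 * 1024 * 1024  # 10MB, the chunk size described above

def iter_chunks(stream: BinaryIO, chunk_size: int = CHUNK_SIZE) -> Iterator[bytes]:
    """Yield successive chunks so only one chunk is held in memory at a time."""
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:  # empty read means end of stream
            break
        yield chunk

# Usage with an in-memory stand-in for an uploaded file (25 bytes, 10-byte chunks)
fake_upload = io.BytesIO(b"x" * 25)
sizes = [len(chunk) for chunk in iter_chunks(fake_upload, chunk_size=10)]
print(sizes)  # [10, 10, 5]
```

Peak memory use is bounded by the chunk size rather than the file size, which is what keeps the server responsive under several concurrent uploads.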

3. Cosine Distance Over Euclidean Distance

We use cosine distance for semantic comparison:

Scale Invariant: Ignores vector magnitude, so scores stay comparable across different video qualities

Semantic Focus: Measures meaning similarity, not magnitude

Interpretable: Clear thresholds (0.1 = subtle, 0.5 = moderate, 1.0 = major)

Trade-off: Less intuitive than Euclidean distance, but better for semantic comparison.
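The scale-invariance point is easy to see with two vectors that point in the same direction but differ in magnitude. The sketch below uses plain Python and toy 3-dimensional vectors as stand-ins for real embeddings.

```python
import math
from typing import Sequence

def cosine_distance(v1: Sequence[float], v2: Sequence[float]) -> float:
    """1 - cosine similarity; 0 means same direction, 2 means opposite."""
    dot = sum(a * b for a, b in zip(v1, v2))
    norm1 = math.sqrt(sum(a * a for a in v1))
    norm2 = math.sqrt(sum(b * b for b in v2))
    if norm1 == 0 or norm2 == 0:
        raise ValueError("cosine distance is undefined for zero vectors")
    return 1.0 - dot / (norm1 * norm2)

def euclidean_distance(v1: Sequence[float], v2: Sequence[float]) -> float:
    """Straight-line distance, which is sensitive to magnitude."""
    return math.dist(v1, v2)

a = [1.0, 2.0, 3.0]
b = [2.0, 4.0, 6.0]  # same direction as a, twice the magnitude

print(cosine_distance(a, b))     # approximately 0
print(euclidean_distance(a, b))  # clearly non-zero (sqrt(14))
```

Cosine distance treats `a` and `b` as identical, while Euclidean distance reports a gap that reflects only magnitude.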

4. Queue-Based Processing Over Parallel

Embedding generation can take 5-30 minutes per video. We process sequentially:

Rate Limit Safety: Avoids hitting TwelveLabs API rate limits

Resource Management: One video at a time uses consistent resources

Error Isolation: Failed videos don't block others

Trade-off: Slower total throughput, but more reliable and predictable.
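A sequential queue with per-item error handling might look like the following sketch. `run_sequentially` and `fake_embed` are hypothetical names, and `fake_embed` stands in for the real embedding request.

```python
import asyncio
from typing import Awaitable, Callable

async def run_sequentially(
    video_ids: list[str],
    process: Callable[[str], Awaitable[str]],
) -> dict[str, str]:
    """Process queued videos one at a time; record failures without stopping the queue."""
    queue = asyncio.Queue()
    for video_id in video_ids:
        queue.put_nowait(video_id)

    results: dict[str, str] = {}
    while not queue.empty():
        video_id = await queue.get()
        try:
            results[video_id] = await process(video_id)
        except Exception as exc:
            # Error isolation: a failed video is recorded and the next one proceeds
            results[video_id] = f"failed: {exc}"
        finally:
            queue.task_done()
    return results

async def fake_embed(video_id: str) -> str:
    """Hypothetical stand-in for an embedding request."""
    await asyncio.sleep(0)
    if video_id == "bad":
        raise RuntimeError("embedding error")
    return "ready"

results = asyncio.run(run_sequentially(["a", "bad", "b"], fake_embed))
print(results)  # {'a': 'ready', 'bad': 'failed: embedding error', 'b': 'ready'}
```

Because only one request is in flight at a time, the request rate against the API is bounded by how long each video takes.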

5. In-Memory Embedding Storage Over Database

We store embeddings in memory rather than persisting them to a database:

Performance: Fast access during comparison (no database queries)

Simplicity: No schema migrations or database management

Temporary Nature: Embeddings are session-specific, don't need persistence

Trade-off: Lost on server restart, but acceptable for comparison workflow.
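As a rough illustration of this design, an in-memory store can be as simple as a dictionary keyed by embedding ID. The record fields, status strings, and function names below are assumptions, not SAGE's actual data model.

```python
import uuid
from dataclasses import dataclass, field

@dataclass
class EmbeddingRecord:
    filename: str
    status: str = "processing"  # assumed status values: "processing", "completed"
    segments: list = field(default_factory=list)

# Process-local store: its contents disappear when the server restarts
embedding_store: dict[str, EmbeddingRecord] = {}

def register_video(filename: str) -> str:
    """Create a record for a new upload and return its embedding ID."""
    embedding_id = str(uuid.uuid4())
    embedding_store[embedding_id] = EmbeddingRecord(filename=filename)
    return embedding_id

def mark_ready(embedding_id: str, segments: list) -> None:
    """Attach the generated segments and flag the record as complete."""
    record = embedding_store[embedding_id]
    record.segments = segments
    record.status = "completed"
```

Lookups during comparison are plain dictionary reads, which is where the performance benefit comes from.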

Performance Engineering: What We Learned

Building SAGE taught us valuable lessons about handling video processing at scale:

The 80/20 Rule Applied

We spent 80% of optimization effort on three things:

Streaming Uploads: Chunked uploads prevent memory exhaustion. Loading a 2GB file into memory risks crashing the server; streaming it keeps the server stable.

Async Processing: Non-blocking embedding generation keeps the API responsive. Users can upload multiple videos without waiting for each to complete.

Segment Validation: Ensuring embeddings cover full video duration prevents silent failures. We validate segment count, coverage, and duration before accepting results.
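A coverage check along these lines could be sketched as follows. The `Segment` type, the function name, and the 2-second tolerance are assumptions; the error wording borrows the "Embedding generation incomplete" message used elsewhere in this tutorial.

```python
from dataclasses import dataclass

@dataclass
class Segment:
    start_sec: float
    end_sec: float

def validate_coverage(segments: list[Segment], duration_sec: float, tolerance_sec: float = 2.0) -> None:
    """Raise ValueError if the segments leave a gap or stop short of the video's end."""
    if not segments:
        raise ValueError("Embedding generation incomplete: no segments returned")

    previous_end = 0.0
    for seg in sorted(segments, key=lambda s: s.start_sec):
        # A gap larger than the tolerance means part of the video has no embedding
        if seg.start_sec - previous_end > tolerance_sec:
            raise ValueError(
                f"Embedding generation incomplete: gap from {previous_end:.1f}s to {seg.start_sec:.1f}s"
            )
        previous_end = max(previous_end, seg.end_sec)

    if duration_sec - previous_end > tolerance_sec:
        raise ValueError(
            f"Embedding generation incomplete: segments end at {previous_end:.1f}s of {duration_sec:.1f}s"
        )
```

Calling this before storing results turns a silently truncated embedding into an explicit error.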

What Didn't Work (And Why)

We tried several optimizations that didn't pan out:

Parallel Embedding Generation: Hit TwelveLabs rate limits. Sequential processing was more reliable.

Caching Embeddings: Each video is unique, so caching didn't help. Regenerating embeddings was simpler than maintaining a cache.

Frame-Level Comparison: Too granular, too slow, too many false positives. Segment-level was the sweet spot.

Large Video Handling

Videos longer than 10 minutes required special considerations:

Timeout Management: 30-minute timeout prevents hanging on problematic videos

Segment Validation: Verify segments cover full duration (catch incomplete embeddings)

Error Messages: Clear errors instead of silent failures ("Embedding generation incomplete")

The result? SAGE handles videos from 10 seconds to 20 minutes reliably.

We have more information on this matter in our Large Video Handling Guide.
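The timeout behaviour described in this section can be approximated with `asyncio.wait_for` wrapped around a polling loop. This is a sketch under assumptions: `check_status` is a hypothetical coroutine returning a status string, and the "completed" and "failed" values are illustrative.

```python
import asyncio
from typing import Awaitable, Callable

async def wait_for_embedding(
    check_status: Callable[[], Awaitable[str]],
    timeout_sec: float = 30 * 60,   # 30-minute ceiling, as described above
    poll_interval_sec: float = 5.0,
) -> str:
    """Poll until the embedding is ready, failing loudly on error or timeout."""

    async def poll() -> str:
        while True:
            status = await check_status()
            if status == "completed":
                return status
            if status == "failed":
                raise RuntimeError("Embedding generation failed")
            await asyncio.sleep(poll_interval_sec)

    try:
        return await asyncio.wait_for(poll(), timeout=timeout_sec)
    except asyncio.TimeoutError:
        # Surface a clear message instead of hanging or failing silently
        raise TimeoutError("Embedding generation incomplete: timed out") from None
```

The caller sees either a finished status or an explicit exception, never an indefinitely pending request.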

Data Outputs

SAGE generates comprehensive comparison results:

Comparison Metrics

Total Segments: Number of 2-second segments compared

Differing Segments: Segments where distance exceeds threshold

Similarity Percentage: (total - differing) / total * 100

Difference Timeline: Timestamped segments with distance scores
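These metrics can be derived from a list of per-segment distances. The sketch below assumes one distance per 2-second segment, in order; the function name is hypothetical, and the output keys mirror the response fields shown in the usage examples.

```python
def summarize_comparison(distances: list[float], threshold: float = 0.1, segment_sec: float = 2.0) -> dict:
    """Build comparison metrics from per-segment distances."""
    differences = [
        {
            "start_sec": index * segment_sec,
            "end_sec": (index + 1) * segment_sec,
            "distance": round(distance, 3),
        }
        for index, distance in enumerate(distances)
        if distance > threshold  # a segment differs when its distance exceeds the threshold
    ]
    total = len(distances)
    differing = len(differences)
    similarity = round((total - differing) / total * 100, 2) if total else 0.0
    return {
        "differences": differences,
        "total_segments": total,
        "differing_segments": differing,
        "threshold_used": threshold,
        "similarity_percent": similarity,
    }

# 180 near-identical segments with two that differ (made-up values)
distances = [0.02] * 180
distances[6] = 0.342
distances[22] = 0.521
summary = summarize_comparison(distances)
print(summary["differing_segments"], summary["similarity_percent"])  # 2 98.89
```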

Visualization

Synchronized Video Players: Side-by-side playback with timeline

Color-Coded Markers: Severity visualization (cyan and lime = similar, through yellow and orange, to red = different)

Interactive Timeline: Click markers to jump to differences

Difference List: Detailed breakdown by time segment

Optional AI Analysis

Summary: High-level analysis of differences

Key Insights: Bullet points of notable findings

Time Segments: Specific moments where major differences occur

Usage Examples

Example 1: Compare Two Training Videos

Scenario: Compare before/after versions of a product demo video

# Upload videos
POST /upload-and-generate-embeddings
{ "file": <video_file_1> }

POST /upload-and-generate-embeddings
{ "file": <video_file_2> }

# Wait for embeddings (poll status endpoint)
GET /embedding-status/{embedding_id}

# Compare videos
POST /compare-local-videos?embedding_id1={id1}&embedding_id2={id2}&threshold=0.1

Response:

{
  "filename1": "demo_v1.mp4",
  "filename2": "demo_v2.mp4",
  "differences": [
    { "start_sec": 12.0, "end_sec": 14.0, "distance": 0.342 },
    { "start_sec": 45.0, "end_sec": 47.0, "distance": 0.521 }
  ],
  "total_segments": 180,
  "differing_segments": 2,
  "threshold_used": 0.1,
  "similarity_percent": 98.89
}

Interpretation: Videos are 98.89% similar. Two segments differ:

12-14 seconds: Moderate difference (distance 0.342)

45-47 seconds: Moderate difference (distance 0.521)

Example 2: Fine-Tune Threshold for Subtle Differences

Scenario: Find very subtle differences (e.g., background changes)

# Use lower threshold for more sensitivity
POST /compare-local-videos?embedding_id1={id1}&embedding_id2={id2}&threshold=0.05

Result: Detects more differences, including subtle background or lighting changes.
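To see how the threshold changes the number of flagged segments, here is a small sweep over made-up distances. The values are illustrative only and do not come from a real comparison.

```python
def count_differing(distances: list[float], threshold: float) -> int:
    """Count segments whose distance exceeds the threshold."""
    return sum(distance > threshold for distance in distances)

# Made-up per-segment distances for illustration
distances = [0.02, 0.07, 0.04, 0.15, 0.03, 0.34, 0.06]

for threshold in (0.05, 0.1, 0.2):
    print(threshold, count_differing(distances, threshold))
# 0.05 4
# 0.1 2
# 0.2 1
```

Lowering the threshold from 0.1 to 0.05 doubles the flagged segments in this toy data, which is the sensitivity trade-off in miniature.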

Example 3: Generate AI Analysis

Scenario: Get human-readable explanation of differences

POST /openai-analysis
{
  "embedding_id1": "...",
  "embedding_id2": "...",
  "differences": [...],
  "threshold": 0.1,
  "video_duration": 360.0
}

Response:

{
  "analysis": "The videos show similar content overall, with two notable differences...",
  "key_insights": [
    "Product positioning changed between takes",
    "Background lighting adjusted at 45-second mark"
  ],
  "time_segments": [
    "12-14 seconds: Product demonstration angle",
    "45-47 seconds: Background scene change"
  ]
}

Real-World Use Cases

After building and testing SAGE, we've identified clear patterns for when it's most valuable:

Content Production

Before/After Comparisons: Compare edited vs raw footage

Version Control: Track changes across video iterations

Quality Assurance: Ensure consistency across video versions

Training & Education

Instructional Videos: Compare updated vs original versions

Course Consistency: Ensure all lessons maintain same format

Content Updates: Identify what changed in revised materials

Compliance & Verification

Ad Verification: Compare approved vs broadcast versions

Legal Documentation: Track changes in video evidence

Brand Consistency: Ensure marketing videos match brand guidelines

Why This Approach Works (And When It Doesn't)

SAGE excels at semantic comparison—finding when videos differ in content meaning, not just pixels. Here's when it works best:

Works Well When:

✅ Videos have different technical specs (resolution, codec, frame rate)

✅ You need to find content differences, not pixel differences

✅ Videos are similar in structure (same length, similar scenes)

✅ Differences are meaningful (scene changes, object additions, etc.)

Less Effective When:

❌ Videos are completely different (comparing unrelated content)

❌ You need frame-accurate timing (SAGE uses 2-second segments)

❌ Videos have extreme length differences (comparison truncates to shorter video)

❌ You need pixel-level accuracy (use traditional diff tools instead)
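The truncation behaviour for videos of different lengths can be pictured as pairing segments by index and stopping at the shorter list. This is a sketch of that idea with a hypothetical function name, not SAGE's actual alignment code.

```python
def align_to_shorter(segments_a: list, segments_b: list) -> tuple[list, int]:
    """Pair segments by index; return the pairs and how many segments were left uncompared."""
    pairs = list(zip(segments_a, segments_b))  # zip stops at the shorter input
    dropped = max(len(segments_a), len(segments_b)) - len(pairs)
    return pairs, dropped

# A 6-minute video (180 segments) against a 15-minute video (450 segments)
pairs, dropped = align_to_shorter(list(range(180)), list(range(450)))
print(len(pairs), dropped)  # 180 270
```

Here 270 segments of the longer video are never compared, which is why large length differences reduce how much the result can tell you.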

The Sweet Spot

SAGE is ideal for comparing variations of the same content—same script, same scene, but different takes, edits, or versions. It finds what matters without getting distracted by technical differences.

Performance Benchmarks

After processing hundreds of videos, here's what we've learned:

Processing Times

1-minute video: ~2-3 minutes total (upload + embedding)

5-minute video: ~5-8 minutes total

10-minute video: ~10-15 minutes total

15-minute video: ~15-25 minutes total

Breakdown: Upload is usually <1 minute. Embedding generation scales roughly linearly with duration.

Comparison Speed

Comparison calculation: <1 second for any video length

Timeline rendering: <100ms for typical videos

Video playback: Native browser performance

Key Insight: Comparison is fast once embeddings exist. The bottleneck is embedding generation, not comparison.

Accuracy

Semantic Differences: Captures meaningful content changes accurately

False Positives: Low with appropriate threshold (0.1 default works well)

False Negatives: Occasional misses on very subtle changes (can lower threshold)

Threshold Guidelines:

0.05: Very sensitive (finds subtle background changes)

0.1: Default (balanced sensitivity)

0.2: Less sensitive (only major differences)

0.5: Very insensitive (only dramatic changes)

Conclusion: The Future of Video Comparison

SAGE started as an experiment: "What if we compared videos by meaning instead of pixels?" What we discovered is that semantic comparison changes how we think about video differences.

Instead of getting lost in pixel-level noise, we can now focus on what actually matters—content changes, scene differences, meaningful variations. And instead of manual frame-by-frame review, we can automate the comparison process.

The implications are interesting:

For Content Creators: Quickly identify what changed between video versions without manual review

For Developers: Build applications that understand video content, not just video files

For the Industry: As embedding models improve, comparison accuracy will improve with them

The most exciting part? We're just scratching the surface. As video understanding models evolve, SAGE's comparison capabilities can evolve with them. Today it's comparing segments. Tomorrow it might be comparing scenes, detecting specific objects, or understanding narrative structure.

The foundation is here. The rest is iteration—fittingly enough.

Additional Resources

GitHub Repository: SAGE on GitHub

TwelveLabs Documentation: Marengo Embeddings Guide

AWS S3: Multipart Upload Best Practices

Cosine Distance: Understanding Vector Similarity

Appendix: Technical Details

Embedding Model

Model: Marengo-retrieval-2.7

Segment Length: 2 seconds

Embedding Dimensions: 1024 (per segment)

Scopes: ["clip", "video"] (both clip-level and video-level embeddings)

Distance Metrics

Cosine Distance: 1 - (dot(v1, v2) / (norm(v1) * norm(v2)))

Range: 0 (identical) to 2 (opposite)

Interpretation: 0-0.1 (very similar), 0.1-0.3 (somewhat different), 0.3-0.7 (moderately different), 0.7+ (very different)
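The interpretation bands above translate directly into a lookup function. How the boundary values are assigned (for example, whether exactly 0.1 counts as "very similar" or "somewhat different") is an assumption in this sketch.

```python
def describe_distance(distance: float) -> str:
    """Map a cosine distance to the interpretation bands listed above."""
    if distance < 0.1:
        return "very similar"
    if distance < 0.3:
        return "somewhat different"
    if distance < 0.7:
        return "moderately different"
    return "very different"

print(describe_distance(0.342))  # moderately different
print(describe_distance(0.05))   # very similar
```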

S3 Configuration

Chunk Size: 10MB

Multipart Threshold: Always use multipart (more reliable)

Presigned URL Expiration: 1 hour (3600 seconds)

Region: Configurable (default: us-east-2)
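One consequence of a fixed chunk size is worth checking: S3 multipart uploads allow at most 10,000 parts, and every part except the last must be at least 5 MiB. The sketch below computes the part count for a given file size. It treats the 10MB chunk as 10 MiB, which is an assumption, and the function name is hypothetical.

```python
import math

MAX_PARTS = 10_000                 # S3 multipart upload part limit
MIN_PART_SIZE = 5 * 1024 * 1024    # S3 minimum part size (except the last part)
CHUNK_SIZE = 10 * 1024 * 1024      # chunk size from the configuration above, read as 10 MiB

def part_count(file_size_bytes: int, chunk_size: int = CHUNK_SIZE) -> int:
    """Return how many parts an upload needs, or raise if it cannot fit S3's limits."""
    if chunk_size < MIN_PART_SIZE:
        raise ValueError("chunk size is below the S3 minimum part size")
    parts = max(1, math.ceil(file_size_bytes / chunk_size))
    if parts > MAX_PARTS:
        raise ValueError("file needs more than 10,000 parts; increase the chunk size")
    return parts

print(part_count(2 * 1024**3))  # 205 parts for a 2 GiB file
```

With 10 MiB chunks the ceiling is roughly 97 GiB per file, so larger uploads would need a larger chunk size.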

API Endpoints

POST /validate-key - Validate TwelveLabs API key

POST /upload-and-generate-embeddings - Upload video and start embedding generation

GET /embedding-status/{embedding_id} - Check embedding generation status

POST /compare-local-videos - Compare two videos by embedding IDs

POST /openai-analysis - Generate AI analysis of differences (optional)

GET /serve-video/{video_id} - Get presigned URL for video playback

GET /health - Health check endpoint


© 2021-2026 TwelveLabs, Inc. All Rights Reserved
