Tutorial

Building Recurser: Iterative AI Video Enhancement with TwelveLabs and Google Veo

Aahil Shaikh

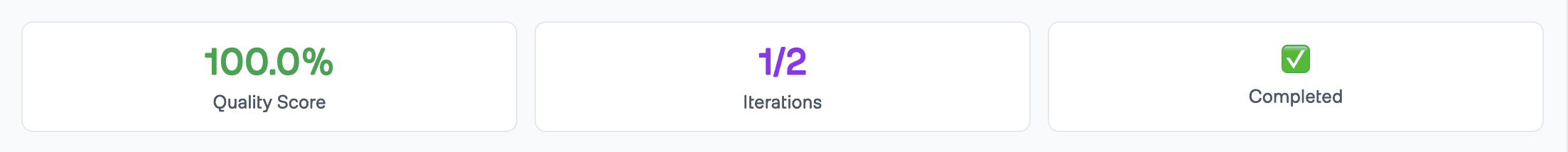

AI-generated videos often contain telltale artifacts—unnatural facial symmetry, jerky movements, inconsistent lighting—that reveal their synthetic origins. This tutorial shows you how to build Recurser, a production-ready system that automatically detects these flaws using TwelveLabs Pegasus and Marengo, generates improved prompts with Google Gemini, and regenerates videos until they achieve photorealistic quality (100% confidence score). Recurser combines video-native AI understanding with intelligent prompt engineering to transform AI-generated videos from "obviously synthetic" to "indistinguishable from real" through an automated feedback loop. By the end of this tutorial, you'll have a complete video enhancement system ready to deploy and extend.



Jan 13, 2026

18 Minutes


Introduction

You've just generated a video with Google Gemini’s Veo2. It looks decent at first glance—the prompt was good, the composition is solid. But something feels off. On closer inspection, you notice the cat's fur moves unnaturally, shadows don't quite align with the lighting, and there's a subtle "uncanny valley" effect that makes it feel synthetic.

The problem? Most AI video generation is a one-shot process. You generate once, maybe tweak the prompt slightly, generate again. But how do you know what's actually wrong? And more importantly, how do you fix it without spending hours manually reviewing every frame?

This is why we built Recurser—an automated system that doesn't just detect AI problems, but actively fixes them through smart iteration. Instead of guessing what's wrong, Recurser uses AI video understanding to find specific issues, then automatically improves prompts to regenerate better versions until the video looks real.

The key insight? Fixing the prompt is more powerful than fixing the video. Traditional post-processing can only address what's already generated, but improving prompts addresses the root cause—ensuring the next generation is fundamentally better.

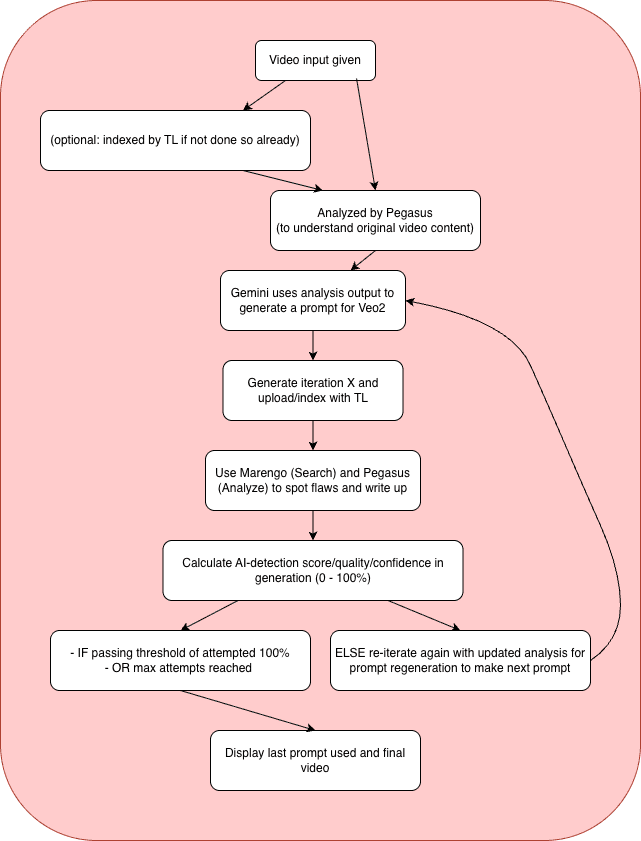

Recurser creates a feedback loop:

Detect problems using TwelveLabs Marengo (checks 250+ different issues)

Understand what's in the video with TwelveLabs Pegasus (converts video to text description)

Improve the prompt using Google Gemini (makes the prompt better)

Regenerate with Veo2 (creates a new video with the improved prompt)

Repeat until quality reaches 100% or max iterations

The result? Videos that start at around 60% quality can systematically improve to 100% over 1-3 iterations, with no manual intervention.

Prerequisites

Before starting, ensure you have:

Python 3.12+ installed

Node.js 18+ installed

API Keys:

TwelveLabs API Key (for Pegasus and Marengo)

Google Gemini API Key (for prompt enhancement)

Google Veo 2.0 access (for video generation)

Git for cloning the repository

Basic familiarity with Python, FastAPI, and Next.js

The Problem with One-Shot Generation

Here's what we discovered: AI video generation models don't always produce the same result. Run the same prompt twice and you get different videos. But more importantly, models often produce flaws that look okay at first glance but become obvious on closer inspection.

Consider this scenario: You generate a video of "a cat drinking tea in a garden." The first generation might have:

Unnatural fur movement (motion artifacts)

Inconsistent lighting between frames (temporal artifacts)

Slightly robotic cat behavior (behavioral artifacts)

Traditional approaches would either:

Accept the flaws (if they're subtle enough)

Manually regenerate with slightly different prompts (trial and error)

Apply post-processing filters (only fixes certain issues)

Recurser takes a different approach: find every problem, then automatically improve the prompt to fix them all at once. Each iteration learns from the previous one, so the improvements build on each other.

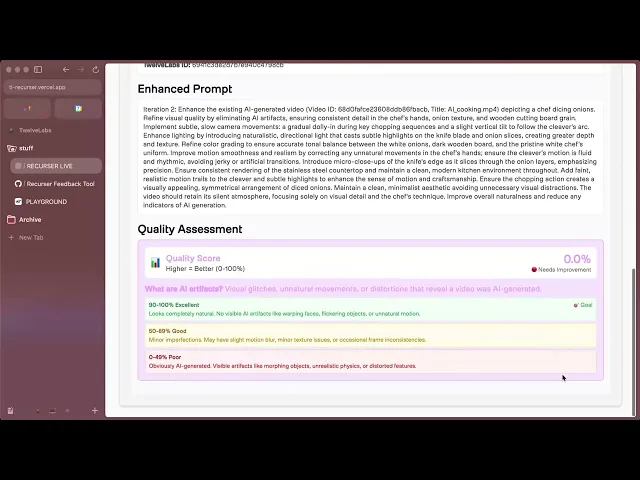

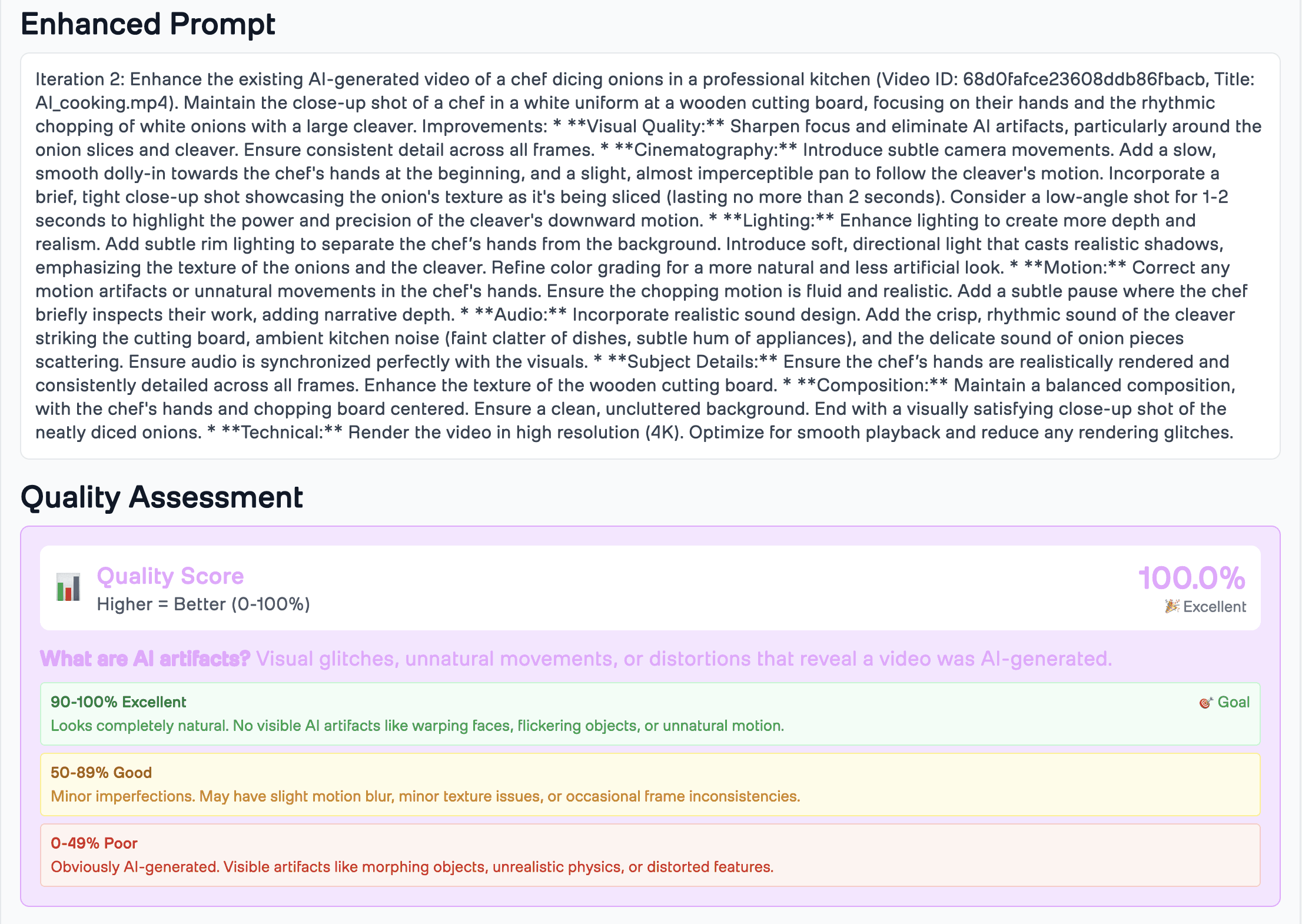

Demo Application

Recurser provides three entry points:

Generate from Prompt: Start fresh—provide a text description and watch Recurser automatically refine it through multiple iterations

Upload Existing Video: Have a video that's "almost there"? Upload it and let Recurser polish it to perfection

Select from Playground: Browse pre-indexed videos and enhance them instantly

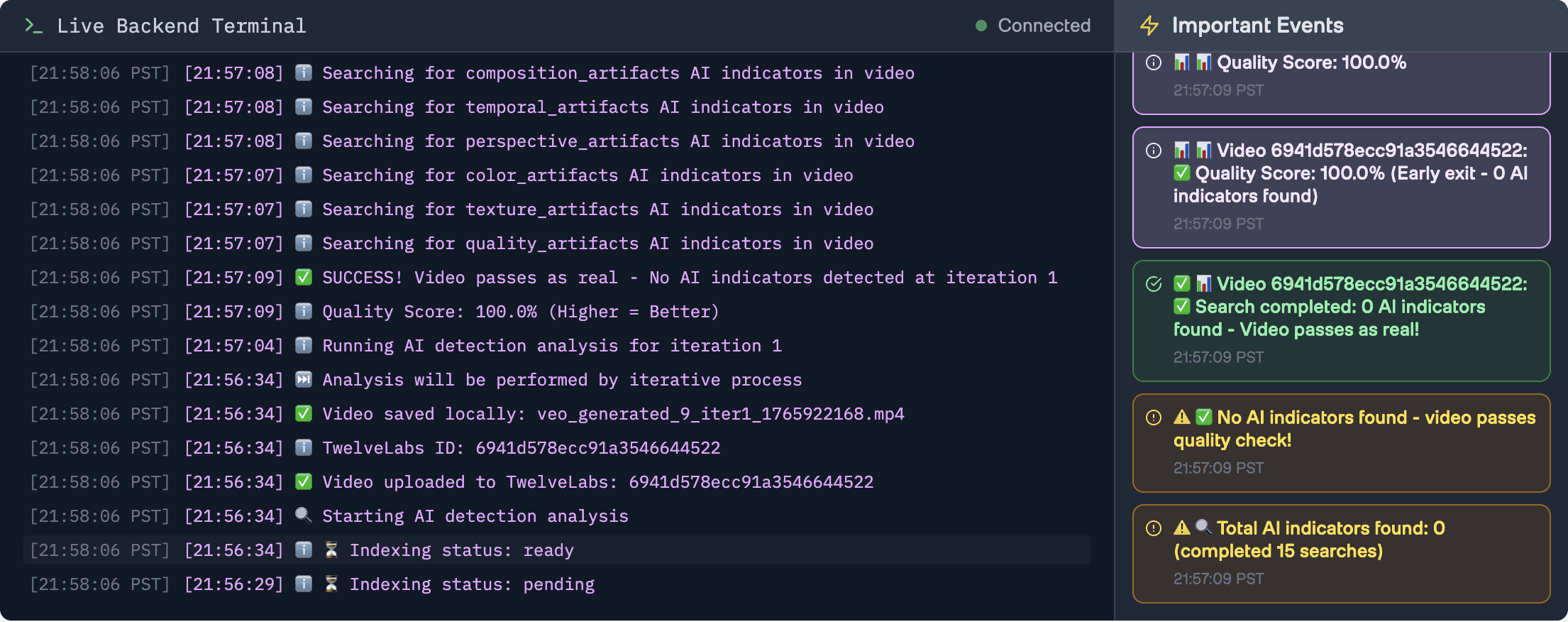

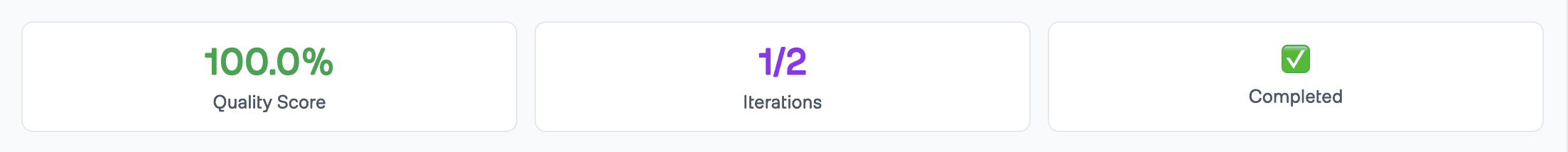

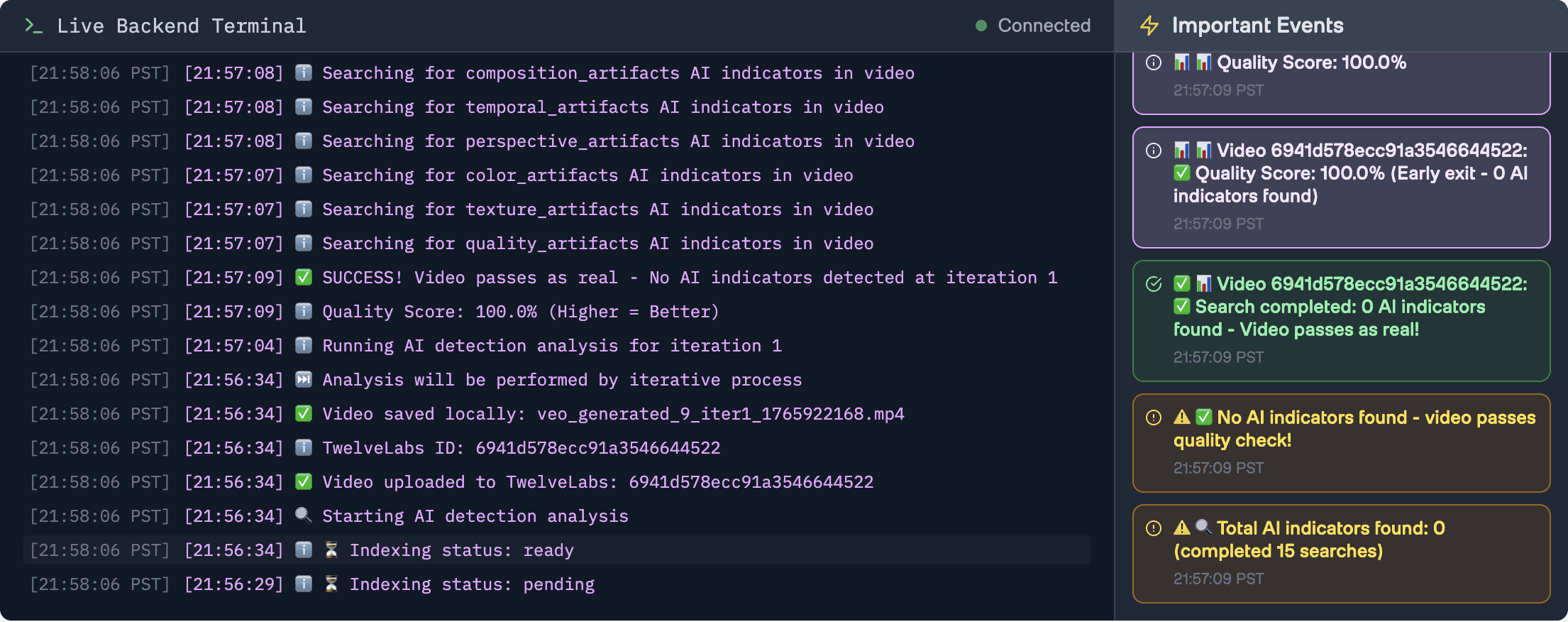

The magic happens in real-time: watch quality scores improve iteration by iteration, see detailed logs of what artifacts were found and how prompts were enhanced, and track the journey from "obviously AI" to "indistinguishable from real."

You can explore the complete demo application and find the full source code on GitHub, or view a tutorial that walks through how the code works.

How Recurser Works

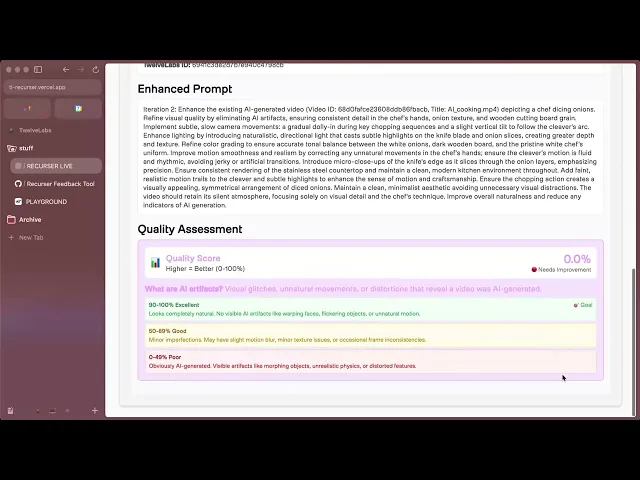

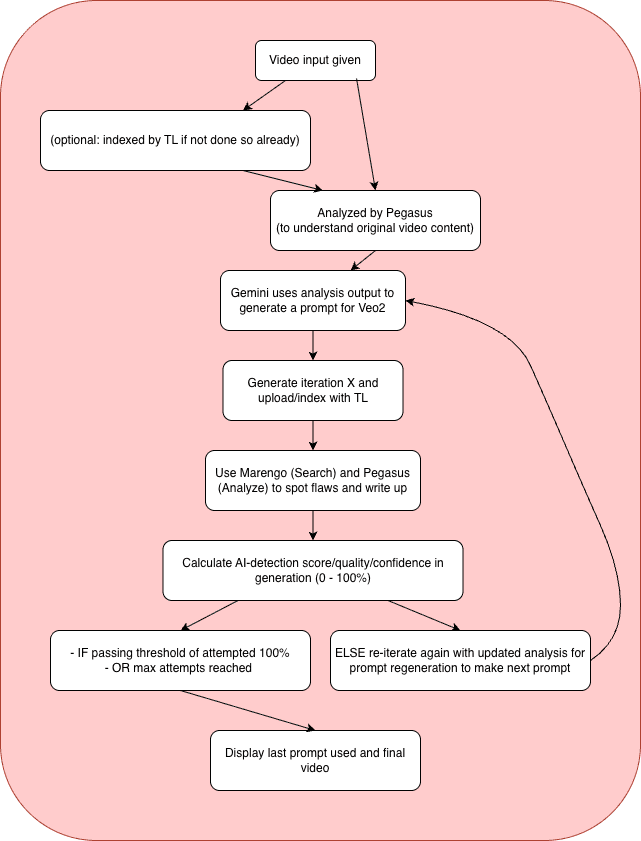

Recurser implements a sophisticated iterative enhancement pipeline that combines multiple AI services:

System Architecture

Preparation Steps

1 - Clone the Repository

    git clone https://github.com/aahilshaikh-twlbs/Recurser.git
    cd

2 - Set up Backend

    cd backend
    pip3 install -r requirements.txt --break-system-packages
    cp .env.example .env
    # Add your API keys to .env

3 - Set up Frontend

    cd ../frontend
    npm install
    cp .env.local.example .env.local
    # Set BACKEND_URL=http://localhost:8000

4 - Configure API Keys

Get your TwelveLabs API key from the Playground

Get your Google Gemini API key from AI Studio

Create a TwelveLabs index with both Marengo and Pegasus engines enabled

Note your index ID for use in the application
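Before starting the servers, it can help to confirm that the values from the steps above are actually set. The snippet below is a minimal sketch and not part of the Recurser codebase: the variable names `TWELVELABS_API_KEY`, `GEMINI_API_KEY`, and `TWELVELABS_INDEX_ID` are assumptions, so substitute whatever names your `.env.example` uses.

```python
import os
from typing import Mapping

# Hypothetical variable names; match them to the ones in your .env file.
REQUIRED_SETTINGS = ("TWELVELABS_API_KEY", "GEMINI_API_KEY", "TWELVELABS_INDEX_ID")


def missing_settings(env: Mapping[str, str] = os.environ) -> list[str]:
    """Return the required settings that are unset or blank, in declaration order."""
    return [name for name in REQUIRED_SETTINGS if not env.get(name, "").strip()]
```

Calling `missing_settings()` with no arguments inspects the current process environment; an empty list means every assumed setting is present.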

5 - Start the Application

    # Terminal 1: Backend
    cd backend
    uvicorn app:app --host 0.0.0.0 --port 8000 --reload

    # Terminal 2: Frontend
    cd frontend
    npm

Once you've completed these steps, you're ready to start developing!

Implementation Walkthrough

Let's walk through the core components that power Recurser's iterative enhancement system.

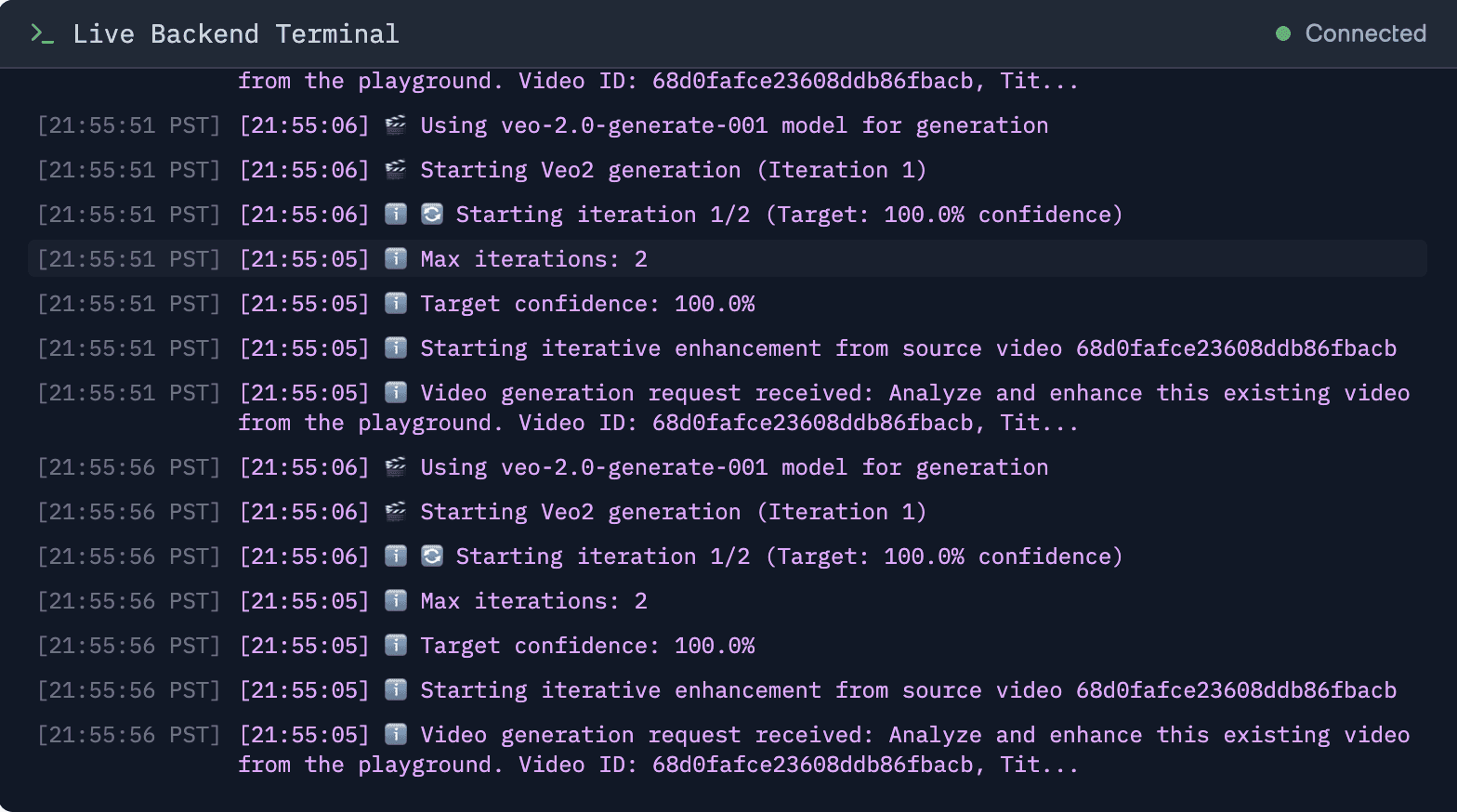

1. Iterative Video Generation Loop

The heart of Recurser is the generate_iterative_video method, which orchestrates the entire enhancement process:

    import asyncio

    async def generate_iterative_video(prompt: str, video_id: int, max_iterations: int = 3):
        """Main loop: generate → analyze → improve → repeat"""
        current_prompt = prompt
        current_iteration = 1

        while current_iteration <= max_iterations:
            # Step 1: Generate video with current prompt
            await generate_video(current_prompt, video_id, current_iteration)

            # Step 2: Wait for video to be indexed (30 seconds)
            await asyncio.sleep(30)

            # Step 3: Analyze the video for AI artifacts
            ai_analysis = await detect_ai_generation(video_id)
            quality_score = ai_analysis.get('quality_score', 0.0)

            # Step 4: Check if we're done (100% = perfect)
            if quality_score >= 100.0:
                break  # Success! Video looks real

            # Step 5: Improve the prompt for next iteration
            if current_iteration < max_iterations:
                current_prompt = await enhance_prompt(current_prompt, ai_analysis)

            current_iteration += 1

        return current_prompt  # Return final improved prompt

This loop continues until one of the following occurs:

The quality score reaches 100% (video passes as photorealistic)

Maximum iterations are reached

An error occurs
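To see the three stop conditions in isolation, here is a small sketch that replays the loop's control flow against a scripted list of quality scores. `simulate_loop` and its stop-reason labels are hypothetical names for illustration; no video is generated and no API is called. The list must contain at least `max_iterations` entries.

```python
from typing import Optional


def simulate_loop(scores: list[Optional[float]], max_iterations: int = 3) -> tuple[int, str]:
    """Replay the loop's control flow over scripted per-iteration scores.

    A score of None stands in for an iteration whose analysis raised an error.
    Returns (iterations_run, stop_reason).
    """
    for iteration in range(1, max_iterations + 1):
        score = scores[iteration - 1]
        if score is None:
            return iteration, "error"
        if score >= 100.0:
            return iteration, "target_reached"
    return max_iterations, "max_iterations"
```

For example, `simulate_loop([70.0, 100.0, 100.0])` stops after the second iteration because the target was reached, while `simulate_loop([60.0, 70.0, 80.0])` runs all three iterations.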

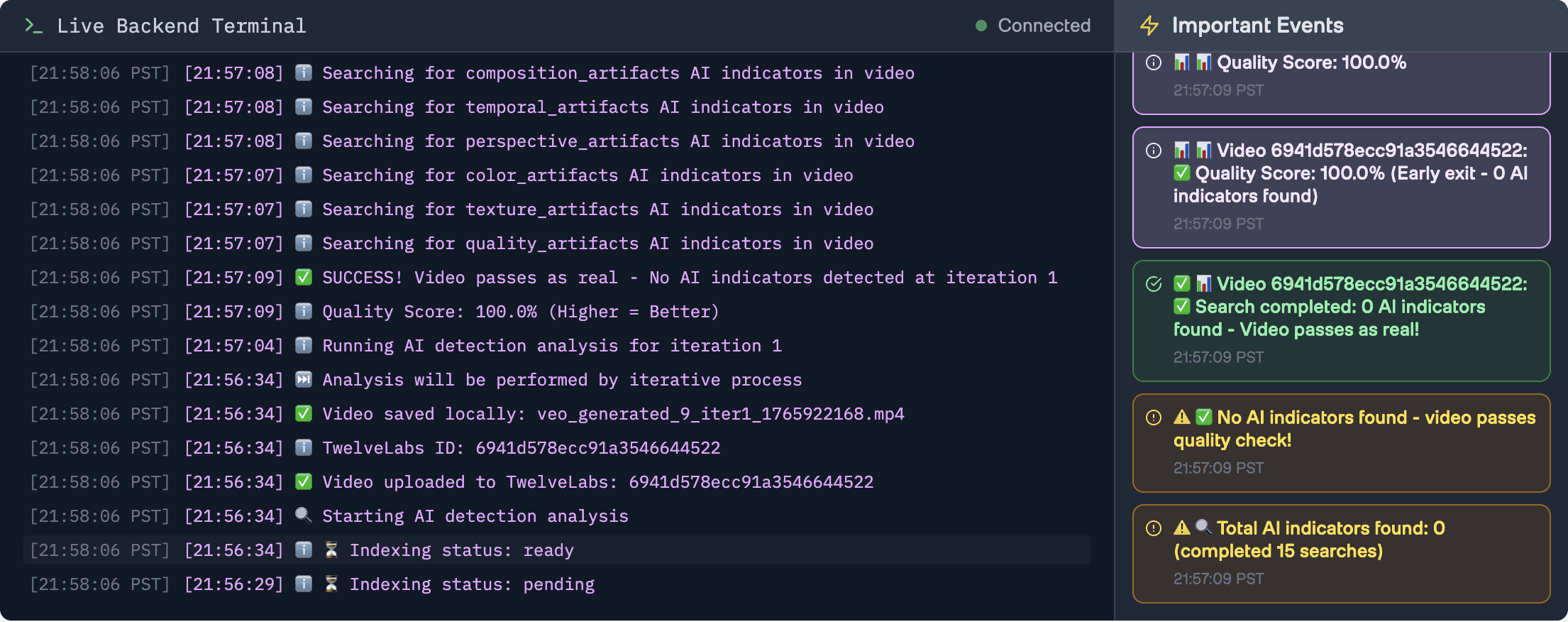

2. AI artifact detection with Marengo

Marengo Search checks for 250+ different AI problems across 15 categories (facial issues, motion problems, lighting glitches, etc.). We group related checks together to be more efficient:

    import json

    async def _search_for_ai_indicators(search_client, index_id: str, video_id: str):
        """Search for AI indicators using Marengo - grouped into 15 categories"""
        # We check 15 categories of artifacts (facial, motion, lighting, etc.)
        ai_detection_categories = {
            "facial_artifacts": "unnatural facial symmetry, robotic expressions",
            "motion_artifacts": "jerky movements, mechanical tracking",
            "lighting_artifacts": "inconsistent lighting, artificial shadows",
            # ... 12 more categories
        }

        all_results = []

        # We check all 15 categories for comprehensive detection
        for category, query_text in ai_detection_categories.items():
            # Search for this category of artifacts
            results = search_client.query(
                index_id=index_id,
                search_options=["visual", "audio"],
                query_text=query_text,
                filter=json.dumps({"id": [video_id]})
            )
            if results and results.data:
                all_results.extend(results.data)

        return all_results  # Returns list of detected artifacts

Key Optimizations:

Comprehensive Detection: We check all 15 categories to ensure thorough detection. We previously had an early-exit heuristic that stopped after 8 searches (more than half) if zero problems were found, but we removed it to guarantee we catch every possible issue. While this means perfect videos make 15 API calls instead of 8, it ensures we never miss subtle artifacts that might only appear in the later categories.

Batched Queries: Groups related search terms into categories for efficiency

Periodic Completion Checks: Every 5 searches, checks whether the video has already been marked complete (a separate check that can stop the loop entirely if another process has already finished it)
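The periodic completion check can be sketched as a counter inside the search loop. This is an illustration under assumptions, not Recurser's implementation: `run_searches`, `search`, and `is_complete` are hypothetical names, and the real service may place the check before rather than after each search.

```python
from typing import Callable, Iterable


def run_searches(
    categories: Iterable[str],
    search: Callable[[str], list],
    is_complete: Callable[[], bool],
    check_every: int = 5,
) -> list:
    """Run one search per category, calling is_complete() every check_every searches."""
    results: list = []
    for count, category in enumerate(categories, start=1):
        results.extend(search(category))
        # Only pay for the completion lookup on every Nth search.
        if count % check_every == 0 and is_complete():
            break
    return results
```

With 15 categories and `check_every=5`, the completion lookup runs 3 times instead of 15.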

3. Video Content Analysis with Pegasus

Pegasus converts video to text—it watches the video and describes what it sees. This helps us understand what needs improvement:

    async def _analyze_with_pegasus_content(analyze_client, video_id: str):
        """Pegasus watches the video and describes what it sees"""
        # We ask Pegasus two questions:
        prompts = [
            "Describe everything in this video: objects, movement, lighting, mood",
            "What technical issues does this video have? (quality, consistency, etc.)"
        ]

        analysis_results = []
        for prompt in prompts:
            response = analyze_client.analyze(
                video_id=video_id,
                prompt=prompt,
                temperature=0.2  # Lower = more consistent responses
            )
            analysis_results.append({
                'content_description': response.data
            })

        return analysis_results  # Returns text descriptions of the video

Smart Optimization: The detection service skips Pegasus analysis entirely when Marengo finds 0 indicators, since the video already passes quality checks:

    # Early exit: If we have 0 indicators from searches, quality is 100% - skip Pegasus
    if len(search_results) == 0 and preliminary_quality_score >= 100.0:
        logger.info(f"✅ Early exit: 0 search results = 100% quality, skipping Pegasus analysis for faster response")
        log_detailed(video_id, f"✅ Quality Score: 100.0% (Early exit - 0 AI indicators found)", "SUCCESS")

        # Create minimal detailed logs without Pegasus
        detailed_logs = AIDetectionService._create_detailed_logs(
            search_results, [], 100.0
        )

        return {
            "search_results": search_results,
            "analysis_results": [],
            "quality_score": 100.0,
            "detailed_logs": detailed_logs
        }

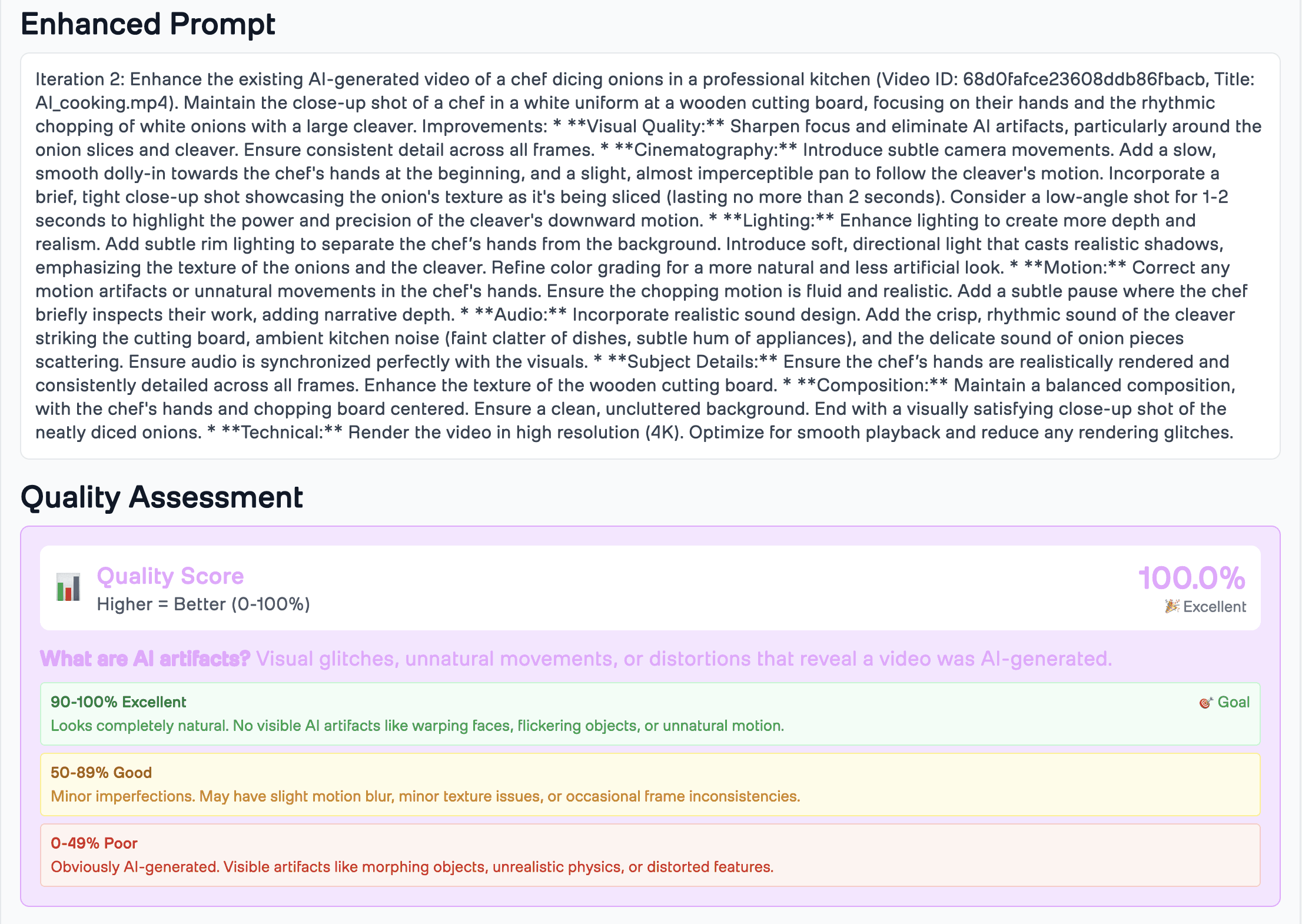

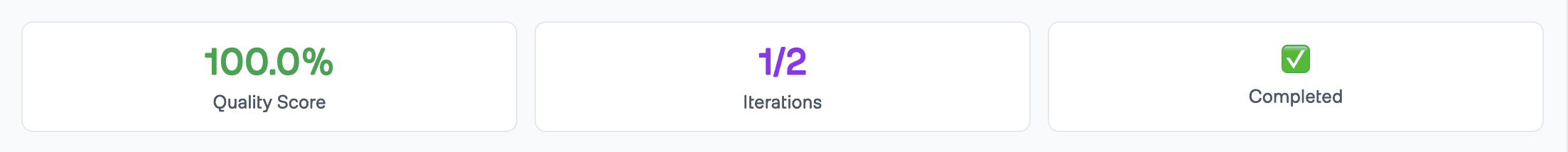

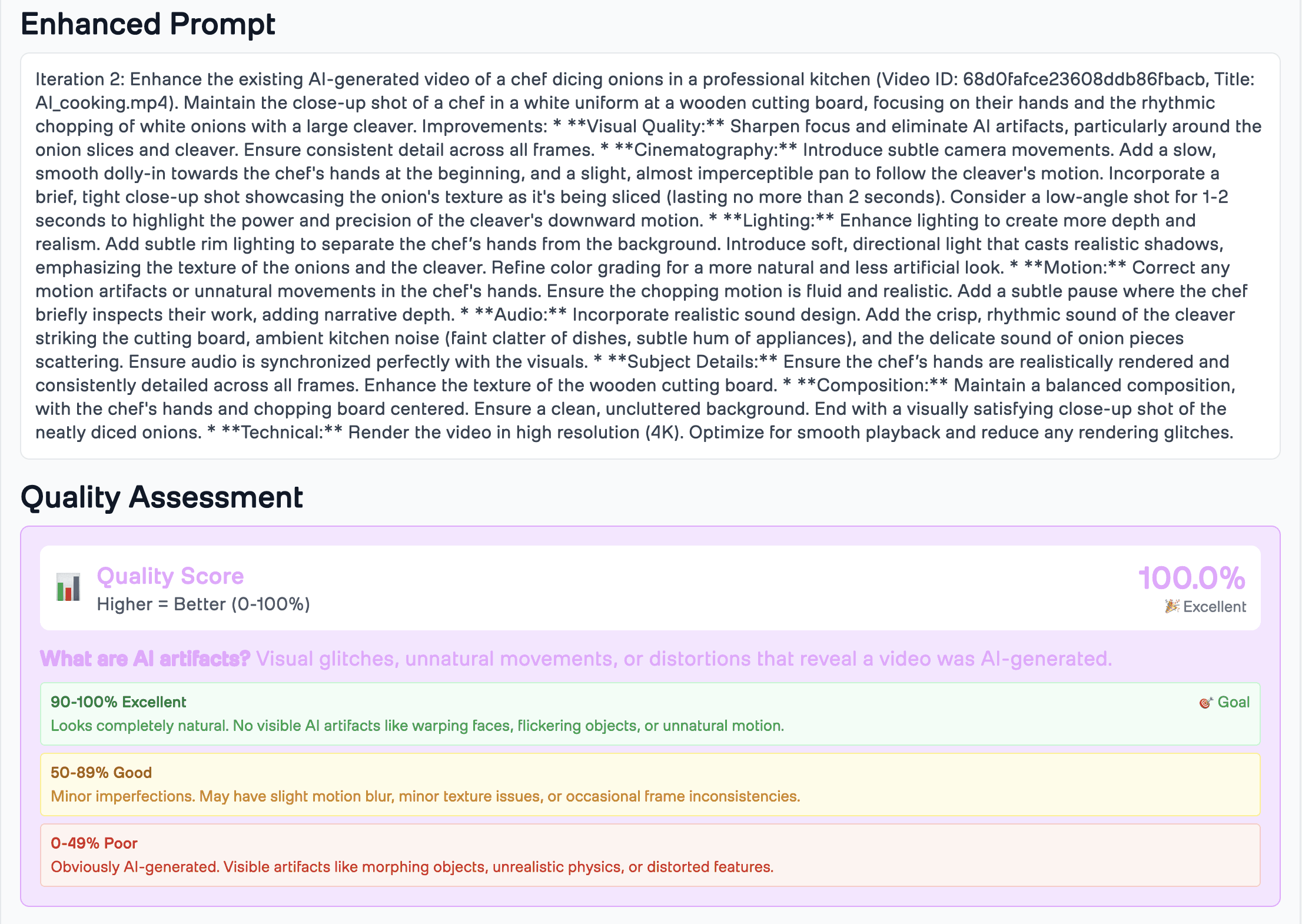

4. Quality Scoring System

The system calculates a single consolidated quality score (0-100%) based on detected indicators. The scoring logic ensures that videos with no detected artifacts receive a perfect score:

    def _calculate_quality_score(search_results, analysis_results):
        """Start at 100%, subtract points for each problem found"""
        # No problems found = perfect score
        if not search_results and not analysis_results:
            return 100.0

        # Each artifact found reduces score by 3% (max 50% penalty)
        search_penalty = min(len(search_results) * 3, 50)

        # Check Pegasus analysis for quality issues mentioned
        analysis_penalty = 0
        if analysis_results:
            # Look for words like "artificial", "blurry", "inconsistent" in the analysis
            quality_keywords = ['artificial', 'synthetic', 'blurry', 'inconsistent', 'robotic']
            issues_found = sum(
                1 for result in analysis_results
                if any(keyword in str(result).lower() for keyword in quality_keywords)
            )
            analysis_penalty = min(issues_found * 8, 50)

        # Final score: 100 minus all penalties (can't go below 0)
        quality_score = max(100 - search_penalty - analysis_penalty, 0)
        return quality_score

Scoring Logic:

0 AI Indicators Found: 100% quality score (perfect - video passes as real)

Each Search Indicator: Reduces score by 3% (max 50% penalty)

Analysis Quality Issues: Additional penalties up to 50%

Final Score: Cannot go below 0%
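Plugging a few counts into the formula above shows how the caps behave. The helper below restates the penalty arithmetic from `_calculate_quality_score` with the counts passed in directly; `score_from_counts` is a hypothetical name used only for this illustration.

```python
def score_from_counts(search_hits: int, flagged_analyses: int) -> float:
    """Restate the penalty arithmetic above using plain counts."""
    search_penalty = min(search_hits * 3, 50)         # 3 points per indicator, capped at 50
    analysis_penalty = min(flagged_analyses * 8, 50)  # 8 points per flagged analysis, capped at 50
    return float(max(100 - search_penalty - analysis_penalty, 0))


for hits, flagged in [(0, 0), (4, 0), (10, 1), (17, 0), (20, 2)]:
    print(f"{hits:>2} indicators, {flagged} flagged analyses -> {score_from_counts(hits, flagged)}")
```

The five cases score 100.0, 88.0, 62.0, 50.0, and 34.0. Because of the 50-point cap, search indicators alone cannot push the score below 50; lower scores require flagged Pegasus analyses as well.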

5. Prompt Enhancement with Gemini

When quality is below target, Gemini generates an improved prompt based on detected issues:

    import json

    from google.genai import Client  # google-genai SDK

    async def _generate_enhanced_prompt(original_prompt: str, analysis_results: dict):
        """Use Gemini to improve the prompt based on what we found wrong"""
        # GEMINI_API_KEY is assumed to be loaded from your .env configuration
        client = Client(api_key=GEMINI_API_KEY)

        # Tell Gemini: "Here's the original prompt and what was wrong. Fix it."
        enhancement_request = f"""
        Original prompt: {original_prompt}

        Problems found: {json.dumps(analysis_results, indent=2)}

        Create a better prompt that fixes these issues.
        Make it more natural and realistic.
        Return only the new prompt, nothing else.
        """

        response = client.models.generate_content(
            model='gemini-2.5-flash',
            contents=enhancement_request
        )

        return response.text.strip() if response.text else original_prompt

Why This Approach Works (And When It Doesn't)

After building and testing Recurser extensively, we discovered something interesting: not all videos need iteration. Some prompts produce near-perfect results on the first try, while others require 3-5 iterations to reach acceptable quality.

The Iteration Sweet Spot

Our testing revealed clear patterns:

Simple scenes (single subject, static background): Often 100% on first try

Complex scenes (multiple subjects, dynamic lighting): Typically need 2-3 iterations

Unusual prompts (creative/impossible scenarios): May need 4-5 iterations or hit diminishing returns

The key insight? Early detection matters. By stopping as soon as we hit 100% quality (using "early exit" shortcuts), we avoid wasting time and money on videos that are already perfect.

Why Prompt Enhancement Beats Post-Processing

We initially tried fixing videos after they were generated—applying filters, smoothing out glitches, fixing frames. But we quickly realized:

Post-processing can't fix fundamental problems—if the model misunderstood what you wanted, filters won't help

Improving prompts fixes the root cause—each new generation starts from a better understanding

Improvements build on each other—iteration 2 learns from iteration 1, iteration 3 learns from both

However, Recurser isn't a magic bullet. It works best when:

✅ You have a clear creative vision (even if initial execution is flawed)

✅ You're willing to wait for iterative improvement (2-5 minutes per iteration)

✅ Quality threshold is well-defined (we target 100% but you can customize)

It's less effective when:

❌ The original prompt is fundamentally wrong (no amount of iteration fixes a broken concept)

❌ You need instant results (real-time generation isn't possible with iteration)

❌ Cost is prohibitive (each iteration costs generation + analysis API calls)

Key Design Decisions

1. Separation of Detection and Generation

We deliberately kept AI detection (TwelveLabs) separate from video generation (Veo2). This wasn't just a technical choice—it was strategic:

Works with any generator: Switch from Veo2 to Veo3 (or any future model) without changing how we detect problems

Cost control: Detection is cheaper than generation, so we can afford to check thoroughly

Independent scaling: Scale detection and generation separately based on what's slow
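One way to picture this separation is a narrow generator interface that the rest of the pipeline depends on. The sketch below is an assumption about how such a boundary could look, not Recurser's actual class layout: `VideoGenerator`, `FakeVeo2`, `FakeVeo3`, and `generate_with` are hypothetical names, and the fake classes return placeholder strings instead of calling a real model.

```python
import asyncio
from typing import Protocol


class VideoGenerator(Protocol):
    """Anything that can turn a prompt into a video URL (hypothetical interface)."""

    async def generate(self, prompt: str) -> str: ...


class FakeVeo2:
    async def generate(self, prompt: str) -> str:
        return f"veo2://{prompt}"


class FakeVeo3:
    async def generate(self, prompt: str) -> str:
        return f"veo3://{prompt}"


async def generate_with(generator: VideoGenerator, prompt: str) -> str:
    # The detection side never needs to know which generator produced the video.
    return await generator.generate(prompt)


print(asyncio.run(generate_with(FakeVeo3(), "a cat drinking tea")))
```

Swapping generators then means passing a different object; the detection and scoring code stays untouched.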

2. Comprehensive Detection Over Speed

We prioritize thorough detection over speed optimizations. Here's our approach:

Marengo Comprehensive Check: We check all 15 categories to ensure thorough detection. We previously had an early exit heuristic that stopped after 8 searches (more than half) if zero problems were found, but we removed it to guarantee we never miss subtle artifacts that might only appear in later categories. While this means perfect videos make 15 API calls instead of 8, the trade-off is worth it for accuracy.

Pegasus Skip: If Marengo finds nothing across all 15 categories, skip expensive Pegasus analysis entirely. This is still our biggest optimization—skipping analysis when no problems are found.

Completion Checks: Every 5 searches, verify whether the video has already been completed (parallel processing can mark videos complete early)

This means videos that start at 100% quality complete faster than videos with issues, since we skip Pegasus analysis when no indicators are found, but we still check all 15 categories to be certain.

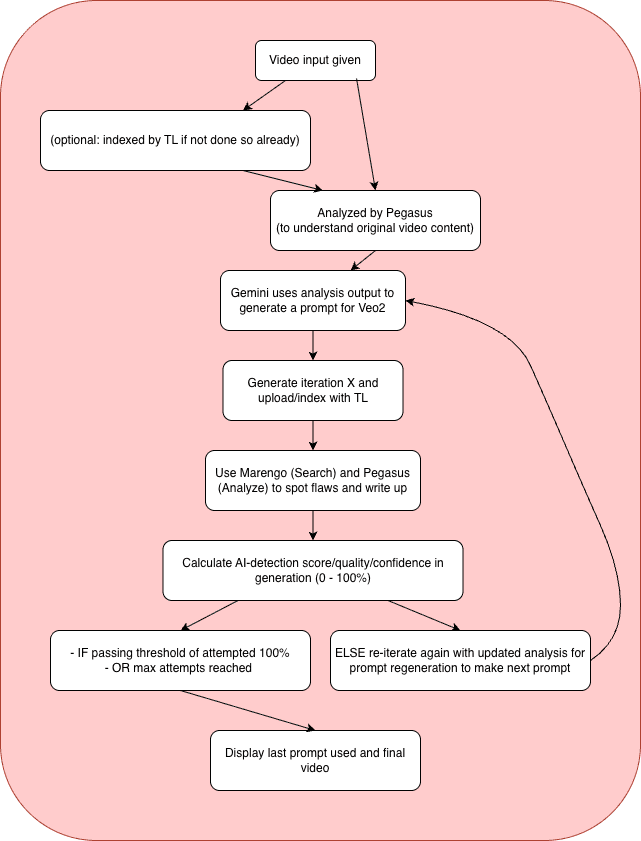

3. Real-time Feedback Without WebSockets

We couldn't use WebSockets because Vercel's serverless functions don't support persistent WebSocket connections, so we built a polling system that checks for updates frequently enough to feel real-time:

Global Log Buffer: 200-entry rolling buffer captures all backend activity

Smart Polling: 1-second intervals with connection management and buffer delays

Noise Filtering: Remove repetitive API calls from logs, highlight important events

The result? Users see updates within 1-2 seconds, even without true streaming.
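A rolling buffer with cursor-based polling can be sketched with `collections.deque`. This is a minimal illustration, assuming each log entry gets an increasing sequence number that the client sends back on its next poll; the `LogBuffer` class and its method names are hypothetical, not Recurser's API.

```python
from collections import deque
from itertools import count


class LogBuffer:
    """Rolling in-memory log that clients poll using the last sequence number they saw."""

    def __init__(self, max_entries: int = 200) -> None:
        self._entries: deque[tuple[int, str]] = deque(maxlen=max_entries)
        self._next_seq = count(1)

    def append(self, message: str) -> int:
        seq = next(self._next_seq)
        self._entries.append((seq, message))  # the oldest entry is dropped once full
        return seq

    def since(self, last_seen: int = 0) -> list[tuple[int, str]]:
        """Return entries newer than last_seen, oldest first."""
        return [entry for entry in self._entries if entry[0] > last_seen]
```

A client polling once per second would call `since(last_seen)` and remember the highest sequence number it received, so each poll returns only new entries.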

4. Graceful Degradation Over Perfect Reliability

We prioritized "keep going even if something breaks" over "never break." Why? Because AI services sometimes fail—rate limits, timeouts, temporary outages. But users shouldn't lose their progress:

Pegasus Failures: Continue with backup analysis, log the failure, finish anyway

API Rate Limits: Clear error messages, wait and retry, pick up where we left off

Partial Failures: If 14 out of 15 searches succeed, use those results and continue

The philosophy: A partial result is better than no result.
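The partial-failure behavior can be sketched as a per-category `try`/`except` that records failures and keeps going. `search_all` and its `search` callable are hypothetical stand-ins for the Marengo calls shown earlier; the broad exception handler is a deliberate simplification for this example.

```python
from typing import Callable


def search_all(categories: dict[str, str], search: Callable[[str], list]) -> tuple[list, list[str]]:
    """Run every category search, keeping results from the ones that succeed."""
    results: list = []
    failed: list[str] = []
    for category, query_text in categories.items():
        try:
            results.extend(search(query_text))
        except Exception as exc:  # deliberately broad: any single failure is survivable
            failed.append(category)
            print(f"search failed for {category}: {exc}")
    return results, failed
```

Returning the list of failed categories alongside the results lets the caller log what was skipped while still scoring the video on the searches that succeeded.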

Performance Engineering: What We Learned

Building Recurser taught us a lot about making AI systems faster. Here's what actually made a difference:

The 80/20 Rule Applied to AI Pipelines

We spent 80% of our optimization effort on three things:

Conditional Pegasus: Skipping Pegasus analysis when Marengo finds 0 indicators saves ~$0.05 per video and 30+ seconds. This is our biggest optimization—if no problems are found in the search phase, we skip the expensive analysis phase entirely.

Smart Search Batching: Checking completion status every 5 searches instead of after every search cut completion-check database queries from 15 to 3 per video, a 5x reduction. More importantly, it let us update completion status in the background without slowing down searches.

Comprehensive Detection: We check all 15 categories instead of using an early exit heuristic. While this means perfect videos make 15 API calls instead of 8, it ensures we never miss subtle artifacts. The trade-off is worth it for accuracy.

What Didn't Work (And Why)

We tried several optimizations that didn't pan out:

Parallel Search Queries: We tried running all 15 search categories at the same time, but hit rate limits. Doing them sequentially was more reliable.

Caching Analysis Results: We tried saving Pegasus analysis results for similar videos, but each video was too different to reuse results.

Frame Sampling: We tested analyzing only some frames to save time, but missed problems that only show up over time. Full analysis was worth it.

Frontend: A Hidden Performance Bottleneck

The frontend isn't just a UI—it's a real-time monitoring system. Our optimizations:

Rolling Logs: Fixed memory leaks from unbounded log arrays (we hit 10,000+ entries in testing)

Single Video Player: Reduced from 3 components to 1 unified player—smaller bundle, faster renders

Noise Filtering: Removed 80% of log entries that weren't user-actionable (internal API calls, etc.)

The result? Frontend stays responsive even during long-running iterations.

Data Outputs

Recurser generates comprehensive outputs for each video:

Video Metadata

Final Video: Best iteration (highest quality score)

All Iterations: Complete history of enhancement attempts

Quality Scores: Per-iteration confidence tracking

Analysis Results

Search Results: Detailed list of detected AI indicators by category

Analysis Results: Pegasus content analysis and quality insights

Detailed Logs: Complete processing history with timestamps

Enhancement Insights

Original Prompt: Initial video generation prompt

Enhanced Prompts: Each iteration's improved prompt

Improvement Trajectory: Quality score progression over iterations
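The outputs above suggest a simple per-iteration record from which the final video is chosen. The sketch below is an assumed shape, not Recurser's database schema: `IterationRecord`, its field names, and `best_iteration` are illustrative.

```python
from dataclasses import dataclass


@dataclass
class IterationRecord:
    """One enhancement attempt (field names are illustrative, not Recurser's schema)."""
    iteration: int
    prompt: str
    quality_score: float
    video_url: str


def best_iteration(history: list[IterationRecord]) -> IterationRecord:
    """Pick the highest-scoring attempt; on a tie, the earliest one in the list wins."""
    return max(history, key=lambda record: record.quality_score)
```

Keeping the full history also gives you the improvement trajectory for free: it is the list of `quality_score` values in iteration order.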

Usage Examples

Example 1: Generate from Prompt

    POST /api/videos/generate
    {
      "prompt": "A cat drinking tea in a garden",
      "max_iterations": 5,
      "target_confidence": 100.0,
      "index_id": "your_index_id",
      "twelvelabs_api_key": "your_api_key"
    }

Response:

    {
      "success": true,
      "video_id": 123,
      "status": "processing",
      "message": "Video generation started"
    }

Example 2: Upload Existing Video

    POST /api/videos/upload
    {
      "file": <video_file>,
      "prompt": "Original prompt used",
      "max_iterations": 3,
      "index_id": "your_index_id",
      "twelvelabs_api_key": "your_api_key"
    }

Example 3: Check Status

    GET /api/videos/123/status

Response:

    {
      "success": true,
      "data": {
        "video_id": 123,
        "status": "completed",
        "current_confidence": 100.0,
        "iteration_count": 2,
        "final_confidence": 100.0,
        "video_url": "https://..."
      }
    }
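A client can combine the status endpoint with a simple polling loop. The sketch below uses only the standard library and assumes the backend runs at `http://localhost:8000`, as in the setup steps; `fetch_status` and `wait_for_completion` are hypothetical helper names. Only the `processing` and `completed` statuses appear in the examples above, so the sketch relies on a timeout rather than guessing at failure statuses.

```python
import json
import time
import urllib.request
from typing import Callable


def fetch_status(video_id: int, base_url: str = "http://localhost:8000") -> dict:
    """GET /api/videos/{id}/status and return the parsed JSON body."""
    with urllib.request.urlopen(f"{base_url}/api/videos/{video_id}/status") as response:
        return json.load(response)


def wait_for_completion(
    video_id: int,
    fetch: Callable[[int], dict] = fetch_status,
    interval_seconds: float = 5.0,
    timeout_seconds: float = 900.0,
) -> dict:
    """Poll the status endpoint until the video reports "completed" or the timeout passes."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        data = fetch(video_id).get("data", {})
        if data.get("status") == "completed":
            return data
        if time.monotonic() >= deadline:
            raise TimeoutError(f"video {video_id} did not complete in {timeout_seconds}s")
        time.sleep(interval_seconds)
```

Passing `fetch` as a parameter keeps the loop testable without a running backend: any callable that returns a dictionary in the response shape shown above will do.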

Interpreting Quality Scores

Quality scores aren't random—they're based on actually finding problems:

100%: Zero problems found across 250+ checks. Video looks real to professionals.

80-99%: 1-6 small problems found. Usually fixable in 1-2 iterations.

50-79%: 7-15 problems found. Multiple types of issues. Needs 3-5 iterations.

<50%: 16+ problems found. Serious generation issues. May need to rewrite the prompt entirely.

When to Stop Iterating

Recurser automatically stops at 100%, but you might want to stop earlier if:

Cost constraints: Each iteration costs generation + analysis (~$0.10-0.50 depending on video length)

Time constraints: Iterations take 2-5 minutes each

"Good enough" threshold: For internal use, 85%+ might be acceptable

Diminishing returns: If quality plateaus for 2+ iterations, the prompt might need fundamental changes
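The diminishing-returns rule can be turned into a small check over the score history. This is a sketch under assumptions: `has_plateaued`, the `patience` window of 2 iterations, and the 1-point `min_gain` threshold are illustrative choices, not values taken from Recurser.

```python
def has_plateaued(scores: list[float], patience: int = 2, min_gain: float = 1.0) -> bool:
    """True when none of the last `patience` scores beat the earlier best by min_gain."""
    if len(scores) <= patience:
        return False  # not enough history to judge
    best_before = max(scores[:-patience])
    return all(score < best_before + min_gain for score in scores[-patience:])
```

For instance, a history of `[60, 82, 82, 81]` counts as a plateau, while `[60, 70, 85]` does not because the latest iterations are still improving on the earlier best.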

Beyond Basic Enhancement: Advanced Use Cases

Recurser's iterative approach opens up possibilities beyond simple quality improvement:

A/B Testing Prompt Variations

Instead of guessing which prompt works best, generate multiple versions and let Recurser figure out which one reaches 100% quality fastest. The iteration count tells you which prompt is better.
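As a rough sketch of that comparison, assume you have recorded how many iterations each prompt variant needed to reach 100%, with `None` for variants that never got there. The function name and input shape are hypothetical.

```python
from typing import Optional


def fastest_prompt(runs: dict[str, Optional[int]]) -> Optional[str]:
    """Return the prompt that reached 100% in the fewest iterations, if any did."""
    finished = {prompt: n for prompt, n in runs.items() if n is not None}
    return min(finished, key=finished.get) if finished else None
```

Because generation is not deterministic, a single run per prompt is weak evidence; repeating each variant a few times and comparing typical iteration counts would give a fairer comparison.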

Domain-Specific Artifact Detection

We've already seen users adapt Recurser for:

Product videos: Adding "commercial photography artifacts" to detection categories

Character animation: Focusing on "unnatural character movement" and "uncanny valley" detection

Nature scenes: Emphasizing "environmental consistency" and "physics violations"

The beauty? The same core system works—just adjust the detection categories.
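Adjusting the detection categories can be as simple as merging a domain preset into the base dictionary. In the sketch below, the three base entries come from the Marengo example earlier in this tutorial; the preset names and query strings are hypothetical and untuned.

```python
from typing import Optional

BASE_CATEGORIES = {
    "facial_artifacts": "unnatural facial symmetry, robotic expressions",
    "motion_artifacts": "jerky movements, mechanical tracking",
    "lighting_artifacts": "inconsistent lighting, artificial shadows",
}

# Hypothetical domain presets; the query text is illustrative, not tuned.
DOMAIN_CATEGORIES = {
    "product": {"commercial_artifacts": "commercial photography artifacts"},
    "character": {"character_motion": "unnatural character movement, uncanny valley"},
    "nature": {"environment_artifacts": "environmental inconsistency, physics violations"},
}


def categories_for(domain: Optional[str] = None) -> dict[str, str]:
    """Return a copy of the base categories plus any extras registered for the domain."""
    merged = dict(BASE_CATEGORIES)
    merged.update(DOMAIN_CATEGORIES.get(domain, {}))
    return merged
```

The merged dictionary can then be passed to the search loop in place of the default categories, leaving the scoring and enhancement steps unchanged.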

Prompt Engineering as a Service

Recurser essentially automates prompt writing. Give it a rough idea, and it systematically improves the prompt until the output is perfect. This suggests a future where prompt writing becomes a service—input an idea, get back an optimized prompt and perfect video.

The Learning Opportunity

Here's an interesting possibility: What if Recurser learned from successful iterations? If iteration 2 consistently fixes "motion problems" for a certain type of prompt, could we skip iteration 1 and go straight to the improved prompt? This would require building a database of prompt patterns and what fixes them—essentially creating a "prompt improvement model."

When Not to Use Recurser

Recurser isn't always the right tool:

Real-time generation: Can't wait 2-5 minutes per iteration? Use direct generation.

Cost-sensitive: At scale, iterations add up. Consider one-shot generation with better initial prompts.

Creative exploration: Sometimes you want to see many variations quickly rather than perfect a single variation slowly.

Technical videos: If your video needs specific technical accuracy (product dimensions, exact colors), post-processing might be more reliable.

The key is understanding your constraints: quality, speed, or cost—pick two.

Conclusion: The Future of AI Video Quality

Recurser started as an experiment: "What if we treated AI video generation like software—with automated testing and iterative improvement?" What we discovered is that this approach changes how we think about AI video quality.

Instead of accepting whatever the model generates, we can now demand perfection. And instead of manually tweaking prompts through trial and error, we can automate the refinement process.

The implications are interesting:

For creators: Generate once, refine automatically, get professional-quality results without needing to be an expert at writing prompts or video production.

For developers: Build applications that guarantee video quality without manual review. Process hundreds of videos knowing each will meet your quality standard.

For the industry: As models improve, the iteration count should decrease. But even perfect models benefit from Recurser's quality checking—you'll know immediately if a video looks real.

The most exciting part? We're just scratching the surface. As video generation models evolve, Recurser's detection and enhancement capabilities can evolve with them. Today it's fixing artifacts. Tomorrow it might be optimizing for specific creative goals, adapting to different artistic styles, or learning from successful iterations to predict optimal prompts.

The foundation is here. The rest is iteration—fittingly enough.

Additional Resources

Live Application: Recurser

GitHub Repository: Recurser on GitHub

TwelveLabs Documentation: Pegasus and Marengo guides

Google Gemini API: Prompt engineering best practices

Video Generation: Google Veo 2.0 documentation


Introduction

You've just generated a video with Google Gemini’s Veo2. It looks decent at first glance—the prompt was good, the composition is solid. But something feels off. On closer inspection, you notice the cat's fur moves unnaturally, shadows don't quite align with the lighting, and there's a subtle "uncanny valley" effect that makes it feel synthetic.

The problem? Most AI video generation is a one-shot process. You generate once, maybe tweak the prompt slightly, generate again. But how do you know what's actually wrong? And more importantly, how do you fix it without spending hours manually reviewing every frame?

This is why we built Recurser—an automated system that doesn't just detect AI problems, but actively fixes them through smart iteration. Instead of guessing what's wrong, Recurser uses AI video understanding to find specific issues, then automatically improves prompts to regenerate better versions until the video looks real.

The key insight? Fixing the prompt is more powerful than fixing the video. Traditional post-processing can only address what's already generated, but improving prompts addresses the root cause—ensuring the next generation is fundamentally better.

Recurser creates a feedback loop:

Detect problems using TwelveLabs Marengo (checks 250+ different issues)

Understand what's in the video with TwelveLabs Pegasus (converts video to text description)

Improve the prompt using Google Gemini (makes the prompt better)

Regenerate with Veo2 (creates a new video with the improved prompt)

Repeat until quality reaches 100% or max iterations

The result? Videos that start at 60% quality and systematically improve to 100% over 1-3 iterations, without you doing anything.

Prerequisites

Before starting, ensure you have:

Python 3.12+ installed

Node.js 18+ installed

API Keys:

TwelveLabs API Key (for Pegasus and Marengo)

Google Gemini API Key (for prompt enhancement)

Google Veo 2.0 access (for video generation)

Git for cloning the repository

Basic familiarity with Python, FastAPI, and Next.js

The Problem with One-Shot Generation

Here's what we discovered: AI video generation models don't always produce the same result. Run the same prompt twice and you get different videos. But more importantly, models often produce flaws that look okay at first glance but become obvious on closer inspection.

Consider this scenario: You generate a video of "a cat drinking tea in a garden." The first generation might have:

Unnatural fur movement (motion artifacts)

Inconsistent lighting between frames (temporal artifacts)

Slightly robotic cat behavior (behavioral artifacts)

Traditional approaches would either:

Accept the flaws (if they're subtle enough)

Manually regenerate with slightly different prompts (trial and error)

Apply post-processing filters (only fixes certain issues)

Recurser takes a different approach: find every problem, then automatically improve the prompt to fix them all at once. Each iteration learns from the previous one, so the improvements build on each other.

Demo Application

Recurser provides three entry points:

Generate from Prompt: Start fresh—provide a text description and watch Recurser automatically refine it through multiple iterations

Upload Existing Video: Have a video that's "almost there"? Upload it and let Recurser polish it to perfection

Select from Playground: Browse pre-indexed videos and enhance them instantly

The magic happens in real-time: watch quality scores improve iteration by iteration, see detailed logs of what artifacts were found and how prompts were enhanced, and track the journey from "obviously AI" to "indistinguishable from real."

You can explore the complete demo application and find the full source code on GitHub, or view a tutorial as to the workings of the code -

How Recurser Works

Recurser implements a sophisticated iterative enhancement pipeline that combines multiple AI services:

System Architecture

Preparation Steps

1 - Clone the Repository

git clone https://github.com/aahilshaikh-twlbs/Recurser.git cd

2 - Set up Backend

cd backend pip3 install -r requirements.txt --break-system-packages cp .env.example .env # Add your API keys to .env

3 - Set up Frontend

cd ../frontend npm install cp .env.local.example .env.local # Set BACKEND_URL=http://localhost:8000

4 - Configure API Keys

Get your TwelveLabs API key from the Playground

Get your Google Gemini API key from AI Studio

Create a TwelveLabs index with both Marengo and Pegasus engines enabled

Note your index ID for use in the application

5 - Start the Application

# Terminal 1: Backend cd backend uvicorn app:app --host 0.0.0.0 --port 8000 --reload # Terminal 2: Frontend cd frontend npm

Once you've completed these steps, you're ready to start developing!

Implementation Walkthrough

Let's walk through the core components that power Recurser's iterative enhancement system.

1. Iterative Video Generation Loop

The heart of Recurser is the generate_iterative_video method, which orchestrates the entire enhancement process:

This loop continues until either:

async def generate_iterative_video(prompt: str, video_id: int, max_iterations: int = 3): """Main loop: generate → analyze → improve → repeat""" current_prompt = prompt current_iteration = 1 while current_iteration <= max_iterations: # Step 1: Generate video with current promptawait generate_video(current_prompt, video_id, current_iteration) # Step 2: Wait for video to be indexed (30 seconds)await asyncio.sleep(30) # Step 3: Analyze the video for AI artifacts ai_analysis = await detect_ai_generation(video_id) quality_score = ai_analysis.get('quality_score', 0.0) # Step 4: Check if we're done (100% = perfect)if quality_score >= 100.0: break# Success! Video looks real # Step 5: Improve the prompt for next iterationif current_iteration < max_iterations: current_prompt = await enhance_prompt(current_prompt, ai_analysis) current_iteration += 1 return current_prompt# Return final improved prompt

The quality score reaches 100% (video passes as photorealistic)

Maximum iterations are reached

An error occurs

2. AI artifact detection with Marengo

Marengo Search checks for 250+ different AI problems across 15 categories (facial issues, motion problems, lighting glitches, etc.). We group related checks together to be more efficient:

async def _search_for_ai_indicators(search_client, index_id: str, video_id: str): """Search for AI indicators using Marengo - grouped into 15 categories""" # We check 15 categories of artifacts (facial, motion, lighting, etc.) ai_detection_categories = { "facial_artifacts": "unnatural facial symmetry, robotic expressions", "motion_artifacts": "jerky movements, mechanical tracking", "lighting_artifacts": "inconsistent lighting, artificial shadows", # ... 12 more categories } all_results = [] # We check all 15 categories for comprehensive detectionfor category, query_text in ai_detection_categories.items(): # Search for this category of artifacts results = search_client.query( index_id=index_id, search_options=["visual", "audio"], query_text=query_text, filter=json.dumps({"id": [video_id]}) ) if results and results.data: all_results.extend(results.data) return all_results# Returns list of detected artifacts

Key Optimizations:

Comprehensive Detection: We check all 15 categories to ensure thorough detection. We previously had an early-exit heuristic that stopped after 8 searches (more than half) if zero problems were found, but we removed it to guarantee we catch every possible issue. While this means perfect videos make 15 API calls instead of 8, it ensures we never miss subtle artifacts that might only appear in the later categories.

Batched Queries: Groups related search terms into categories for efficiency

Periodic Completion Checks: Every 5 searches, checks whether the video has already been marked complete (a separate check that can stop the loop entirely if another process finished it first)
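The periodic completion check can be sketched as a counter inside the search loop. This is a minimal illustration under assumptions: `run_searches`, `search`, and `is_complete` are hypothetical names, with `search` standing in for the Marengo query and `is_complete` for whatever database lookup reports that the video is already done.

```python
from typing import Callable, Iterable


def run_searches(
    queries: Iterable,
    search: Callable[[str], list],
    is_complete: Callable[[], bool],
    check_every: int = 5,
) -> tuple:
    """Run queries in order, polling is_complete() once per `check_every` searches.

    `queries` yields (category, query_text) pairs.
    Returns (all_results, searches_run).
    """
    all_results: list = []
    searches_run = 0
    for _category, query_text in queries:
        all_results.extend(search(query_text))
        searches_run += 1
        # One status lookup per `check_every` searches instead of one per search.
        if searches_run % check_every == 0 and is_complete():
            break
    return all_results, searches_run
```

With 15 categories and `check_every=5`, the status lookup runs 3 times per video rather than 15.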

3. Video Content Analysis with Pegasus

Pegasus converts video to text—it watches the video and describes what it sees. This helps us understand what needs improvement:

    async def _analyze_with_pegasus_content(analyze_client, video_id: str):
        """Pegasus watches the video and describes what it sees"""
        # We ask Pegasus two questions:
        prompts = [
            "Describe everything in this video: objects, movement, lighting, mood",
            "What technical issues does this video have? (quality, consistency, etc.)"
        ]

        analysis_results = []
        for prompt in prompts:
            response = analyze_client.analyze(
                video_id=video_id,
                prompt=prompt,
                temperature=0.2  # Lower = more consistent responses
            )
            analysis_results.append({
                'content_description': response.data
            })

        return analysis_results  # Returns text descriptions of the video

Smart Optimization: The detection service skips Pegasus analysis entirely when Marengo finds 0 indicators, since the video already passes quality checks:

    # Early exit: If we have 0 indicators from searches, quality is 100% - skip Pegasus
    if len(search_results) == 0 and preliminary_quality_score >= 100.0:
        logger.info("✅ Early exit: 0 search results = 100% quality, skipping Pegasus analysis for faster response")
        log_detailed(video_id, "✅ Quality Score: 100.0% (Early exit - 0 AI indicators found)", "SUCCESS")

        # Create minimal detailed logs without Pegasus
        detailed_logs = AIDetectionService._create_detailed_logs(
            search_results, [], 100.0
        )

        return {
            "search_results": search_results,
            "analysis_results": [],
            "quality_score": 100.0,
            "detailed_logs": detailed_logs
        }

4. Quality Scoring System

The system calculates a single consolidated quality score (0-100%) based on detected indicators. The scoring logic ensures that videos with no detected artifacts receive a perfect score:

    def _calculate_quality_score(search_results, analysis_results):
        """Start at 100%, subtract points for each problem found"""
        # No problems found = perfect score
        if not search_results and not analysis_results:
            return 100.0

        # Each artifact found reduces score by 3% (max 50% penalty)
        search_penalty = min(len(search_results) * 3, 50)

        # Check Pegasus analysis for quality issues mentioned
        analysis_penalty = 0
        if analysis_results:
            # Look for words like "artificial", "blurry", "inconsistent" in the analysis
            quality_keywords = ['artificial', 'synthetic', 'blurry', 'inconsistent', 'robotic']
            issues_found = sum(
                1 for result in analysis_results
                if any(keyword in str(result).lower() for keyword in quality_keywords)
            )
            analysis_penalty = min(issues_found * 8, 50)

        # Final score: 100 minus all penalties (can't go below 0)
        quality_score = max(100 - search_penalty - analysis_penalty, 0)
        return quality_score

Scoring Logic:

0 AI Indicators Found: 100% quality score (perfect - video passes as real)

Each Search Indicator: Reduces score by 3% (max 50% penalty)

Analysis Quality Issues: Additional penalties up to 50%

Final Score: Cannot go below 0%
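To make the arithmetic concrete, the sketch below applies the same penalties (3 points per search indicator, 8 points per flagged analysis result, each capped at 50) to raw counts. `score_from_counts` is a hypothetical helper written for this illustration; it is not part of the Recurser codebase.

```python
def score_from_counts(search_hits: int, analysis_issues: int) -> float:
    """Apply the scoring rules above to raw counts instead of result lists."""
    search_penalty = min(search_hits * 3, 50)
    analysis_penalty = min(analysis_issues * 8, 50)
    return float(max(100 - search_penalty - analysis_penalty, 0))


print(score_from_counts(0, 0))   # 100.0
print(score_from_counts(4, 0))   # 88.0
print(score_from_counts(4, 1))   # 80.0
print(score_from_counts(10, 2))  # 54.0
print(score_from_counts(20, 0))  # 50.0 (search penalty capped at 50)
print(score_from_counts(20, 7))  # 0.0  (both penalties capped at 50)
```

Because the search penalty is capped at 50, search indicators alone can never push a score below 50%. A lower score means the Pegasus analysis also flagged issues.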

5. Prompt Enhancement with Gemini

When quality is below target, Gemini generates an improved prompt based on detected issues:

    import json
    import os

    from google.genai import Client

    # Assumption: the API key is supplied through an environment variable
    GEMINI_API_KEY = os.environ["GEMINI_API_KEY"]


    async def _generate_enhanced_prompt(original_prompt: str, analysis_results: dict):
        """Use Gemini to improve the prompt based on what we found wrong"""
        client = Client(api_key=GEMINI_API_KEY)

        # Tell Gemini: "Here's the original prompt and what was wrong. Fix it."
        enhancement_request = f"""
        Original prompt: {original_prompt}

        Problems found: {json.dumps(analysis_results, indent=2)}

        Create a better prompt that fixes these issues. Make it more natural and realistic.
        Return only the new prompt, nothing else.
        """

        response = client.models.generate_content(
            model='gemini-2.5-flash',
            contents=enhancement_request
        )

        # Fall back to the original prompt if Gemini returns no text
        return response.text.strip() if response.text else original_prompt

Why This Approach Works (And When It Doesn't)

After building and testing Recurser extensively, we discovered something interesting: not all videos need iteration. Some prompts produce near-perfect results on the first try, while others require 3-5 iterations to reach acceptable quality.

The Iteration Sweet Spot

Our testing revealed clear patterns:

Simple scenes (single subject, static background): Often 100% on first try

Complex scenes (multiple subjects, dynamic lighting): Typically need 2-3 iterations

Unusual prompts (creative/impossible scenarios): May need 4-5 iterations or hit diminishing returns

The key insight? Early detection matters. By stopping as soon as we hit 100% quality (using "early exit" shortcuts), we avoid wasting time and money on videos that are already perfect.

Why Prompt Enhancement Beats Post-Processing

We initially tried fixing videos after they were generated—applying filters, smoothing out glitches, fixing frames. But we quickly realized:

Post-processing can't fix fundamental problems—if the model misunderstood what you wanted, filters won't help

Improving prompts fixes the root cause—each new generation starts from a better understanding

Improvements build on each other—iteration 2 learns from iteration 1, iteration 3 learns from both

However, Recurser isn't a magic bullet. It works best when:

✅ You have a clear creative vision (even if initial execution is flawed)

✅ You're willing to wait for iterative improvement (2-5 minutes per iteration)

✅ Quality threshold is well-defined (we target 100% but you can customize)

It's less effective when:

❌ The original prompt is fundamentally wrong (no amount of iteration fixes a broken concept)

❌ You need instant results (real-time generation isn't possible with iteration)

❌ Cost is prohibitive (each iteration costs generation + analysis API calls)

Key Design Decisions

1. Separation of Detection and Generation

We deliberately kept AI detection (TwelveLabs) separate from video generation (Veo2). This wasn't just a technical choice—it was strategic:

Works with any generator: Switch from Veo2 to Veo3 (or any future model) without changing how we detect problems

Cost control: Detection is cheaper than generation, so we can afford to check thoroughly

Independent scaling: Scale detection and generation separately based on what's slow

2. Comprehensive Detection Over Speed

We prioritize thorough detection over speed optimizations. Here's our approach:

Marengo Comprehensive Check: We check all 15 categories to ensure thorough detection. We previously had an early exit heuristic that stopped after 8 searches (more than half) if zero problems were found, but we removed it to guarantee we never miss subtle artifacts that might only appear in later categories. While this means perfect videos make 15 API calls instead of 8, the trade-off is worth it for accuracy.

Pegasus Skip: If Marengo finds nothing across all 15 categories, skip expensive Pegasus analysis entirely. This is still our biggest optimization—skipping analysis when no problems are found.

Completion Checks: Every 5 searches, verify if video already completed (parallel processing can mark videos complete early)

Videos that start at 100% quality therefore finish faster than videos with issues: all 15 categories are still searched, but the Pegasus analysis is skipped when no indicators are found.

3. Real-time Feedback Without WebSockets

We couldn't use WebSockets (Vercel's serverless functions don't support them), so we built a polling system that checks for updates often enough to feel real-time:

Global Log Buffer: 200-entry rolling buffer captures all backend activity

Smart Polling: 1-second intervals with connection management and buffer delays

Noise Filtering: Remove repetitive API calls from logs, highlight important events

The result? Users see updates within 1-2 seconds, even without true streaming.
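A rolling buffer with a polling cursor can be sketched in a few lines. The 200-entry size comes from the description above; everything else here is an assumption for illustration, including the `LogBuffer` class name and the idea that each poll sends the ID of the last entry the client has seen.

```python
from collections import deque
from itertools import count


class LogBuffer:
    """Fixed-size rolling buffer; the oldest entries drop off once it is full."""

    def __init__(self, maxlen: int = 200):
        self._entries: deque = deque(maxlen=maxlen)
        self._ids = count(1)

    def append(self, message: str) -> int:
        """Store a message and return its monotonically increasing ID."""
        entry_id = next(self._ids)
        self._entries.append((entry_id, message))
        return entry_id

    def since(self, last_seen_id: int) -> list:
        """Entries newer than the client's cursor (what one poll would return)."""
        return [entry for entry in self._entries if entry[0] > last_seen_id]


buffer = LogBuffer(maxlen=3)
for n in range(1, 6):
    buffer.append(f"event {n}")

print(buffer.since(0))  # [(3, 'event 3'), (4, 'event 4'), (5, 'event 5')]
print(buffer.since(4))  # [(5, 'event 5')]
```

Because `deque(maxlen=...)` discards old entries automatically, memory stays bounded no matter how long an iteration runs, and a client polling once per second only receives entries it has not seen yet.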

4. Graceful Degradation Over Perfect Reliability

We prioritized "keep going even if something breaks" over "never break." Why? Because AI services sometimes fail—rate limits, timeouts, temporary outages. But users shouldn't lose their progress:

Pegasus Failures: Continue with backup analysis, log the failure, finish anyway

API Rate Limits: Clear error messages, wait and retry, pick up where we left off

Partial Failures: If 14 out of 15 searches succeed, use those results and continue

The philosophy: A partial result is better than no result.
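One way to express "wait and retry, then continue with what succeeded" is a per-category retry with exponential backoff. The sketch below is an assumption-laden illustration rather than Recurser's code: `search_with_fallback`, its parameters, and the injected `search` callable are hypothetical, and the retry counts and delays are placeholder values.

```python
import time
from typing import Callable


def search_with_fallback(
    queries: dict,
    search: Callable[[str], list],
    retries: int = 2,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> tuple:
    """Run each query with retries; return (results, failed_categories)."""
    results: list = []
    failed: list = []
    for category, query_text in queries.items():
        for attempt in range(retries + 1):
            try:
                results.extend(search(query_text))
                break
            except Exception:
                if attempt == retries:
                    # Give up on this category only; the others still run.
                    failed.append(category)
                else:
                    # Exponential backoff: 1s, 2s, 4s, ... with the defaults.
                    sleep(base_delay * 2 ** attempt)
    return results, failed
```

The caller gets back both the usable results and the list of categories that failed, so a run where 14 of 15 searches succeed can still be scored while the failure is logged.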

Performance Engineering: What We Learned

Building Recurser taught us a lot about making AI systems faster. Here's what actually made a difference:

The 80/20 Rule Applied to AI Pipelines

We spent 80% of our optimization effort on three things:

Conditional Pegasus: Skipping Pegasus analysis when Marengo finds 0 indicators saves ~$0.05 per video and 30+ seconds. This is our biggest optimization—if no problems are found in the search phase, we skip the expensive analysis phase entirely.

Smart Search Batching: Checking completion status every 5 searches instead of after every search cut database queries 5x (from 15 checks per video to 3). But more importantly, it let us update completion status in the background without slowing down searches.

Comprehensive Detection: We check all 15 categories instead of using an early exit heuristic. While this means perfect videos make 15 API calls instead of 8, it ensures we never miss subtle artifacts. The trade-off is worth it for accuracy.

What Didn't Work (And Why)

We tried several optimizations that didn't pan out:

Parallel Search Queries: We tried running all 15 search categories at the same time, but hit rate limits. Doing them sequentially was more reliable.

Caching Analysis Results: We tried saving Pegasus analysis results for similar videos, but each video was too different to reuse results.

Frame Sampling: We tested analyzing only some frames to save time, but missed problems that only show up over time. Full analysis was worth it.

Frontend: A Hidden Performance Bottleneck

The frontend isn't just a UI—it's a real-time monitoring system. Our optimizations:

Rolling Logs: Fixed memory leaks from unbounded log arrays (we hit 10,000+ entries in testing)

Single Video Player: Reduced from 3 components to 1 unified player—smaller bundle, faster renders

Noise Filtering: Removed 80% of log entries that weren't user-actionable (internal API calls, etc.)

The result? Frontend stays responsive even during long-running iterations.

Data Outputs

Recurser generates comprehensive outputs for each video:

Video Metadata

Final Video: Best iteration (highest quality score)

All Iterations: Complete history of enhancement attempts

Quality Scores: Per-iteration confidence tracking

Analysis Results

Search Results: Detailed list of detected AI indicators by category

Analysis Results: Pegasus content analysis and quality insights

Detailed Logs: Complete processing history with timestamps

Enhancement Insights

Original Prompt: Initial video generation prompt

Enhanced Prompts: Each iteration's improved prompt

Improvement Trajectory: Quality score progression over iterations
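These outputs map naturally onto a small record type. The sketch below is one possible shape, not Recurser's actual schema: the `Iteration` and `EnhancementRecord` classes and all field names are assumptions chosen to mirror the list above.

```python
from dataclasses import dataclass, field


@dataclass
class Iteration:
    number: int
    prompt: str            # the prompt used for this generation
    quality_score: float   # 0-100
    video_url: str = ""


@dataclass
class EnhancementRecord:
    original_prompt: str
    iterations: list = field(default_factory=list)

    def best(self) -> Iteration:
        """Final video: the iteration with the highest score (earliest wins ties)."""
        return max(self.iterations, key=lambda it: it.quality_score)

    def trajectory(self) -> list:
        """Quality score progression over iterations."""
        return [it.quality_score for it in self.iterations]


record = EnhancementRecord(
    original_prompt="A cat drinking tea in a garden",
    iterations=[
        Iteration(1, "A cat drinking tea in a garden", 82.0),
        Iteration(2, "A cat drinking tea, soft natural light", 97.0),
    ],
)
print(record.best().number)  # 2
print(record.trajectory())   # [82.0, 97.0]
```

Note that `best()` raises `ValueError` on a record with no iterations, so callers should only use it after at least one generation has been scored.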

Usage Examples

Example 1: Generate from Prompt

    POST /api/videos/generate
    {
      "prompt": "A cat drinking tea in a garden",
      "max_iterations": 5,
      "target_confidence": 100.0,
      "index_id": "your_index_id",
      "twelvelabs_api_key": "your_api_key"
    }

Response:

    {
      "success": true,
      "video_id": 123,
      "status": "processing",
      "message": "Video generation started"
    }

Example 2: Upload Existing Video

    POST /api/videos/upload
    {
      "file": <video_file>,
      "prompt": "Original prompt used",
      "max_iterations": 3,
      "index_id": "your_index_id",
      "twelvelabs_api_key": "your_api_key"
    }

Example 3: Check Status

GET /api/videos/123/status

Response:

    {
      "success": true,
      "data": {
        "video_id": 123,
        "status": "completed",
        "current_confidence": 100.0,
        "iteration_count": 2,
        "final_confidence": 100.0,
        "video_url": "https://..."
      }
    }
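Since generation runs in the background, a client typically polls the status endpoint until the job finishes. The sketch below shows one way to do that with the standard library. The helper names, the polling interval, and the rule that any status other than "processing" is terminal are assumptions; only the endpoint path and the response fields come from the examples above.

```python
import json
import time
import urllib.request
from typing import Callable


def fetch_status(base_url: str, video_id: int) -> dict:
    """GET /api/videos/{id}/status and decode the JSON body."""
    url = f"{base_url}/api/videos/{video_id}/status"
    with urllib.request.urlopen(url) as resp:
        return json.load(resp)


def wait_for_completion(
    get_status: Callable[[], dict],
    interval: float = 5.0,
    max_polls: int = 120,
    sleep: Callable[[float], None] = time.sleep,
) -> dict:
    """Poll until the status is no longer "processing"; return the "data" object."""
    for _ in range(max_polls):
        data = get_status().get("data", {})
        # Assumption: anything other than "processing" is a terminal state.
        if data.get("status") != "processing":
            return data
        sleep(interval)
    raise TimeoutError("video still processing after max_polls checks")
```

A call might look like `wait_for_completion(lambda: fetch_status("https://your-deployment.example", 123))`, where the base URL is a placeholder for wherever the backend is hosted. Passing `get_status` as a callable keeps the polling logic testable without a live server.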

Interpreting Quality Scores

Quality scores aren't random—they're based on actually finding problems:

100%: Zero problems found across 250+ checks. Video looks real to professionals.

80-99%: 1-6 small problems found. Usually fixable in 1-2 iterations.

50-79%: 7-15 problems found. Multiple types of issues. Needs 3-5 iterations.

<50%: 16+ problems found. Serious generation issues. May need to rewrite the prompt entirely.

When to Stop Iterating

Recurser automatically stops at 100%, but you might want to stop earlier if:

Cost constraints: Each iteration costs generation + analysis (~$0.10-0.50 depending on video length)

Time constraints: Iterations take 2-5 minutes each

"Good enough" threshold: For internal use, 85%+ might be acceptable

Diminishing returns: If quality plateaus for 2+ iterations, the prompt might need fundamental changes
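The diminishing-returns rule can be checked mechanically from the per-iteration scores. The sketch below is one possible definition of a plateau, with hypothetical names and thresholds: `has_plateaued` reports a plateau when none of the last `window` iterations improved on the earlier best score by at least `min_gain` points.

```python
def has_plateaued(scores: list, window: int = 2, min_gain: float = 1.0) -> bool:
    """True if the last `window` scores failed to beat the earlier best by min_gain."""
    if len(scores) <= window:
        return False  # not enough history to judge
    best_before = max(scores[:-window])
    return max(scores[-window:]) < best_before + min_gain


print(has_plateaued([70.0, 82.0, 82.0, 81.0]))  # True: stuck at 82 for two iterations
print(has_plateaued([70.0, 82.0, 90.0]))        # False: still improving
print(has_plateaued([70.0, 82.0]))              # False: too few iterations
```

A check like this could run after each analysis step and stop the loop early, leaving the decision to rewrite the prompt to the user.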

Beyond Basic Enhancement: Advanced Use Cases

Recurser's iterative approach opens up possibilities beyond simple quality improvement:

A/B Testing Prompt Variations

Instead of guessing which prompt works best, generate multiple versions and let Recurser figure out which one reaches 100% quality fastest. The iteration count tells you which prompt is better.
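As a rough sketch of that comparison, the function below ranks prompt variants by how many iterations each needed to reach the target score. `rank_prompts` and the `run` callable are hypothetical: `run` stands in for a full Recurser run and is assumed to return the list of per-iteration quality scores for a prompt.

```python
from typing import Callable, Optional


def rank_prompts(
    prompts: list,
    run: Callable[[str], list],
    target: float = 100.0,
) -> list:
    """Return (prompt, iterations_to_target) pairs, fastest first."""

    def iterations_to_target(scores: list) -> Optional[int]:
        for i, score in enumerate(scores, start=1):
            if score >= target:
                return i
        return None  # never reached the target

    results = [(p, iterations_to_target(run(p))) for p in prompts]
    # Prompts that never reached the target sort last.
    return sorted(results, key=lambda r: (r[1] is None, r[1] or 0))
```

Each variant costs a full set of generations and analyses, so this kind of comparison is best reserved for prompts that will be reused many times.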