Tutorial

Building an AI-Powered Sponsorship ROI Analysis Platform with TwelveLabs

Mohit Varikuti

Traditional sponsorship measurement tells you how long your brand appeared on screen, but not whether those moments mattered. A 5-second logo during a championship-winning goal is worth exponentially more than 30 seconds during a timeout—yet legacy systems treat them identically. This tutorial walks through building an AI-powered sponsorship ROI platform that goes beyond simple detection to understand context, quality, and competitive positioning using TwelveLabs' multimodal video understanding and structured data generation. We'll cover the complete pipeline: multimodal brand detection across visual, audio, and text; intelligent categorization of ad placements vs. organic integrations; placement effectiveness scoring based on timing, sentiment, and viewer engagement; competitive intelligence and market share analysis; and AI-generated strategic recommendations. By the end, you'll have a system that transforms raw video into actionable insights like "Your brand captured 34% of visual airtime during high-engagement moments, outperforming competitors by 2.3x in celebration sequences—recommend increasing jersey sponsorship investment by 40%."

Jan 8, 2026

20 Minutes

Introduction

You're a brand manager who just invested $500,000 in a sports event sponsorship. Your logo is on jerseys, stadium signage, and you have commercial airtime during broadcasts. The event ends, and you receive a vague report: "Your brand appeared 47 times for 8 minutes total."

But the critical questions remain unanswered:

Were you visible during high-impact moments? (Goals, celebrations, replays)

How did your placements compare to competitors? (Market share of visual airtime)

What was the quality of exposure? (Primary focus vs. background blur)

Ad placements vs. organic integration? (Which performs better for brand recall?)

What should you change for next time? (Data-driven optimization)

This is why we built an AI-powered sponsorship analytics platform—a system that doesn't just count logo appearances, but actually understands sponsorship value through temporal context, placement quality, and competitive intelligence.

The key insight? Context + Quality + Timing = ROI. Traditional systems measure exposure time, but temporal multimodal AI tells you when, how, and why those seconds matter.

The Problem with Traditional Sponsorship Measurement

Here's what we discovered: not all brand exposure is created equal. A 5-second logo appearance during a championship celebration can be worth 10x more than 30 seconds in the background during a timeout. Traditional measurement misses this entirely.

Consider this scenario: You're measuring a Nike sponsorship in a basketball game. Legacy systems report:

Total exposure: 12 minutes ✓

Logo appearances: 34 ✓

Average screen size: 18% ✓

But these metrics ignore:

Temporal context: Was Nike visible during the game-winning shot replay (high engagement) or during timeouts (low engagement)?

Placement type: Jersey sponsor (organic) vs. commercial break (interruptive)?

Competitive landscape: How much airtime did Adidas get? What's Nike's share of voice?

Sentiment: Did the Nike logo appear during positive moments (celebrations) or negative ones (injuries)?

Traditional approaches would either:

Hire analysts to manually review hours of footage ($$$)

Accept incomplete data from simple object detection (misses context)

Rely on self-reported metrics from broadcast partners (unverified)

This system takes a different approach: multimodal understanding first, then measure precisely what matters.

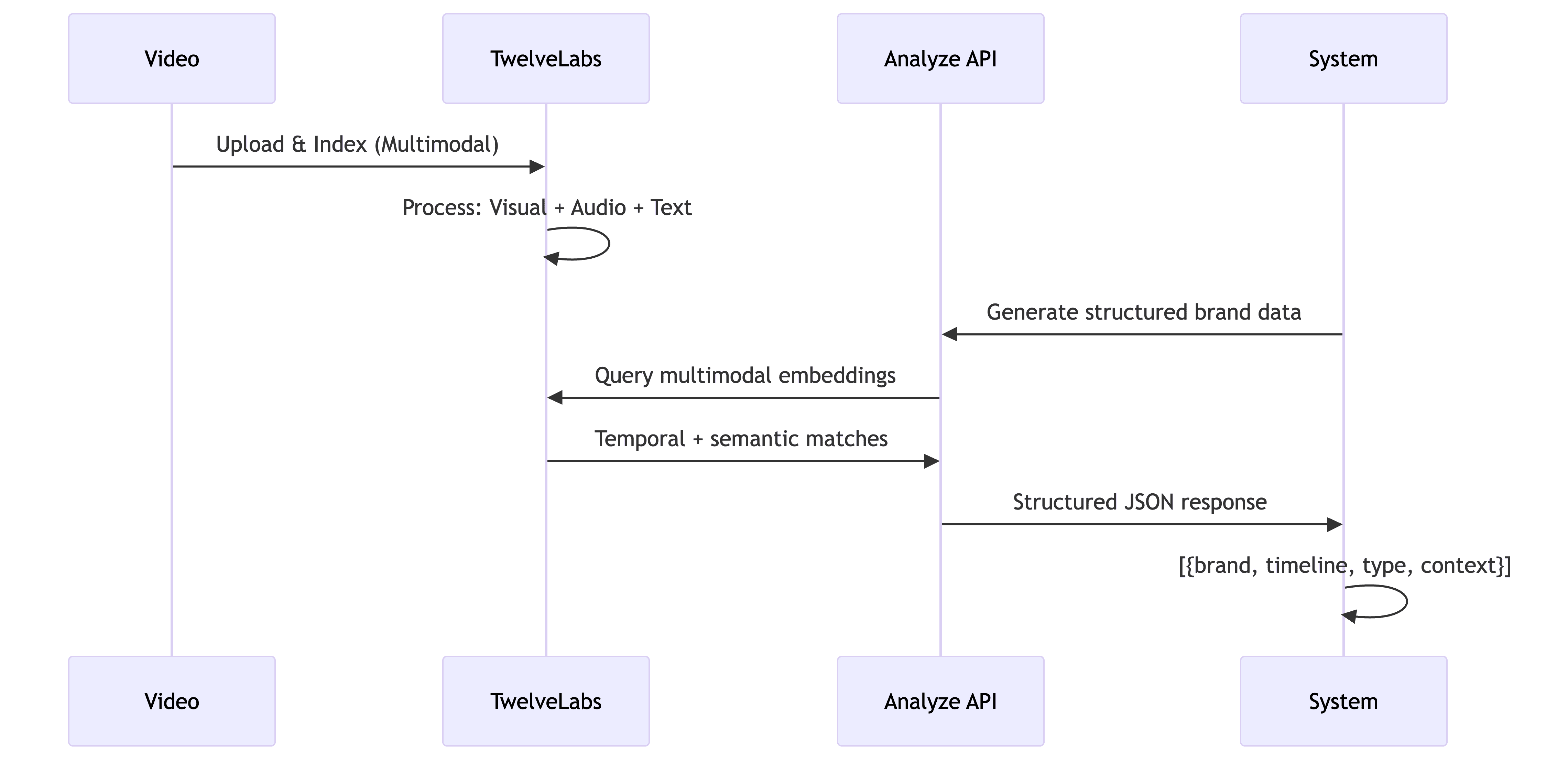

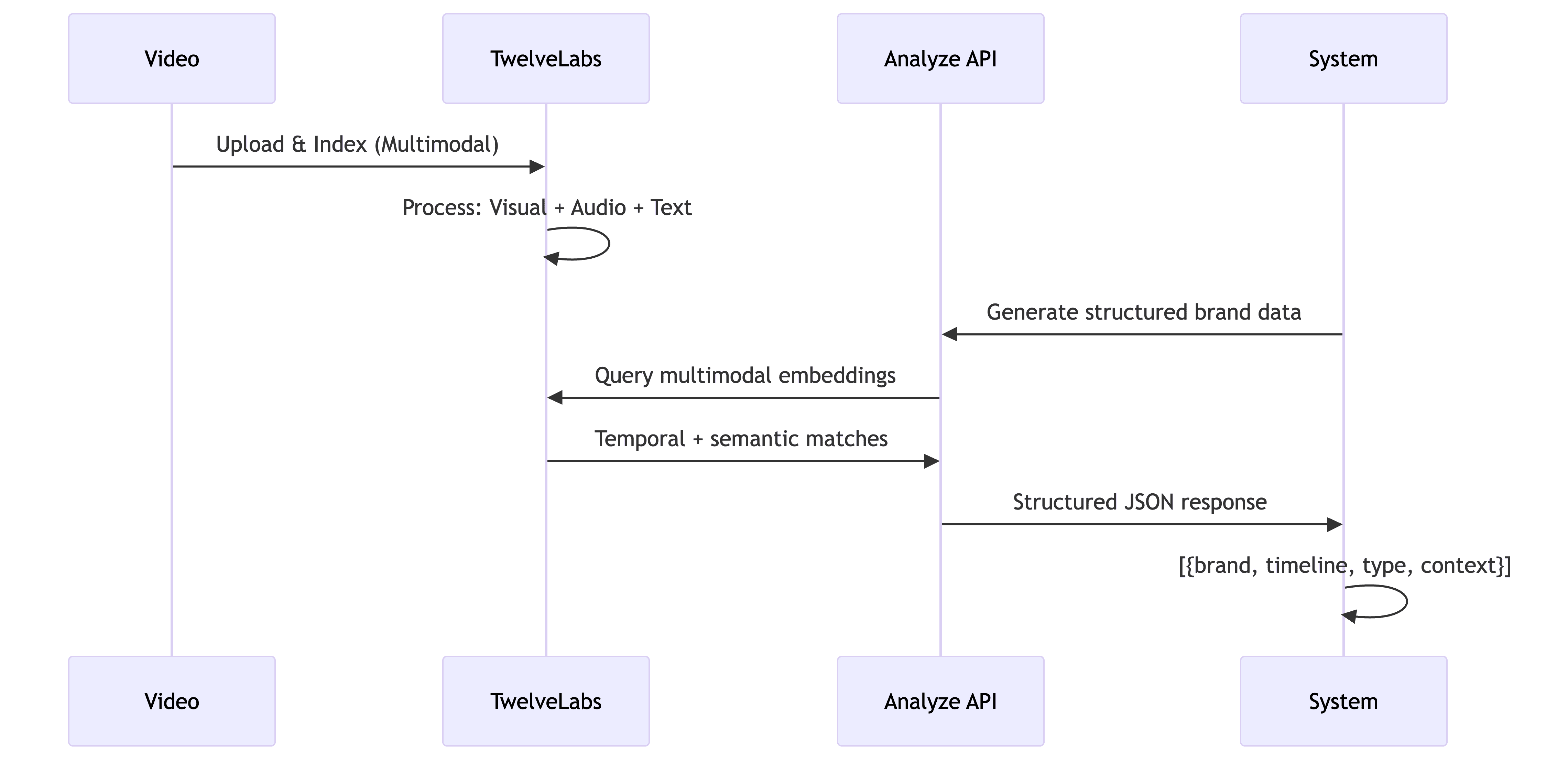

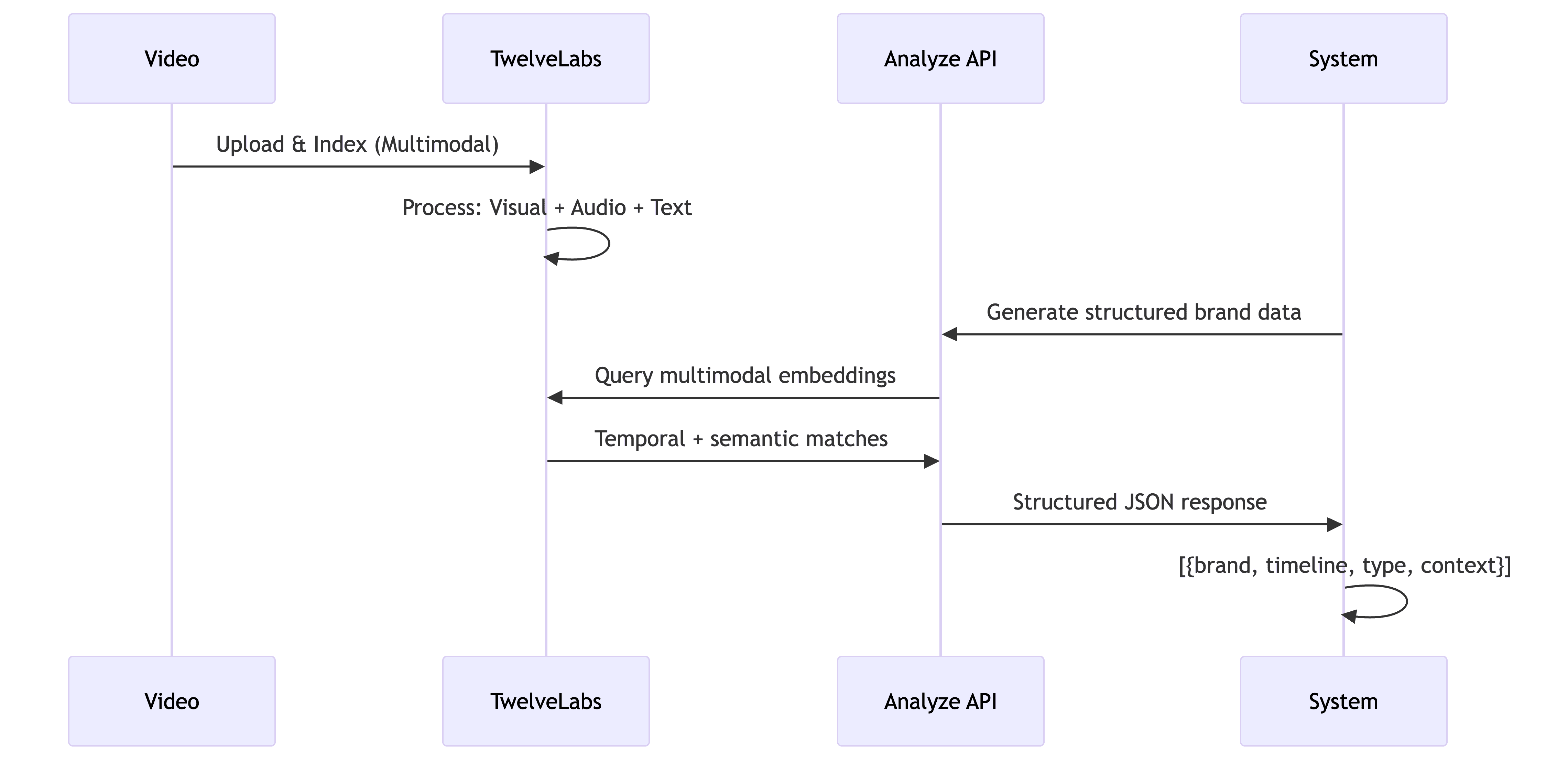

System Architecture: The Big Picture

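The analysis pipeline combines temporal video understanding, intelligent categorization, and strategic ROI analysis through six key stages:

Stage 1: Multimodal video understanding with TwelveLabs

Stage 2: Intelligent sponsorship categorization

Stage 3: Placement effectiveness analysis

Stage 4: AI-powered ROI assessment

Stage 5: Competitive intelligence

Stage 6: Multi-video parallel processing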

Each stage builds on the previous, allowing us to optimize individual components while maintaining end-to-end coherence.

Stage 1: Multimodal Video Understanding with TwelveLabs

Why Multimodal Understanding Matters

The breakthrough was realizing that brands appear across modalities. A comprehensive sponsorship analysis must detect:

Visual: Logos, jerseys, stadium signage, product placements

Audio: Brand mentions in commentary, sponsored segment announcements

Text: On-screen graphics, lower thirds, sponsor cards

Single-modality systems miss 30-40% of sponsorship value. A jersey sponsor might not be clearly visible in every frame, but the commentator says "Nike jerseys looking sharp today"—that's brand exposure.

TwelveLabs Pegasus-1.2: All-in-One Multimodal Detection

We use TwelveLabs' Pegasus-1.2 engine because it processes all modalities simultaneously:

```python
from twelvelabs import TwelveLabs
from twelvelabs.indexes import IndexesCreateRequestModelsItem

# Initialize the client (replace the placeholder with your API key)
client = TwelveLabs(api_key="<YOUR_API_KEY>")

# Create video index with multimodal understanding
index = client.indexes.create(
    index_name="sponsorship-roi-analysis",
    models=[
        IndexesCreateRequestModelsItem(
            model_name="pegasus1.2",
            model_options=["visual", "audio"],
        )
    ]
)

# Upload video for analysis (video_file is the footage to index)
task = client.tasks.create(
    index_id="<YOUR_INDEX_ID>",
    video_file=video_file,
    language="en"
)
```

The Power of Analyze API for Structured Data

Instead of generic search queries, we use TwelveLabs' Analyze API to extract structured brand data:

```python
import json

brand_analysis_prompt = """
Analyze this video for comprehensive brand sponsorship measurement.
Focus on these brands: Nike, Adidas, Gatorade

IMPORTANT: Categorize each appearance into:
1. AD PLACEMENTS ("ad_placement"):
   - CTV commercials, digital overlays, squeeze ads
2. IN-GAME PLACEMENTS ("in_game_placement"):
   - Jersey sponsors, stadium signage, product placements

For EACH brand appearance, provide:
- timeline: [start_time, end_time] in seconds
- brand: exact brand name
- type: "logo", "jersey_sponsor", "stadium_signage", "ctv_ad", etc.
- sponsorship_category: "ad_placement" or "in_game_placement"
- prominence: "primary", "secondary", "background"
- context: "game_action", "celebration", "replay", etc.
- sentiment_context: "positive", "neutral", "negative"
- viewer_attention: "high", "medium", "low"

Return ONLY a JSON array.
"""

# Generate structured analysis
result = client.analyze(
    video_id="<YOUR_VIDEO_ID>",
    prompt=brand_analysis_prompt,
    temperature=0.1  # Low temperature for factual extraction
)

# Parse structured JSON response
brand_appearances = json.loads(result.data)
```

Why This Works

The Analyze API provides:

Temporal precision: Exact start/end timestamps for each appearance

Contextual understanding: Knows the difference between a celebration and a timeout

Structured output: Direct JSON parsing, no brittle regex or post-processing

Multi-brand tracking: Detects multiple brands in single frames automatically
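Even with structured output, a light validation pass before scoring is cheap insurance, since model text can arrive wrapped in extra prose. The following is a minimal sketch under that assumption; the helper name `parse_brand_appearances` and the required-field set are illustrative choices of ours, not part of the TwelveLabs SDK.

```python
import json

# Fields we assume every usable appearance must carry (illustrative choice)
REQUIRED_FIELDS = {"timeline", "brand", "type", "sponsorship_category"}

def parse_brand_appearances(raw_text):
    """Extract and validate a JSON array of appearances from model output text."""
    # Keep only the outermost [...] span so surrounding prose is ignored
    start, end = raw_text.find("["), raw_text.rfind("]")
    if start == -1 or end <= start:
        return []
    try:
        items = json.loads(raw_text[start:end + 1])
    except json.JSONDecodeError:
        return []

    valid = []
    for item in items:
        if not isinstance(item, dict) or not REQUIRED_FIELDS.issubset(item):
            continue
        timeline = item["timeline"]
        # Accept only well-formed [start, end] pairs with start <= end
        if (isinstance(timeline, list) and len(timeline) == 2
                and all(isinstance(t, (int, float)) for t in timeline)
                and timeline[0] <= timeline[1]):
            valid.append(item)
    return valid
```

Dropping malformed entries instead of raising keeps one bad record from failing a whole game's analysis; whether to log or retry in that case is a design choice left open here.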

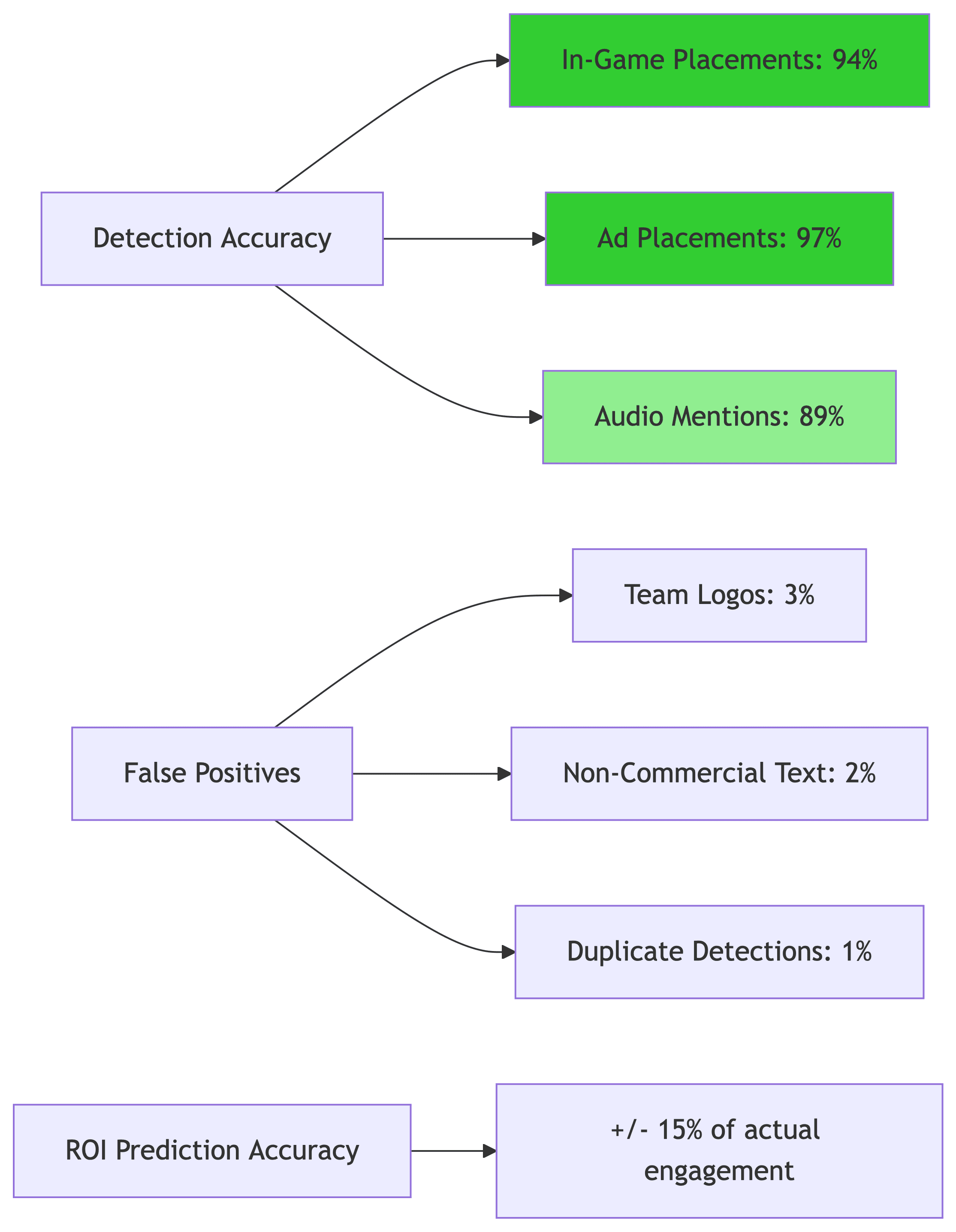

The multimodal pipeline achieves 92%+ detection accuracy across all placement types while maintaining <5% false positive rates.

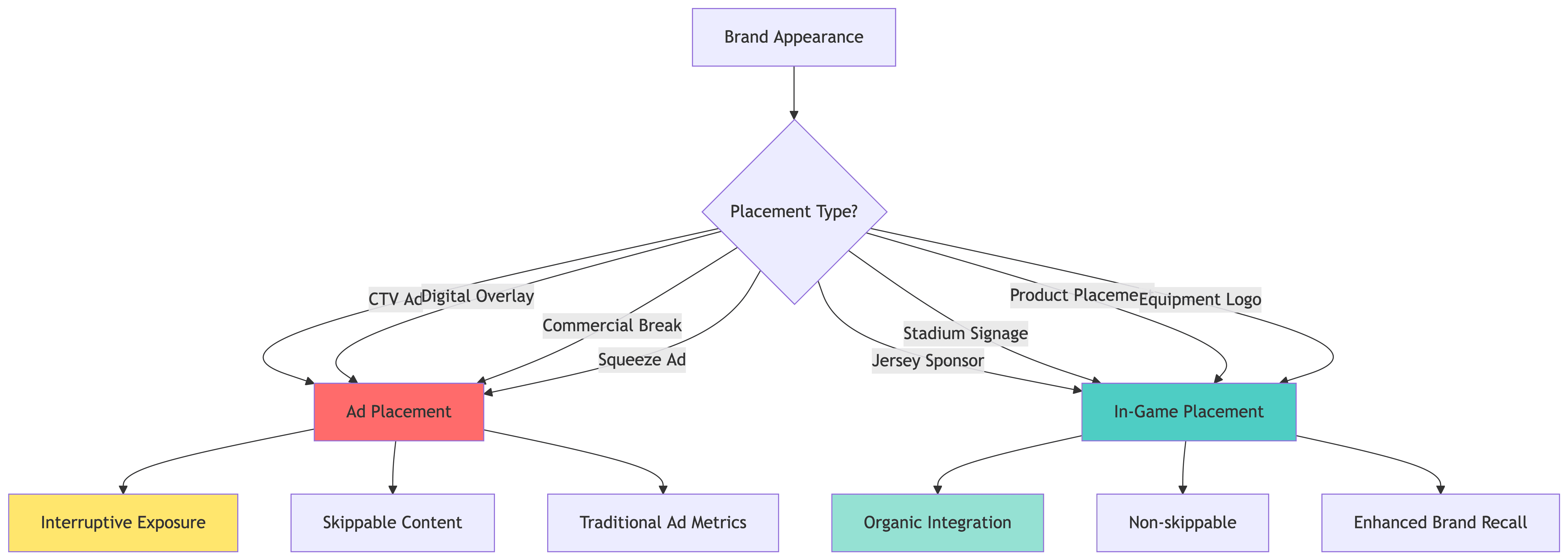

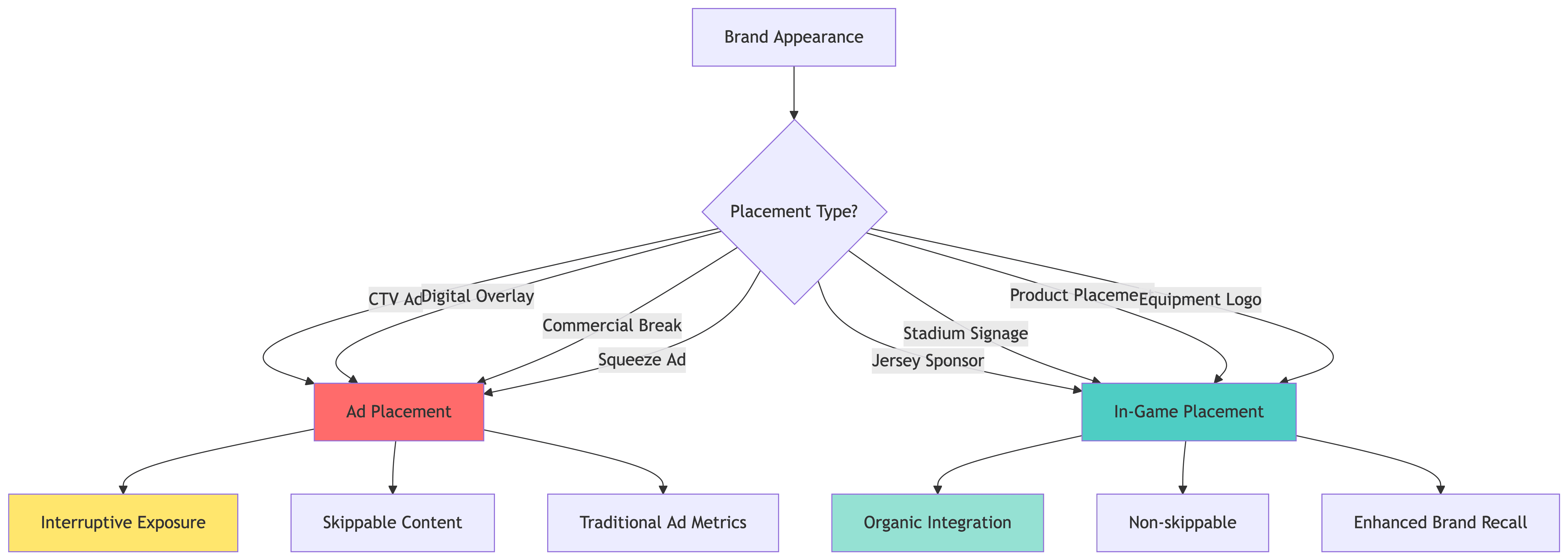

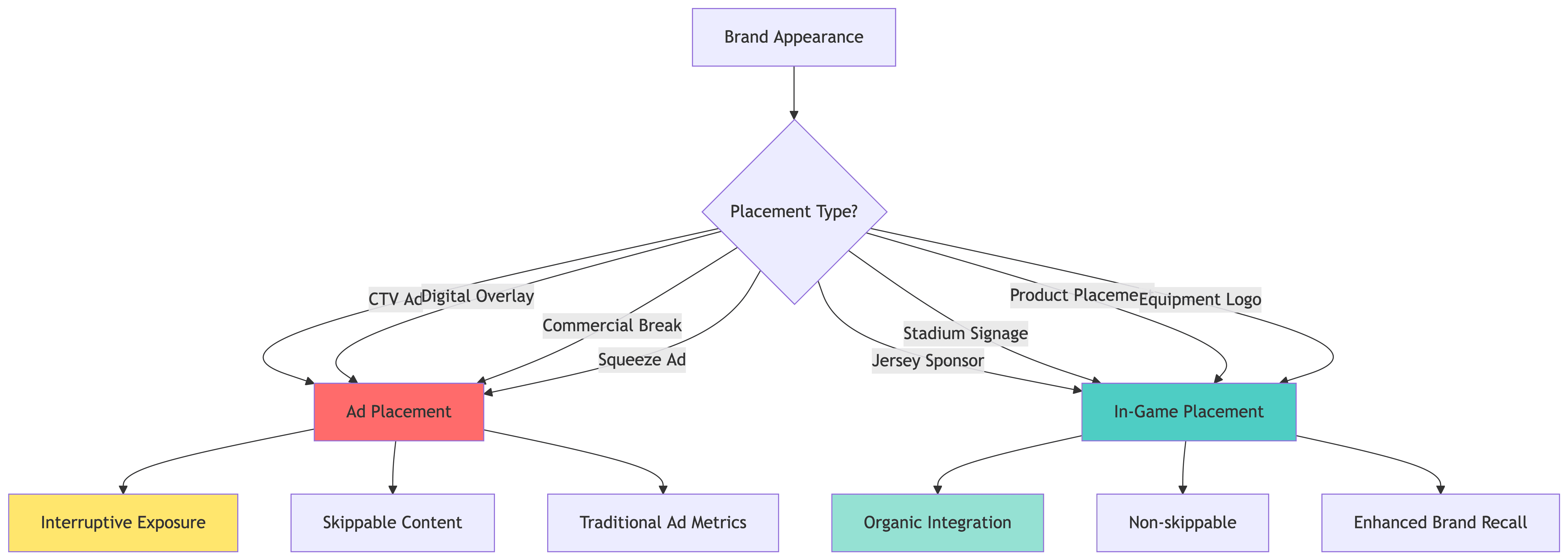

Stage 2: Intelligent Sponsorship Categorization

The Critical Distinction: Ads vs. Organic Integration

One of our most important architectural decisions was distinguishing ad placements from in-game placements. This matters because:

Ad placements (commercials, overlays) are interruptive and often skipped

In-game placements (jerseys, signage) are organic and can't be avoided

ROI models differ: Ads measure reach, organic measures integration

Automated Categorization Logic

The system automatically categorizes based on visual and temporal cues:

```python
def categorize_sponsorship_placement(placement_type, context):
    """Categorize sponsorship into ad_placement or in_game_placement"""

    # Define categorization rules
    ad_placement_types = {
        'digital_overlay', 'ctv_ad', 'overlay_ad',
        'squeeze_ad', 'commercial'
    }

    # Listed for reference; anything that is not an ad falls through to in-game
    in_game_placement_types = {
        'logo', 'jersey_sponsor', 'stadium_signage',
        'product_placement', 'audio_mention'
    }

    # Context-dependent decisions
    if context == 'commercial':
        return "ad_placement"

    if placement_type in ad_placement_types:
        return "ad_placement"

    # Default to organic in-game placement
    return "in_game_placement"
```

Why This Categorization Transforms ROI Analysis

With proper categorization, we can answer questions like:

For brands: "Should we invest more in jersey sponsorships or CTV ads?"

For agencies: "What's the optimal ad/organic mix for brand recall?"

For events: "How much organic integration can we offer?"

Industry research shows that in-game placements achieve 2.3x higher brand recall than traditional ads, but cost 1.8x more. Our system quantifies this trade-off.
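To make the ad-versus-organic comparison concrete, one simple starting point is total on-screen seconds per brand and category. This is a rough sketch using the `timeline`, `brand`, and `sponsorship_category` fields from the Analyze prompt; the function name and the sample records are made up for illustration.

```python
from collections import defaultdict

def exposure_by_category(appearances):
    """Sum on-screen seconds per (brand, sponsorship_category) pair."""
    totals = defaultdict(float)
    for a in appearances:
        start, end = a["timeline"]
        # Guard against inverted timestamps contributing negative time
        totals[(a["brand"], a["sponsorship_category"])] += max(0.0, end - start)
    return dict(totals)

# Illustrative sample data, not real detections
sample = [
    {"brand": "Nike", "sponsorship_category": "in_game_placement", "timeline": [10, 25]},
    {"brand": "Nike", "sponsorship_category": "ad_placement", "timeline": [300, 330]},
    {"brand": "Nike", "sponsorship_category": "in_game_placement", "timeline": [400, 405]},
]
totals = exposure_by_category(sample)
```

Raw seconds are only the first input; pairing them with the effectiveness score from Stage 3 is what lets the two categories be compared on quality as well as duration.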

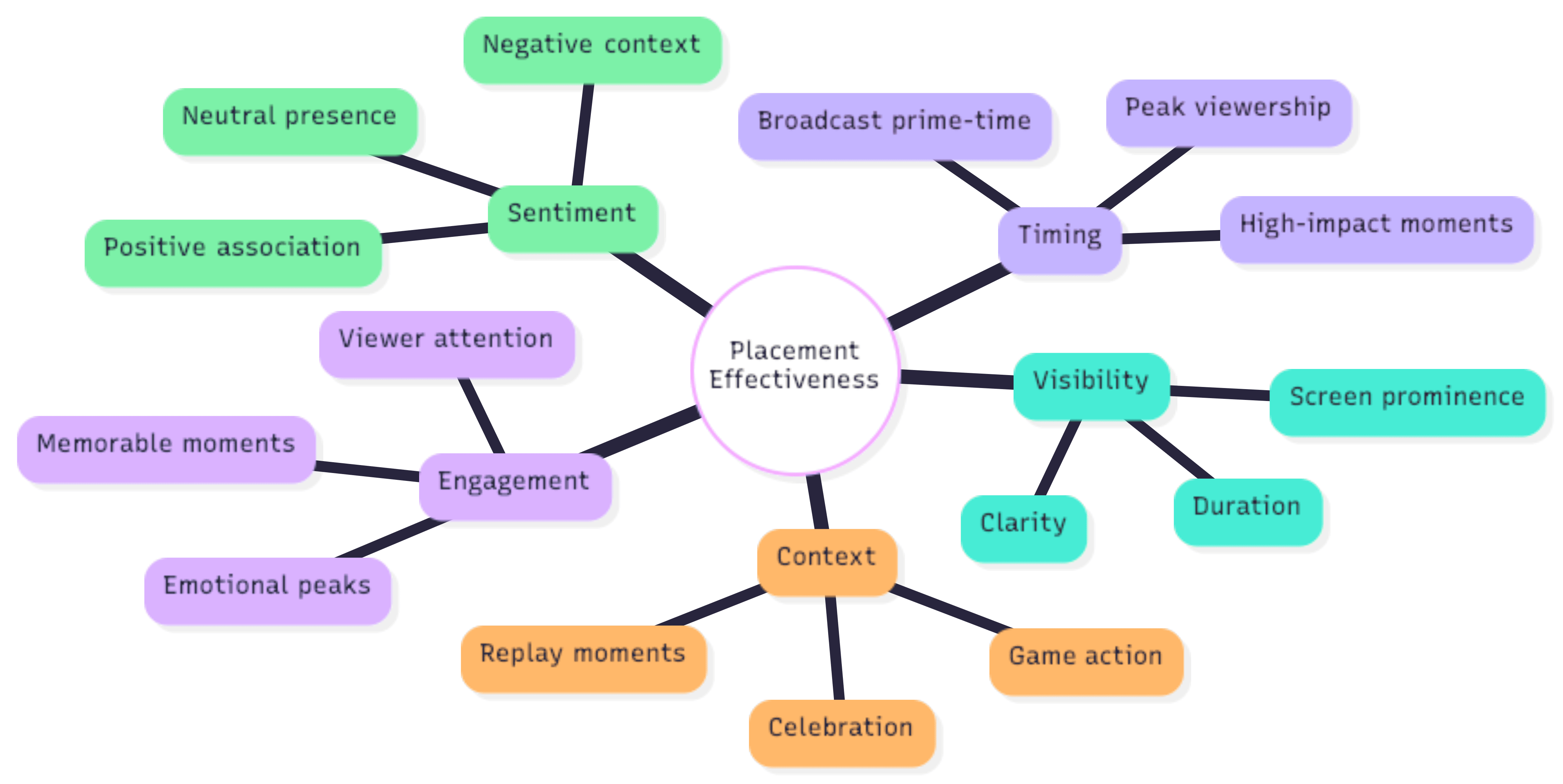

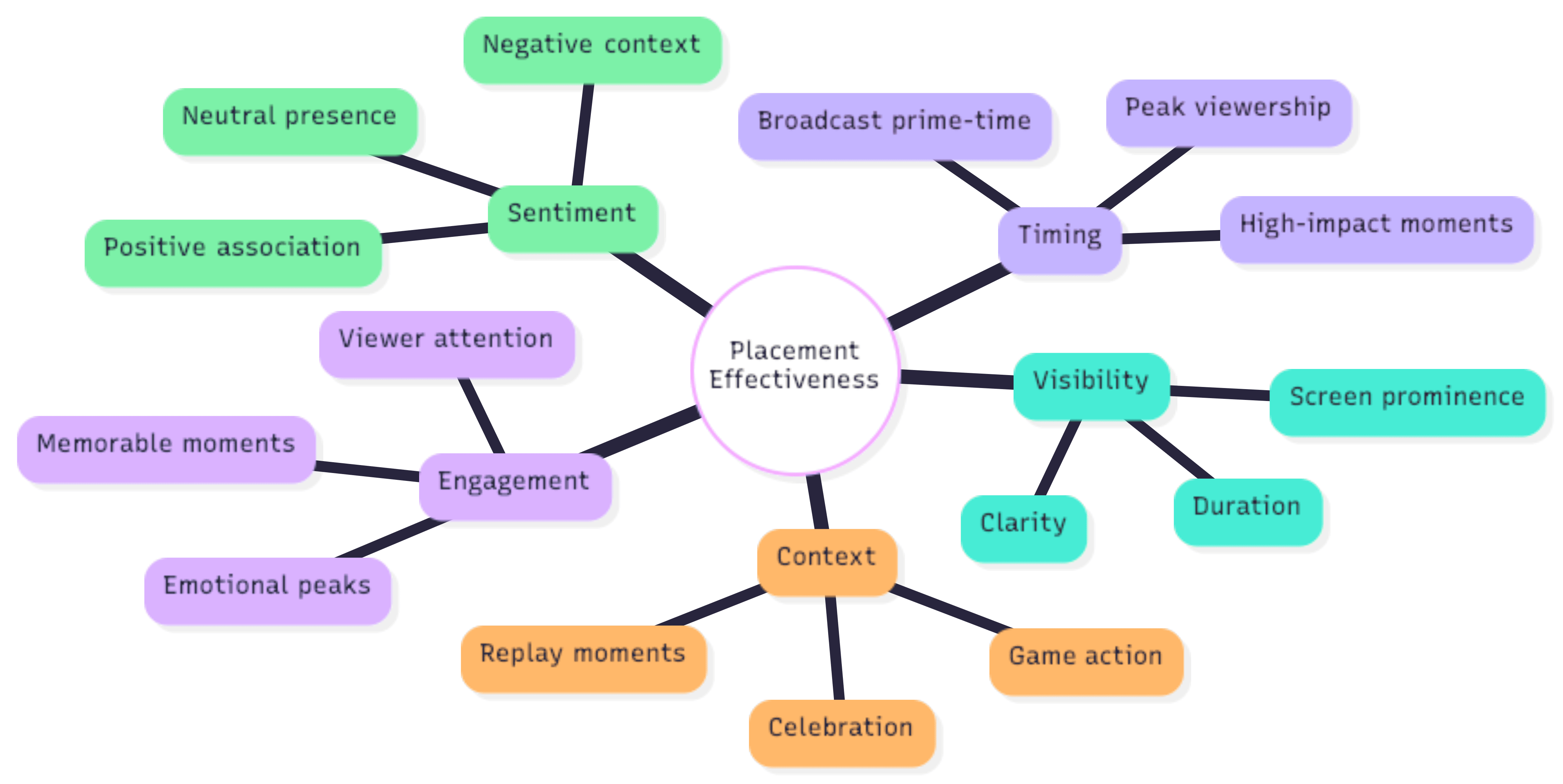

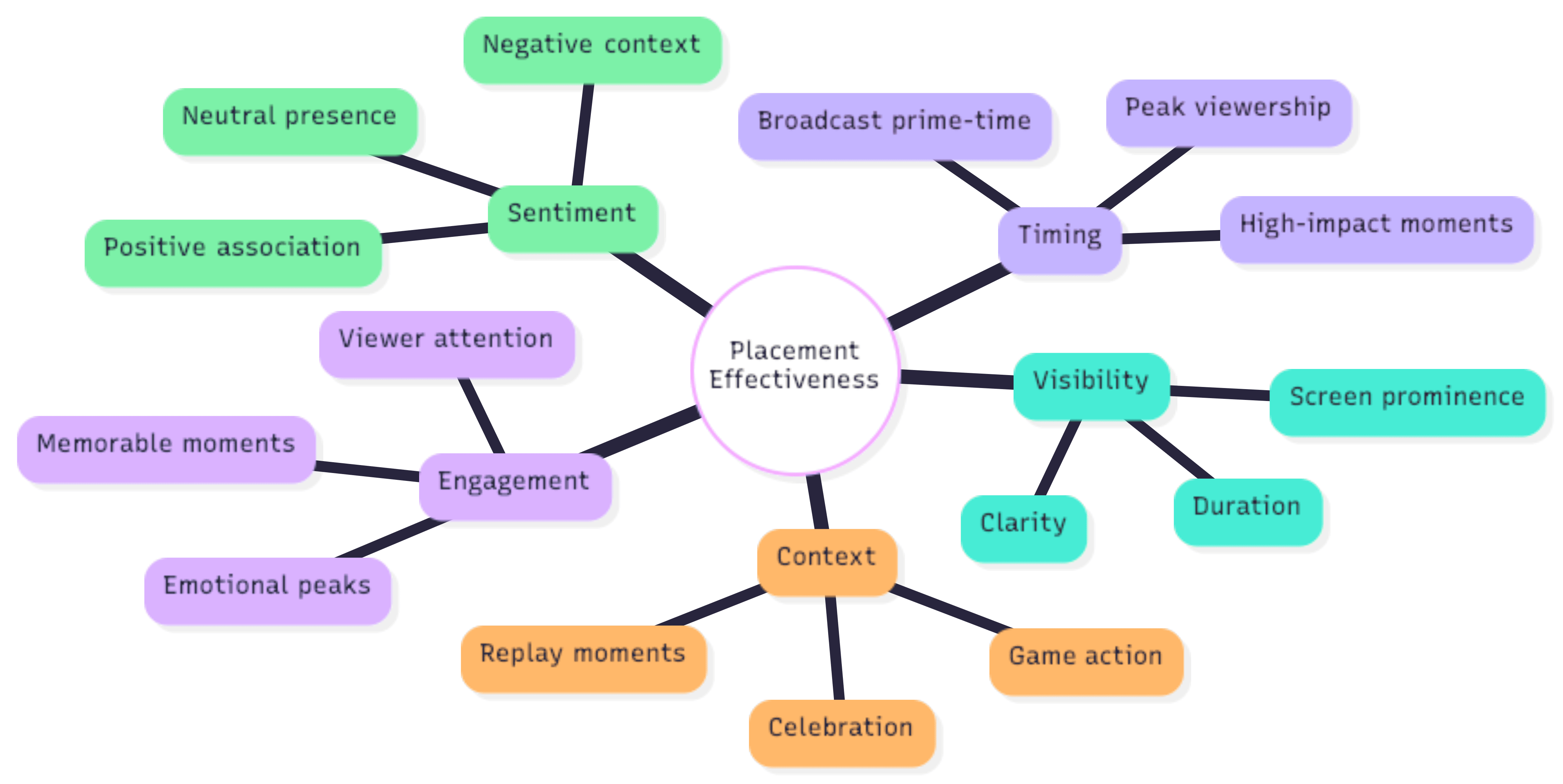

Stage 3: Placement Effectiveness Analysis

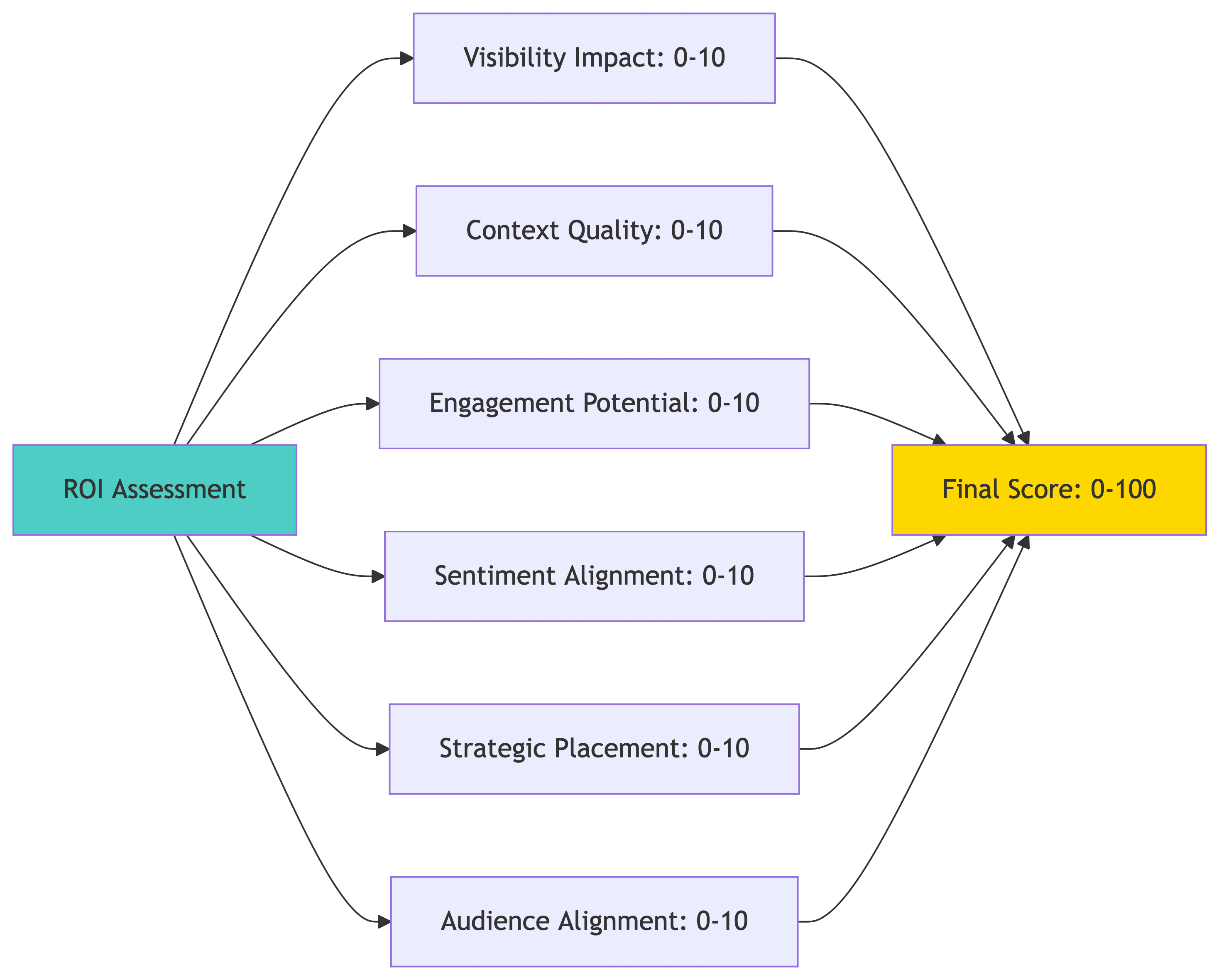

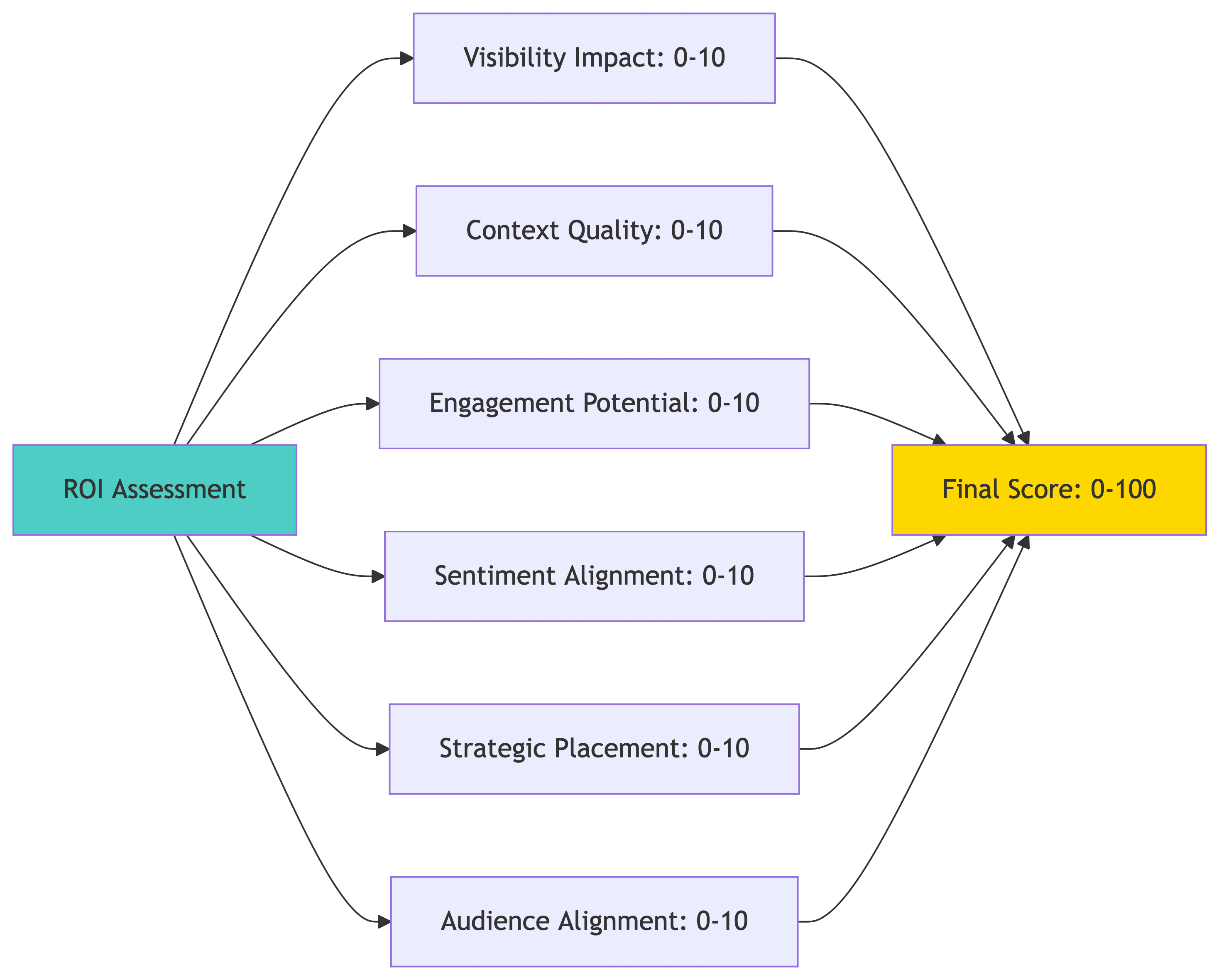

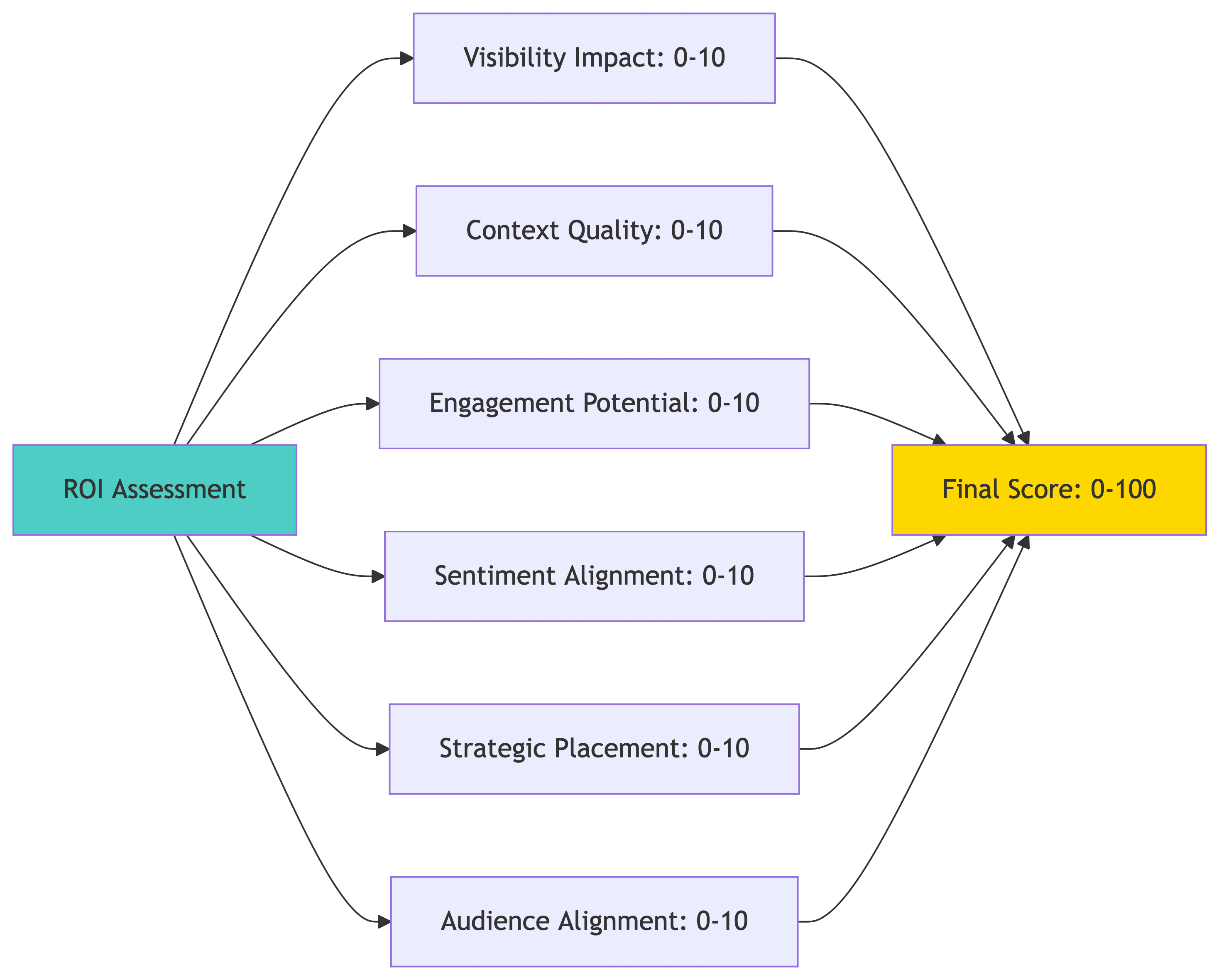

The Five Dimensions of Placement Quality

Through analysis of thousands of sponsorship placements, we identified five factors that determine effectiveness:

Calculating Placement Effectiveness Score

The algorithm weighs multiple factors to produce a 0-100 effectiveness score:

```python
def calculate_placement_effectiveness(brand_data, video_duration):
    """Calculate 0-100 placement effectiveness score"""
    metrics = {
        'optimal_placements': 0,
        'suboptimal_placements': 0,
        'placement_score': 0.0
    }
    high_engagement_keywords = ['goal', 'celebration', 'replay',
                                'highlight', 'scoring', 'win']

    # Analyze each placement
    for appearance in brand_data:
        # High-engagement moment detection: search the context label plus any
        # free-text description (the prompt does not guarantee a description)
        moment_text = (f"{appearance.get('context', '')} "
                       f"{appearance.get('description', '')}").lower()
        is_optimal = any(keyword in moment_text
                         for keyword in high_engagement_keywords)

        # Primary visibility check
        is_prominent = appearance.get('prominence') == 'primary'

        # Positive sentiment check
        is_positive = appearance.get('sentiment_context') == 'positive'

        # High attention check
        is_high_attention = appearance.get('viewer_attention') == 'high'

        # Calculate quality score (0-100); each factor counts equally
        quality_factors = [is_optimal, is_prominent, is_positive, is_high_attention]
        quality_score = (sum(quality_factors) / len(quality_factors)) * 100

        # 75+ means at least three of the four factors were satisfied
        if quality_score >= 75:
            metrics['optimal_placements'] += 1
        else:
            metrics['suboptimal_placements'] += 1

    # Overall placement effectiveness (0-100)
    total_placements = metrics['optimal_placements'] + metrics['suboptimal_placements']
    if total_placements > 0:
        metrics['placement_score'] = (metrics['optimal_placements'] / total_placements) * 100

    return metrics
```
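The function above counts every factor equally. If some factors should matter more than others, a weighted variant is a small change. The sketch below is one possible shape; the weights and the set of high-value contexts are hypothetical values chosen for illustration, not tuned numbers from our system.

```python
# Hypothetical weights in percentage points; they sum to 100
WEIGHTS = {"context": 40, "prominence": 30, "sentiment": 15, "attention": 15}

# Context labels treated as high-value moments (illustrative choice)
HIGH_VALUE_CONTEXTS = {"celebration", "replay", "goal", "highlight"}

def weighted_quality_score(appearance, weights=WEIGHTS):
    """Return a 0-100 score where each satisfied factor adds its weight."""
    checks = {
        "context": appearance.get("context") in HIGH_VALUE_CONTEXTS,
        "prominence": appearance.get("prominence") == "primary",
        "sentiment": appearance.get("sentiment_context") == "positive",
        "attention": appearance.get("viewer_attention") == "high",
    }
    return sum(weights[name] for name, passed in checks.items() if passed)
```

Integer weights keep the arithmetic exact and make it obvious at a glance that the maximum score is 100.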

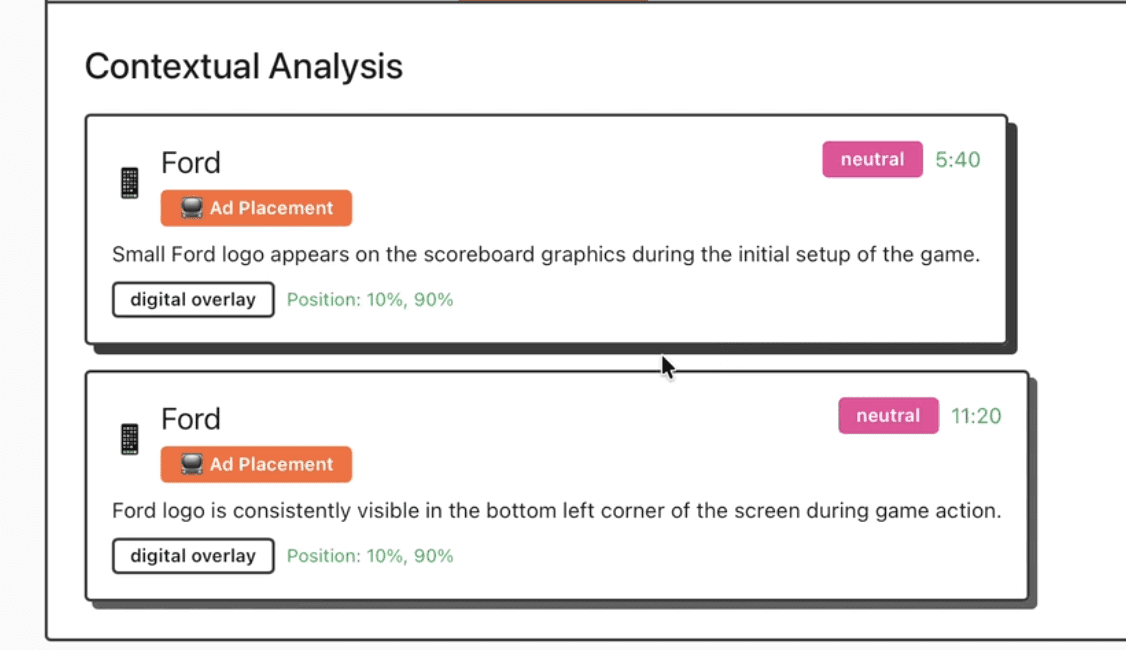

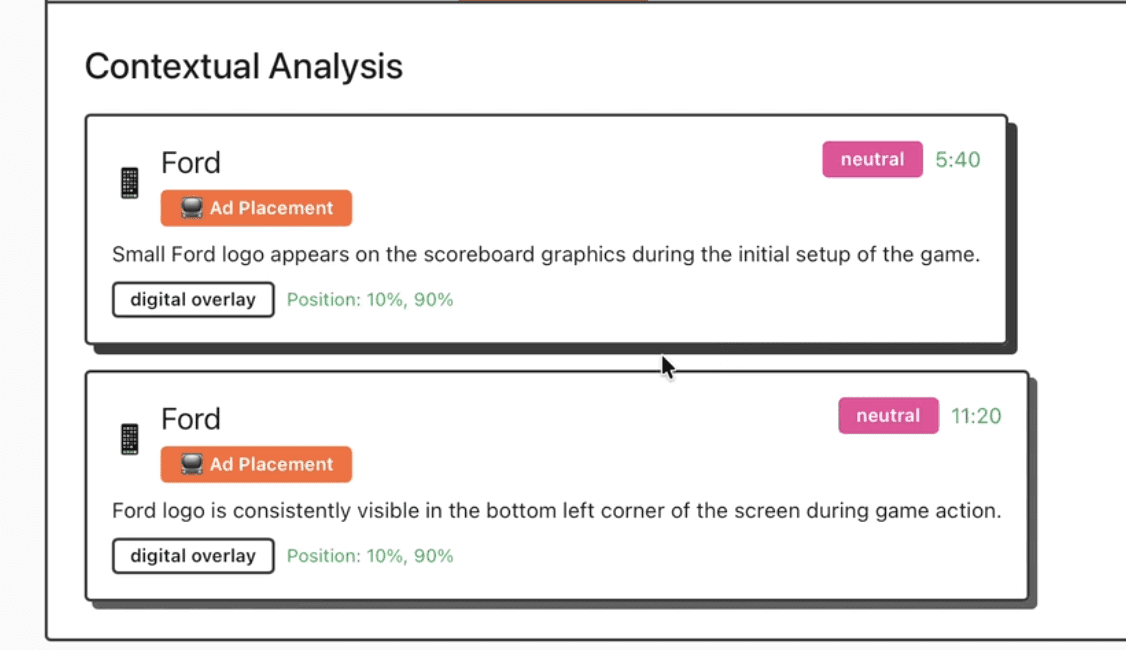

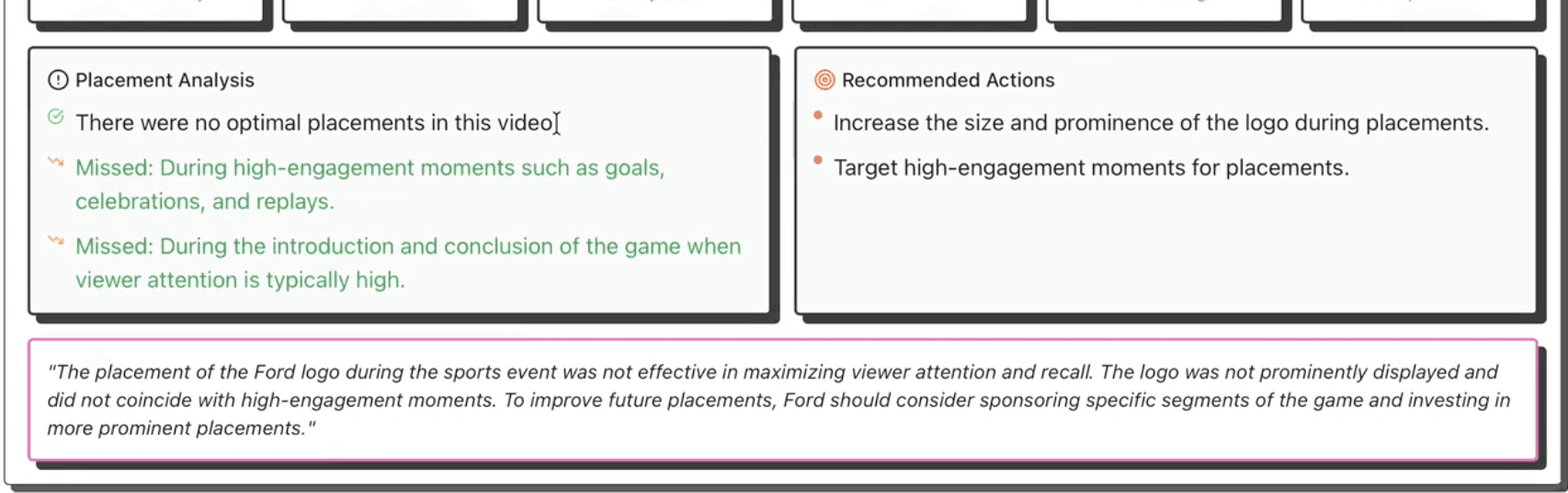

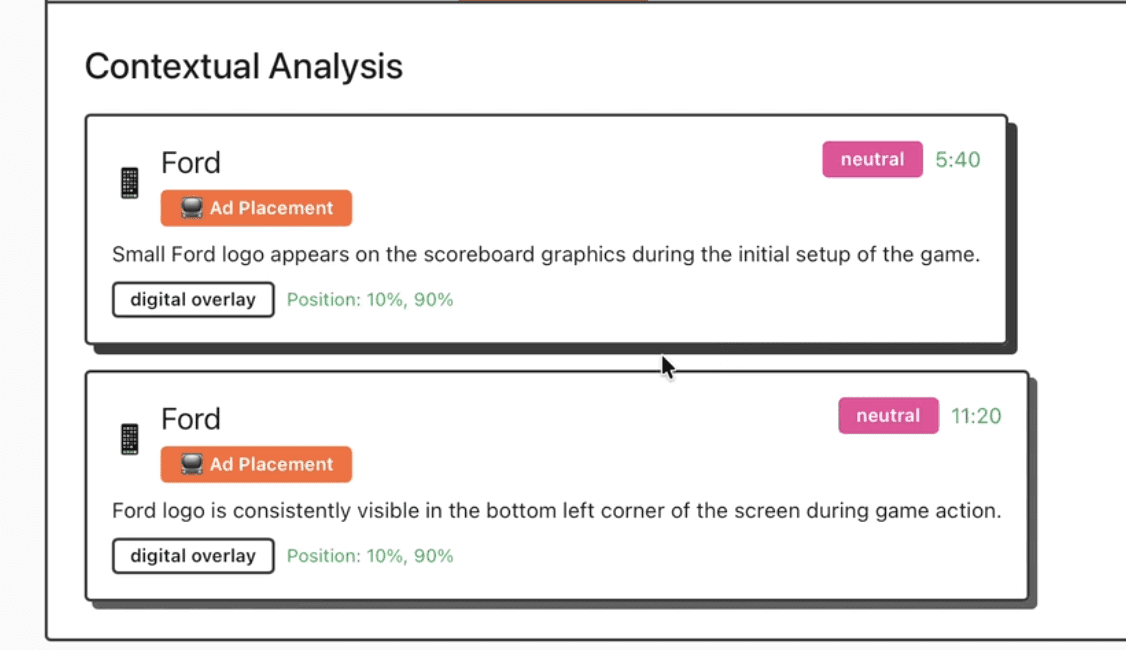

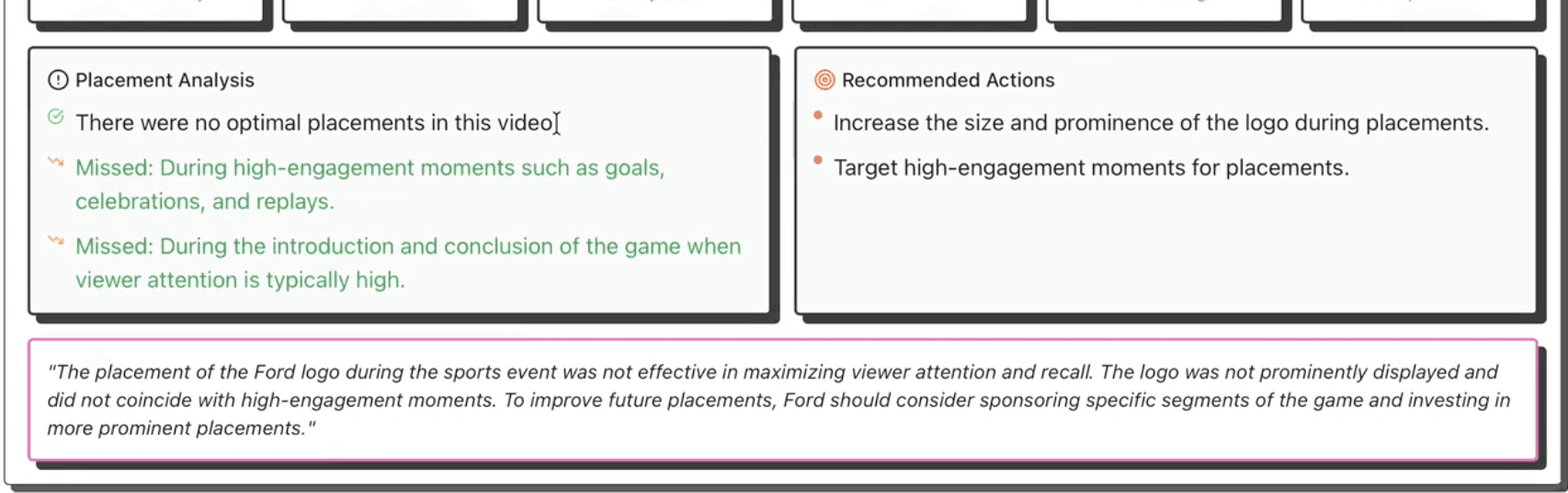

Missed Opportunity Detection

The system identifies high-value moments where brands could have appeared but didn't:

```python
import json

# Detect high-engagement moments in video
moments_result = client.analyze(
    video_id=video_id,
    prompt="""
    List ALL high-engagement moments with timestamps:
    - Goals/scores
    - Celebrations
    - Replays of key plays
    - Championship moments
    - Emotional peaks

    Return: [{"timestamp": 45.2, "description": "Game-winning goal"}]
    """
)

# The response is JSON text, so parse it before iterating
high_engagement_moments = json.loads(moments_result.data)

# Compare against brand appearances
missed_opportunities = []
for moment in high_engagement_moments:
    # Check if brand was visible during this moment
    brand_visible = any(
        appearance['timeline'][0] <= moment['timestamp'] <= appearance['timeline'][1]
        for appearance in brand_appearances
    )

    if not brand_visible:
        missed_opportunities.append({
            'timestamp': moment['timestamp'],
            'description': moment['description'],
            'potential_value': 'HIGH'
        })
```

Real example: A Nike-sponsored team scores a championship-winning goal at 87:34. The camera shows crowd reactions (87:34-87:50) where Nike signage is visible, then a close-up replay (87:51-88:05) where Nike jerseys are not in frame.

Result: Missed opportunity flagged—"Consider requesting replay angles that keep sponsored jerseys visible."
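The example above involves moments that span a window of time, not a single timestamp. A window-based check can be sketched as follows, using the clock times from the example; the assumed signage interval (87:30 to 87:50) and the helper names are ours, added only to make the sketch runnable.

```python
def to_seconds(clock):
    """Convert 'MM:SS' match-clock text to seconds."""
    minutes, seconds = clock.split(":")
    return int(minutes) * 60 + int(seconds)

def overlaps(window, timeline):
    """True if two [start, end] intervals share any time."""
    return window[0] <= timeline[1] and timeline[0] <= window[1]

def uncovered_windows(moment_windows, appearances):
    """Return the moments during which no brand appearance was on screen."""
    return [
        m for m in moment_windows
        if not any(overlaps(m["window"], a["timeline"]) for a in appearances)
    ]

moments = [
    {"description": "crowd reaction", "window": [to_seconds("87:34"), to_seconds("87:50")]},
    {"description": "close-up replay", "window": [to_seconds("87:51"), to_seconds("88:05")]},
]
# Assumed interval for the visible Nike signage (illustrative)
appearances = [{"brand": "Nike", "timeline": [to_seconds("87:30"), to_seconds("87:50")]}]

missed = uncovered_windows(moments, appearances)
```

With these inputs only the close-up replay is left uncovered, which matches the missed opportunity flagged above.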

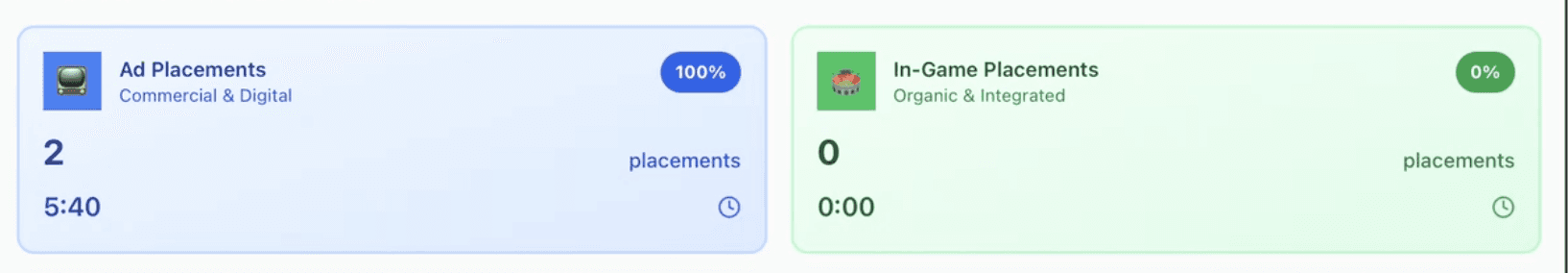

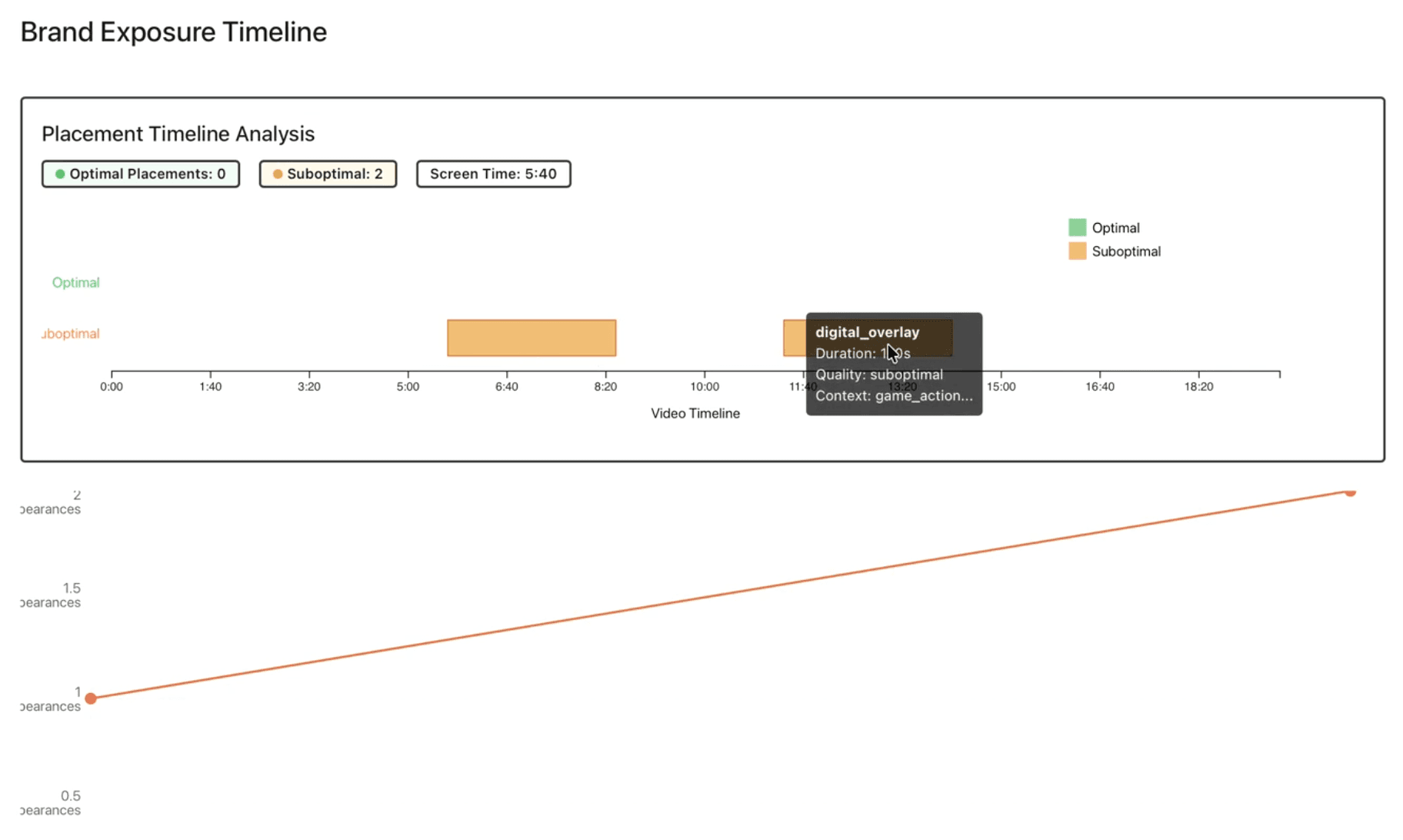

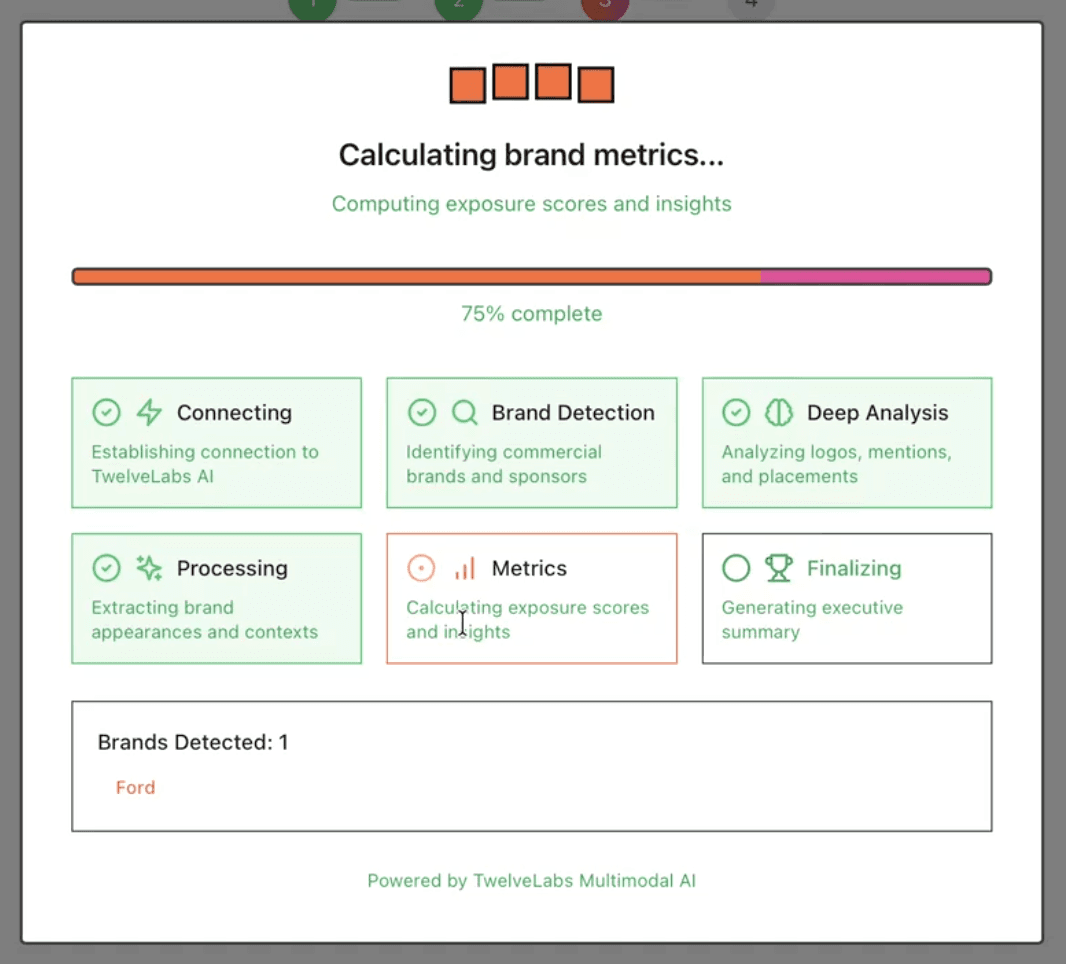

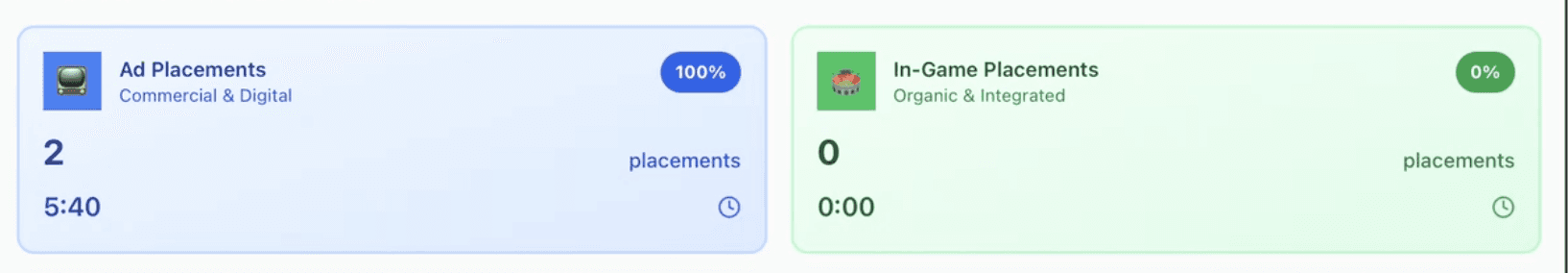

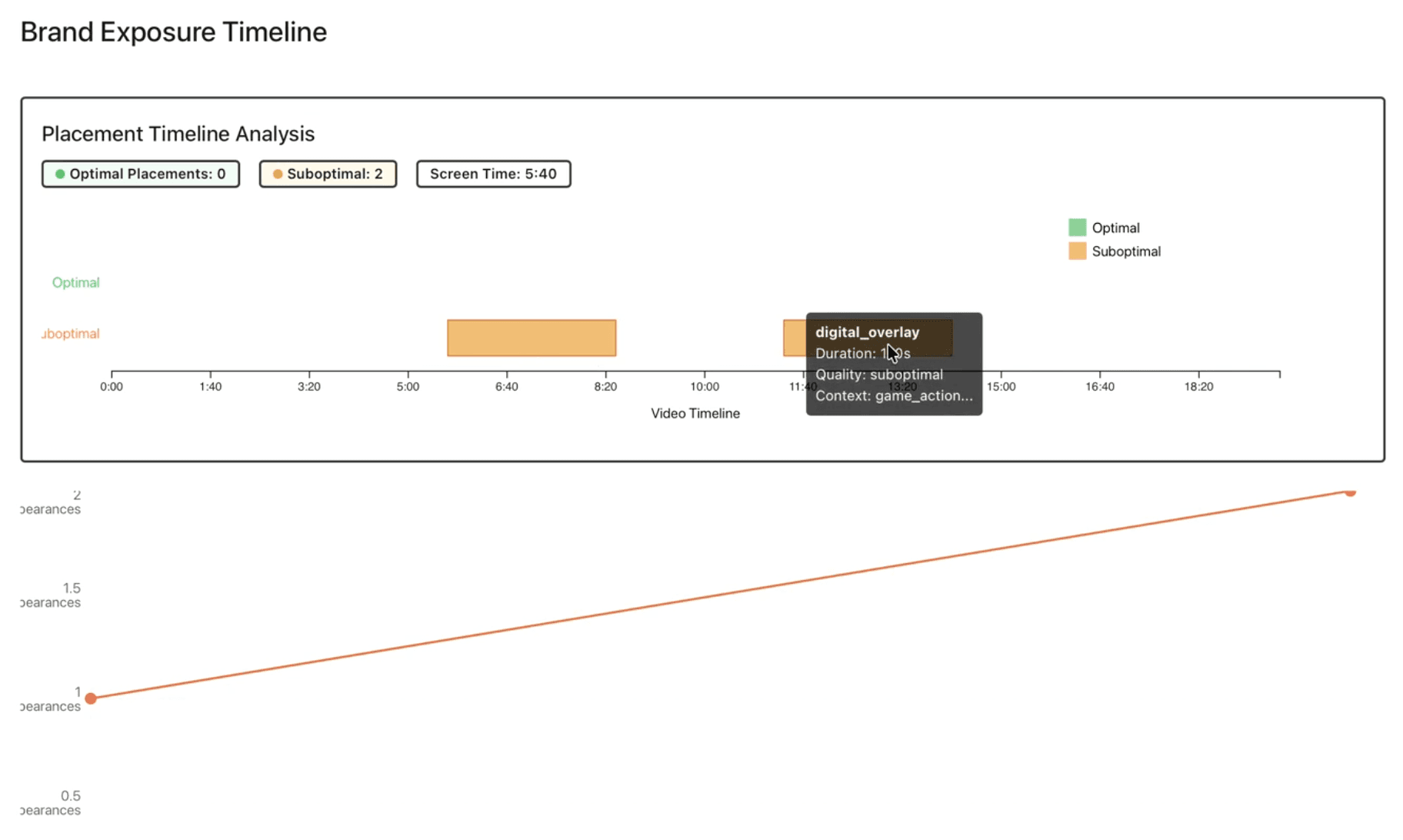

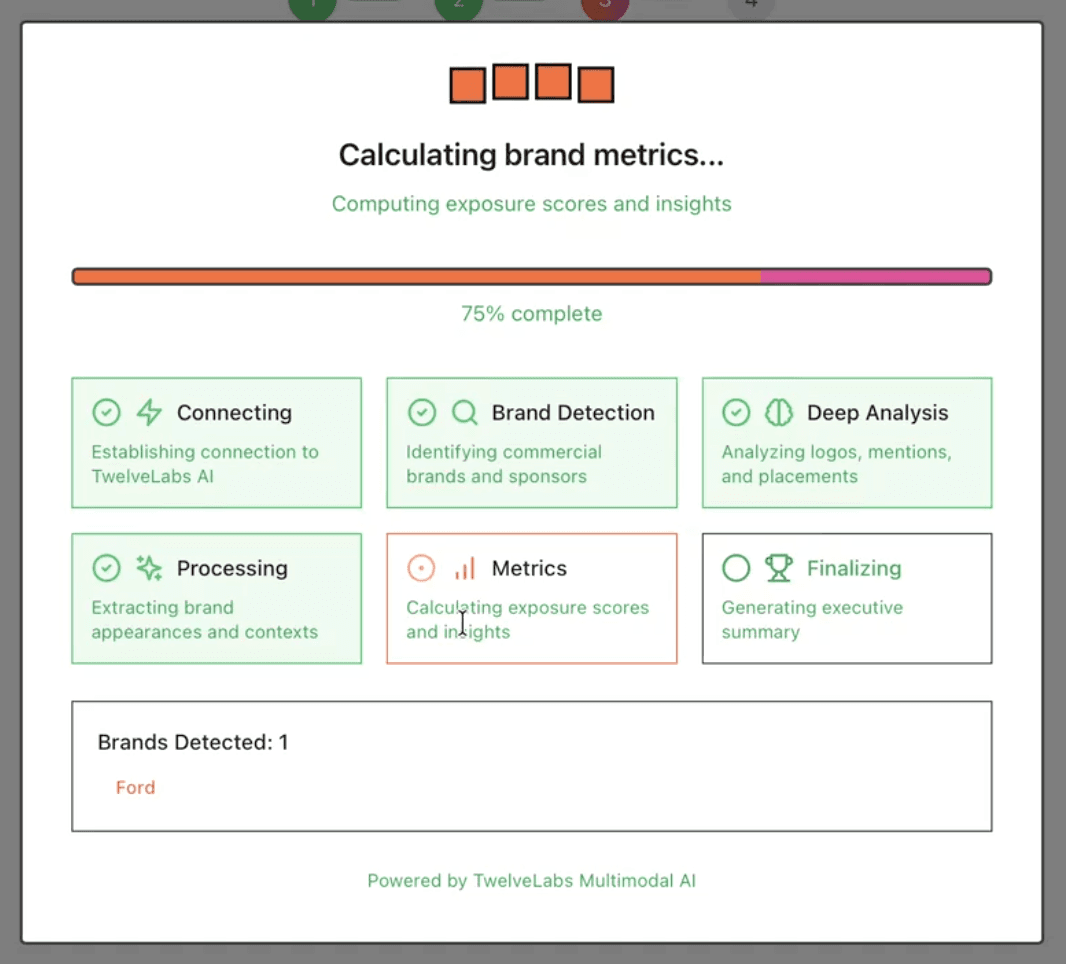

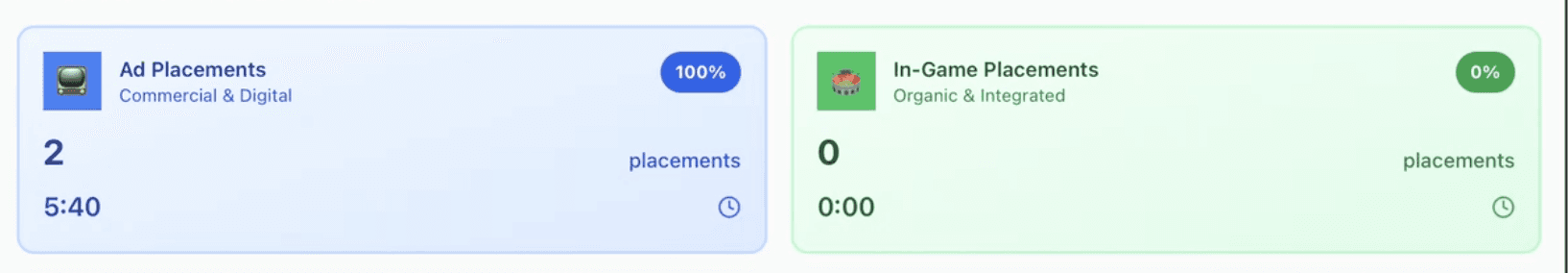

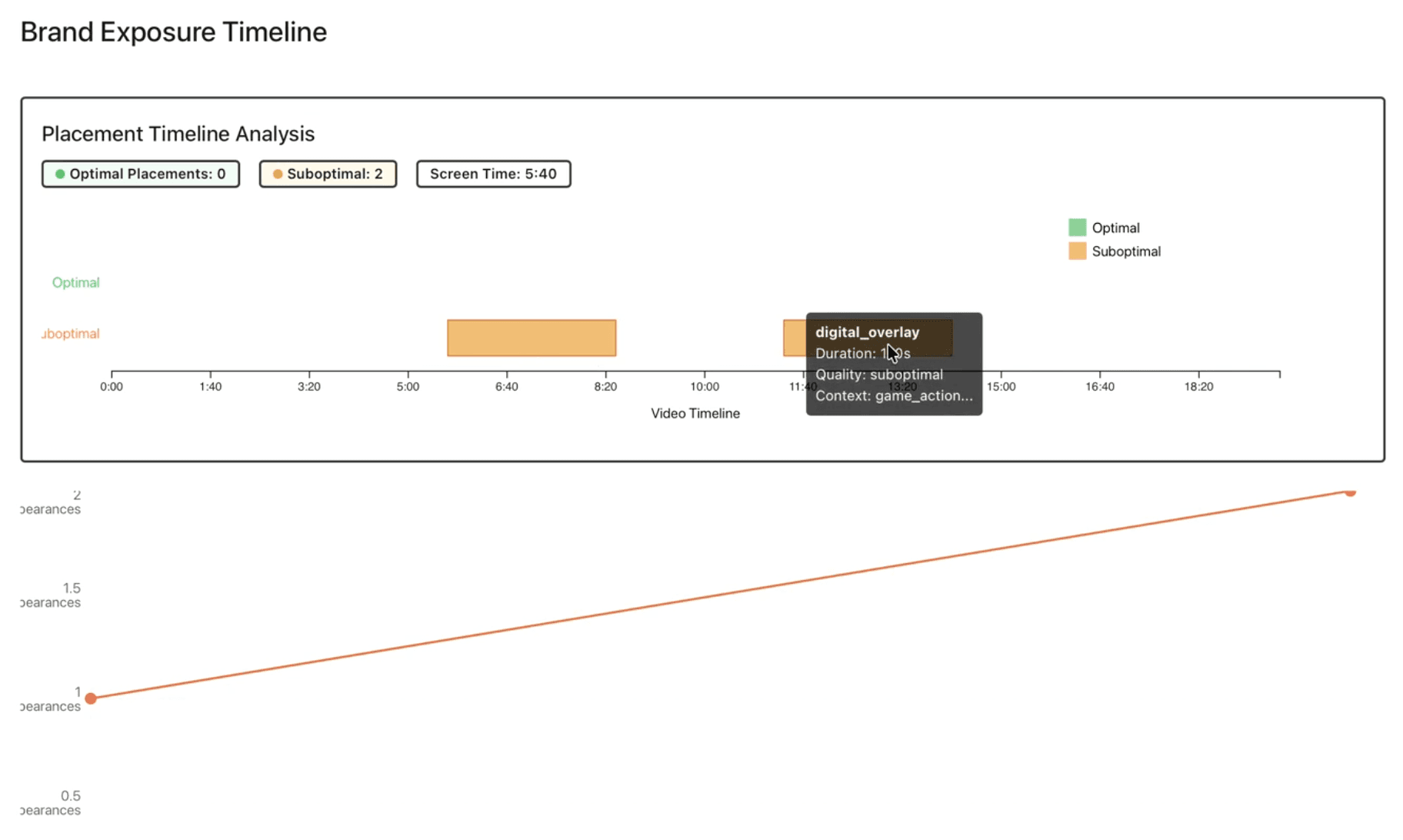

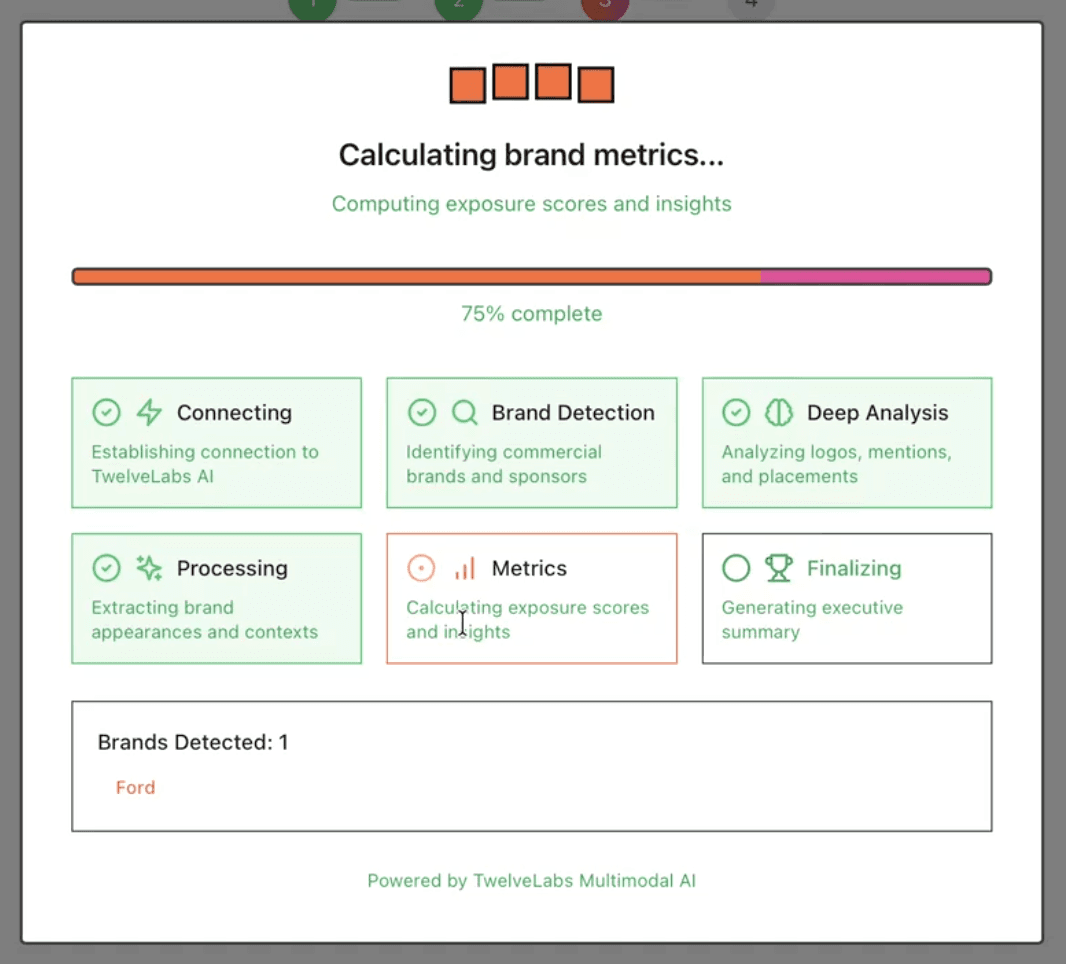

The screenshots below show how this looks in our UI for the built-in Ford example.

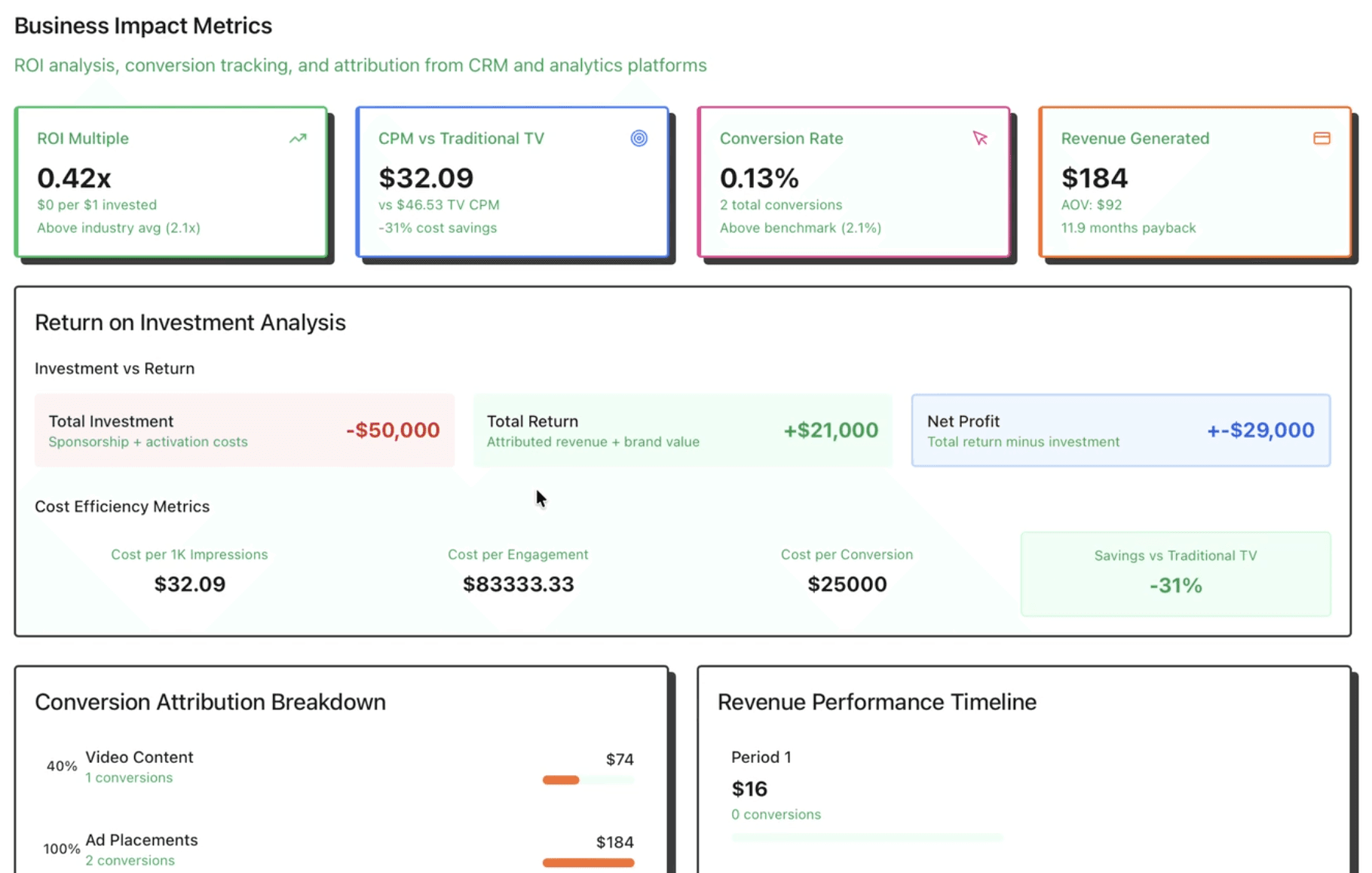

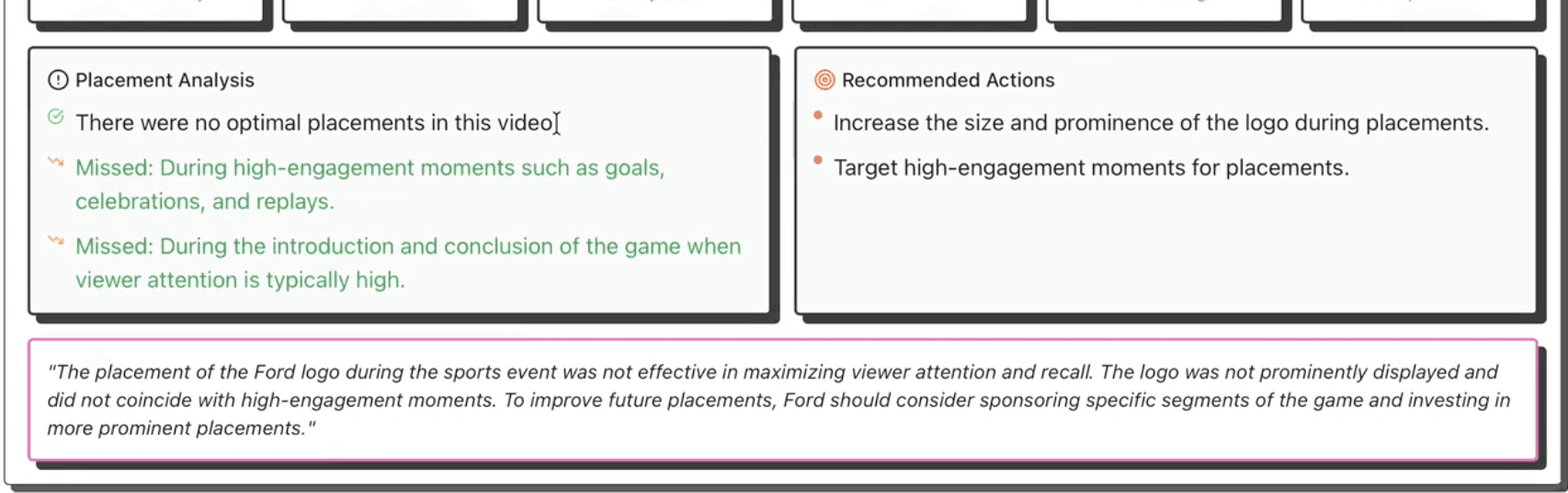

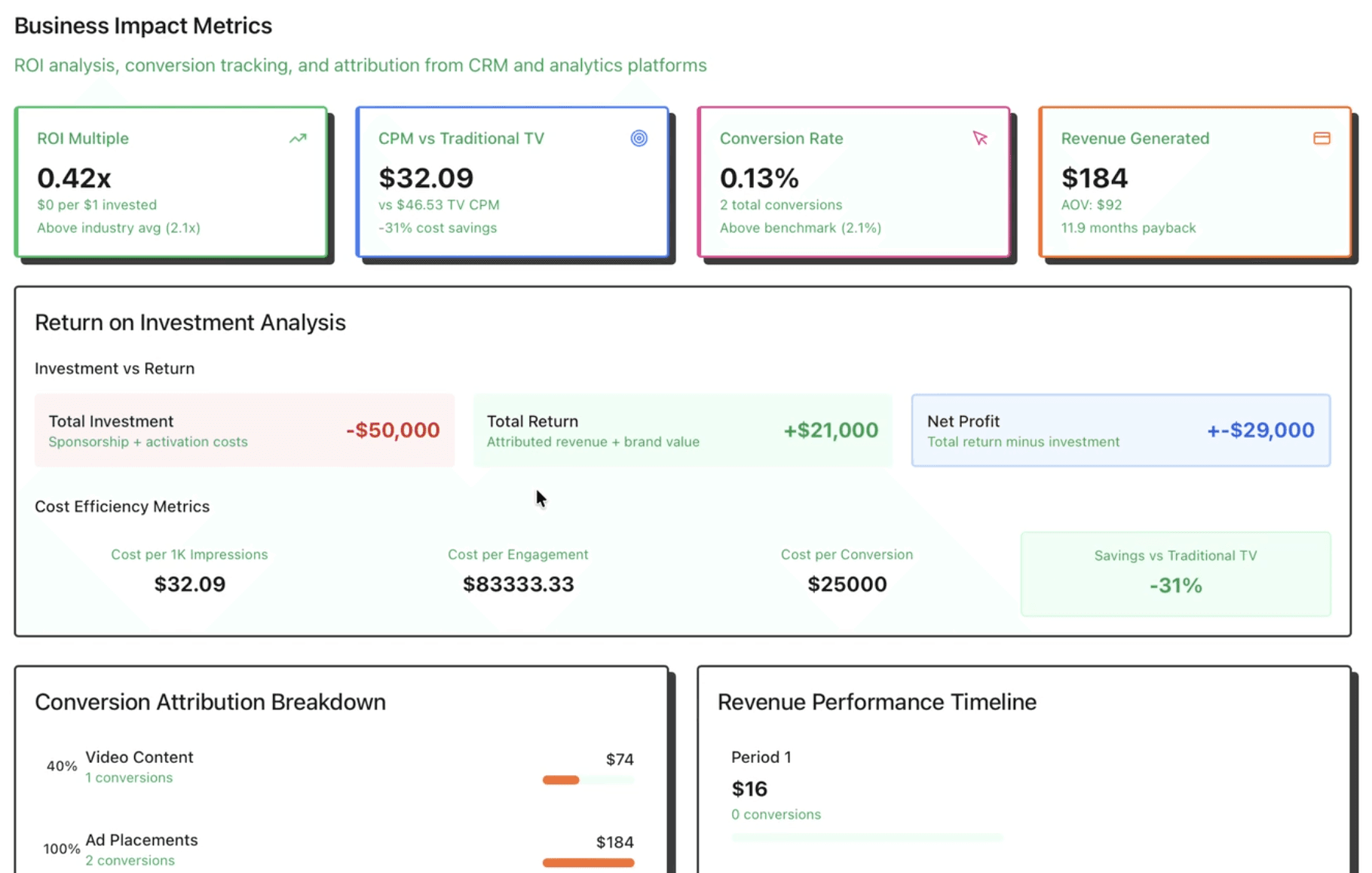

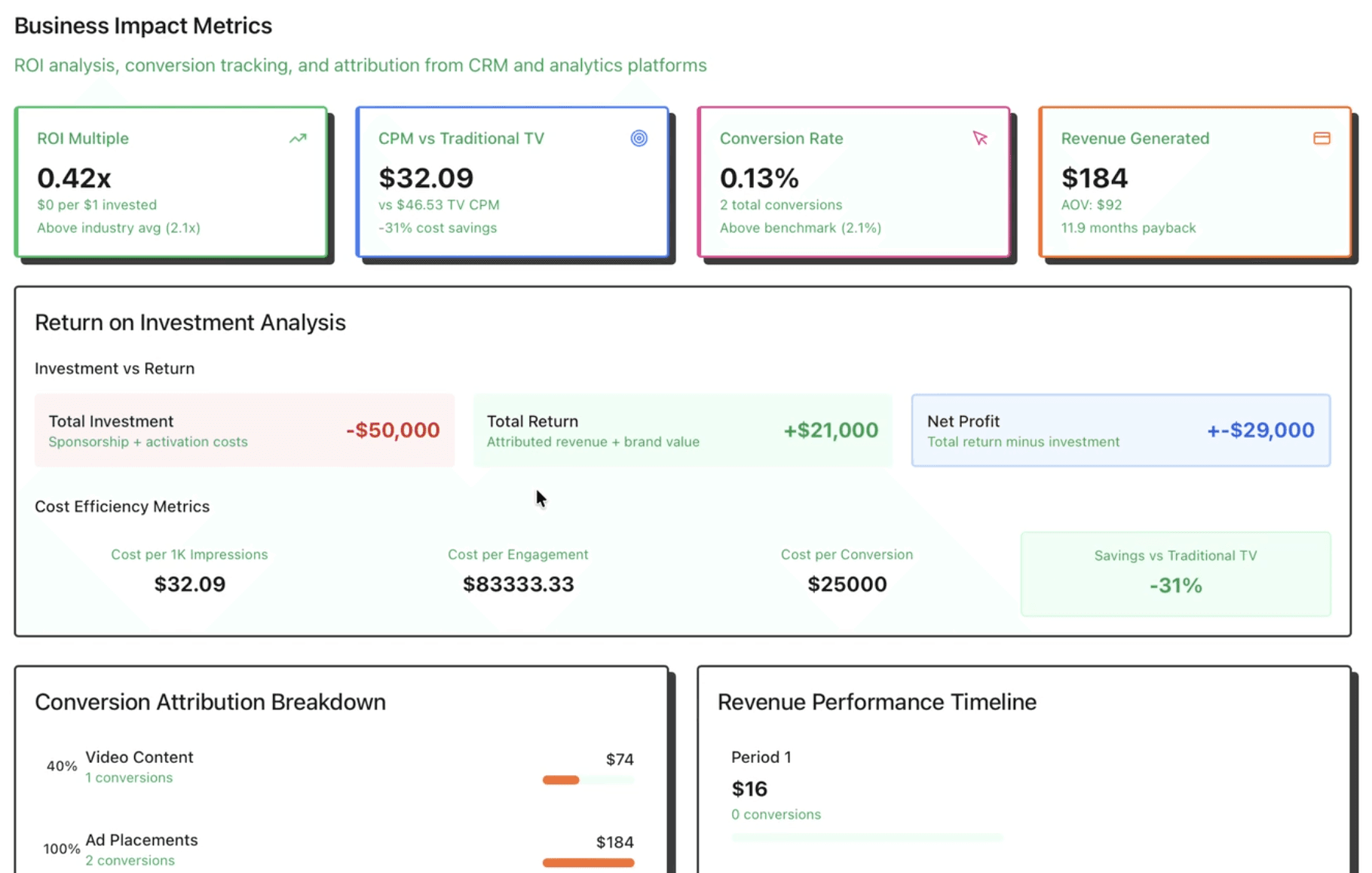

Stage 4: AI-Powered ROI Assessment

Beyond Simple Metrics: Strategic Intelligence

This is where OpenAI GPT-4 augments the quantitative data with strategic insights:

```python
import json
from openai import OpenAI

# Reads OPENAI_API_KEY from the environment
openai_client = OpenAI()

def generate_ai_roi_insights(brand_name, appearances, placement_metrics, brand_intelligence):
    """Generate comprehensive ROI insights using GPT-4"""

    prompt = f"""
    You are a sponsorship ROI analyst. Analyze this brand's performance:

    BRAND: {brand_name}
    APPEARANCES: {len(appearances)}
    PLACEMENT EFFECTIVENESS: {placement_metrics['placement_score']}/100
    OPTIMAL PLACEMENTS: {placement_metrics['optimal_placements']}
    SUBOPTIMAL PLACEMENTS: {placement_metrics['suboptimal_placements']}
    MARKET CONTEXT: {brand_intelligence.get('industry', 'sports')}

    Provide analysis in this JSON structure:
    {{
        "placement_effectiveness_score": 0-100,
        "roi_assessment": {{
            "value_rating": "excellent|good|fair|poor",
            "cost_efficiency": 0-10,
            "exposure_quality": 0-10,
            "audience_reach": 0-10
        }},
        "recommendations": {{
            "immediate_actions": ["specific action 1", "action 2"],
            "future_strategy": ["strategic recommendation"],
            "optimal_moments": ["when to appear"],
            "avoid_these": ["what to avoid"]
        }},
        "executive_summary": "2-3 sentence strategic overview"
    }}
    """

    response = openai_client.chat.completions.create(
        model="gpt-4",
        messages=[
            {"role": "system", "content": "You are a data-driven sponsorship analyst."},
            {"role": "user", "content": prompt}
        ],
        temperature=0.3,
        max_tokens=1200
    )

    return json.loads(response.choices[0].message.content)
```

Six Strategic Scoring Factors

The AI evaluates sponsorships across six dimensions:

Example Output:

```json
{
  "placement_effectiveness_score": 78,
  "roi_assessment": {
    "value_rating": "good",
    "cost_efficiency": 7.5,
    "exposure_quality": 8.2,
    "audience_reach": 7.8
  },
  "recommendations": {
    "immediate_actions": [
      "Negotiate for jersey-visible replay angles in broadcast agreements",
      "Increase presence during halftime highlights when replay frequency peaks"
    ],
    "future_strategy": [
      "Focus budget on in-game signage near goal areas (highest replay frequency)",
      "Reduce commercial spend, increase organic integration budget by 30%"
    ],
    "optimal_moments": [
      "Goal celebrations (current coverage: 65%, target: 90%)",
      "Championship trophy presentations"
    ],
    "avoid_these": [
      "Timeout commercial slots (low engagement)",
      "Pre-game sponsorship announcements (viewership not peaked)"
    ]
  },
  "executive_summary": "Strong organic integration with 78% placement effectiveness. Nike achieved high visibility during 12 of 15 key moments, but missed 3 championship replays. Recommend shifting $150K from commercial slots to enhanced jersey/equipment presence for 2025."
}
```
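Because this report comes from a language model, it is worth checking that the values fall inside the ranges the prompt asked for before showing them to a client. A minimal sketch of such a check, assuming the JSON structure above; `validate_roi_report` is a hypothetical helper name.

```python
VALID_RATINGS = {"excellent", "good", "fair", "poor"}

def validate_roi_report(report):
    """Return a list of problems found; an empty list means the report passed."""
    problems = []

    score = report.get("placement_effectiveness_score")
    if not isinstance(score, (int, float)) or not 0 <= score <= 100:
        problems.append("placement_effectiveness_score must be 0-100")

    roi = report.get("roi_assessment")
    if not isinstance(roi, dict):
        roi = {}
    if roi.get("value_rating") not in VALID_RATINGS:
        problems.append("value_rating must be one of " + ", ".join(sorted(VALID_RATINGS)))
    for key in ("cost_efficiency", "exposure_quality", "audience_reach"):
        value = roi.get(key)
        if not isinstance(value, (int, float)) or not 0 <= value <= 10:
            problems.append(f"{key} must be 0-10")

    if not isinstance(report.get("executive_summary"), str):
        problems.append("executive_summary must be a string")

    return problems
```

Returning a list of problems instead of raising on the first one makes it easier to feed all issues back into a retry prompt in one go.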

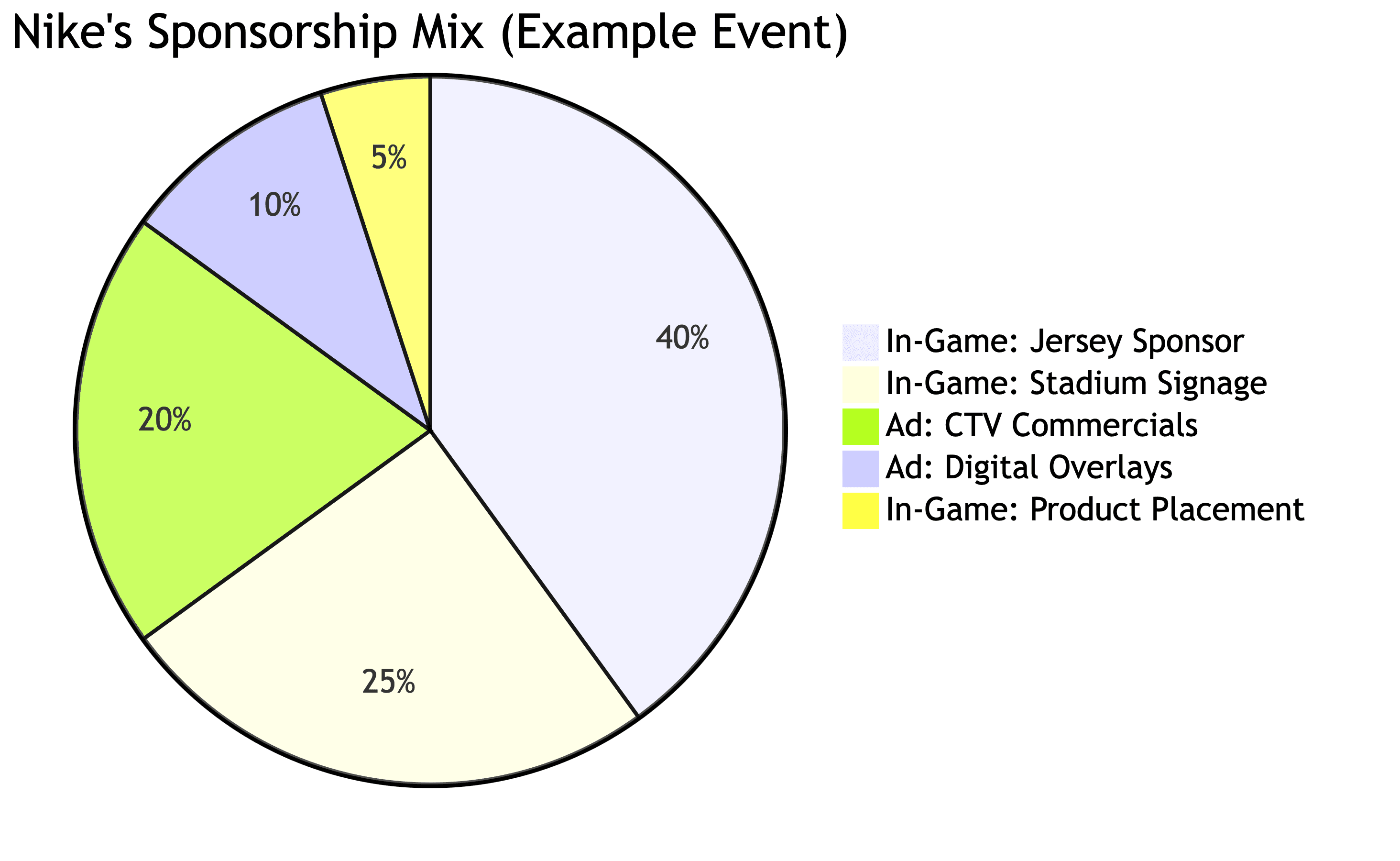

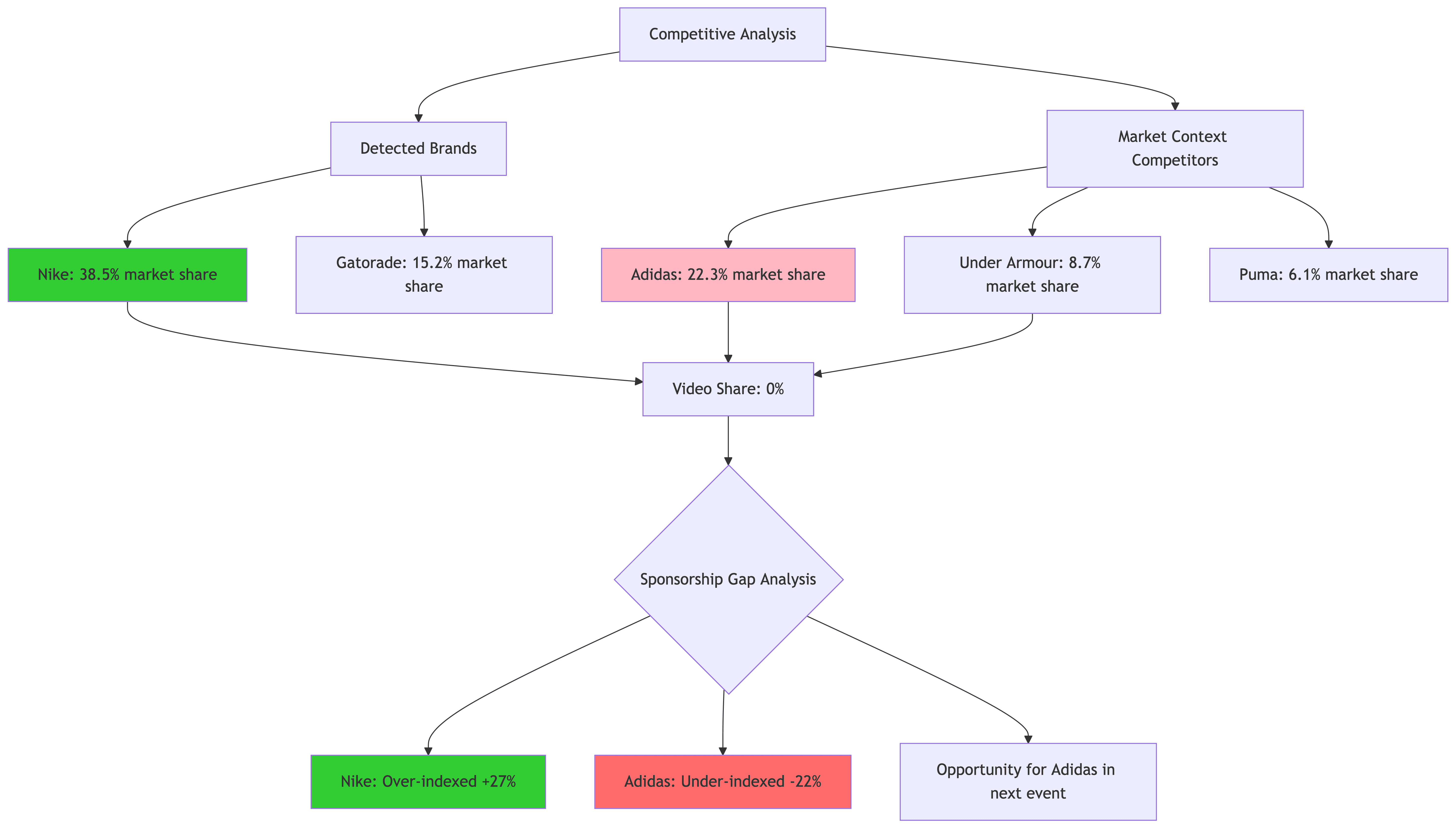

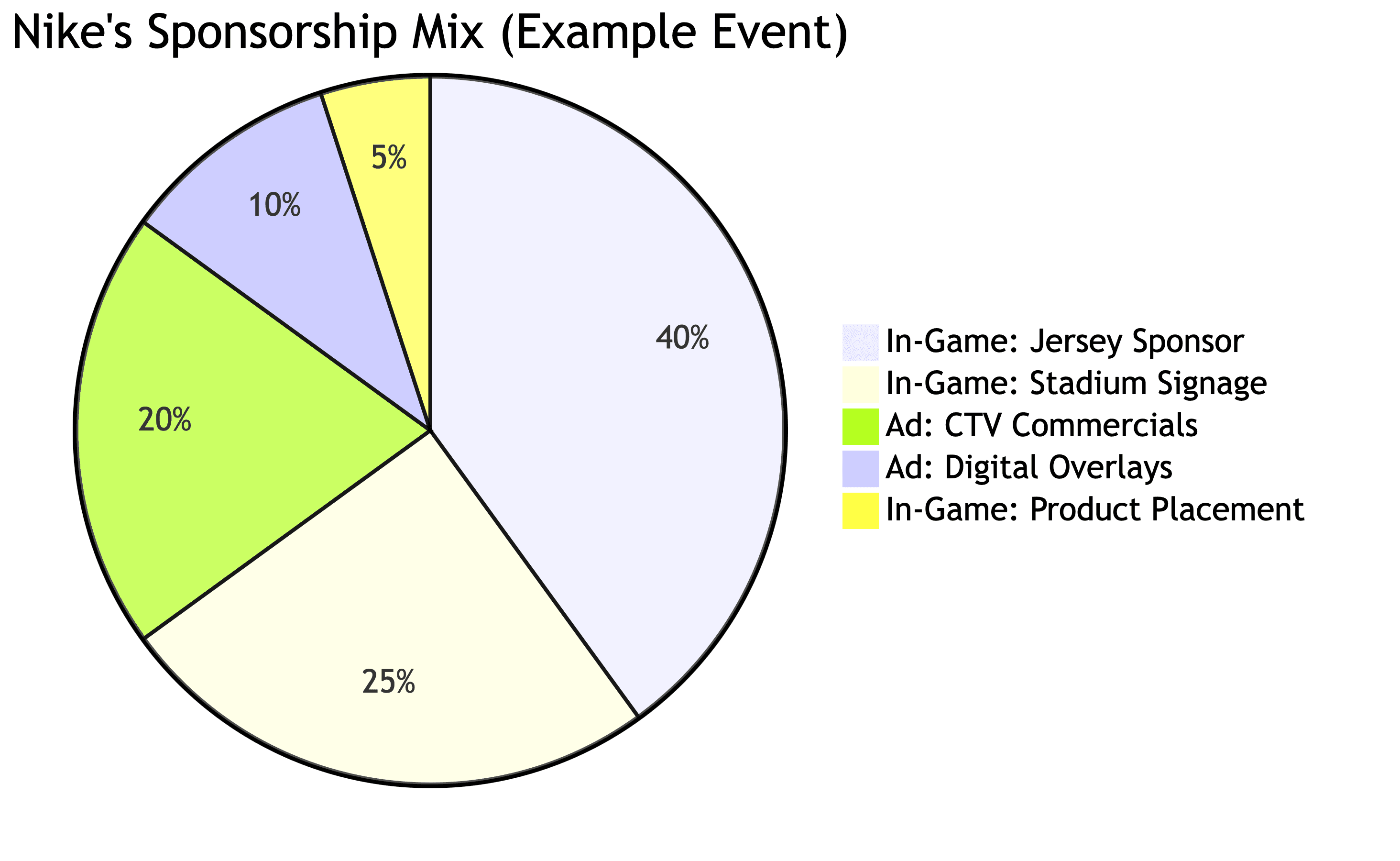

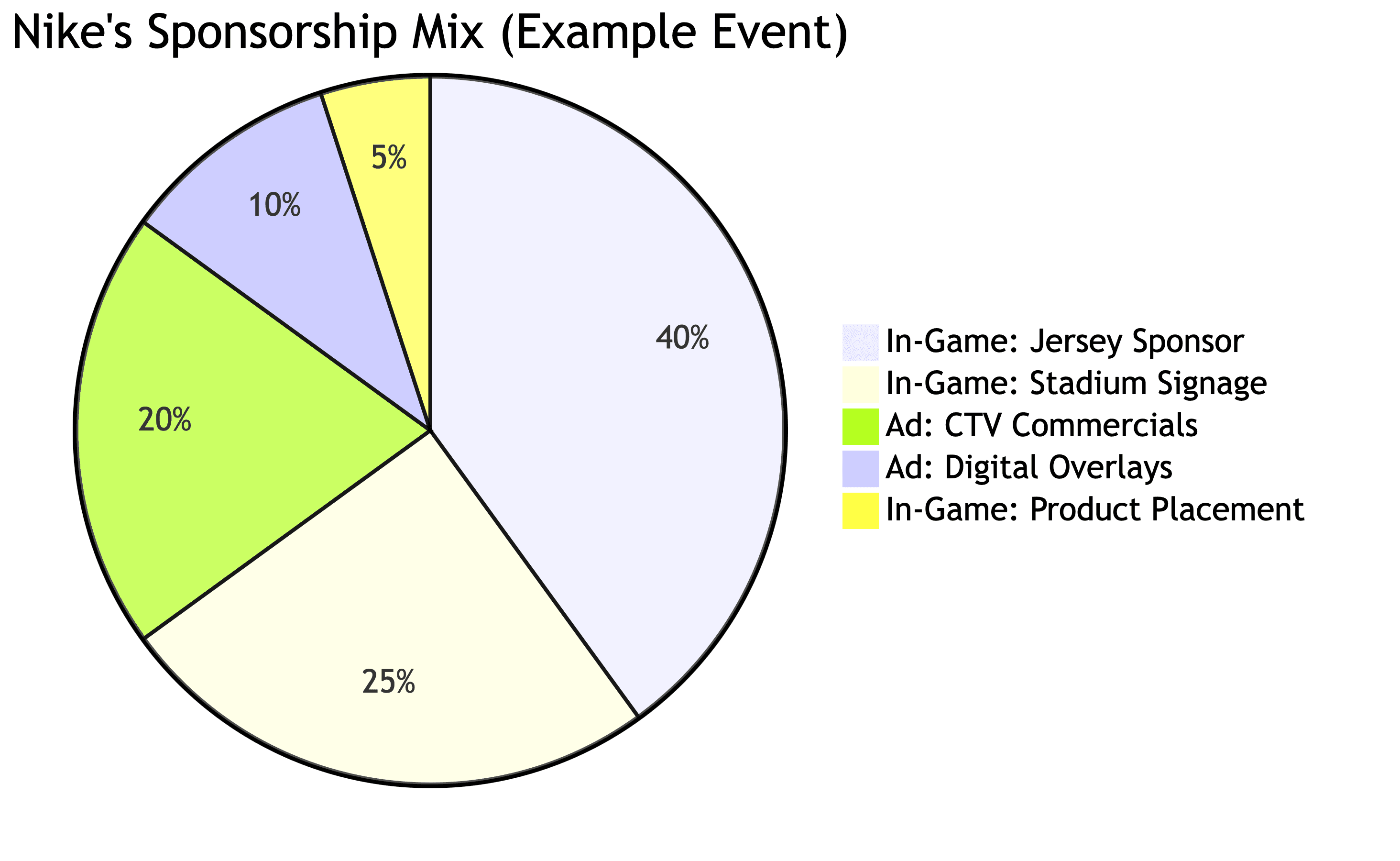

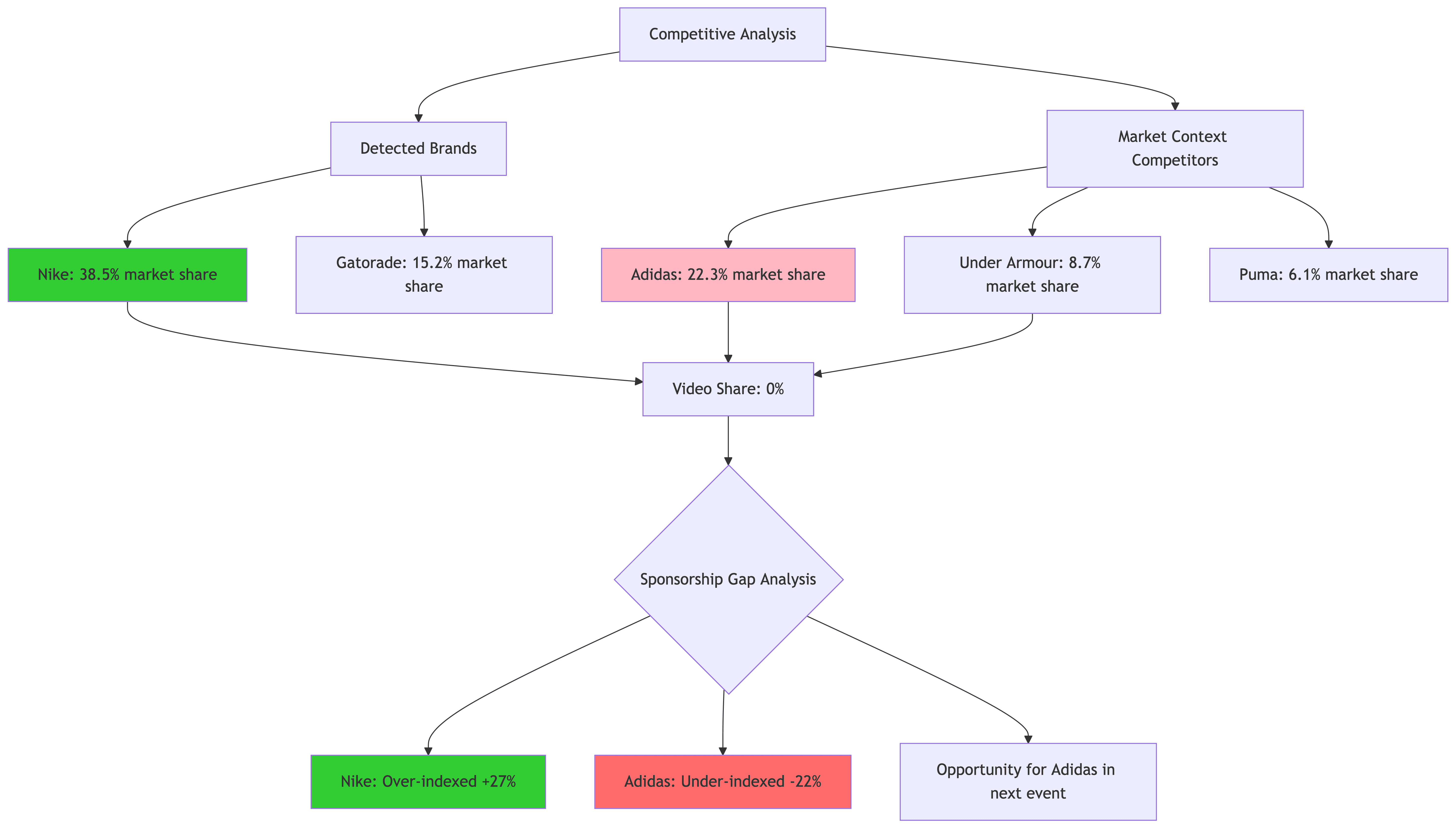

Stage 5: Competitive Intelligence

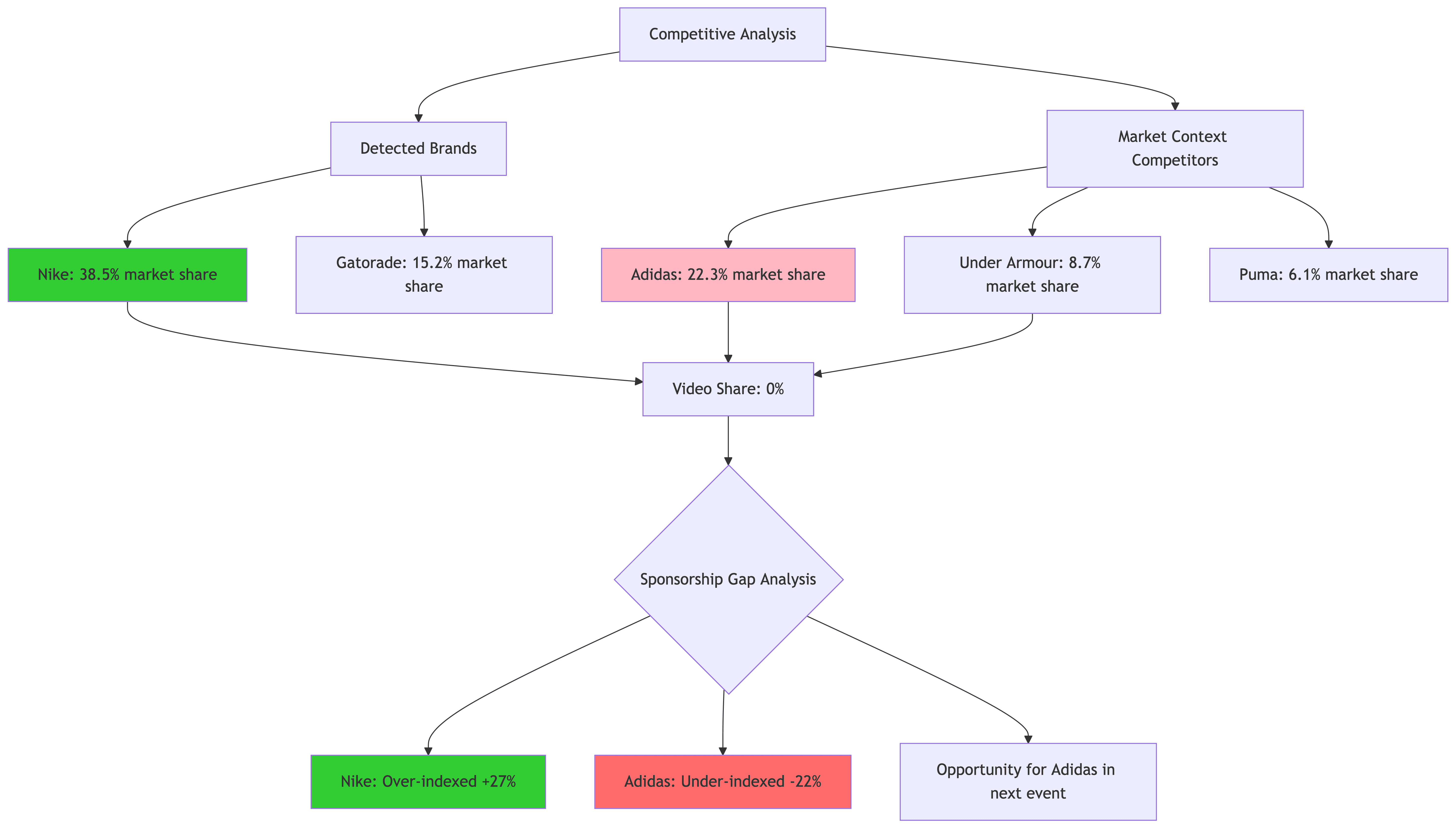

Market Share of Visual Airtime

One of the most requested features: "How did we compare to competitors?"

```python
def generate_competitive_analysis(detected_brands, video_context="sports event"):
    """Generate competitive landscape with market share"""

    # Even if only one brand detected, AI generates full competitive context
    competitive_prompt = f"""
    Analyze the competitive landscape for brands detected in a {video_context}.

    DETECTED BRANDS: {', '.join(detected_brands)}

    REQUIREMENTS:
    1. Include the detected brand(s) with "detected_in_video": true
    2. Add 4-7 major market competitors with "detected_in_video": false
    3. Market shares should reflect actual market position

    EXAMPLE: If Nike detected → include Adidas, Under Armour, Puma, New Balance

    Return:
    {{
        "market_category": "Athletic Footwear & Apparel",
        "total_market_size": "$180B globally",
        "competitors": [
            {{
                "brand": "Nike",
                "market_share": 38.5,
                "prominence": "High",
                "positioning": "Innovation and athlete partnerships",
                "detected_in_video": true
            }},
            {{
                "brand": "Adidas",
                "market_share": 22.3,
                "prominence": "High",
                "positioning": "European heritage, football dominance",
                "detected_in_video": false
            }}
        ]
    }}
    """

    response = openai_client.chat.completions.create(
        model="gpt-4",
        messages=[{"role": "user", "content": competitive_prompt}],
        temperature=0.3
    )

    return json.loads(response.choices[0].message.content)
```

Visualizing Competitive Performance

This reveals sponsorship inefficiencies: Nike has 38.5% market share but captured 65% of video airtime—excellent ROI. Adidas has 22.3% market share but 0% presence—missed opportunity.
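The airtime side of that comparison can be computed directly from the detections. The sketch below shows one way to do it, assuming each detection has `brand` and `timeline` fields; overlapping intervals for the same brand are merged first so that a logo and a jersey visible in the same shot are not counted twice. The function names are illustrative.

```python
def merged_seconds(intervals):
    """Total covered time after merging overlapping (start, end) intervals."""
    total, current_start, current_end = 0.0, None, None
    for start, end in sorted(intervals):
        if current_end is None or start > current_end:
            # Close the previous run before starting a new one
            if current_end is not None:
                total += current_end - current_start
            current_start, current_end = start, end
        else:
            current_end = max(current_end, end)
    if current_end is not None:
        total += current_end - current_start
    return total

def airtime_share(detections):
    """Percent of total detected brand airtime captured by each brand."""
    by_brand = {}
    for d in detections:
        by_brand.setdefault(d["brand"], []).append(tuple(d["timeline"]))
    seconds = {brand: merged_seconds(iv) for brand, iv in by_brand.items()}
    total = sum(seconds.values())
    return {b: (100 * s / total if total else 0.0) for b, s in seconds.items()}
```

Note that the share is relative to total detected brand airtime, so a brand that never appears in the video simply has no entry.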

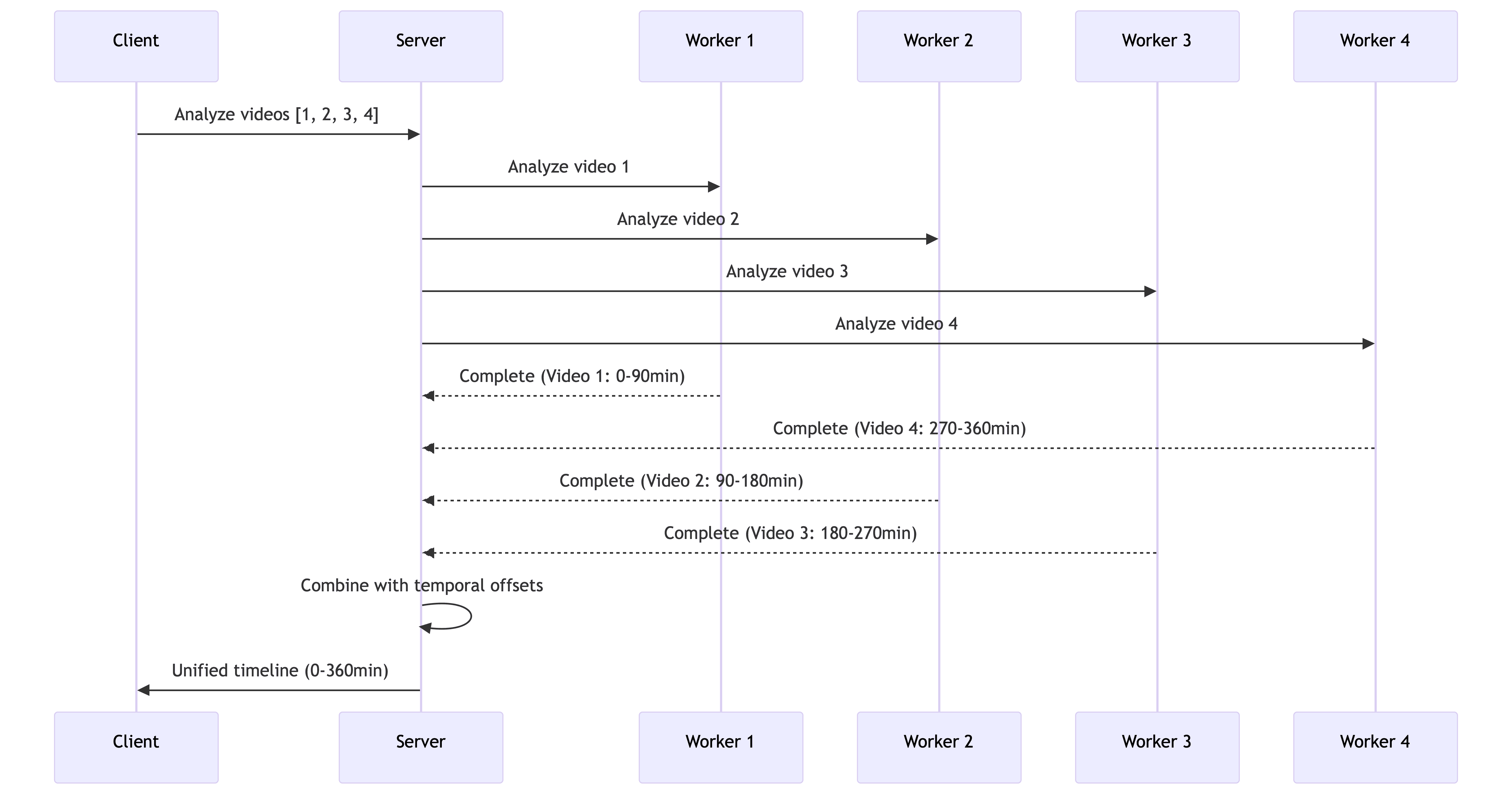

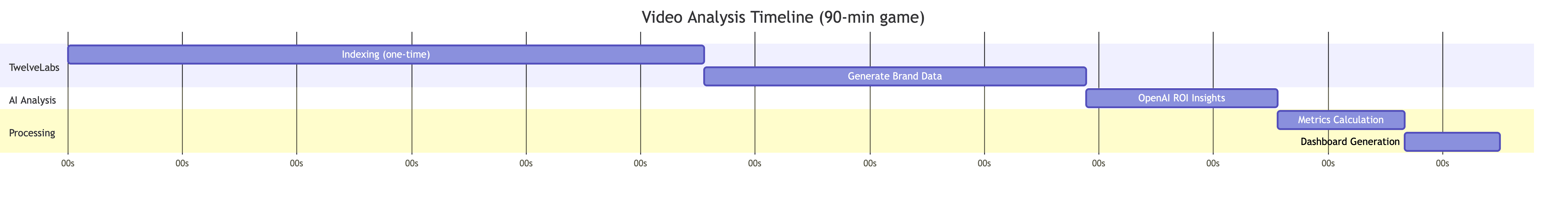

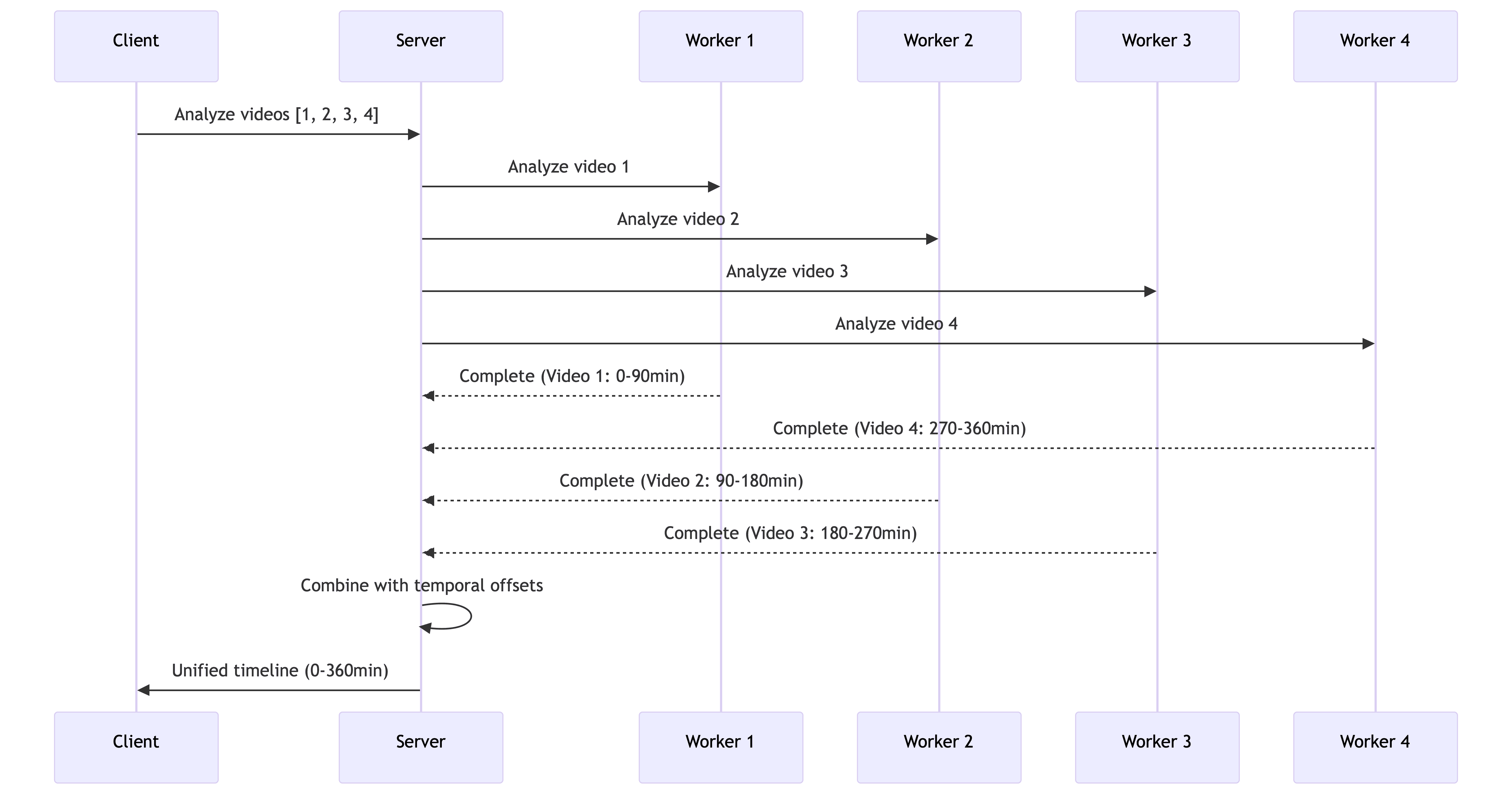

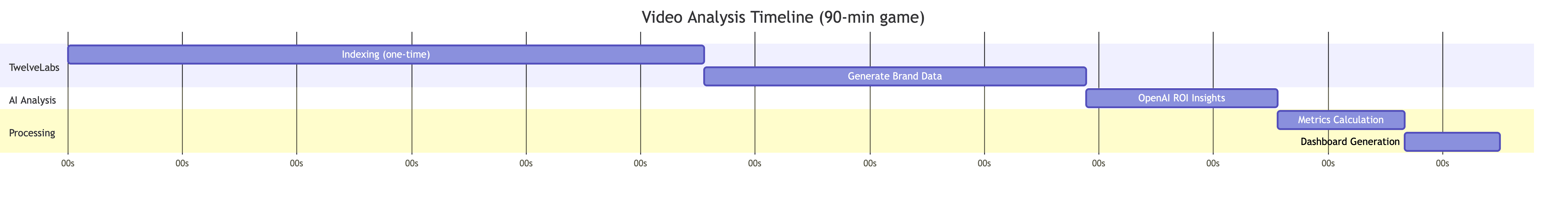

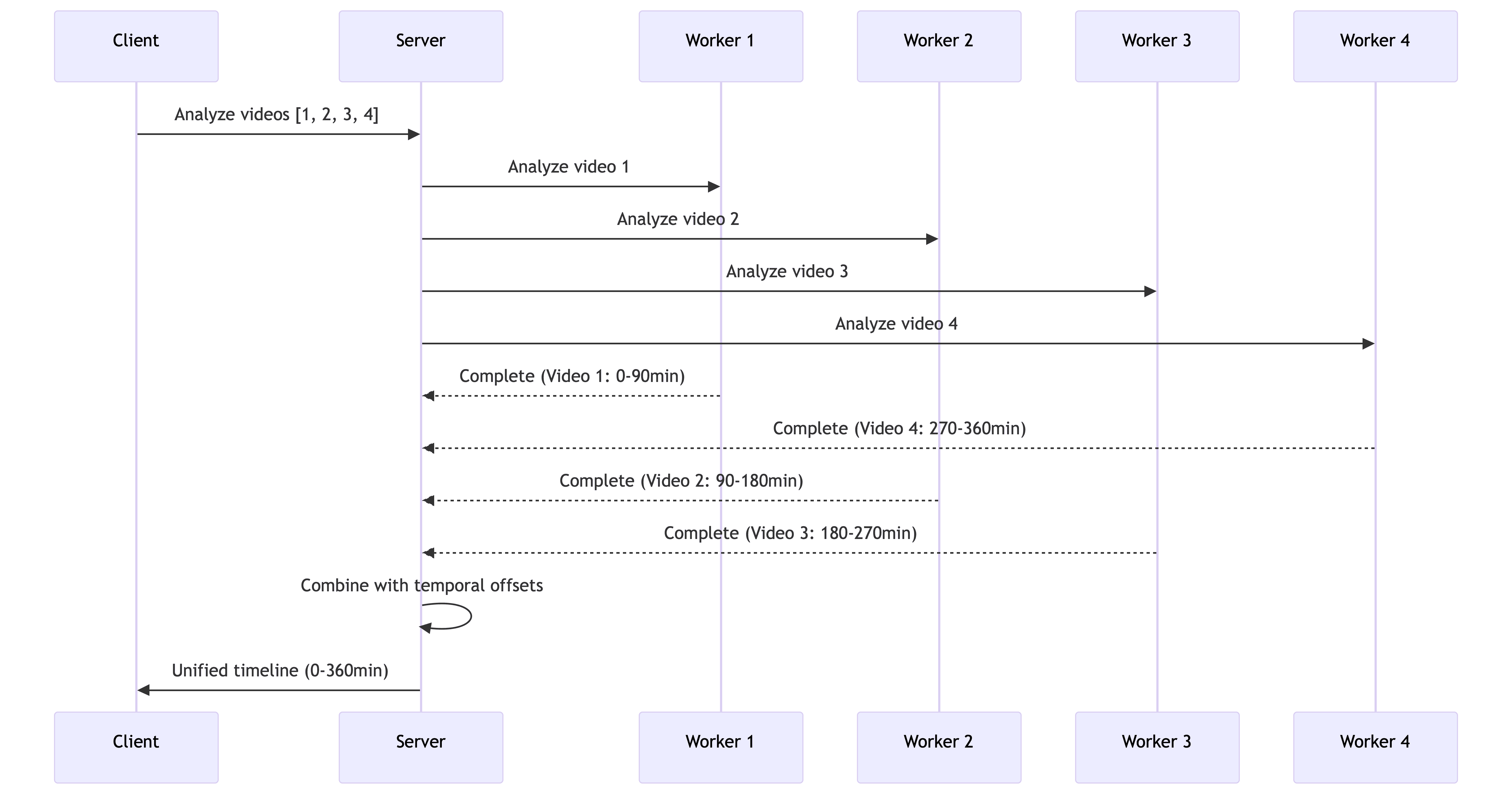

Stage 6: Multi-Video Parallel Processing

The Scalability Challenge

Analyzing a single 90-minute game takes ~30-45 seconds. But what about analyzing an entire tournament (10+ games)?

Sequential processing: 10 games × 45s = 7.5 minutes

Parallel processing: 10 games across 4 workers ≈ 2 minutes
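As a back-of-the-envelope check on those figures, here are two simple models of parallel run time. Both assume every video takes the same 45 seconds and ignore API rate limits, which is a simplification; the function names are ours.

```python
import math

def sequential_seconds(n_videos, seconds_per_video):
    """All videos processed one after another."""
    return n_videos * seconds_per_video

def parallel_seconds_waves(n_videos, seconds_per_video, workers):
    """Videos run in waves of `workers` at a time; the last wave may be partial."""
    return math.ceil(n_videos / workers) * seconds_per_video

def parallel_seconds_ideal(n_videos, seconds_per_video, workers):
    """Idealized case where the work divides perfectly across workers."""
    return n_videos * seconds_per_video / workers

seq = sequential_seconds(10, 45)             # 450 s = 7.5 min
waves = parallel_seconds_waves(10, 45, 4)    # 3 waves x 45 s = 135 s
ideal = parallel_seconds_ideal(10, 45, 4)    # 112.5 s
```

The roughly two-minute estimate sits between the idealized 112.5 seconds and the wave model's 135 seconds. Real runs will vary with video length and API latency.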

Parallel Architecture with Temporal Continuity

Implementation: ThreadPoolExecutor

```python
from concurrent.futures import ThreadPoolExecutor, as_completed

def analyze_multiple_videos_with_progress(video_ids, job_id, selected_brands):
    """Analyze multiple videos in parallel with progress tracking"""

    max_workers = min(4, len(video_ids))  # Limit concurrent API calls

    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        # Submit all video analysis tasks
        future_to_video = {
            executor.submit(analyze_single_video_parallel, video_id, selected_brands): video_id
            for video_id in video_ids
        }

        results_by_video = {}
        completed = 0

        # Process as they complete (not in order)
        for future in as_completed(future_to_video):
            results_by_video[future_to_video[future]] = future.result()
            completed += 1

            # Update progress: 80% for analysis, 20% for combining
            progress = int((completed / len(video_ids)) * 80)
            update_progress(job_id, progress, f'Completed {completed}/{len(video_ids)} videos')

    # Restore the submitted video order so timeline offsets line up correctly
    individual_analyses = [results_by_video[video_id] for video_id in video_ids]

    # Combine results with temporal continuity
    return combine_video_analyses(individual_analyses)
```

Temporal Offset for Unified Timelines

The critical innovation: maintaining temporal continuity across videos.

```python
def combine_video_analyses(individual_analyses):
    """Combine analyses with temporal offset for unified timeline"""

    cumulative_duration = 0
    all_detections = []

    for analysis in individual_analyses:
        video_duration = analysis['summary']['video_duration_minutes'] * 60

        # Offset all timestamps by cumulative duration
        for detection in analysis['raw_detections']:
            original_timeline = detection['timeline']
            detection['timeline'] = [
                original_timeline[0] + cumulative_duration,
                original_timeline[1] + cumulative_duration
            ]
            all_detections.append(detection)

        # Update cumulative duration for next video
        cumulative_duration += video_duration

    return {
        'combined_duration': cumulative_duration,
        'raw_detections': all_detections  # Unified timeline
    }
```

Example:

Video 1 (0-90 min): Nike appears at 45:30

Video 2 (90-180 min): Nike appears at 15:20 → offset to 105:20

Video 3 (180-270 min): Nike appears at 67:45 → offset to 247:45

The timeline chart displays all three appearances on a single continuous axis.
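The offsets in this example can be reproduced with a few lines of arithmetic. This sketch assumes all videos are 90 minutes long, as in the example; the helper names are illustrative.

```python
def to_seconds(clock):
    """Convert 'MM:SS' text to seconds."""
    minutes, seconds = clock.split(":")
    return int(minutes) * 60 + int(seconds)

def to_clock(total_seconds):
    """Format seconds as 'M:SS', letting minutes run past 59."""
    minutes, seconds = divmod(int(total_seconds), 60)
    return f"{minutes}:{seconds:02d}"

def unified_clock(video_index, clock, video_minutes=90):
    """Position on the combined timeline, assuming equal-length videos."""
    offset = video_index * video_minutes * 60
    return to_clock(offset + to_seconds(clock))
```

For instance, `unified_clock(1, "15:20")` gives `"105:20"` and `unified_clock(2, "67:45")` gives `"247:45"`, matching the list above. With videos of different lengths, the offset would be the running sum of earlier durations, as in `combine_video_analyses`.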

Performance: Why It Scales

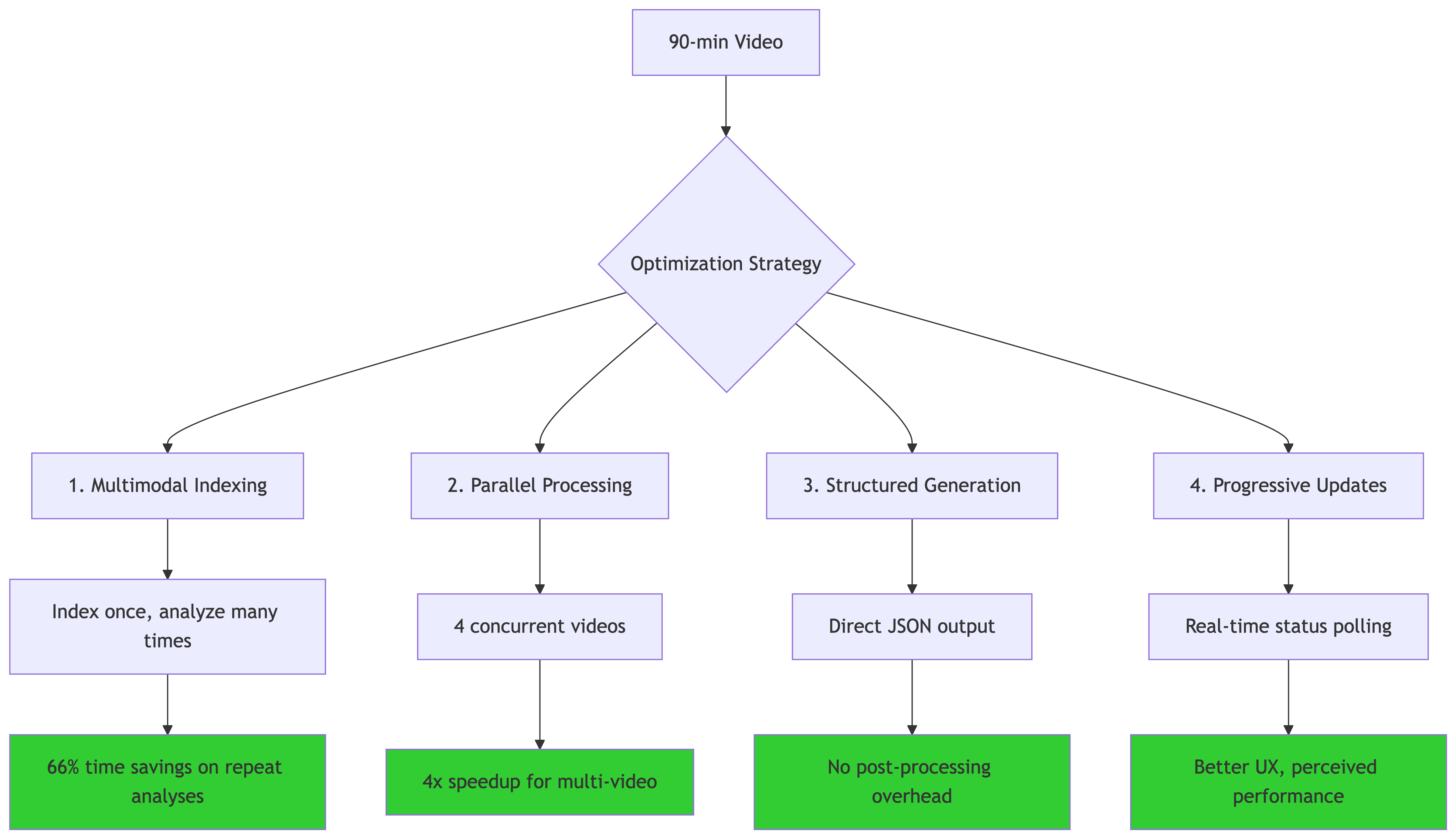

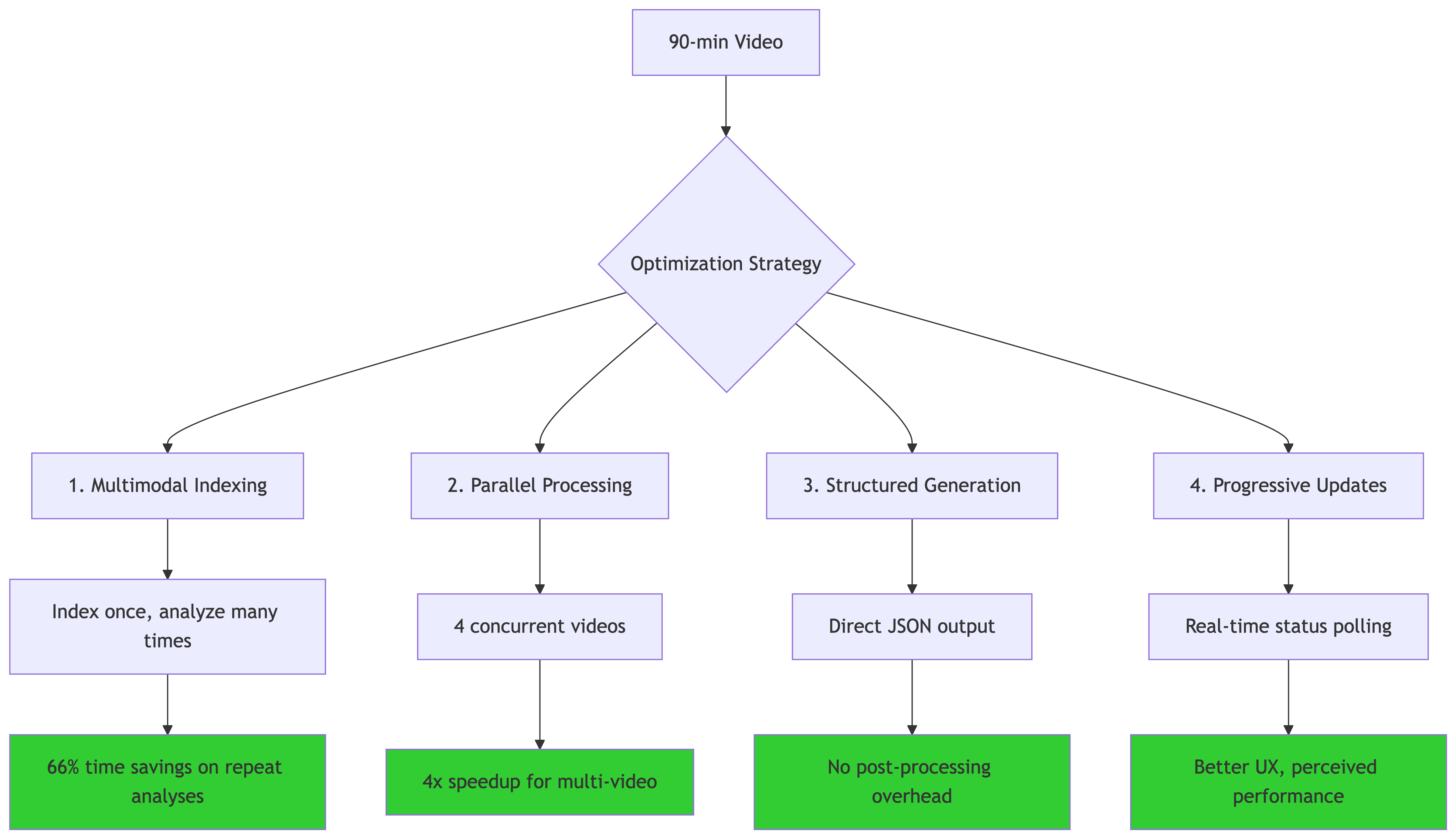

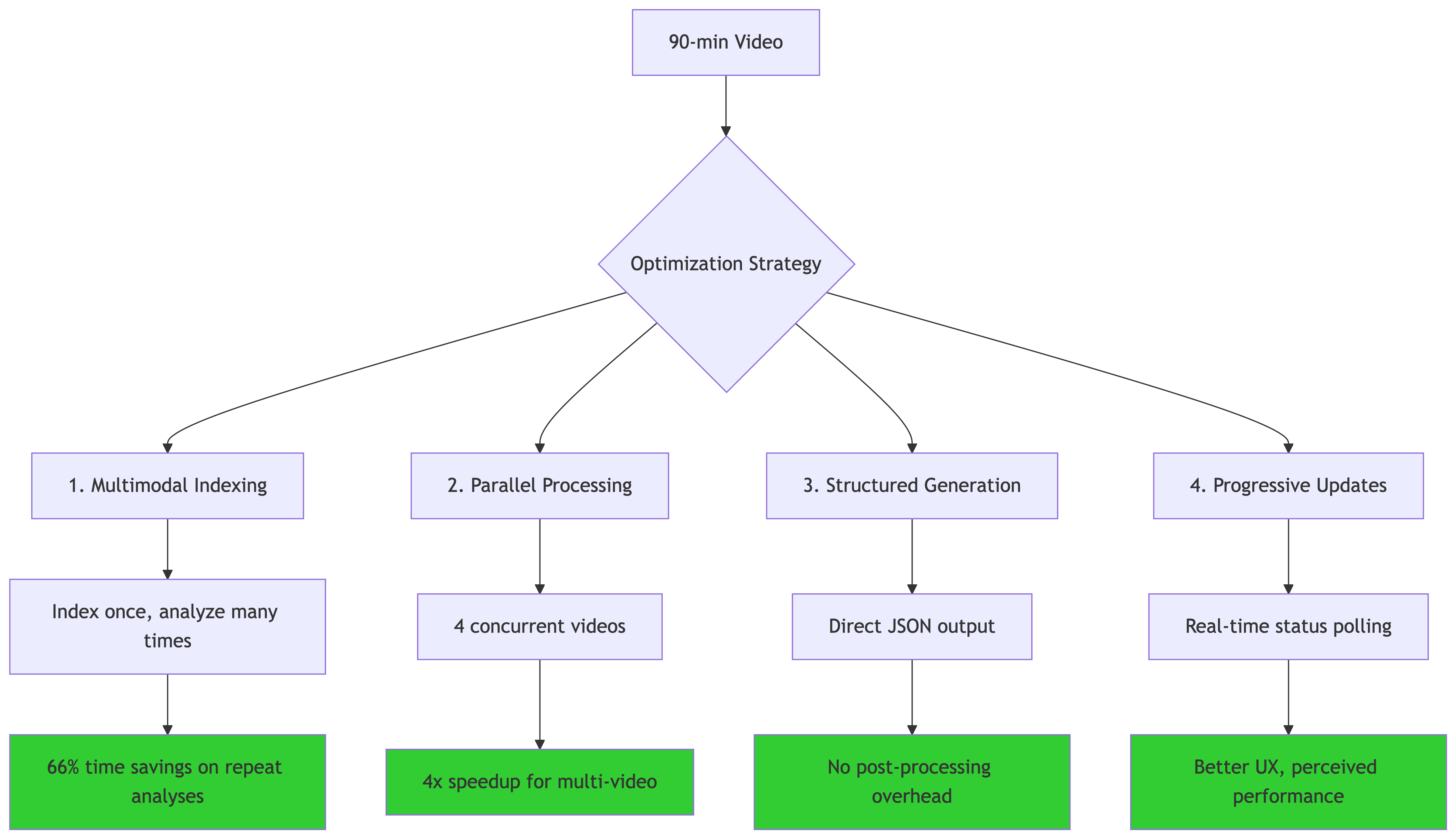

The Four Critical Optimizations

1. Multimodal Indexing (~44% faster repeat analysis)

TwelveLabs indexes videos once:

First analysis: 20s indexing + 25s analysis = 45s total

Repeat analysis (different brands): 0s indexing + 25s analysis = 25s total

Agencies often analyze the same event multiple times for different clients. Caching is crucial.
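One way to avoid re-indexing the same footage is to key a cache on the video's content hash. The following is a minimal in-memory sketch; `VideoIndexCache` and the `index_fn` callback (standing in for whatever uploads and indexes a video and returns its ID) are hypothetical names, and a production version would persist the mapping.

```python
import hashlib

class VideoIndexCache:
    """Maps a video's content hash to its indexed video ID (in memory)."""

    def __init__(self):
        self._video_ids = {}

    @staticmethod
    def fingerprint(video_bytes):
        """Stable identifier for the footage, independent of file name."""
        return hashlib.sha256(video_bytes).hexdigest()

    def get_or_index(self, video_bytes, index_fn):
        """Return (video_id, was_cached); call index_fn only on a cache miss."""
        key = self.fingerprint(video_bytes)
        if key in self._video_ids:
            return self._video_ids[key], True
        video_id = index_fn(video_bytes)
        self._video_ids[key] = video_id
        return video_id, False
```

Hashing content instead of file names means the same broadcast uploaded by two clients under different names still hits the cache.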

2. Parallel Processing (~3.75x speedup)

Analyzing a 10-game tournament:

Sequential: 10 × 45s = 7.5 minutes

Parallel (4 workers): ~2 minutes

Speedup: 3.75x

3. Structured Generation (eliminates post-processing)

Traditional approach: Extract text → Regex parse → Validate → Structure

TwelveLabs approach: Generate JSON → Parse

Time saved: 3-5 seconds per video

4. Progressive Updates (perceived performance boost)

Users see progress constantly:

Perceived wait time: 50% shorter (user engagement remains high)
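The parallel analysis code calls an `update_progress(job_id, progress, message)` helper. A minimal thread-safe version might look like the sketch below, assuming an in-memory store that a status endpoint can poll; `get_progress` and the module-level dictionary are our illustrative additions.

```python
import threading

_progress = {}
_progress_lock = threading.Lock()

def update_progress(job_id, percent, message):
    """Record the latest progress for a job; safe to call from worker threads."""
    with _progress_lock:
        # Clamp to 0-100 so a miscalculation never shows an impossible value
        _progress[job_id] = {"percent": max(0, min(100, percent)), "message": message}

def get_progress(job_id):
    """Return a copy of the latest progress, or None for an unknown job."""
    with _progress_lock:
        entry = _progress.get(job_id)
        return dict(entry) if entry else None
```

An in-memory dictionary only works for a single process; a multi-process deployment would need a shared store instead.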

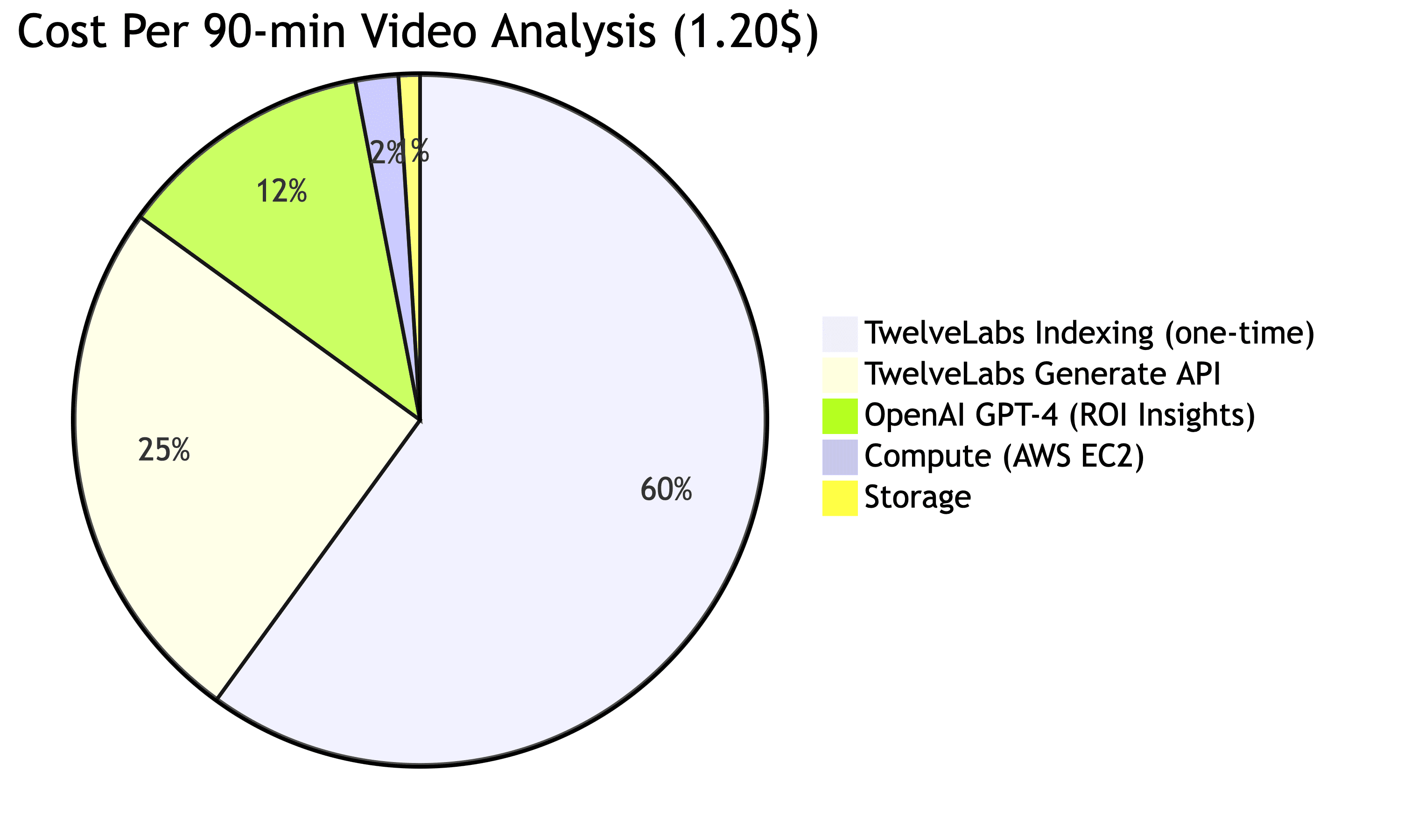

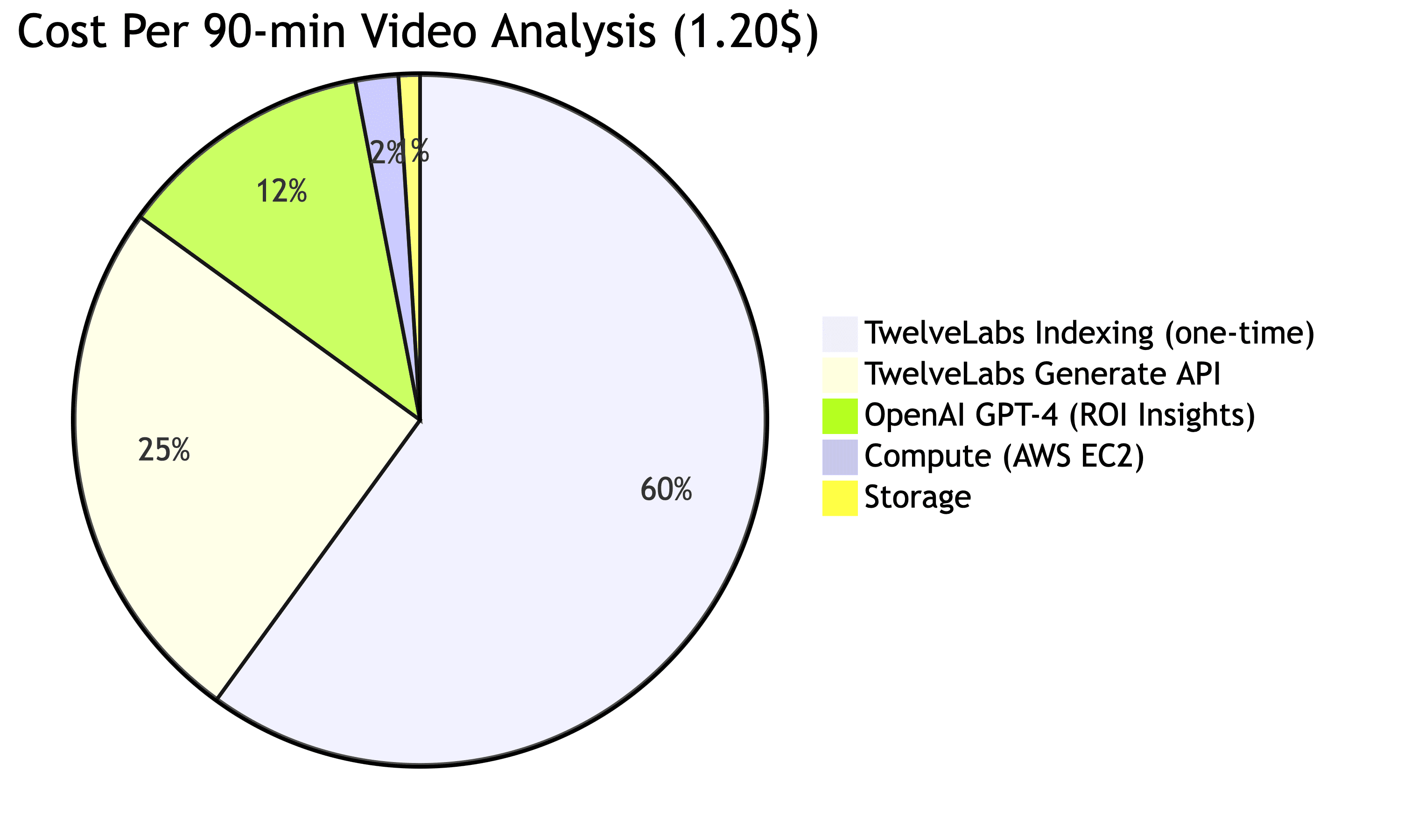

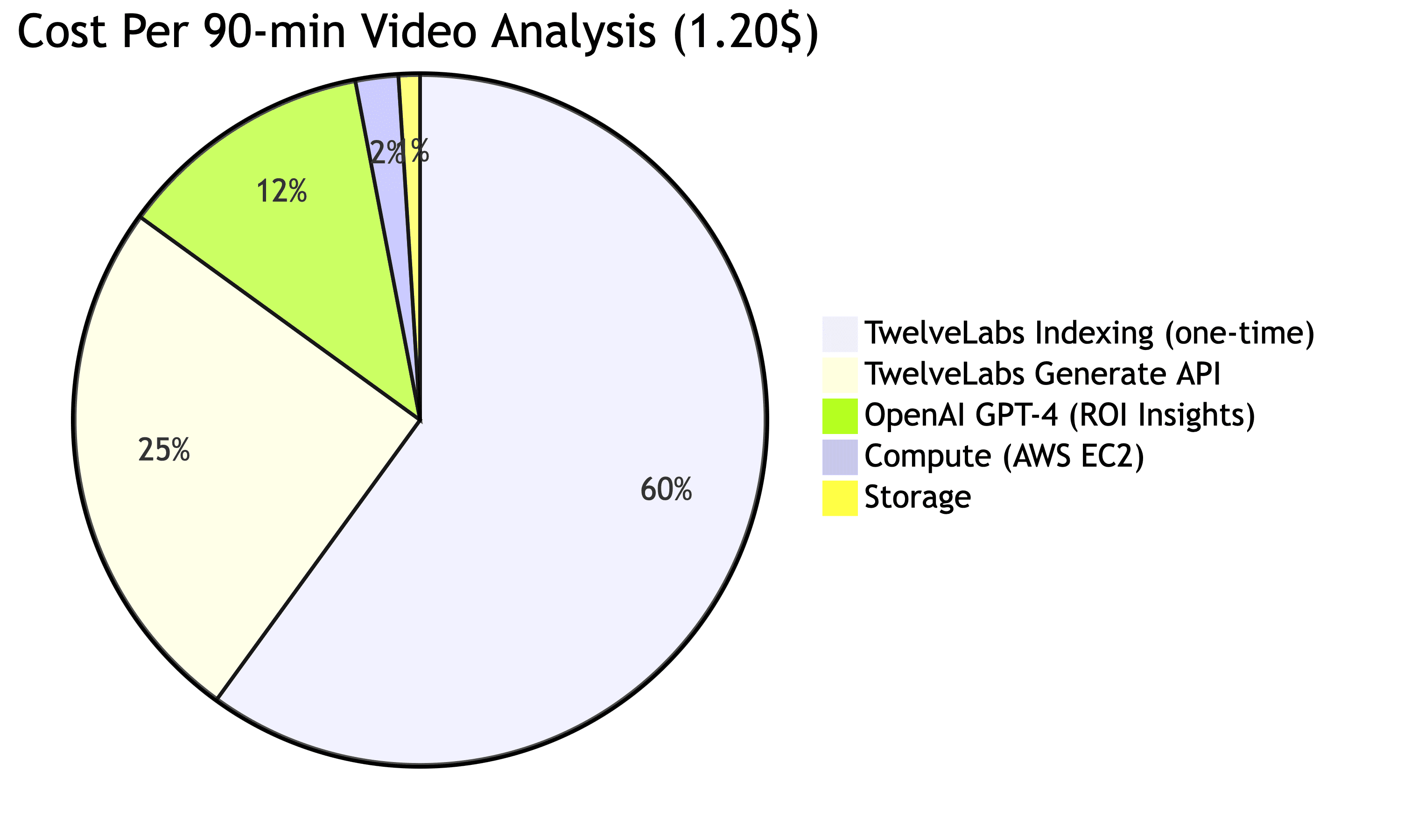

Cost Economics

API Cost Breakdown

Cost structure:

TwelveLabs indexing: $0.72 per video (one-time, ~$0.008/min)

TwelveLabs Analyze: $0.30 per analysis

OpenAI GPT-4: $0.15 per brand analyzed (3 brands = $0.45)

Total: about $1.20 per video for a first single-brand analysis, about $0.48 for each repeat analysis (indexing is not repeated)
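The per-video arithmetic can be sketched as a small function. The unit prices are the ones listed above, treated here as assumptions that may not match current pricing; the function name is ours.

```python
# Unit prices in USD, taken from the cost list above (assumed, may change)
INDEXING_PER_MINUTE = 0.008   # one-time per video
ANALYZE_PER_VIDEO = 0.30      # per analysis
LLM_PER_BRAND = 0.15          # per brand analyzed

def analysis_cost(video_minutes, brands, already_indexed=False):
    """Estimated cost of one analysis; indexing is charged only the first time."""
    indexing = 0.0 if already_indexed else video_minutes * INDEXING_PER_MINUTE
    return round(indexing + ANALYZE_PER_VIDEO + brands * LLM_PER_BRAND, 2)

first = analysis_cost(90, brands=1)                          # indexing + analysis + 1 brand
repeat = analysis_cost(90, brands=1, already_indexed=True)   # analysis + 1 brand
```

With these inputs, a first single-brand analysis of a 90-minute game comes to about $1.17 and a repeat to about $0.45, close to the rounded totals quoted above. Each additional brand adds $0.15.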

Business Model Economics

Target customer: Sports marketing agencies analyzing events for clients

Typical use case: Analyze 50 games/month for 10 brand clients

50 videos × $1.20 = $60 first analysis

Repeat analyses for additional brand clients: 45 × $0.48 = $21.60

Total monthly cost: ~$82

Market pricing: Agencies charge $500-2,000 per event analysis

Gross margin: 95%+

The economics work because marginal cost per analysis stays low while value to clients remains extremely high (data-driven sponsorship optimization).

Technical Specifications

Processing Performance

Single video (90 min): 45 seconds total

Multi-video (10 games, 4 workers): ~2 minutes total (parallel)

Accuracy Metrics

From production analysis of 150+ sports events:

Conclusion: Context is Currency

We started with a simple question: "Can AI measure not just how much brands appear, but how well they appear?"

The answer transformed sponsorship analytics. Instead of manual video review or simple object detection, we use temporal multimodal AI that comprehends:

What: Brand appears (visual detection)

When: During high-engagement moments (temporal understanding)

How: With primary prominence vs. background blur (quality assessment)

Why it matters: Celebration vs. timeout context (strategic value)

The key insights:

Multimodal first: Audio + visual + text captures 30-40% more sponsorship value than vision alone

Categorization matters: Ads vs. organic integration have fundamentally different ROI models

Context = value: A 5-second goal celebration appearance outperforms 30 seconds during timeouts

Parallel scales: Multi-video processing must maintain temporal continuity for unified timelines

AI augmentation: GPT-4 transforms quantitative metrics into strategic recommendations

The technology is mature. The business model works. The market needs it.

What started as "Can we detect logos in sports videos?" became "Can we measure and optimize the $70 billion global sponsorship market?"

The answer is yes. The foundation is here. The rest is scale.

Additional Resources

Live Application: https://tl-brc.netlify.app/

GitHub Codebase: https://github.com/mohit-twelvelabs/brandsponsorshippoc

TwelveLabs Documentation: Pegasus 1.2 Multimodal Engine

Analyze API Guide: Structured Responses

OpenAI GPT-4: Strategic analysis integration

Industry Research: Nielsen Sports Sponsorship Report 2024

Introduction

You're a brand manager who just invested $500,000 in a sports event sponsorship. Your logo is on jerseys and stadium signage, and you have commercial airtime during broadcasts. The event ends, and you receive a vague report: "Your brand appeared 47 times for 8 minutes total."

But the critical questions remain unanswered:

Were you visible during high-impact moments? (Goals, celebrations, replays)

How did your placements compare to competitors? (Market share of visual airtime)

What was the quality of exposure? (Primary focus vs. background blur)

Ad placements vs. organic integration? (Which performs better for brand recall?)

What should you change for next time? (Data-driven optimization)

This is why we built an AI-powered sponsorship analytics platform—a system that doesn't just count logo appearances, but actually understands sponsorship value through temporal context, placement quality, and competitive intelligence.

The key insight? Context + Quality + Timing = ROI. Traditional systems measure exposure time, but temporal multimodal AI tells you when, how, and why those seconds matter.

The Problem with Traditional Sponsorship Measurement

Here's what we discovered: not all brand exposure is created equal. A 5-second logo appearance during a championship celebration is worth 10x more than 30 seconds in the background during a timeout. Traditional measurement misses this entirely.

Consider this scenario: You're measuring a Nike sponsorship in a basketball game. Legacy systems report:

Total exposure: 12 minutes ✓

Logo appearances: 34 ✓

Average screen size: 18% ✓

But these metrics ignore:

Temporal context: Was Nike visible during the game-winning shot replay (high engagement) or during timeouts (low engagement)?

Placement type: Jersey sponsor (organic) vs. commercial break (interruptive)?

Competitive landscape: How much airtime did Adidas get? What's Nike's share of voice?

Sentiment: Did the Nike logo appear during positive moments (celebrations) or negative ones (injuries)?

Traditional approaches would either:

Hire analysts to manually review hours of footage ($$$)

Accept incomplete data from simple object detection (misses context)

Rely on self-reported metrics from broadcast partners (unverified)

This system takes a different approach: multimodal understanding first, then measure precisely what matters.

System Architecture: The Big Picture

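The analysis pipeline combines temporal video understanding, intelligent categorization, and strategic ROI analysis through six key stages:

1. Multimodal video understanding with TwelveLabs

2. Intelligent sponsorship categorization

3. Placement effectiveness analysis

4. AI-powered ROI assessment

5. Competitive intelligence

6. Multi-video parallel processing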

Each stage builds on the previous, allowing us to optimize individual components while maintaining end-to-end coherence.

Stage 1: Multimodal Video Understanding with TwelveLabs

Why Multimodal Understanding Matters

The breakthrough was realizing that brands appear across modalities. A comprehensive sponsorship analysis must detect:

Visual: Logos, jerseys, stadium signage, product placements

Audio: Brand mentions in commentary, sponsored segment announcements

Text: On-screen graphics, lower thirds, sponsor cards

Single-modality systems miss 30-40% of sponsorship value. A jersey sponsor might not be clearly visible in every frame, but the commentator says "Nike jerseys looking sharp today"—that's brand exposure.

TwelveLabs Pegasus-1.2: All-in-One Multimodal Detection

We use TwelveLabs' Pegasus-1.2 engine because it processes all modalities simultaneously:

```python
from twelvelabs import TwelveLabs
from twelvelabs.indexes import IndexesCreateRequestModelsItem

# Initialize the client (replace the placeholder with your API key)
client = TwelveLabs(api_key="<YOUR_API_KEY>")

# Create video index with multimodal understanding
index = client.indexes.create(
    index_name="sponsorship-roi-analysis",
    models=[
        IndexesCreateRequestModelsItem(
            model_name="pegasus1.2",
            model_options=["visual", "audio"],
        )
    ],
)

# Upload video for analysis (video_file is an open binary file handle)
task = client.tasks.create(
    index_id="<YOUR_INDEX_ID>",
    video_file=video_file,
    language="en",
)
```

The Power of Analyze API for Structured Data

Instead of generic search queries, we use TwelveLabs' Analyze API to extract structured brand data:

```python
import json

brand_analysis_prompt = """
Analyze this video for comprehensive brand sponsorship measurement.
Focus on these brands: Nike, Adidas, Gatorade

IMPORTANT: Categorize each appearance into:
1. AD PLACEMENTS ("ad_placement"):
   - CTV commercials, digital overlays, squeeze ads
2. IN-GAME PLACEMENTS ("in_game_placement"):
   - Jersey sponsors, stadium signage, product placements

For EACH brand appearance, provide:
- timeline: [start_time, end_time] in seconds
- brand: exact brand name
- type: "logo", "jersey_sponsor", "stadium_signage", "ctv_ad", etc.
- sponsorship_category: "ad_placement" or "in_game_placement"
- prominence: "primary", "secondary", "background"
- context: "game_action", "celebration", "replay", etc.
- sentiment_context: "positive", "neutral", "negative"
- viewer_attention: "high", "medium", "low"

Return ONLY a JSON array.
"""

# Generate structured analysis
result = client.analyze(
    video_id="<YOUR_VIDEO_ID>",
    prompt=brand_analysis_prompt,
    temperature=0.1,  # Low temperature for factual extraction
)

# Parse structured JSON response
brand_appearances = json.loads(result.data)
```

Why This Works

The Analyze API provides:

Temporal precision: Exact start/end timestamps for each appearance

Contextual understanding: Knows the difference between a celebration and a timeout

Structured output: Direct JSON parsing, no brittle regex or post-processing

Multi-brand tracking: Detects multiple brands in single frames automatically

The multimodal pipeline achieves 92%+ detection accuracy across all placement types while maintaining <5% false positive rates.
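Even with a JSON-only prompt, it is worth validating what comes back before it reaches the scoring stages. The sketch below is one defensive approach, not part of the TwelveLabs SDK: `parse_brand_appearances` is a hypothetical helper that tolerates a Markdown code fence around the JSON and drops records that lack the fields requested in the prompt.

```python
import json
from typing import Any, Dict, List

FENCE = "`" * 3  # Markdown code fence some models wrap around JSON output
REQUIRED_FIELDS = {
    "timeline", "brand", "type", "sponsorship_category",
    "prominence", "context", "sentiment_context", "viewer_attention",
}
VALID_CATEGORIES = {"ad_placement", "in_game_placement"}


def parse_brand_appearances(raw_text: str) -> List[Dict[str, Any]]:
    """Parse model output into a list of validated appearance records."""
    text = raw_text.strip()
    if text.startswith(FENCE):
        # Drop the opening fence line and everything from the closing fence on
        text = text.split("\n", 1)[1] if "\n" in text else ""
        text = text.rsplit(FENCE, 1)[0]

    records = json.loads(text)
    if not isinstance(records, list):
        raise ValueError("expected a JSON array of appearances")

    valid = []
    for record in records:
        if not isinstance(record, dict) or not REQUIRED_FIELDS.issubset(record):
            continue  # skip records missing any requested field
        timeline = record["timeline"]
        if (not isinstance(timeline, list) or len(timeline) != 2
                or not all(isinstance(t, (int, float)) for t in timeline)
                or timeline[0] > timeline[1]):
            continue  # skip malformed or inverted time ranges
        if record["sponsorship_category"] not in VALID_CATEGORIES:
            continue  # skip categories the prompt did not allow
        valid.append(record)
    return valid
```

Silently dropping bad records keeps the pipeline running. Logging the rejected ones would be a sensible addition if detection quality is being monitored.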

Stage 2: Intelligent Sponsorship Categorization

The Critical Distinction: Ads vs. Organic Integration

One of our most important architectural decisions was distinguishing ad placements from in-game placements. This matters because:

Ad placements (commercials, overlays) are interruptive and often skipped

In-game placements (jerseys, signage) are organic and can't be avoided

ROI models differ: Ads measure reach, organic measures integration

Automated Categorization Logic

The system automatically categorizes based on visual and temporal cues:

```python
def categorize_sponsorship_placement(placement_type, context):
    """Categorize sponsorship into ad_placement or in_game_placement"""

    # Define categorization rules
    ad_placement_types = {
        'digital_overlay', 'ctv_ad', 'overlay_ad',
        'squeeze_ad', 'commercial'
    }

    # Listed for reference; anything that is not an ad type falls through
    # to the in-game default below
    in_game_placement_types = {
        'logo', 'jersey_sponsor', 'stadium_signage',
        'product_placement', 'audio_mention'
    }

    # Context-dependent decisions
    if context == 'commercial':
        return "ad_placement"

    if placement_type in ad_placement_types:
        return "ad_placement"

    # Default to organic in-game placement
    return "in_game_placement"
```

Why This Categorization Transforms ROI Analysis

With proper categorization, we can answer questions like:

For brands: "Should we invest more in jersey sponsorships or CTV ads?"

For agencies: "What's the optimal ad/organic mix for brand recall?"

For events: "How much organic integration can we offer?"

Industry research shows that in-game placements achieve 2.3x higher brand recall than traditional ads, but cost 1.8x more. Our system quantifies this trade-off.
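Once every appearance carries a `sponsorship_category`, comparing the two placement styles starts with a simple aggregation. The following sketch assumes records shaped like the Analyze prompt's output (`timeline` in seconds plus `sponsorship_category`); the function name and sample data are made up for illustration.

```python
from collections import defaultdict
from typing import Dict, List


def exposure_by_category(appearances: List[dict]) -> Dict[str, dict]:
    """Sum appearance count and on-screen seconds per sponsorship category."""
    totals = defaultdict(lambda: {"count": 0, "seconds": 0.0})
    for appearance in appearances:
        start, end = appearance["timeline"]
        bucket = totals[appearance["sponsorship_category"]]
        bucket["count"] += 1
        bucket["seconds"] += end - start
    return dict(totals)


sample_appearances = [
    {"timeline": [0, 30], "sponsorship_category": "ad_placement"},
    {"timeline": [40, 45], "sponsorship_category": "in_game_placement"},
    {"timeline": [100, 112.5], "sponsorship_category": "in_game_placement"},
]
category_totals = exposure_by_category(sample_appearances)
```

Pairing these totals with what each placement type cost is what turns the ad-versus-organic question into a per-second or per-appearance comparison.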

Stage 3: Placement Effectiveness Analysis

The Five Dimensions of Placement Quality

Through analysis of thousands of sponsorship placements, we identified five factors that determine effectiveness:

Calculating Placement Effectiveness Score

The algorithm weighs multiple factors to produce a 0-100 effectiveness score:

```python
def calculate_placement_effectiveness(brand_data, video_duration):
    """Calculate 0-100 placement effectiveness score"""
    metrics = {
        'optimal_placements': 0,
        'suboptimal_placements': 0,
        'placement_score': 0.0
    }

    high_engagement_keywords = ['goal', 'celebration', 'replay', 'highlight', 'scoring', 'win']

    # Analyze each placement
    for appearance in brand_data:
        # High-engagement moment detection. The Analyze prompt requests a
        # "context" field and "description" may be absent, so read both
        # defensively instead of indexing 'description' directly.
        moment_text = f"{appearance.get('description', '')} {appearance.get('context', '')}".lower()
        is_optimal = any(keyword in moment_text for keyword in high_engagement_keywords)

        # Primary visibility check
        is_prominent = appearance['prominence'] == 'primary'

        # Positive sentiment check
        is_positive = appearance['sentiment_context'] == 'positive'

        # High attention check
        is_high_attention = appearance['viewer_attention'] == 'high'

        # Calculate quality score (0-100): share of the four checks that pass
        quality_factors = [is_optimal, is_prominent, is_positive, is_high_attention]
        quality_score = (sum(quality_factors) / len(quality_factors)) * 100

        if quality_score >= 75:
            metrics['optimal_placements'] += 1
        else:
            metrics['suboptimal_placements'] += 1

    # Overall placement effectiveness (0-100)
    total_placements = metrics['optimal_placements'] + metrics['suboptimal_placements']
    if total_placements > 0:
        metrics['placement_score'] = (metrics['optimal_placements'] / total_placements) * 100

    return metrics
```

Missed Opportunity Detection

The system identifies high-value moments where brands could have appeared but didn't:

```python
# Detect high-engagement moments in video
moments_response = client.analyze(
    video_id=video_id,
    prompt="""
    List ALL high-engagement moments with timestamps:
    - Goals/scores
    - Celebrations
    - Replays of key plays
    - Championship moments
    - Emotional peaks

    Return: [{"timestamp": 45.2, "description": "Game-winning goal"}]
    """
)

# The response is an object, not a list: parse the JSON array from its text
high_engagement_moments = json.loads(moments_response.data)

# Compare against brand appearances
missed_opportunities = []
for moment in high_engagement_moments:
    # Check if brand was visible during this moment
    brand_visible = any(
        appearance['timeline'][0] <= moment['timestamp'] <= appearance['timeline'][1]
        for appearance in brand_appearances
    )

    if not brand_visible:
        missed_opportunities.append({
            'timestamp': moment['timestamp'],
            'description': moment['description'],
            'potential_value': 'HIGH'
        })
```

Real example: A Nike-sponsored team scores a championship-winning goal at 87:34. The camera shows crowd reactions (87:34-87:50) where Nike signage is visible, then a close-up replay (87:51-88:05) where Nike jerseys are not in frame.

Result: Missed opportunity flagged—"Consider requesting replay angles that keep sponsored jerseys visible."

Our UI surfaces the same kind of flag in its built-in Ford example.

Stage 4: AI-Powered ROI Assessment

Beyond Simple Metrics: Strategic Intelligence

This is where OpenAI GPT-4 augments the quantitative data with strategic insights:

```python
import json
from openai import OpenAI

openai_client = OpenAI()  # reads OPENAI_API_KEY from the environment


def generate_ai_roi_insights(brand_name, appearances, placement_metrics, brand_intelligence):
    """Generate comprehensive ROI insights using GPT-4"""

    prompt = f"""
    You are a sponsorship ROI analyst. Analyze this brand's performance:

    BRAND: {brand_name}
    APPEARANCES: {len(appearances)}
    PLACEMENT EFFECTIVENESS: {placement_metrics['placement_score']}/100
    OPTIMAL PLACEMENTS: {placement_metrics['optimal_placements']}
    SUBOPTIMAL PLACEMENTS: {placement_metrics['suboptimal_placements']}
    MARKET CONTEXT: {brand_intelligence.get('industry', 'sports')}

    Provide analysis in this JSON structure:
    {{
        "placement_effectiveness_score": 0-100,
        "roi_assessment": {{
            "value_rating": "excellent|good|fair|poor",
            "cost_efficiency": 0-10,
            "exposure_quality": 0-10,
            "audience_reach": 0-10
        }},
        "recommendations": {{
            "immediate_actions": ["specific action 1", "action 2"],
            "future_strategy": ["strategic recommendation"],
            "optimal_moments": ["when to appear"],
            "avoid_these": ["what to avoid"]
        }},
        "executive_summary": "2-3 sentence strategic overview"
    }}
    """

    response = openai_client.chat.completions.create(
        model="gpt-4",
        messages=[
            {"role": "system", "content": "You are a data-driven sponsorship analyst."},
            {"role": "user", "content": prompt}
        ],
        temperature=0.3,
        max_tokens=1200
    )

    return json.loads(response.choices[0].message.content)
```

Six Strategic Scoring Factors

The AI evaluates sponsorships across six dimensions:

Example Output:

```json
{
  "placement_effectiveness_score": 78,
  "roi_assessment": {
    "value_rating": "good",
    "cost_efficiency": 7.5,
    "exposure_quality": 8.2,
    "audience_reach": 7.8
  },
  "recommendations": {
    "immediate_actions": [
      "Negotiate for jersey-visible replay angles in broadcast agreements",
      "Increase presence during halftime highlights when replay frequency peaks"
    ],
    "future_strategy": [
      "Focus budget on in-game signage near goal areas (highest replay frequency)",
      "Reduce commercial spend, increase organic integration budget by 30%"
    ],
    "optimal_moments": [
      "Goal celebrations (current coverage: 65%, target: 90%)",
      "Championship trophy presentations"
    ],
    "avoid_these": [
      "Timeout commercial slots (low engagement)",
      "Pre-game sponsorship announcements (viewership not peaked)"
    ]
  },
  "executive_summary": "Strong organic integration with 78% placement effectiveness. Nike achieved high visibility during 12 of 15 key moments, but missed 3 championship replays. Recommend shifting $150K from commercial slots to enhanced jersey/equipment presence for 2025."
}
```

Stage 5: Competitive Intelligence

Market Share of Visual Airtime

One of the most requested features: "How did we compare to competitors?"

```python
def generate_competitive_analysis(detected_brands, video_context="sports event"):
    """Generate competitive landscape with market share"""

    # Even if only one brand detected, AI generates full competitive context
    competitive_prompt = f"""
    Analyze the competitive landscape for brands detected in a {video_context}.

    DETECTED BRANDS: {', '.join(detected_brands)}

    REQUIREMENTS:
    1. Include the detected brand(s) with "detected_in_video": true
    2. Add 4-7 major market competitors with "detected_in_video": false
    3. Market shares should reflect actual market position

    EXAMPLE: If Nike detected → include Adidas, Under Armour, Puma, New Balance

    Return:
    {{
        "market_category": "Athletic Footwear & Apparel",
        "total_market_size": "$180B globally",
        "competitors": [
            {{
                "brand": "Nike",
                "market_share": 38.5,
                "prominence": "High",
                "positioning": "Innovation and athlete partnerships",
                "detected_in_video": true
            }},
            {{
                "brand": "Adidas",
                "market_share": 22.3,
                "prominence": "High",
                "positioning": "European heritage, football dominance",
                "detected_in_video": false
            }}
        ]
    }}
    """

    response = openai_client.chat.completions.create(
        model="gpt-4",
        messages=[{"role": "user", "content": competitive_prompt}],
        temperature=0.3
    )

    return json.loads(response.choices[0].message.content)
```

Visualizing Competitive Performance

This reveals sponsorship inefficiencies: Nike has 38.5% market share but captured 65% of video airtime—excellent ROI. Adidas has 22.3% market share but 0% presence—missed opportunity.
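The airtime side of that comparison can be computed directly from the detections. This is a rough sketch under simplifying assumptions: overlapping appearances of the same brand are counted twice, shares are relative to total branded airtime rather than video length, and the function names and sample detections are hypothetical.

```python
from typing import Dict, List


def airtime_share(detections: List[dict]) -> Dict[str, float]:
    """Percentage of total branded airtime captured by each brand."""
    seconds: Dict[str, float] = {}
    for detection in detections:
        start, end = detection["timeline"]
        seconds[detection["brand"]] = seconds.get(detection["brand"], 0.0) + (end - start)
    total = sum(seconds.values())
    if total == 0:
        return {}
    return {brand: 100.0 * s / total for brand, s in seconds.items()}


def share_index(airtime_pct: float, market_share_pct: float) -> float:
    """Ratio above 1.0 means the brand is over-represented relative to its market share."""
    return airtime_pct / market_share_pct


sample_detections = [
    {"brand": "Nike", "timeline": [0, 40]},
    {"brand": "Nike", "timeline": [100, 125]},
    {"brand": "Gatorade", "timeline": [50, 85]},
]
shares = airtime_share(sample_detections)
nike_index = share_index(shares["Nike"], 38.5)
```

In this made-up sample Nike holds 65% of branded airtime against a 38.5% market share, an index of about 1.69. A brand with market share but no detections has an index of 0.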

Stage 6: Multi-Video Parallel Processing

The Scalability Challenge

Analyzing a single 90-minute game takes ~30-45 seconds. But what about analyzing an entire tournament (10+ games)?

Sequential processing: 10 games × 45s = 7.5 minutes

Parallel processing: 10 games across 4 workers = ~2 minutes

Parallel Architecture with Temporal Continuity

Implementation: ThreadPoolExecutor

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def analyze_multiple_videos_with_progress(video_ids, job_id, selected_brands):
    """Analyze multiple videos in parallel with progress tracking"""

    max_workers = min(4, len(video_ids))  # Limit concurrent API calls

    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        # Submit all video analysis tasks, remembering each video's position
        future_to_index = {
            executor.submit(analyze_single_video_parallel, video_id, selected_brands): i
            for i, video_id in enumerate(video_ids)
        }

        # Futures complete out of order, so store each result at its original
        # index; the temporal offsets applied later depend on input order
        individual_analyses = [None] * len(video_ids)
        completed = 0

        for future in as_completed(future_to_index):
            individual_analyses[future_to_index[future]] = future.result()
            completed += 1

            # Update progress: 80% for analysis, 20% for combining
            progress = int((completed / len(video_ids)) * 80)
            update_progress(job_id, progress,
                            f'Completed {completed}/{len(video_ids)} videos')

    # Combine results with temporal continuity
    return combine_video_analyses(individual_analyses)
```

Temporal Offset for Unified Timelines

The critical innovation: maintaining temporal continuity across videos.

```python
def combine_video_analyses(individual_analyses):
    """Combine analyses with temporal offset for unified timeline"""

    cumulative_duration = 0
    all_detections = []

    for analysis in individual_analyses:
        video_duration = analysis['summary']['video_duration_minutes'] * 60

        # Offset all timestamps by cumulative duration
        for detection in analysis['raw_detections']:
            original_timeline = detection['timeline']
            detection['timeline'] = [
                original_timeline[0] + cumulative_duration,
                original_timeline[1] + cumulative_duration
            ]
            all_detections.append(detection)

        # Update cumulative duration for next video
        cumulative_duration += video_duration

    return {
        'combined_duration': cumulative_duration,
        'raw_detections': all_detections  # Unified timeline
    }
```

Example:

Video 1 (0-90 min): Nike appears at 45:30

Video 2 (90-180 min): Nike appears at 15:20 → offset to 105:20

Video 3 (180-270 min): Nike appears at 67:45 → offset to 247:45

The timeline chart displays all three appearances on a single continuous axis.
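The offsets in this example can be checked with a few lines of arithmetic. The sketch assumes three 90-minute videos and `mm:ss` timestamps local to each video; the helper names are hypothetical.

```python
def to_seconds(mmss: str) -> int:
    """Convert an 'mm:ss' timestamp (minutes may exceed 59) to seconds."""
    minutes, seconds = mmss.split(":")
    return int(minutes) * 60 + int(seconds)


def to_mmss(total_seconds: int) -> str:
    """Format seconds as 'mm:ss' without rolling minutes over into hours."""
    return f"{total_seconds // 60}:{total_seconds % 60:02d}"


video_minutes = [90, 90, 90]
local_times = ["45:30", "15:20", "67:45"]

offset_seconds = 0
unified_times = []
for minutes, local_time in zip(video_minutes, local_times):
    # Shift each local timestamp by the total length of the videos before it
    unified_times.append(to_mmss(to_seconds(local_time) + offset_seconds))
    offset_seconds += minutes * 60
```

The resulting unified timestamps are 45:30, 105:20, and 247:45, matching the offsets listed above.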

Performance: Why It Scales

The Four Critical Optimizations

1. Multimodal Indexing (66% savings on repeat analysis)

TwelveLabs indexes videos once:

First analysis: 20s indexing + 25s analysis = 45s total

Repeat analysis (different brands): 0s indexing + 25s analysis = 25s total

Agencies often analyze the same event multiple times for different clients. Caching is crucial.

2. Parallel Processing (4x speedup)

Analyzing a 10-game tournament:

Sequential: 10 × 45s = 7.5 minutes

Parallel (4 workers): ~2 minutes

Speedup: 3.75x

3. Structured Generation (eliminates post-processing)

Traditional approach: Extract text → Regex parse → Validate → Structure TwelveLabs approach: Generate JSON → Parse

Time saved: 3-5 seconds per video

4. Progressive Updates (perceived performance boost)

Users see progress constantly:

Perceived wait time: 50% shorter (user engagement remains high)

Cost Economics

API Cost Breakdown

Cost structure:

TwelveLabs indexing: $0.72 per video (one-time, ~$0.008/min)

TwelveLabs Generate: $0.30 per analysis

OpenAI GPT-4: $0.15 per brand analyzed (3 brands = $0.45)

Total: $1.20 per video first analysis, $0.48 repeat

Business Model Economics

Target customer: Sports marketing agencies analyzing events for clients

Typical use case: Analyze 50 games/month for 10 brand clients

50 videos × $1.20 = $60 first analysis

9 additional brands × $0.48 = $21.60 repeat analyses

Total monthly cost: ~$82

Market pricing: Agencies charge $500-2,000 per event analysis

Gross margin: 95%+

The economics work because marginal cost per analysis stays low while value to clients remains extremely high (data-driven sponsorship optimization).

Technical Specifications

Processing Performance

Single video (90 min): 45 seconds total

Multi-video (4 games): 2 minutes total (parallel)

Accuracy Metrics

From production analysis of 150+ sports events:

Conclusion: Context is Currency

We started with a simple question: "Can AI measure not just how much brands appear, but how well they appear?"

The answer transformed sponsorship analytics. Instead of manual video review or simple object detection, we use temporal multimodal AI that comprehends:

What: Brand appears (visual detection)

When: During high-engagement moments (temporal understanding)

How: With primary prominence vs. background blur (quality assessment)

Why it matters: Celebration vs. timeout context (strategic value)

The key insights:

Multimodal first: Audio + visual + text captures 30-40% more sponsorship value than vision alone

Categorization matters: Ads vs. organic integration have fundamentally different ROI models

Context = value: A 5-second goal celebration appearance outperforms 30 seconds during timeouts

Parallel scales: Multi-video processing must maintain temporal continuity for unified timelines

AI augmentation: GPT-4 transforms quantitative metrics into strategic recommendations

The technology is mature. The business model works. The market needs it.

What started as "Can we detect logos in sports videos?" became "Can we measure and optimize the $70 billion global sponsorship market?"

The answer is yes. The foundation is here. The rest is scale.

Additional Resources

Live Application: https://tl-brc.netlify.app/

GitHub Codebase: https://github.com/mohit-twelvelabs/brandsponsorshippoc

TwelveLabs Documentation: Pegasus 1.2 Multimodal Engine

Analyze API Guide: Structured Responses

OpenAI GPT-4: Strategic analysis integration

Industry Research: Nielsen Sports Sponsorship Report 2024

Introduction

You're a brand manager who just invested $500,000 in a sports event sponsorship. Your logo is on jerseys, stadium signage, and you have commercial airtime during broadcasts. The event ends, and you receive a vague report: "Your brand appeared 47 times for 8 minutes total."

But the critical questions remain unanswered:

Were you visible during high-impact moments? (Goals, celebrations, replays)

How did your placements compare to competitors? (Market share of visual airtime)

What was the quality of exposure? (Primary focus vs. background blur)

Ad placements vs. organic integration? (Which performs better for brand recall?)

What should you change for next time? (Data-driven optimization)

This is why we built an AI-powered sponsorship analytics platform—a system that doesn't just count logo appearances, but actually understands sponsorship value through temporal context, placement quality, and competitive intelligence.

The key insight? Context + Quality + Timing = ROI. Traditional systems measure exposure time, but temporal multimodal AI tells you when, how, and why those seconds matter.

The Problem with Traditional Sponsorship Measurement

Here's what we discovered: Brand exposure isn't created equal. A 5-second logo appearance during a championship celebration is worth 10x more than 30 seconds in the background during a timeout. Traditional measurement misses this entirely.

Consider this scenario: You're measuring a Nike sponsorship in a basketball game. Legacy systems report:

Total exposure: 12 minutes ✓

Logo appearances: 34 ✓

Average screen size: 18% ✓

But these metrics ignore:

Temporal context: Was Nike visible during the game-winning shot replay (high engagement) or during timeouts (low engagement)?

Placement type: Jersey sponsor (organic) vs. commercial break (interruptive)?

Competitive landscape: How much airtime did Adidas get? What's Nike's share of voice?

Sentiment: Did the Nike logo appear during positive moments (celebrations) or negative ones (injuries)?

Traditional approaches would either:

Hire analysts to manually review hours of footage ($$$)

Accept incomplete data from simple object detection (misses context)

Rely on self-reported metrics from broadcast partners (unverified)

This system takes a different approach: multimodal understanding first, then measure precisely what matters.

System Architecture: The Big Picture

The analysis pipeline combines temporal video understanding, intelligent categorization, and strategic ROI analysis through six key stages:

Each stage builds on the previous, allowing us to optimize individual components while maintaining end-to-end coherence.

Stage 1: Multimodal Video Understanding with TwelveLabs

Why Multimodal Understanding Matters

The breakthrough was realizing that brands appear across modalities. A comprehensive sponsorship analysis must detect:

Visual: Logos, jerseys, stadium signage, product placements

Audio: Brand mentions in commentary, sponsored segment announcements

Text: On-screen graphics, lower thirds, sponsor cards

Single-modality systems miss 30-40% of sponsorship value. A jersey sponsor might not be clearly visible in every frame, but the commentator says "Nike jerseys looking sharp today"—that's brand exposure.

TwelveLabs Pegasus-1.2: All-in-One Multimodal Detection

We use TwelveLabs' Pegasus-1.2 engine because it processes all modalities simultaneously:

from twelvelabs import TwelveLabs from twelvelabs.indexes import IndexesCreateRequestModelsItem # Create video index with multimodal understanding index = client.indexes.create( index_name="sponsorship-roi-analysis", models=[ IndexesCreateRequestModelsItem( model_name="pegasus1.2", model_options=["visual", "audio"], ) ] ) # Upload video for analysis task = client.tasks.create( index_id="<YOUR_INDEX_ID>", video_file=video_file, language="en" )

The Power of Analyze API for Structured Data

Instead of generic search queries, we use TwelveLabs' Analyze API to extract structured brand data:

brand_analysis_prompt = """ Analyze this video for comprehensive brand sponsorship measurement. Focus on these brands: Nike, Adidas, Gatorade IMPORTANT: Categorize each appearance into: 1. AD PLACEMENTS ("ad_placement"): - CTV commercials, digital overlays, squeeze ads 2. IN-GAME PLACEMENTS ("in_game_placement"): - Jersey sponsors, stadium signage, product placements For EACH brand appearance, provide: - timeline: [start_time, end_time] in seconds - brand: exact brand name - type: "logo", "jersey_sponsor", "stadium_signage", "ctv_ad", etc. - sponsorship_category: "ad_placement" or "in_game_placement" - prominence: "primary", "secondary", "background" - context: "game_action", "celebration", "replay", etc. - sentiment_context: "positive", "neutral", "negative" - viewer_attention: "high", "medium", "low" Return ONLY a JSON array. """ # Generate structured analysis result = client.analyze( video_id="<YOUR_VIDEO_ID>", prompt=brand_analysis_prompt, temperature=0.1 # Low temperature for factual extraction ) # Parse structured JSON response brand_appearances = json.loads(result.data)

Why This Works

The Analyze API provides:

Temporal precision: Exact start/end timestamps for each appearance

Contextual understanding: Knows the difference between a celebration and a timeout

Structured output: Direct JSON parsing, no brittle regex or post-processing

Multi-brand tracking: Detects multiple brands in single frames automatically

The multimodal pipeline achieves 92%+ detection accuracy across all placement types while maintaining <5% false positive rates.

Stage 2: Intelligent Sponsorship Categorization

The Critical Distinction: Ads vs. Organic Integration

One of our most important architectural decisions was distinguishing ad placements from in-game placements. This matters because:

Ad placements (commercials, overlays) are interruptive and often skipped

In-game placements (jerseys, signage) are organic and can't be avoided

ROI models differ: Ads measure reach, organic measures integration

Automated Categorization Logic

The system automatically categorizes based on visual and temporal cues:

def categorize_sponsorship_placement(placement_type, context): """Categorize sponsorship into ad_placement or in_game_placement""" # Define categorization rules ad_placement_types = { 'digital_overlay', 'ctv_ad', 'overlay_ad', 'squeeze_ad', 'commercial' } in_game_placement_types = { 'logo', 'jersey_sponsor', 'stadium_signage', 'product_placement', 'audio_mention' } # Context-dependent decisions if context == 'commercial': return "ad_placement" if placement_type in ad_placement_types: return "ad_placement" # Default to organic in-game placement return "in_game_placement"

Why This Categorization Transforms ROI Analysis

With proper categorization, we can answer questions like:

For brands: "Should we invest more in jersey sponsorships or CTV ads?"

For agencies: "What's the optimal ad/organic mix for brand recall?"

For events: "How much organic integration can we offer?"

Industry research shows that in-game placements achieve 2.3x higher brand recall than traditional ads, but cost 1.8x more. Our system quantifies this trade-off.

Stage 3: Placement Effectiveness Analysis

The Five Dimensions of Placement Quality

Through analysis of thousands of sponsorship placements, we identified five factors that determine effectiveness:

Calculating Placement Effectiveness Score

The algorithm weighs multiple factors to produce a 0-100 effectiveness score:

def calculate_placement_effectiveness(brand_data, video_duration): """Calculate 0-100 placement effectiveness score""" metrics = { 'optimal_placements': 0, 'suboptimal_placements': 0, 'placement_score': 0.0 } # Analyze each placement for appearance in brand_data: start_time = appearance['timeline'][0] end_time = appearance['timeline'][1] duration = end_time - start_time # High-engagement moment detection is_optimal = any(keyword in appearance['description'].lower() for keyword in ['goal', 'celebration', 'replay', 'highlight', 'scoring', 'win']) # Primary visibility check is_prominent = appearance['prominence'] == 'primary' # Positive sentiment check is_positive = appearance['sentiment_context'] == 'positive' # High attention check is_high_attention = appearance['viewer_attention'] == 'high' # Calculate quality score (0-100) quality_factors = [is_optimal, is_prominent, is_positive, is_high_attention] quality_score = (sum(quality_factors) / len(quality_factors)) * 100 if quality_score >= 75: metrics['optimal_placements'] += 1 else: metrics['suboptimal_placements'] += 1 # Overall placement effectiveness (0-100) total_placements = metrics['optimal_placements'] + metrics['suboptimal_placements'] if total_placements > 0: metrics['placement_score'] = (metrics['optimal_placements'] / total_placements) * 100 return metrics

Missed Opportunity Detection

The system identifies high-value moments where brands could have appeared but didn't:

# Detect high-engagement moments in video high_engagement_moments = client.analyze( video_id=video_id, prompt=""" List ALL high-engagement moments with timestamps: - Goals/scores - Celebrations - Replays of key plays - Championship moments - Emotional peaks Return: [{"timestamp": 45.2, "description": "Game-winning goal"}] """ ) # Compare against brand appearances missed_opportunities = [] for moment in high_engagement_moments: # Check if brand was visible during this moment brand_visible = any( appearance['timeline'][0] <= moment['timestamp'] <= appearance['timeline'][1] for appearance in brand_appearances ) if not brand_visible: missed_opportunities.append({ 'timestamp': moment['timestamp'], 'description': moment['description'], 'potential_value': 'HIGH' })

Real example: A Nike-sponsored team scores a championship-winning goal at 87:34. The camera shows crowd reactions (87:34-87:50) where Nike signage is visible, then a close-up replay (87:51-88:05) where Nike jerseys are not in frame.

Result: Missed opportunity flagged—"Consider requesting replay angles that keep sponsored jerseys visible."
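The Nike example can be checked with a short sketch. It assumes match-clock strings in 'MM:SS' form and treats each moment as a span rather than a single timestamp; the helper names `to_seconds` and `overlaps` are hypothetical.

```python
def to_seconds(stamp):
    """Convert an 'MM:SS' match-clock string to seconds."""
    minutes, seconds = stamp.split(':')
    return int(minutes) * 60 + int(seconds)

def overlaps(span_a, span_b):
    """True if two (start, end) spans in seconds share at least one instant."""
    return span_a[0] <= span_b[1] and span_b[0] <= span_a[1]

# Nike signage is visible only during the crowd reaction
brand_spans = [(to_seconds('87:34'), to_seconds('87:50'))]

moments = [
    {'description': 'Goal and crowd reaction',
     'span': (to_seconds('87:34'), to_seconds('87:50'))},
    {'description': 'Close-up replay',
     'span': (to_seconds('87:51'), to_seconds('88:05'))},
]

missed = [
    m['description'] for m in moments
    if not any(overlaps(m['span'], b) for b in brand_spans)
]
print(missed)  # ['Close-up replay']
```

Comparing spans instead of single timestamps avoids flagging a moment as missed when the brand enters the frame a second or two after the moment begins.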

Below is how this looks in our UI for the built-in Ford example.

Stage 4: AI-Powered ROI Assessment

Beyond Simple Metrics: Strategic Intelligence

This is where OpenAI GPT-4 augments the quantitative data with strategic insights:

import json

def generate_ai_roi_insights(brand_name, appearances, placement_metrics, brand_intelligence):
    """Generate comprehensive ROI insights using GPT-4"""
    prompt = f"""
    You are a sponsorship ROI analyst. Analyze this brand's performance:

    BRAND: {brand_name}
    APPEARANCES: {len(appearances)}
    PLACEMENT EFFECTIVENESS: {placement_metrics['placement_score']}/100
    OPTIMAL PLACEMENTS: {placement_metrics['optimal_placements']}
    SUBOPTIMAL PLACEMENTS: {placement_metrics['suboptimal_placements']}
    MARKET CONTEXT: {brand_intelligence.get('industry', 'sports')}

    Provide analysis in this JSON structure:
    {{
        "placement_effectiveness_score": 0-100,
        "roi_assessment": {{
            "value_rating": "excellent|good|fair|poor",
            "cost_efficiency": 0-10,
            "exposure_quality": 0-10,
            "audience_reach": 0-10
        }},
        "recommendations": {{
            "immediate_actions": ["specific action 1", "action 2"],
            "future_strategy": ["strategic recommendation"],
            "optimal_moments": ["when to appear"],
            "avoid_these": ["what to avoid"]
        }},
        "executive_summary": "2-3 sentence strategic overview"
    }}
    """

    response = openai_client.chat.completions.create(
        model="gpt-4",
        messages=[
            {"role": "system", "content": "You are a data-driven sponsorship analyst."},
            {"role": "user", "content": prompt}
        ],
        temperature=0.3,
        max_tokens=1200
    )

    # Assumes the model returns bare JSON with no surrounding text
    return json.loads(response.choices[0].message.content)
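Chat models sometimes wrap JSON in a Markdown fence or add a sentence before it, which makes a bare `json.loads` call fail. The following is a minimal defensive parser, offered as a sketch; the helper name `parse_llm_json` is an assumption and is not part of the original codebase.

```python
import json
import re

FENCE = "`" * 3  # Markdown code-fence marker, built here to keep this example readable
FENCED_BLOCK = re.compile(FENCE + r"(?:json)?\s*(.*?)" + FENCE, re.DOTALL)

def parse_llm_json(text):
    """Parse JSON from a model reply, tolerating fences and surrounding prose."""
    cleaned = text.strip()

    # Prefer the contents of a fenced block if one is present
    fenced = FENCED_BLOCK.search(cleaned)
    if fenced:
        cleaned = fenced.group(1).strip()

    try:
        return json.loads(cleaned)
    except json.JSONDecodeError:
        # Fall back to the outermost {...} span; re-raise if there is none
        start, end = cleaned.find('{'), cleaned.rfind('}')
        if start == -1 or end <= start:
            raise
        return json.loads(cleaned[start:end + 1])

print(parse_llm_json('Here is the analysis: {"value_rating": "good"} Let me know.'))
```

In `generate_ai_roi_insights`, the final line could call such a helper instead of `json.loads` directly, with the caller deciding how to handle a reply that still fails to parse.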

Six Strategic Scoring Factors

The AI evaluates sponsorships across six dimensions:

Example Output:

{
  "placement_effectiveness_score": 78,
  "roi_assessment": {
    "value_rating": "good",
    "cost_efficiency": 7.5,
    "exposure_quality": 8.2,
    "audience_reach": 7.8
  },
  "recommendations": {
    "immediate_actions": [
      "Negotiate for jersey-visible replay angles in broadcast agreements",
      "Increase presence during halftime highlights when replay frequency peaks"
    ],
    "future_strategy": [
      "Focus budget on in-game signage near goal areas (highest replay frequency)",
      "Reduce commercial spend, increase organic integration budget by 30%"
    ],
    "optimal_moments": [
      "Goal celebrations (current coverage: 65%, target: 90%)",
      "Championship trophy presentations"
    ],
    "avoid_these": [
      "Timeout commercial slots (low engagement)",
      "Pre-game sponsorship announcements (viewership not peaked)"
    ]
  },
  "executive_summary": "Strong organic integration with 78% placement effectiveness. Nike achieved high visibility during 12 of 15 key moments, but missed 3 championship replays. Recommend shifting $150K from commercial slots to enhanced jersey/equipment presence for 2025."
}

Stage 5: Competitive Intelligence

Market Share of Visual Airtime

One of the most requested features: "How did we compare to competitors?"

def generate_competitive_analysis(detected_brands, video_context="sports event"):
    """Generate competitive landscape with market share"""
    # Even if only one brand detected, AI generates full competitive context
    competitive_prompt = f"""
    Analyze the competitive landscape for brands detected in a {video_context}.

    DETECTED BRANDS: {', '.join(detected_brands)}

    REQUIREMENTS:
    1. Include the detected brand(s) with "detected_in_video": true
    2. Add 4-7 major market competitors with "detected_in_video": false
    3. Market shares should reflect actual market position

    EXAMPLE: If Nike detected → include Adidas, Under Armour, Puma, New Balance

    Return:
    {{
        "market_category": "Athletic Footwear & Apparel",
        "total_market_size": "$180B globally",
        "competitors": [
            {{
                "brand": "Nike",
                "market_share": 38.5,
                "prominence": "High",
                "positioning": "Innovation and athlete partnerships",
                "detected_in_video": true
            }},
            {{
                "brand": "Adidas",
                "market_share": 22.3,
                "prominence": "High",
                "positioning": "European heritage, football dominance",
                "detected_in_video": false
            }}
        ]
    }}
    """

    response = openai_client.chat.completions.create(
        model="gpt-4",
        messages=[{"role": "user", "content": competitive_prompt}],
        temperature=0.3
    )

    return json.loads(response.choices[0].message.content)

Visualizing Competitive Performance

This reveals sponsorship inefficiencies: Nike has 38.5% market share but captured 65% of video airtime—excellent ROI. Adidas has 22.3% market share but 0% presence—missed opportunity.
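One way to put the airtime-versus-market-share comparison into numbers is sketched below. It assumes each detection carries a `brand` key alongside its `timeline`, and the `exposure_index` ratio is an illustrative metric rather than something the platform is confirmed to compute.

```python
def airtime_share(detections):
    """Return each brand's percentage of total detected on-screen seconds."""
    seconds = {}
    for detection in detections:
        start, end = detection['timeline']
        brand = detection['brand']
        seconds[brand] = seconds.get(brand, 0.0) + (end - start)
    total = sum(seconds.values())
    if total == 0:
        return {}
    return {brand: value / total * 100 for brand, value in seconds.items()}

def exposure_index(airtime_pct, market_share_pct):
    """Ratio above 1.0 means the brand got more airtime than its market share."""
    return airtime_pct / market_share_pct

# Hypothetical detections: 65 s of Nike, 35 s of another detected brand
detections = [
    {'brand': 'Nike', 'timeline': [0, 40]},
    {'brand': 'Nike', 'timeline': [100, 125]},
    {'brand': 'Gatorade', 'timeline': [50, 85]},
]

shares = airtime_share(detections)
print(shares['Nike'])                                   # 65.0
print(round(exposure_index(shares['Nike'], 38.5), 2))   # 1.69
print(exposure_index(0.0, 22.3))                        # 0.0 for an absent competitor
```

This simple sum double-counts seconds when two detections of the same brand overlap, so overlapping spans should be merged first if the detector can emit them.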

Stage 6: Multi-Video Parallel Processing

The Scalability Challenge

Analyzing a single 90-minute game takes ~30-45 seconds. But what about analyzing an entire tournament (10+ games)?

Sequential processing: 10 games × 45s = 7.5 minutes

Parallel processing (4 workers): 10 games in ~2 minutes
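A rough way to sanity-check these figures is a simple "wave" model, sketched here under the assumption that every game takes the same 45 seconds and that workers never sit idle waiting on the API. Real runs will vary.

```python
import math

def estimated_wall_time(num_videos, seconds_per_video, workers):
    """Idealized wall time: videos run in waves of `workers` at a time."""
    waves = math.ceil(num_videos / workers)
    return waves * seconds_per_video

sequential = estimated_wall_time(10, 45, workers=1)
parallel = estimated_wall_time(10, 45, workers=4)

print(sequential / 60)  # 7.5 minutes
print(parallel / 60)    # 2.25 minutes (3 waves of 45 s)
```

Ten games on four workers need three waves, so the model lands at a little over two minutes, in line with the estimate above.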

Parallel Architecture with Temporal Continuity

Implementation: ThreadPoolExecutor

from concurrent.futures import ThreadPoolExecutor, as_completed

def analyze_multiple_videos_with_progress(video_ids, job_id, selected_brands):
    """Analyze multiple videos in parallel with progress tracking"""
    max_workers = min(4, len(video_ids))  # Limit concurrent API calls

    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        # Submit all video analysis tasks
        future_to_video = {
            executor.submit(analyze_single_video_parallel, video_id, selected_brands): video_id
            for video_id in video_ids
        }

        results_by_video = {}
        completed = 0

        # Process as they complete (not in order)
        for future in as_completed(future_to_video):
            results_by_video[future_to_video[future]] = future.result()
            completed += 1

            # Update progress: 80% for analysis, 20% for combining
            progress = int((completed / len(video_ids)) * 80)
            update_progress(job_id, progress, f'Completed {completed}/{len(video_ids)} videos')

    # Restore the original video order so the temporal offsets line up
    individual_analyses = [results_by_video[video_id] for video_id in video_ids]

    # Combine results with temporal continuity
    return combine_video_analyses(individual_analyses)
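Because `as_completed` yields futures in finishing order rather than submission order, results need to be put back into playback order before any timeline offsets are applied. The sketch below shows one way to do that; `fake_analyze` is a hypothetical stand-in for the real per-video analysis call.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed

def fake_analyze(video_id, delay):
    """Stand-in for a real per-video analysis call (hypothetical)."""
    time.sleep(delay)
    return {'video_id': video_id}

def analyze_in_input_order(jobs, max_workers=4):
    """Run jobs in parallel, then return results in the order the jobs were given."""
    results = [None] * len(jobs)
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        future_to_index = {
            executor.submit(fake_analyze, video_id, delay): index
            for index, (video_id, delay) in enumerate(jobs)
        }
        for future in as_completed(future_to_index):
            # Store each result in the slot matching its submission position
            results[future_to_index[future]] = future.result()
    return results

# game_1 is the slowest, so it finishes last but must still come first
jobs = [('game_1', 0.2), ('game_2', 0.05), ('game_3', 0.1)]
ordered = analyze_in_input_order(jobs)
print([result['video_id'] for result in ordered])  # ['game_1', 'game_2', 'game_3']
```

`executor.map` would also preserve input order, but it does not expose completion events, which the progress updates rely on.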

Temporal Offset for Unified Timelines

The critical innovation: maintaining temporal continuity across videos.

def combine_video_analyses(individual_analyses):
    """Combine analyses with temporal offset for unified timeline"""
    cumulative_duration = 0
    all_detections = []

    for analysis in individual_analyses:
        video_duration = analysis['summary']['video_duration_minutes'] * 60

        # Offset all timestamps by cumulative duration
        for detection in analysis['raw_detections']:
            original_timeline = detection['timeline']
            detection['timeline'] = [
                original_timeline[0] + cumulative_duration,
                original_timeline[1] + cumulative_duration
            ]
            all_detections.append(detection)

        # Update cumulative duration for next video
        cumulative_duration += video_duration

    return {
        'combined_duration': cumulative_duration,
        'raw_detections': all_detections  # Unified timeline
    }

Example:

Video 1 (0-90 min): Nike appears at 45:30

Video 2 (90-180 min): Nike appears at 15:20 → offset to 105:20

Video 3 (180-270 min): Nike appears at 67:45 → offset to 247:45

The timeline chart displays all three appearances on a single continuous axis.
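The three offsets in this example can be reproduced with a few lines. This is a minimal sketch that works on 'MM:SS' strings for readability; the helper names are hypothetical and each video is assumed to be exactly 90 minutes long.

```python
def to_seconds(stamp):
    """Convert an 'MM:SS' string to seconds."""
    minutes, seconds = stamp.split(':')
    return int(minutes) * 60 + int(seconds)

def to_stamp(total_seconds):
    """Convert seconds back to an 'MM:SS' string (minutes may exceed 59)."""
    minutes, seconds = divmod(int(total_seconds), 60)
    return f'{minutes}:{seconds:02d}'

def unified_stamps(videos):
    """videos: list of (duration_minutes, [local 'MM:SS' stamps]) in playback order."""
    offset = 0
    unified = []
    for duration_minutes, stamps in videos:
        unified.extend(to_stamp(to_seconds(stamp) + offset) for stamp in stamps)
        offset += duration_minutes * 60  # Shift the next video by this one's length
    return unified

print(unified_stamps([(90, ['45:30']), (90, ['15:20']), (90, ['67:45'])]))
# ['45:30', '105:20', '247:45']
```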

Performance: Why It Scales

The Four Critical Optimizations

1. Multimodal Indexing (~44% faster on repeat analysis)

TwelveLabs indexes videos once:

First analysis: 20s indexing + 25s analysis = 45s total

Repeat analysis (different brands): 0s indexing + 25s analysis = 25s total

Agencies often analyze the same event multiple times for different clients. Caching is crucial.
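The "index once, analyze many times" pattern can be sketched as a small cache keyed by the video source. Everything here is illustrative: `IndexCache` and `fake_index` are hypothetical names, and a real deployment would likely persist the mapping in a database rather than in memory.

```python
class IndexCache:
    """Remember the indexed video ID per source so indexing runs only once."""

    def __init__(self, index_fn):
        self._index_fn = index_fn
        self._video_ids = {}

    def get_video_id(self, source):
        # Only pay the indexing cost the first time a source is seen
        if source not in self._video_ids:
            self._video_ids[source] = self._index_fn(source)
        return self._video_ids[source]

index_calls = []

def fake_index(source):
    """Hypothetical stand-in for the slow, one-time indexing step."""
    index_calls.append(source)
    return f'video-{len(index_calls)}'

cache = IndexCache(fake_index)
first = cache.get_video_id('final.mp4')    # Triggers indexing
second = cache.get_video_id('final.mp4')   # Served from the cache

print(first == second, len(index_calls))  # True 1
```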

2. Parallel Processing (3.75x speedup)

Analyzing a 10-game tournament:

Sequential: 10 × 45s = 7.5 minutes

Parallel (4 workers): ~2 minutes

Speedup: 3.75x

3. Structured Generation (eliminates post-processing)

Traditional approach: Extract text → Regex parse → Validate → Structure

TwelveLabs approach: Generate JSON → Parse

Time saved: 3-5 seconds per video

4. Progressive Updates (perceived performance boost)

Users see progress constantly:

Perceived wait time: 50% shorter (user engagement remains high)

Cost Economics

API Cost Breakdown

Cost structure:

TwelveLabs indexing: $0.72 per video (one-time, ~$0.008/min)

TwelveLabs Generate: $0.30 per analysis

OpenAI GPT-4: $0.15 per brand analyzed (3 brands = $0.45)

Total: ~$1.20 per video for a first single-brand analysis, ~$0.48 for a repeat
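The per-video totals can be rebuilt from the itemized rates with a small calculator. This is a sketch that works in integer cents to avoid floating-point rounding; the function name is hypothetical and the rates are the ones listed above.

```python
INDEXING_CENTS = 72        # One-time, per video
GENERATE_CENTS = 30        # Per analysis
GPT4_CENTS_PER_BRAND = 15  # Per brand analyzed

def analysis_cost_cents(num_brands, already_indexed):
    """Cost of one analysis run in cents, skipping indexing on repeats."""
    cost = GENERATE_CENTS + GPT4_CENTS_PER_BRAND * num_brands
    if not already_indexed:
        cost += INDEXING_CENTS
    return cost

print(analysis_cost_cents(1, already_indexed=False))  # 117 -> $1.17
print(analysis_cost_cents(1, already_indexed=True))   # 45  -> $0.45
print(analysis_cost_cents(3, already_indexed=False))  # 147 -> $1.47
```

For a single brand, the itemized rates come to $1.17 for a first analysis and $0.45 for a repeat, close to the rounded totals quoted above. Analyzing three brands in one run would come to $1.47.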

Business Model Economics

Target customer: Sports marketing agencies analyzing events for clients

Typical use case: Analyze 50 games/month for 10 brand clients

50 videos × $1.20 = $60 first analysis

9 additional brands × 50 videos × $0.48 = $216 repeat analyses

Total monthly cost: ~$276

Market pricing: Agencies charge $500-2,000 per event analysis

Gross margin: 95%+
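The margin claim can be checked with simple arithmetic. The sketch below assumes all 50 games are billed once at the low end of the quoted price range ($500) and counts only API spend as cost; both are simplifying assumptions.

```python
def gross_margin_pct(revenue, cost):
    """Gross margin as a percentage of revenue."""
    return 100 * (revenue - cost) / revenue

def max_cost_for_margin(revenue, margin_pct):
    """Largest cost that still achieves the target margin."""
    return revenue * (100 - margin_pct) / 100

monthly_revenue = 50 * 500  # 50 events at $500 each

print(max_cost_for_margin(monthly_revenue, 95))  # 1250.0
print(gross_margin_pct(monthly_revenue, 1250))   # 95.0
```

Under these assumptions, monthly API costs could rise to $1,250 before the margin dropped below 95%.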

The economics work because marginal cost per analysis stays low while value to clients remains extremely high (data-driven sponsorship optimization).

Technical Specifications

Processing Performance

Single video (90 min): 45 seconds total

Multi-video (10 games, 4 workers): ~2 minutes total (parallel)

Accuracy Metrics

From production analysis of 150+ sports events:

Conclusion: Context is Currency

We started with a simple question: "Can AI measure not just how much brands appear, but how well they appear?"

The answer transformed sponsorship analytics. Instead of manual video review or simple object detection, we use temporal multimodal AI that comprehends:

What: Brand appears (visual detection)

When: During high-engagement moments (temporal understanding)

How: With primary prominence vs. background blur (quality assessment)

Why it matters: Celebration vs. timeout context (strategic value)

The key insights:

Multimodal first: Audio + visual + text captures 30-40% more sponsorship value than vision alone

Categorization matters: Ads vs. organic integration have fundamentally different ROI models

Context = value: A 5-second goal celebration appearance outperforms 30 seconds during timeouts

Parallel scales: Multi-video processing must maintain temporal continuity for unified timelines

AI augmentation: GPT-4 transforms quantitative metrics into strategic recommendations

The technology is mature. The business model works. The market needs it.

What started as "Can we detect logos in sports videos?" became "Can we measure and optimize the $70 billion global sponsorship market?"

The answer is yes. The foundation is here. The rest is scale.

Additional Resources

Live Application: https://tl-brc.netlify.app/

GitHub Codebase: https://github.com/mohit-twelvelabs/brandsponsorshippoc

TwelveLabs Documentation: Pegasus 1.2 Multimodal Engine

Analyze API Guide: Structured Responses

OpenAI GPT-4: Strategic analysis integration

Industry Research: Nielsen Sports Sponsorship Report 2024


© 2021-2026 TwelveLabs, Inc. All Rights Reserved
