Tutorial

Building An Enterprise Video Analysis Agent on AWS AgentCore with TwelveLabs and Strands Agent

Nathan Che

In this tutorial, you will learn about TwelveLabs’ latest integration into Strands Agent, an AWS-native AI agent building platform that helps developers bring agents to production in seconds. Not only will you learn key technical concepts, but you will also apply them by building and deploying an AI agent on AWS AgentCore that directly interacts with tools from Slack, TwelveLabs, and the Windows/Mac operating system.

Dec 28, 2025

15 Minutes

Introduction

From an engineer’s daily standup on Zoom to a recruiter’s 5th candidate call of the day, any working professional knows how frequent and vital meetings are in everyday work. In fact, according to leading meeting platforms like Zoom, the average employee spends nearly 392 hours in meetings per year. With all those meeting video archives, notes, and easily forgotten to-do lists, employees and enterprises are often left with unproductive post-meeting tasks and videos.

What if there was a way to easily search through 1000+ hours of this video content to find exact moments and find personalized answers to your meeting content? Not only that, what if these insights could then be immediately provided to your entire enterprise through popular communication platforms like Slack?

Thanks to the latest integration of TwelveLabs into AWS Strands Agent, this is no longer a dream. In fact, in today’s tutorial we are going to build this exact feature using AWS AgentCore, TwelveLabs, and ElectronJS.

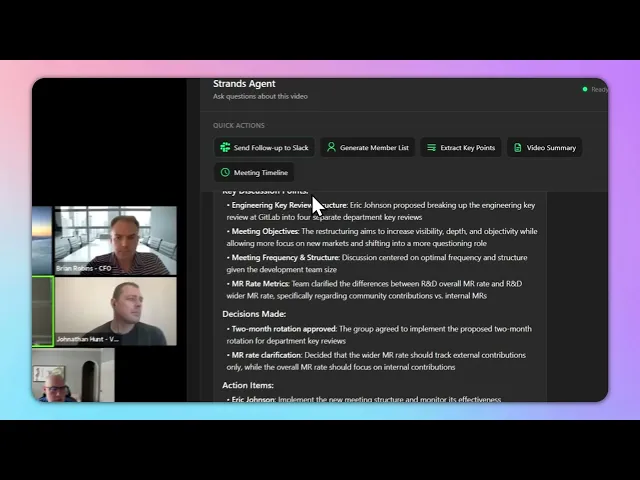

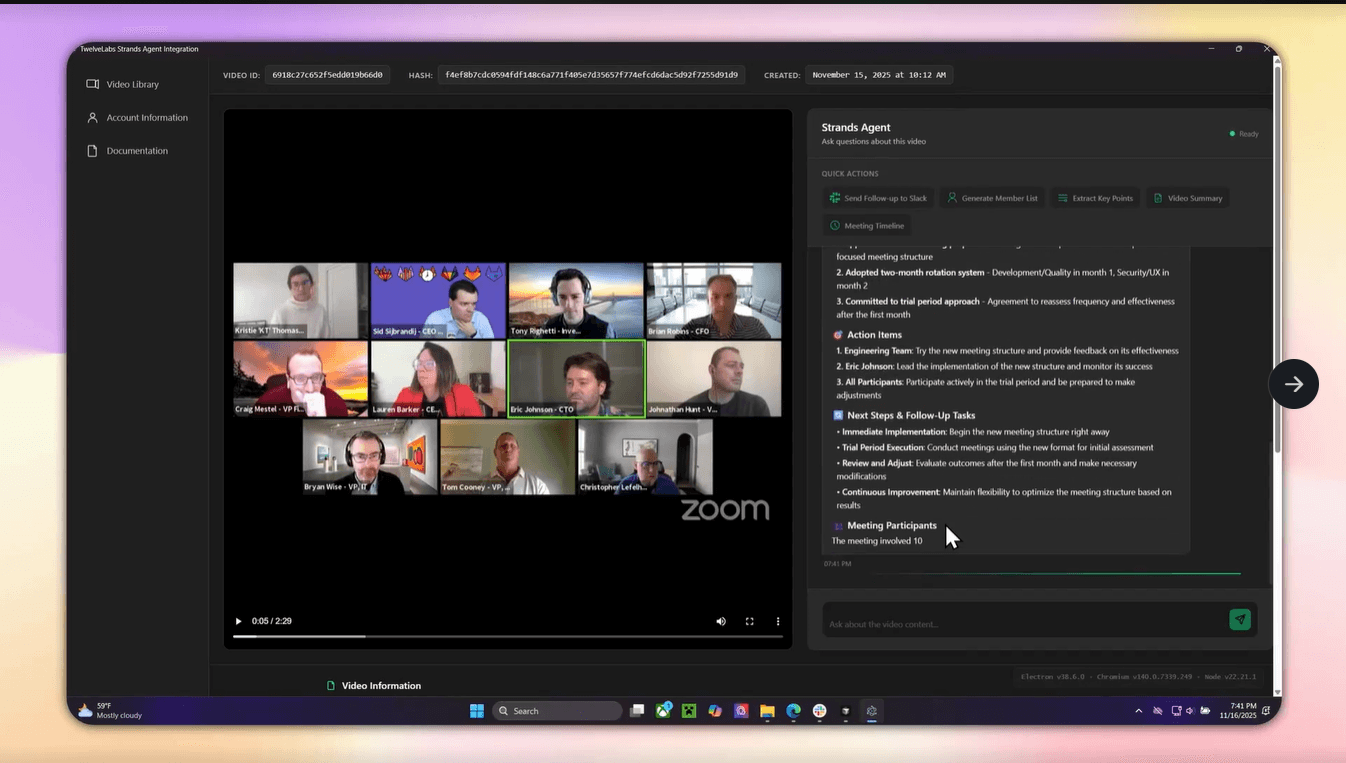

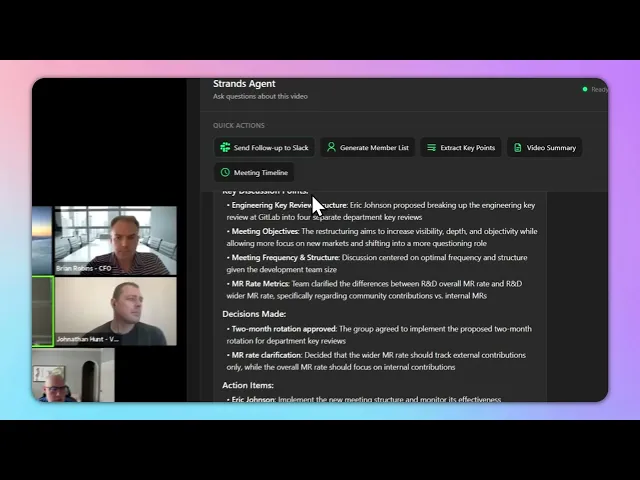

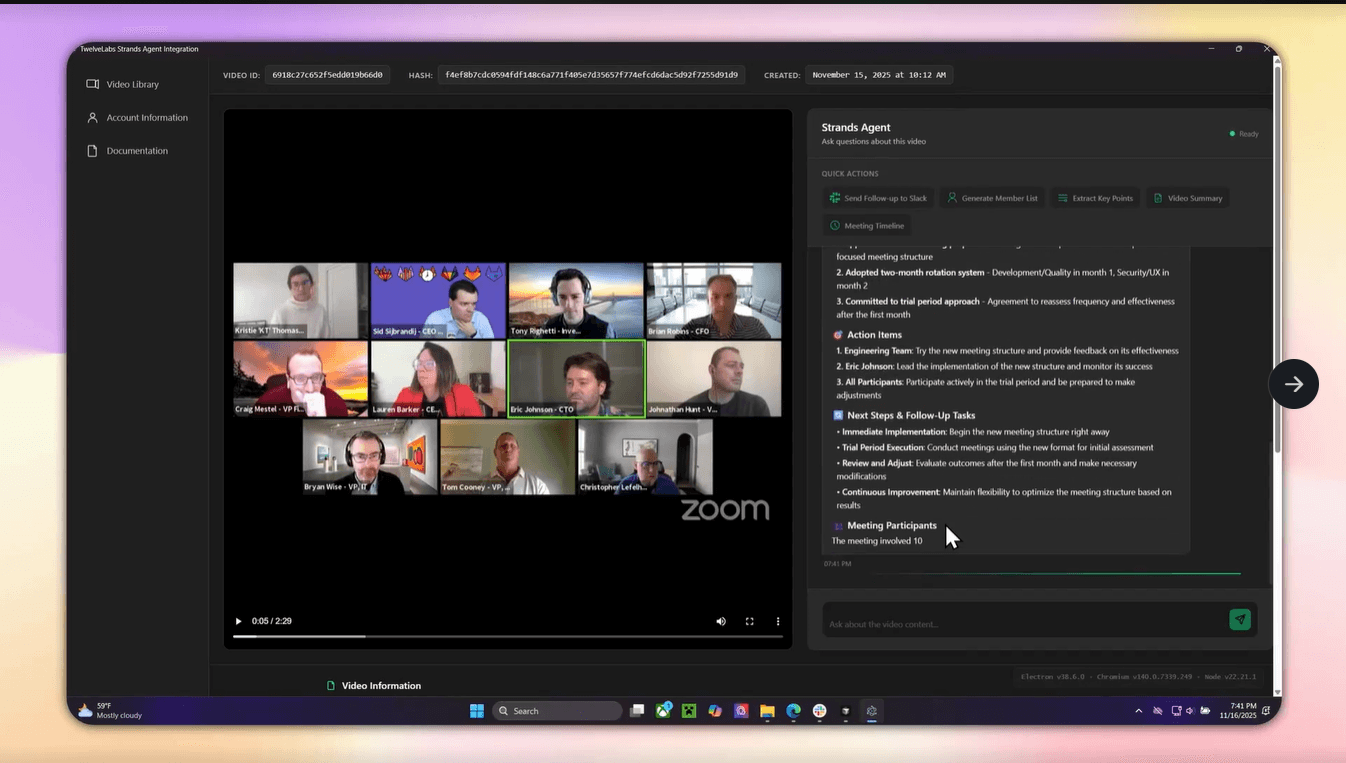

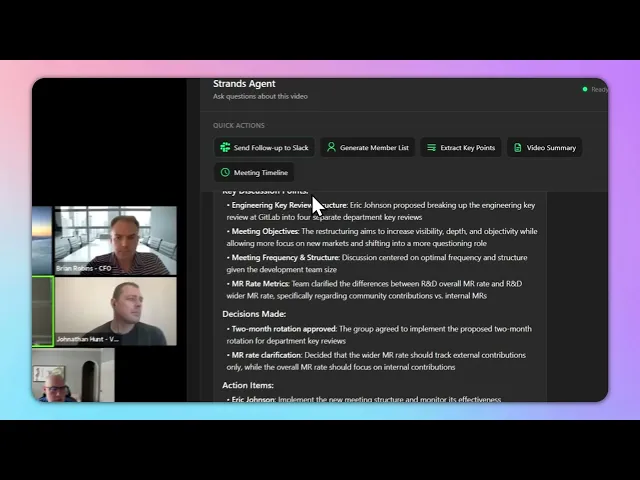

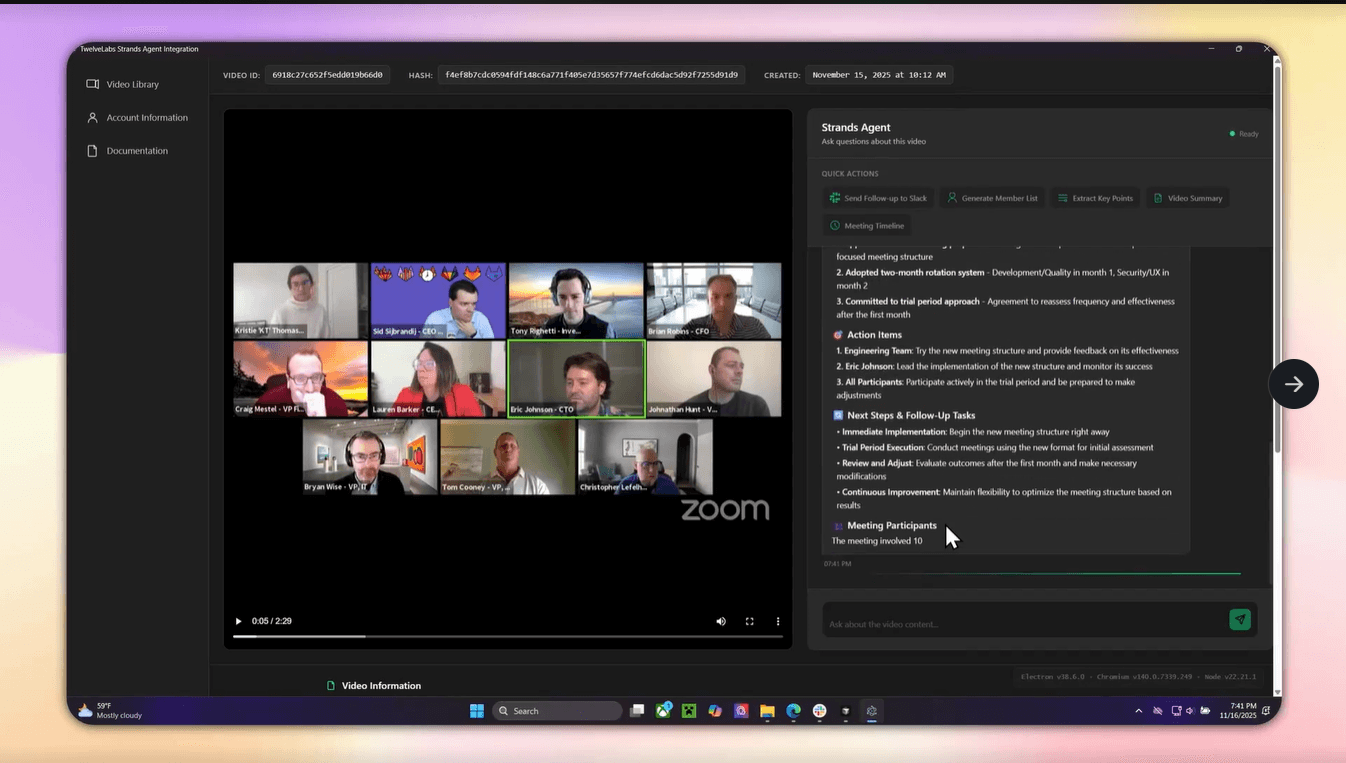

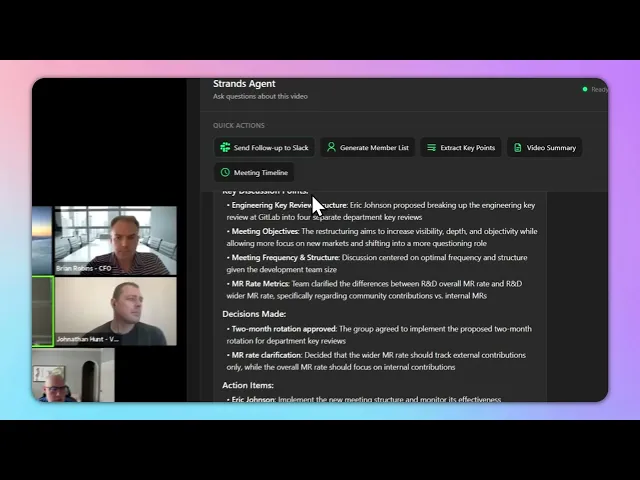

Application Demo

Before we begin coding, let’s quickly preview what we’ll be building.

If you’d like to try it on your own macOS or Windows device, feel free to download the latest release or preview the codebase below.

Download Latest Release: https://github.com/nathanchess/twelvelabs-agentcore-demo/releases/tag/v1.0.0

GitHub Codebase: https://github.com/nathanchess/twelvelabs-agentcore-demo

Now that we know what we’re building, let’s get started on building it ourselves! 😊

Learning Objectives

In this tutorial you will:

Deploy a production ready AI agent on AWS AgentCore, leveraging different LLM providers within the AWS ecosystem such as AWS Bedrock, OpenAI, Ollama, and more.

Build custom tools to allow your AI agent to interact with external services beyond the Strands Tools.

Integrate external tooling with Slack and TwelveLabs into your AI agent with pre-built tools.

Add observability to AI agents, allowing precise cost estimation and debug logs, using the AWS ecosystem and services like CloudWatch.

Compile your own cross-platform desktop application using ElectronJS.

Prerequisites

Node.JS 20+: Node.js — Download Node.js®

Bundled with node package manager (npm)

TwelveLabs API Key: Authentication | TwelveLabs

Python 3.8+: Download Python | Python.org

AWS Access Key: Credentials - Boto3 1.40.12 documentation

Slack Bot & App Token: https://docs.slack.dev/authentication/tokens/

Both tokens are auto-generated when you create a Slack bot via the Slack API.

AWS Console Account and permission to provision AgentCore, Bedrock, and CloudWatch.

Intermediate understanding of Python and JavaScript.

Building the Agent with Strands Agent

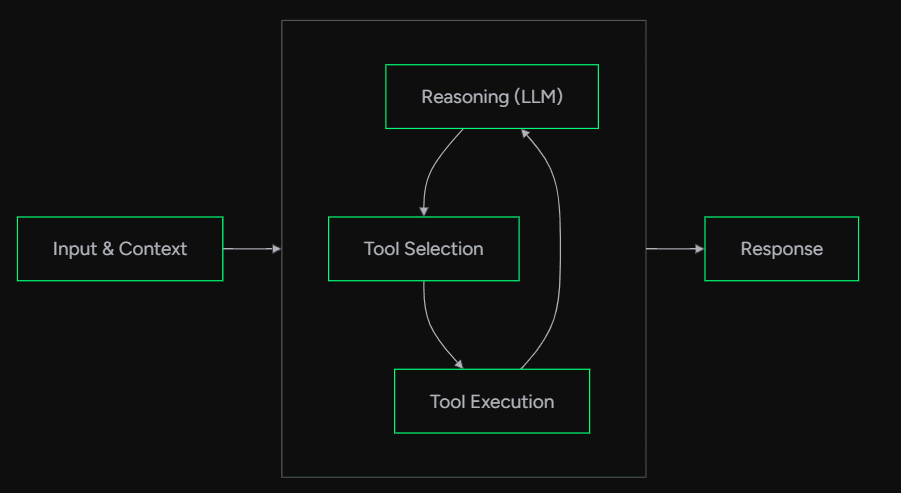

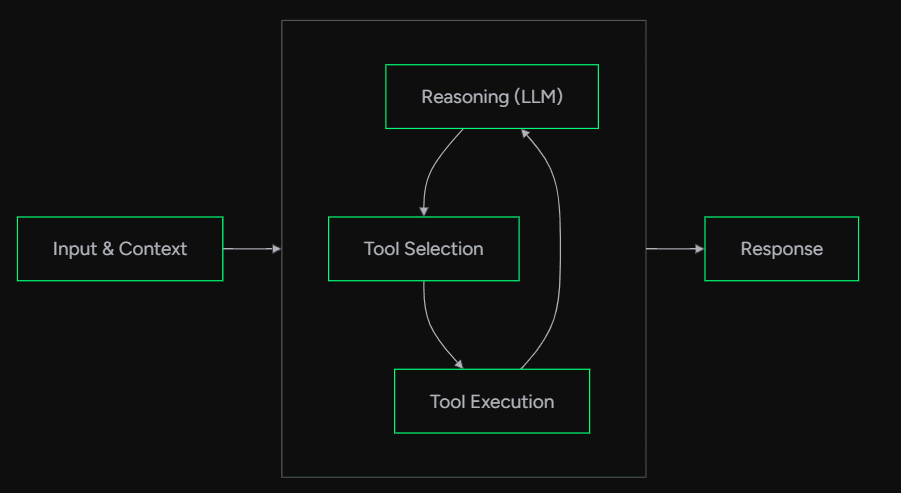

Before integrating features like the Slack bot or TwelveLabs’ video intelligence models, it’s important to understand the underlying mechanics behind AI agents. According to the Strands Agent documentation, an AI agent is primarily an LLM with an additional orchestration layer. This orchestration layer allows an LLM to take action for a variety of reasons, including finding information, querying databases, running code, and more!

💡 This entire orchestration layer is known as the Agent Loop.

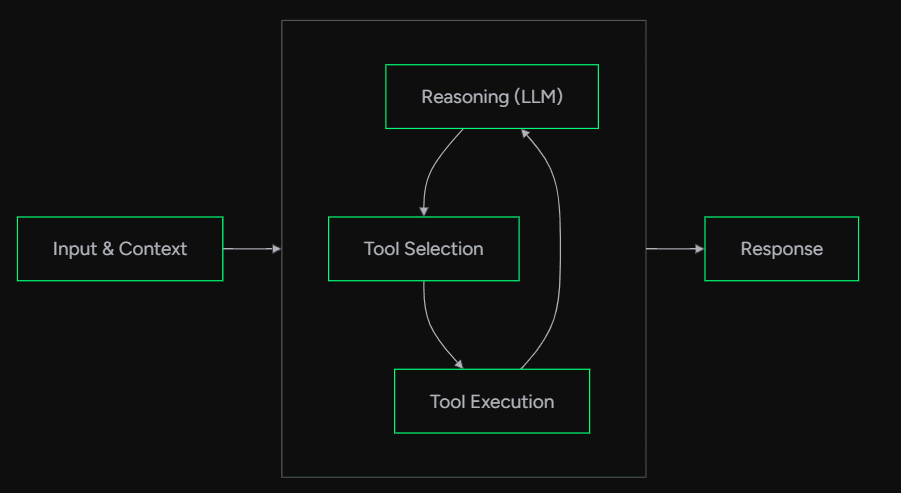

Figure 1: Agent Loop Figure from AWS Strands

💡 Tool: A direct function call or API, described in a format that lets the reasoning LLM invoke a specific action based on input and context.

In the figure above, we see that there is an additional box in the middle containing tool execution, tool selection, and reasoning (LLM). Each of these steps serves a vital purpose:

Reasoning (LLM): Decides whether a tool needs to be called for the given input and, if so, which one.

Tool Selection: Finds the requested tool based on the agent configuration.

Tool Execution: Runs the tool and passes the result back to the reasoning LLM to decide on next steps.
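To make the three steps concrete, here is a minimal sketch of an agent loop in plain Python. It is not how Strands Agent is implemented: the `fake_reasoning_llm` function, the `TOOLS` registry, and the toy `calculator` tool are all hypothetical stand-ins I made up so the example runs without any model or API key.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical tool registry: tool name -> plain Python function.
TOOLS: Dict[str, Callable[[str], str]] = {
    # Toy calculator that only understands "a + b + ..." expressions.
    "calculator": lambda expr: str(sum(int(part) for part in expr.split("+"))),
}


def fake_reasoning_llm(history: List[str]) -> Tuple[Optional[str], str]:
    """Stand-in for the reasoning LLM (assumption, not a real model call).

    Returns (tool_name, argument) to request a tool call, or
    (None, final_answer) when no further tool is needed.
    """
    last = history[-1]
    if last.startswith("user:") and "+" in last:
        return "calculator", last[len("user:"):].strip()
    if last.startswith("tool:"):
        return None, f"The answer is {last[len('tool:'):].strip()}."
    return None, "I can answer that without a tool."


def agent_loop(prompt: str, max_steps: int = 5) -> str:
    """Run reasoning -> tool selection -> tool execution until an answer appears."""
    history = [f"user: {prompt}"]
    for _ in range(max_steps):
        tool_name, text = fake_reasoning_llm(history)  # Reasoning (LLM)
        if tool_name is None:
            return text                                # No tool needed: final answer
        tool = TOOLS[tool_name]                        # Tool selection
        result = tool(text)                            # Tool execution
        history.append(f"tool: {result}")              # Feed the result back to the LLM
    return "Stopped: step limit reached."


print(agent_loop("2 + 40"))
```

The `max_steps` cap is a design choice worth keeping in any real loop, since a model that keeps requesting tools would otherwise never return.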

You may now be asking how difficult it would be to build such a framework. How can the reasoning LLM recognize tools and, more importantly, call them on demand? How can one properly describe a tool so that the LLM can interpret its meaning? These are all difficult and complex subtopics that, fortunately, AWS Strands Agent handles for us.

Though explaining each concept is beyond the scope of this blog, I highly recommend learning these mechanisms in depth via the Strands Agent codebase: https://github.com/strands-agents.

With this framework, creating an Agent is as simple as a few lines:

```python
from bedrock_agentcore.runtime import BedrockAgentCoreApp
from strands import Agent

app = BedrockAgentCoreApp()
agent = Agent()

@app.entrypoint
def invoke(payload):
    """Process user input and return a response"""
    user_message = payload.get("prompt", "Hello")
    result = agent(user_message)
    return {"result": result.message}

if __name__ == "__main__":
    app.run()
```

With these simple lines, you have just created an AI agent! That said, it acts much like a standard LLM because we haven’t provisioned any tools yet, so it can only handle question-and-answer tasks.
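If you want to poke at the agent while it runs on your machine, a request like the one sketched below should work. This is a sketch under assumptions: I am assuming the app listens on port 8080 and accepts POST requests at `/invocations`, which is my understanding of the AgentCore runtime contract, so double-check both against the AgentCore documentation. The helper names `build_invocation` and `invoke_local` are mine, not part of any SDK.

```python
import json
import urllib.request

# Assumption: the agent was started locally (python agent.py) and listens
# on port 8080 at the /invocations path.
LOCAL_URL = "http://localhost:8080/invocations"


def build_invocation(prompt: str, url: str = LOCAL_URL) -> urllib.request.Request:
    """Build a POST request whose JSON body matches payload.get("prompt")."""
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def invoke_local(prompt: str) -> dict:
    """Send the request and decode the JSON reply (needs a running agent)."""
    with urllib.request.urlopen(build_invocation(prompt), timeout=60) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # Only builds and prints the request, so it is safe to run offline.
    request = build_invocation("Hello")
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
```

Call `invoke_local("Hello")` once the agent is running; the `"prompt"` key is the one the entrypoint reads with `payload.get("prompt", "Hello")`.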

Adding TwelveLabs to Strands Agent

Great, now we have a fully working AI Agent that excels at answering questions given input and context. However, as you test around more, you’ll find there are several limitations:

Cannot handle video data or really any other data format beyond strings properly.

Confined to just Q&A tasks.

Lack of observability in what the Reasoning LLM thinks and how it decides, which leads to poor debugging of AI agents in production. (You don’t know what’s really happening 😲)

To expand the AI agent’s capabilities, we must now introduce tools into our code! As mentioned before, tools tend to be direct functions or API calls that the LLM can access. In Strands Agent, they can be defined and provided to the AI agent in two ways:

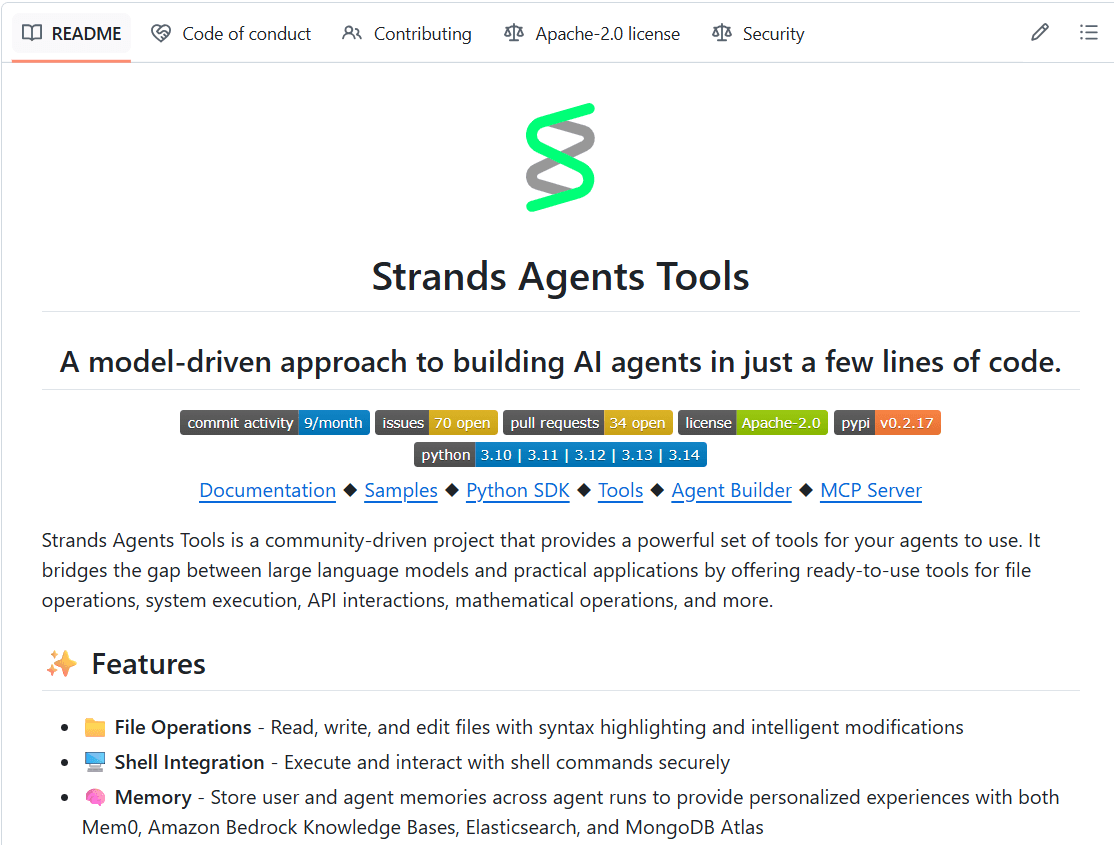

1 - Default tools provided by strands_tools Python package:

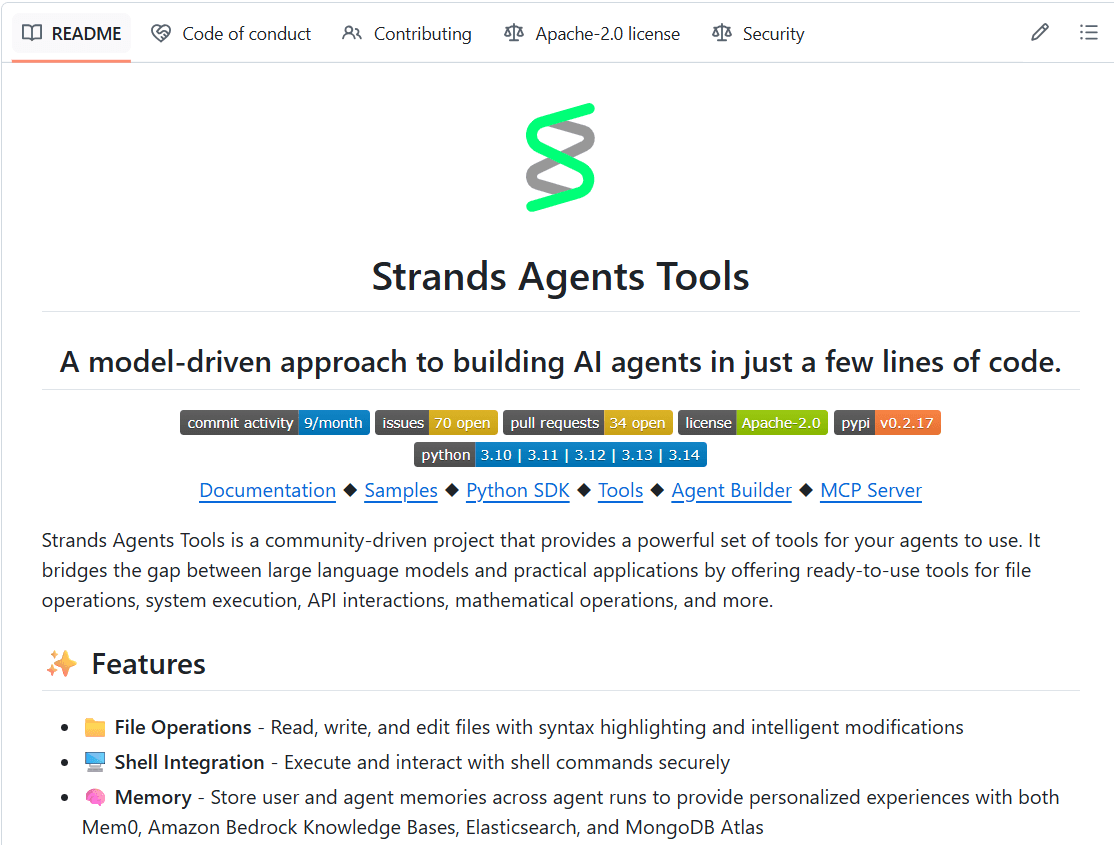

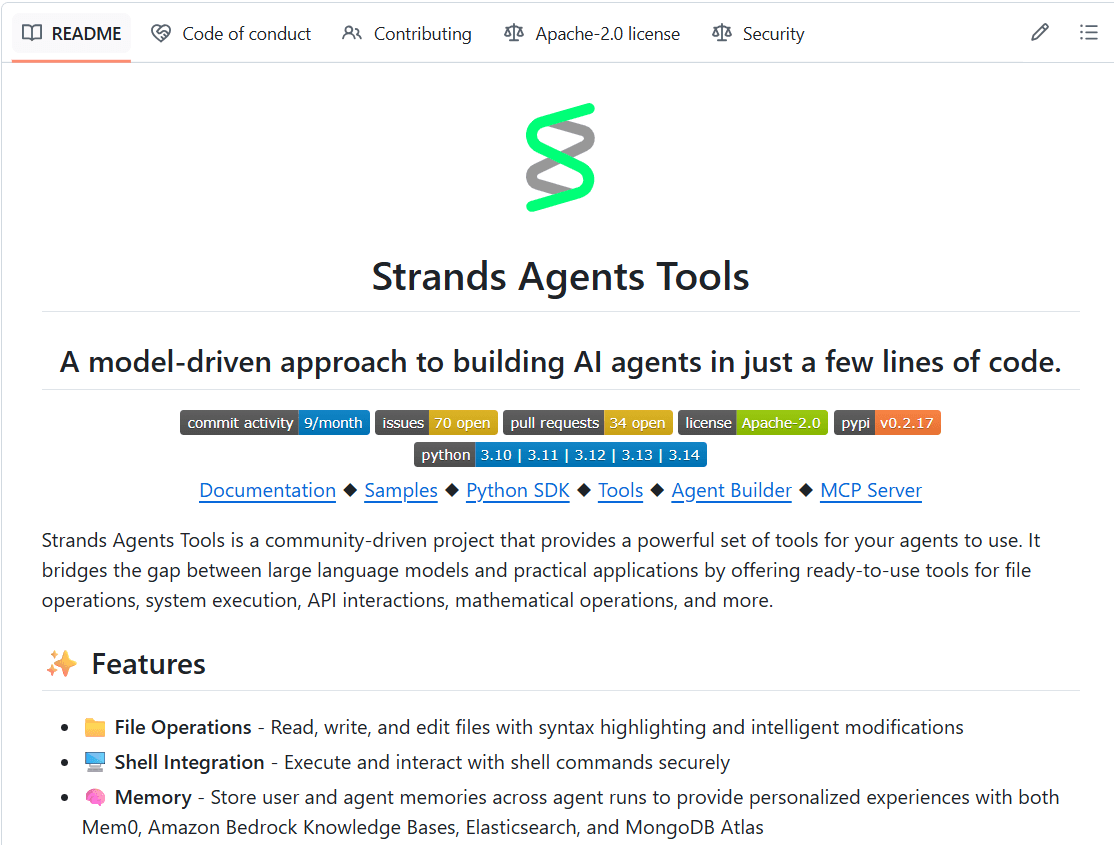

Figure 2: Strands Agent Tools Documentation

Strands Agent provides more than 20 tools by default that you can add to your AI agent! These tools range from simple file and shell operations all the way to direct platform integrations with Slack, TwelveLabs, and AWS.

In this project we will use the following tools:

TwelveLabs: Provides the base video intelligence with Pegasus and the new Marengo 3.0 model.

Slack: Lets us interact directly with our enterprise workspace through a Slack bot.

Note: Credentials must be provided via the Slack Bot Token and Slack App Token.

Environment: Simple tool that sets environment variables, which is key to AgentCore session handling.

Adding these tools is just as simple as creating the agent, with a few lines of code:

```python
from strands import Agent
from strands_tools import calculator, file_read, shell

# Add tools to our agent
agent = Agent(
    tools=[calculator, file_read, shell]
)

# Agent will automatically determine when to use the calculator tool
agent("What is 42 ^ 9")

print("\n\n")  # Print new lines

# Agent will use the shell and file reader tool when appropriate
agent("Show me the contents of a single file in this directory")
```

As you might notice in the code snippet above, we simply import the tools we want from strands_tools, then pass them as an additional parameter to Agent(). Just like that, you have expanded your agent’s capabilities so that it can now take action! The entire agent loop described above still happens in the background, but now it can call the calculator, file_read, and shell tools if needed!

2 - Custom tooling built in Python with Strands Agent:

Though we will not need to build custom tools for our application, it’s important to note that Strands Agent provides a helpful framework for building your own. You define not only the function itself but also the metadata associated with it, so that the function can be properly referenced.

```python
from strands import tool

@tool
def weather_forecast(city: str, days: int = 3) -> str:
    """Get weather forecast for a city.

    Args:
        city: The name of the city
        days: Number of days for the forecast
    """
    return f"Weather forecast for {city} for the next {days} days..."
```

As seen in the code snippet above, a tool can be created from a pre-existing function by adding the @tool decorator above it. The docstring is then used as metadata that the reasoning LLM interprets when selecting a tool.
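To give a feel for how a docstring and type hints can become tool metadata, here is a small sketch using Python’s standard `inspect` module. It is not the actual `@tool` implementation from Strands Agent; `describe_tool` and the shape of the dictionary it returns are hypothetical, chosen only to illustrate the idea.

```python
import inspect
from typing import Any, Callable, Dict


def describe_tool(func: Callable[..., Any]) -> Dict[str, Any]:
    """Collect a name, description, and parameter list from a function.

    Hypothetical helper: the returned layout is an illustration, not the
    schema that Strands Agent sends to the model.
    """
    doc = inspect.getdoc(func) or ""
    summary = doc.splitlines()[0] if doc else ""
    params = {}
    for name, param in inspect.signature(func).parameters.items():
        annotation = param.annotation
        params[name] = {
            # Use the class name for simple annotations such as str or int.
            "type": getattr(annotation, "__name__", str(annotation)),
            # A parameter without a default value must be supplied by the LLM.
            "required": param.default is inspect.Parameter.empty,
        }
    return {"name": func.__name__, "description": summary, "parameters": params}


def weather_forecast(city: str, days: int = 3) -> str:
    """Get weather forecast for a city.

    Args:
        city: The name of the city
        days: Number of days for the forecast
    """
    return f"Weather forecast for {city} for the next {days} days..."


spec = describe_tool(weather_forecast)
print(spec)
```

This is why a clear first docstring line and accurate type hints matter: they are the only description of the tool that the reasoning LLM gets to see.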

With those two methods in mind, let’s go ahead and implement the following tools in our own AI Agent!

```python
import json
import os
import asyncio

from dotenv import load_dotenv
load_dotenv()

from bedrock_agentcore.runtime import BedrockAgentCoreApp
from strands import Agent
from strands_tools import environment
from custom_tools import chat_video, search_video, get_slack_channel_ids, get_video_index, slack, fetch_video_url

os.environ["BYPASS_TOOL_CONSENT"] = "true"
os.environ["STRANDS_SLACK_AUTO_REPLY"] = "true"
os.environ["STRANDS_SLACK_LISTEN_ONLY_TAG"] = ""

def get_tools():
    return [slack, environment, chat_video, search_video, get_slack_channel_ids, get_video_index, fetch_video_url]

app = BedrockAgentCoreApp()
agent = Agent(
    tools=get_tools()
)

# Track if socket mode has been started to avoid multiple starts
_socket_mode_started = False

@app.entrypoint
async def invoke(payload):
    """
    Process system request directly and ONLY from Electron app.
    """
    global _socket_mode_started
    system_message = payload.get("prompt")

    # Process the agent stream (independent of socket mode)
    stream = agent.stream_async(system_message)
    async for event in stream:
        if "data" in event:
            yield event['data']

if __name__ == '__main__':
    app.run()
```

In the code snippet above, we have loaded the environment tool from strands_tools, along with the following tools imported through the project’s custom_tools module:

TwelveLabs: chat_video, search_video

Slack: slack, get_slack_channel_ids

💡These tools are the official integrations from their respective teams, hence I highly recommend diving deeper into the Strands Tools repository to see how production-grade tools are written for AI agents!

More importantly, thanks to the latest TwelveLabs integration, you can now deploy production-grade AI agents that interact with your video data in a single line of code. More information on our specific integrations can be found in this blog: https://www.twelvelabs.io/blog/twelve-labs-and-strands-agents.
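The streaming entrypoint shown earlier forwards only the events that carry a "data" key and ignores everything else. The sketch below reproduces that filtering pattern with a fake stream so that it runs without a model. The event shapes inside `fake_agent_stream` are invented for illustration and are not guaranteed to match what `agent.stream_async` emits.

```python
import asyncio
from typing import Any, AsyncIterator, Dict, List


async def fake_agent_stream() -> AsyncIterator[Dict[str, Any]]:
    """Hypothetical stand-in for agent.stream_async(...)."""
    events = [
        {"init_event_loop": True},                       # bookkeeping event, no text
        {"data": "The meeting "},                        # text chunk
        {"current_tool_use": {"name": "search_video"}},  # tool activity, no text
        {"data": "starts at 00:42."},                    # text chunk
    ]
    for event in events:
        await asyncio.sleep(0)  # yield control, as a real network stream would
        yield event


async def text_chunks(stream: AsyncIterator[Dict[str, Any]]) -> AsyncIterator[str]:
    """Forward only the text chunks, mirroring the entrypoint's filter."""
    async for event in stream:
        if "data" in event:
            yield event["data"]


async def collect() -> List[str]:
    return [chunk async for chunk in text_chunks(fake_agent_stream())]


chunks = asyncio.run(collect())
print("".join(chunks))
```

Filtering on the server keeps the desktop client simple: it only ever receives text to append to the chat window.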

Deploying on AWS AgentCore

Now that we have a working AI agent on our local machine we need to bring this to production so that end users can also access these amazing tools and agents! Luckily for us, Strands Agent allows us to deploy to the AWS ecosystem in a single command.

# Deploy to AWS

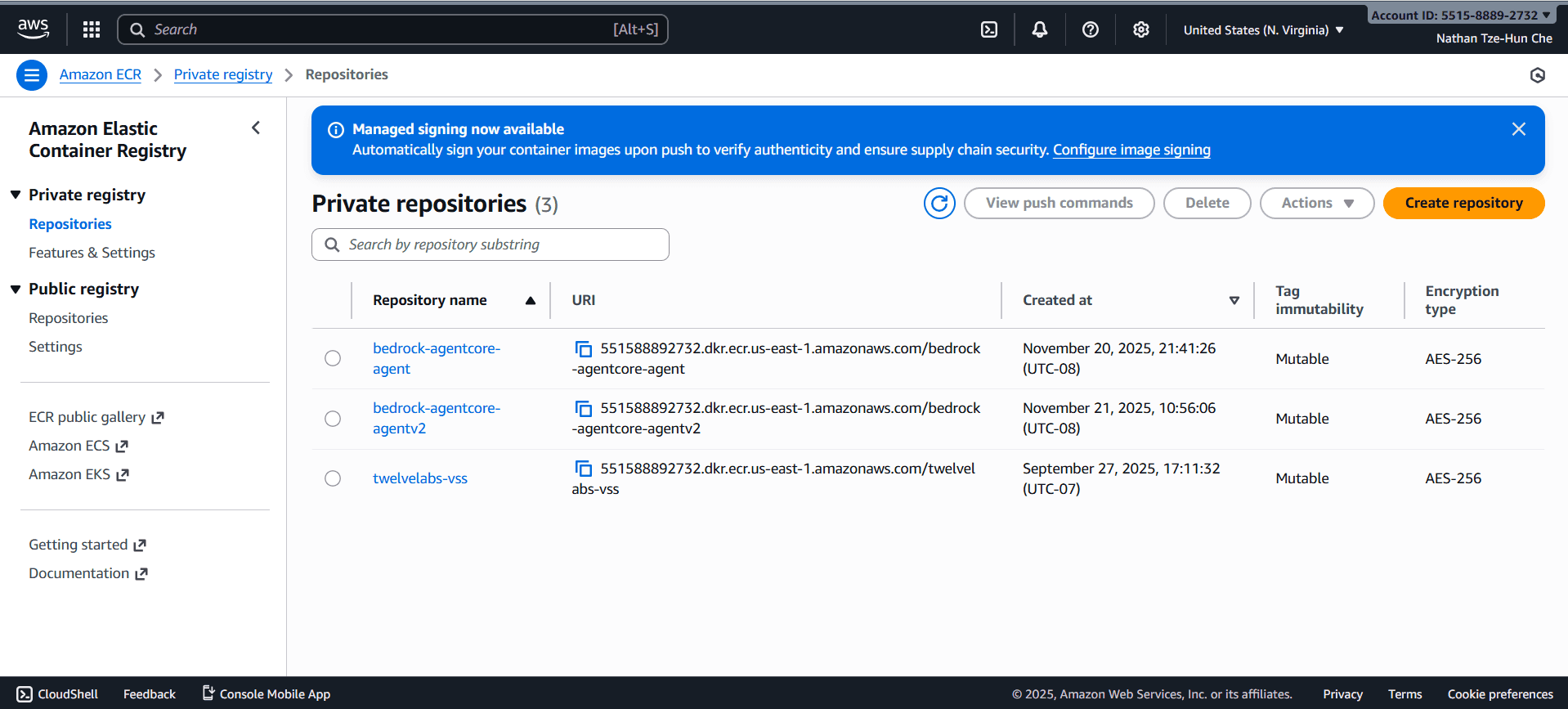

Upon running this command, several things will happen to your local AI agent:

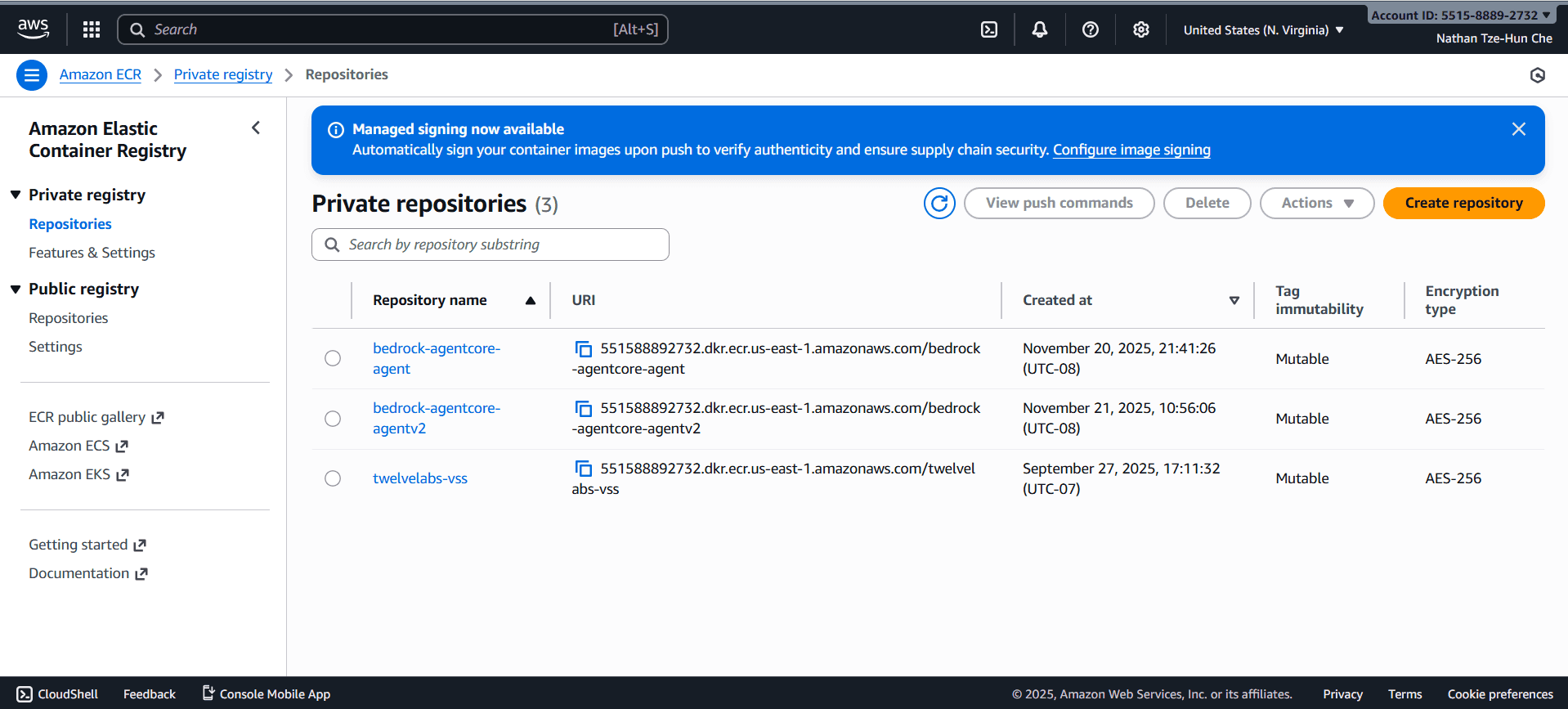

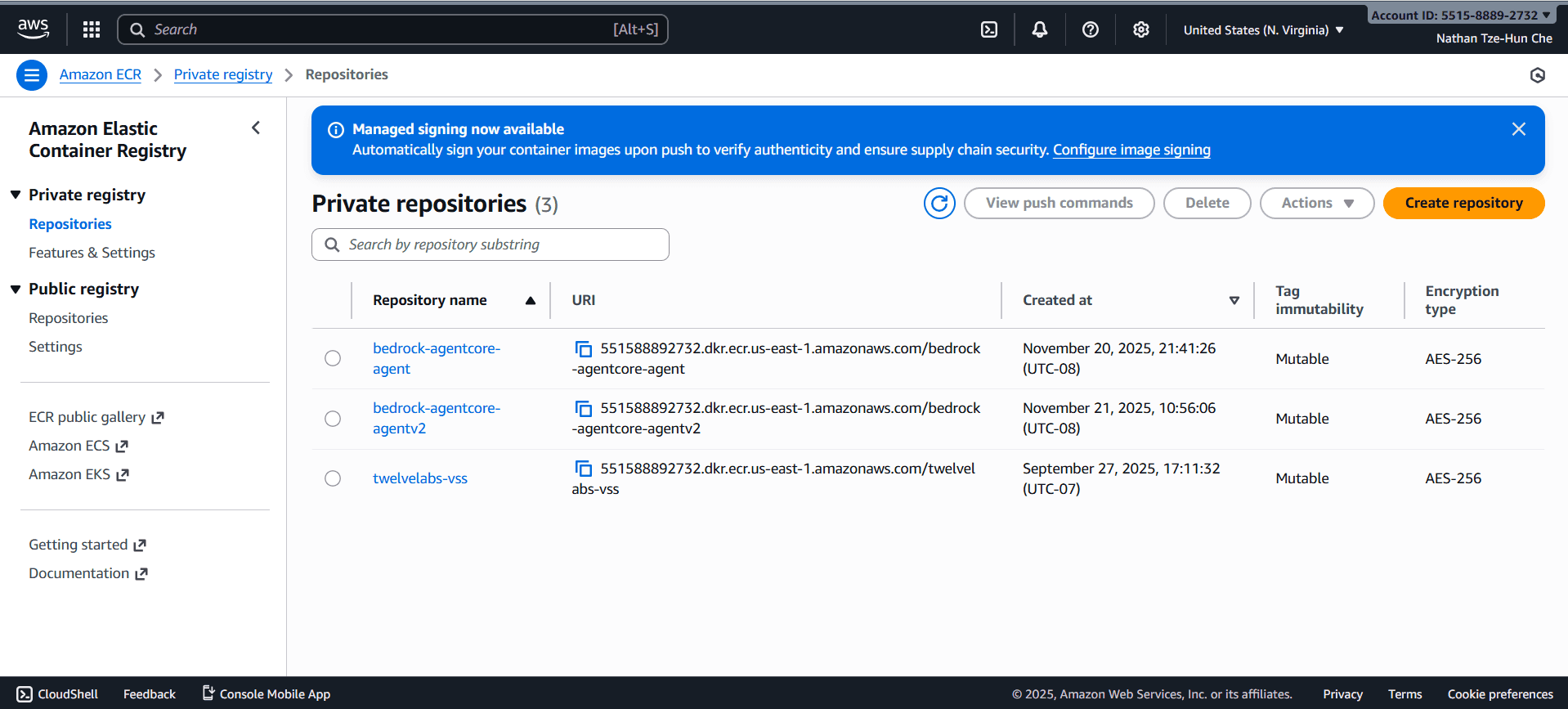

The project will be containerized with Docker, Finch, or Podman, then uploaded to AWS Elastic Container Registry (ECR) to hold your code, logic, and custom tools, if any.

Depending on the name provided during launch, it will be assigned a unique repository URI and viewable within your ECR dashboard.

A Dockerfile is automatically created to install the necessary dependencies, expose ports (so that the AI agent can be called like an API), and run the specified entrypoint.

```dockerfile
FROM ghcr.io/astral-sh/uv:python3.13-bookworm-slim
WORKDIR /app

# All environment variables in one layer
ENV UV_SYSTEM_PYTHON=1 \
    UV_COMPILE_BYTECODE=1 \
    UV_NO_PROGRESS=1 \
    PYTHONUNBUFFERED=1 \
    DOCKER_CONTAINER=1 \
    AWS_REGION=us-east-1 \
    AWS_DEFAULT_REGION=us-east-1

COPY requirements.txt requirements.txt
# Install from requirements file
RUN uv pip install -r requirements.txt

# Install FFmpeg
RUN apt-get update && \
    apt-get install -y ffmpeg && \
    apt-get clean && \
    rm -rf /var/lib/apt/lists/*

RUN uv pip install aws-opentelemetry-distro==0.12.2

# Signal that this is running in Docker for host binding logic
ENV DOCKER_CONTAINER=1

# Create non-root user
RUN useradd -m -u 1000 bedrock_agentcore
USER bedrock_agentcore

EXPOSE 9000
EXPOSE 8000
EXPOSE 8080

# Copy entire project (respecting .dockerignore)
COPY . .

# Use the full module path
CMD ["opentelemetry-instrument", "python", "-m", "agent"]
```

This auto-generated file is used to build the container image stored in ECR, so that when AWS AgentCore hosts your agent it knows the exact dependencies, the entrypoint, and the ports on which to expose the API.

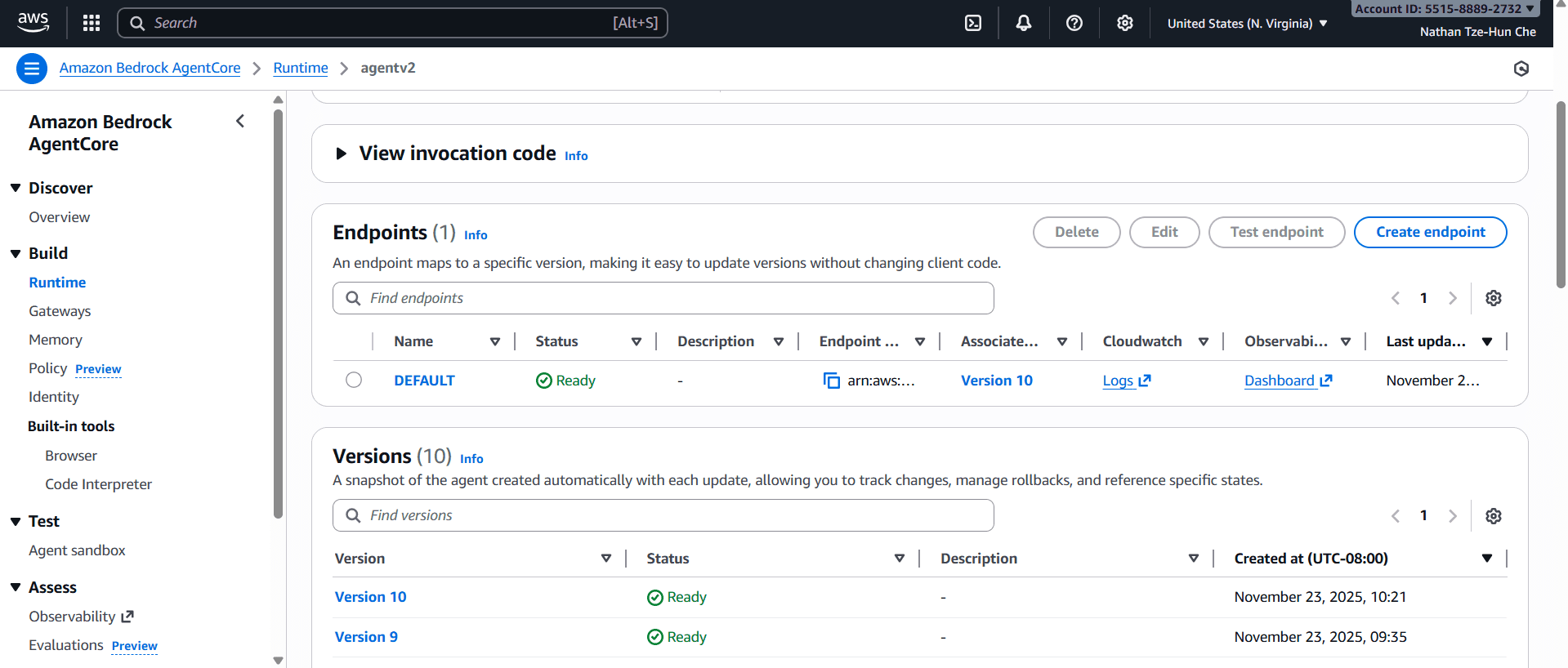

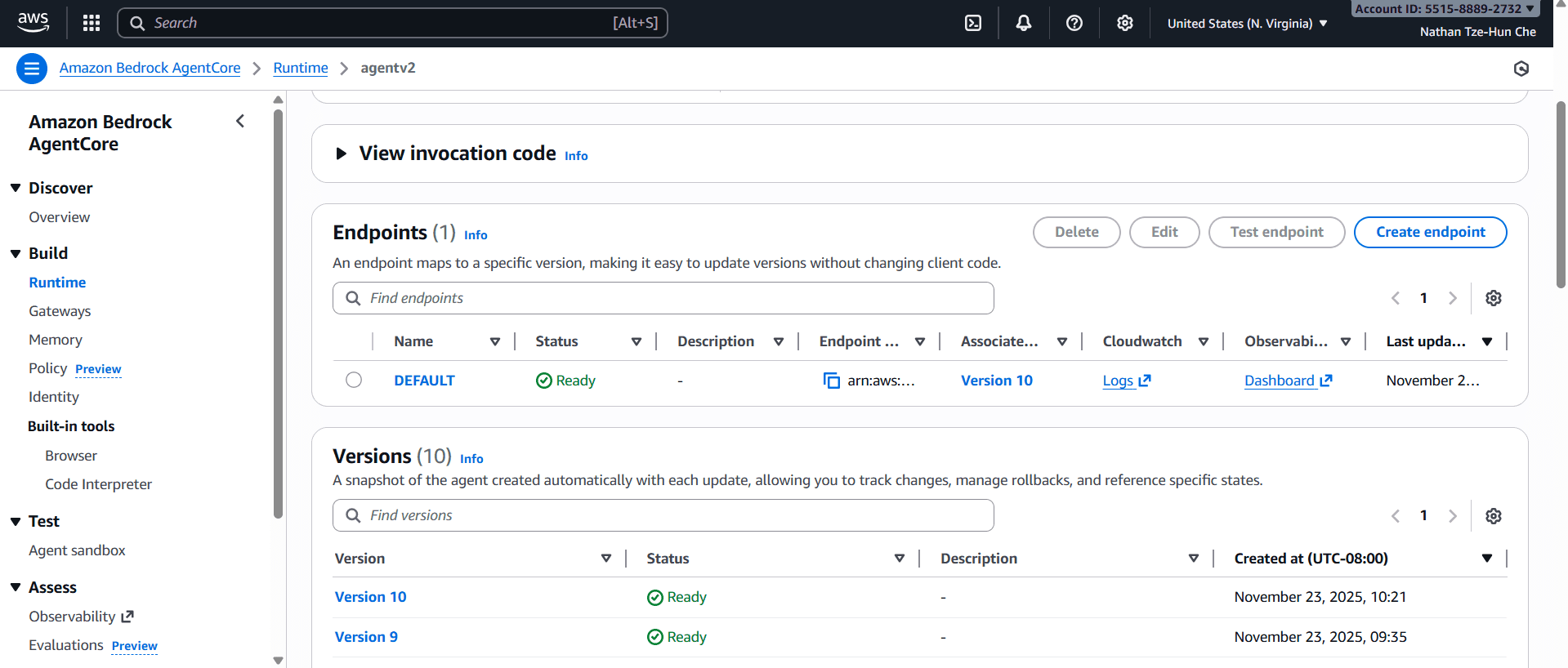

AWS AgentCore launches your ECR container on specific ports and acts as a REST API.

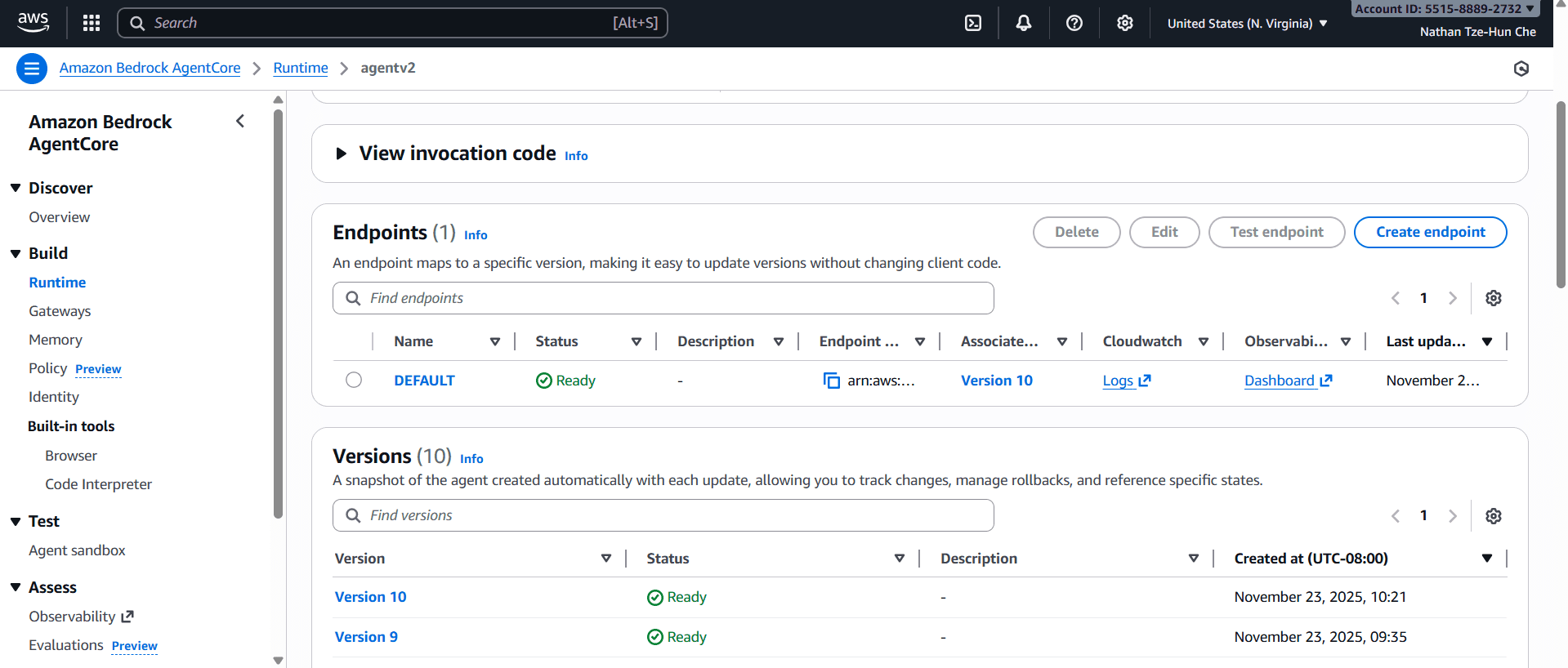

Here you can see real-time updates and important statistics regarding your AI deployment including version history, runtime sessions, error rate, and more!

💡Despite seeming like a simple dashboard, observability is a key component of building production-ready AI agents. These statistics allow you to calculate accurate price estimates, see where the code is failing, and track the number of active chat sessions.
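As a rough illustration of how such statistics feed a price estimate, here is a small worked example. Every number in it is hypothetical: the per-token prices, the token counts per session, and the session volume are placeholders to replace with your model’s real pricing and the figures from your own dashboard. It also covers model tokens only and ignores any compute or other service charges.

```python
from dataclasses import dataclass


@dataclass
class Pricing:
    """Hypothetical prices in USD per 1,000 tokens; replace with real rates."""
    input_per_1k: float
    output_per_1k: float


def estimate_cost(input_tokens: int, output_tokens: int, pricing: Pricing) -> float:
    """Token cost of one session: tokens / 1000 * price, summed over both directions."""
    input_cost = (input_tokens / 1000) * pricing.input_per_1k
    output_cost = (output_tokens / 1000) * pricing.output_per_1k
    return input_cost + output_cost


# Placeholder figures, not real AWS or model prices.
pricing = Pricing(input_per_1k=0.003, output_per_1k=0.015)
per_session = estimate_cost(input_tokens=4000, output_tokens=1000, pricing=pricing)
monthly = per_session * 2000  # assumed 2,000 sessions per month

print(f"Per session: ${per_session:.3f}")
print(f"Per month:   ${monthly:.2f}")
```

With these placeholder values, one session costs 4 × 0.003 + 1 × 0.015 = 0.027 USD, so 2,000 sessions come to 54 USD per month.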

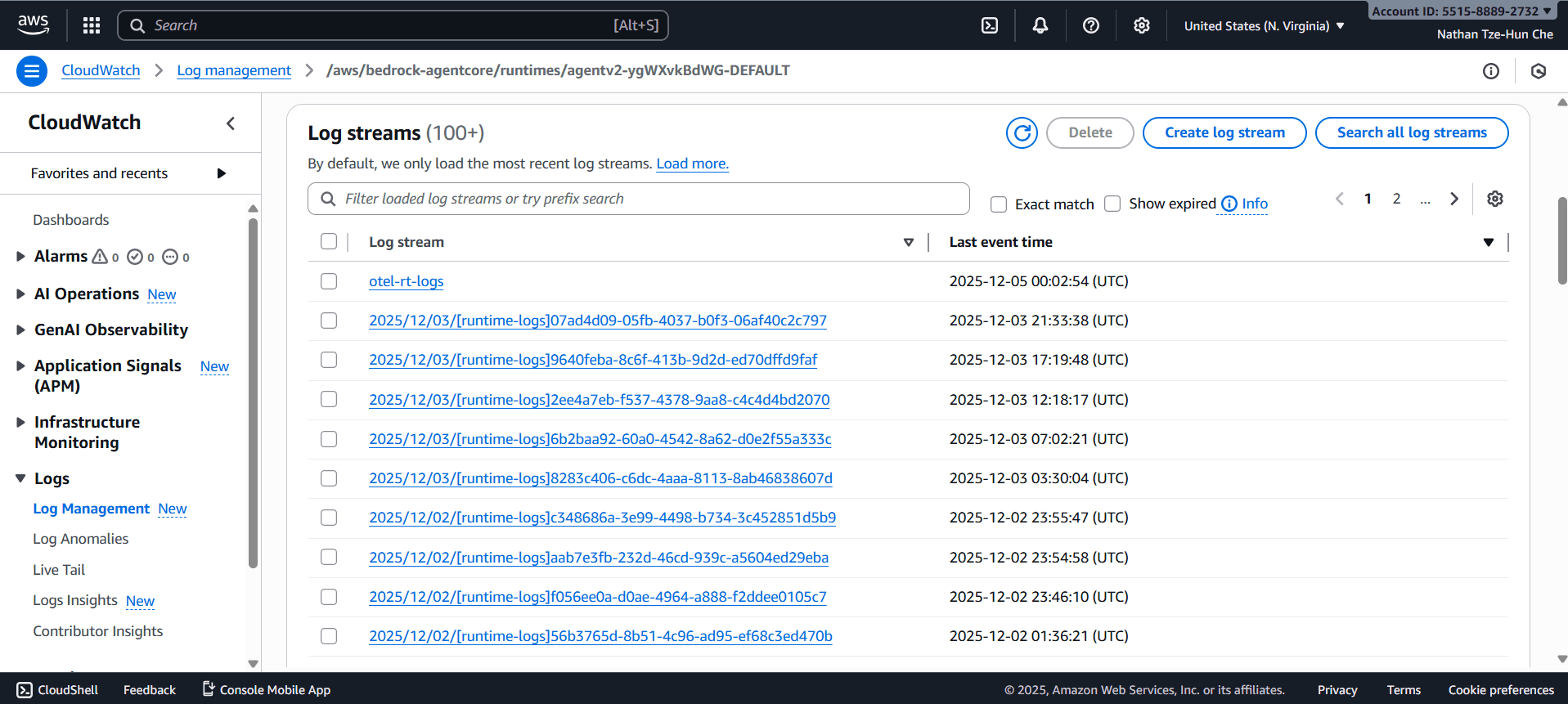

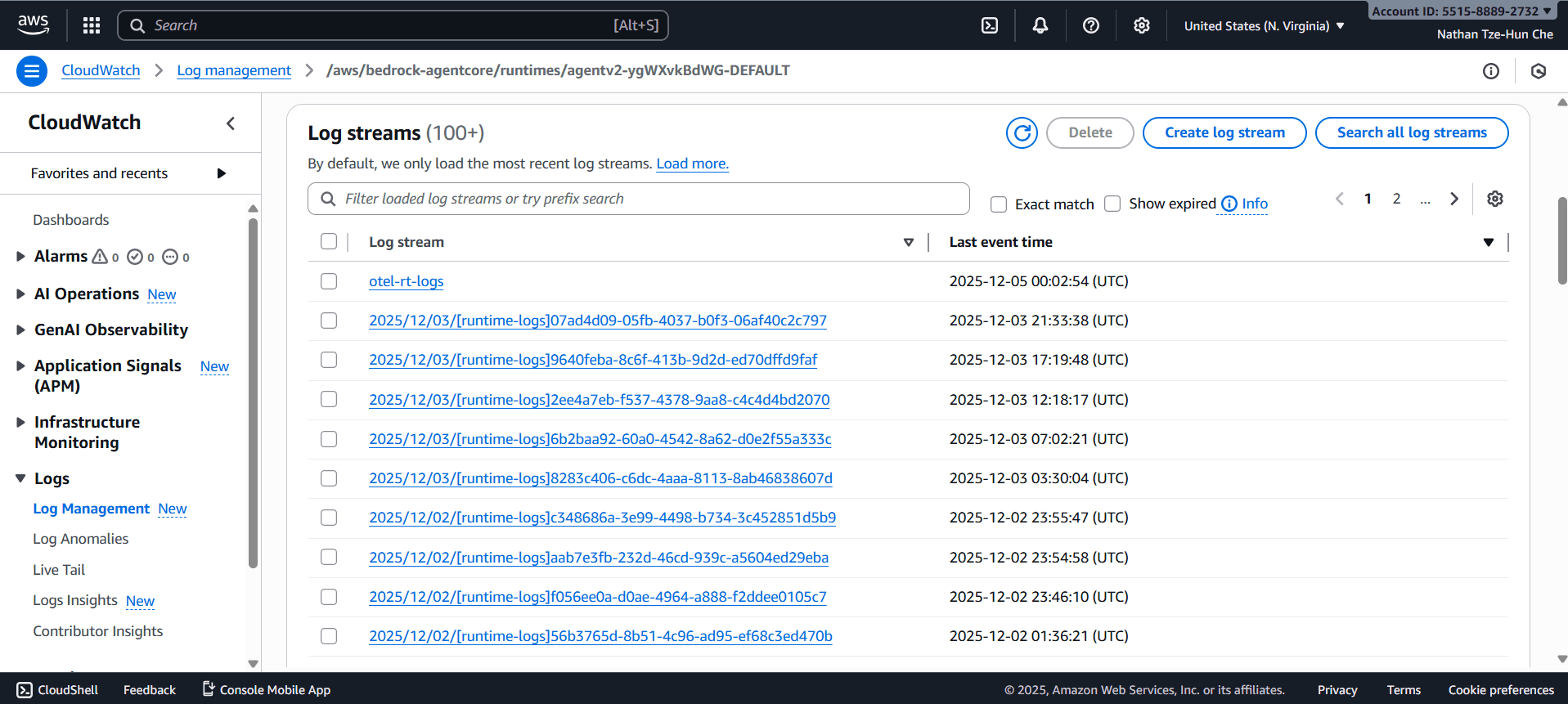

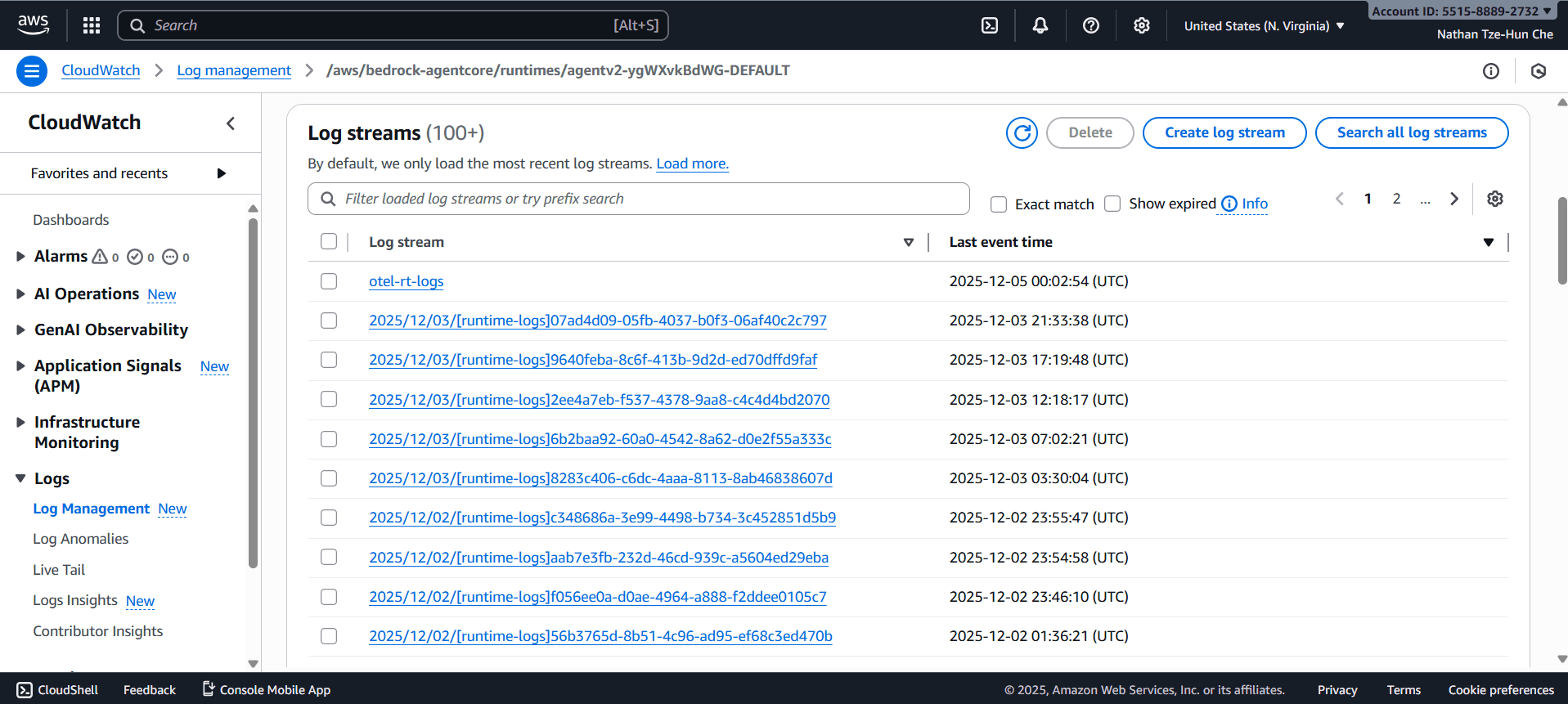

AgentCore runtime is linked to CloudWatch for additional code-level observability.

Alongside the AgentCore dashboard, CloudWatch logs give code-level errors and reports on each individual chat session ever had with the AI agent in production. This again provides invaluable insight as to how to debug.

With that being said, you have now fully deployed an AI agent that has access to TwelveLabs and Slack API tools using Strands Agent! 🎉

Agent Technical Architecture

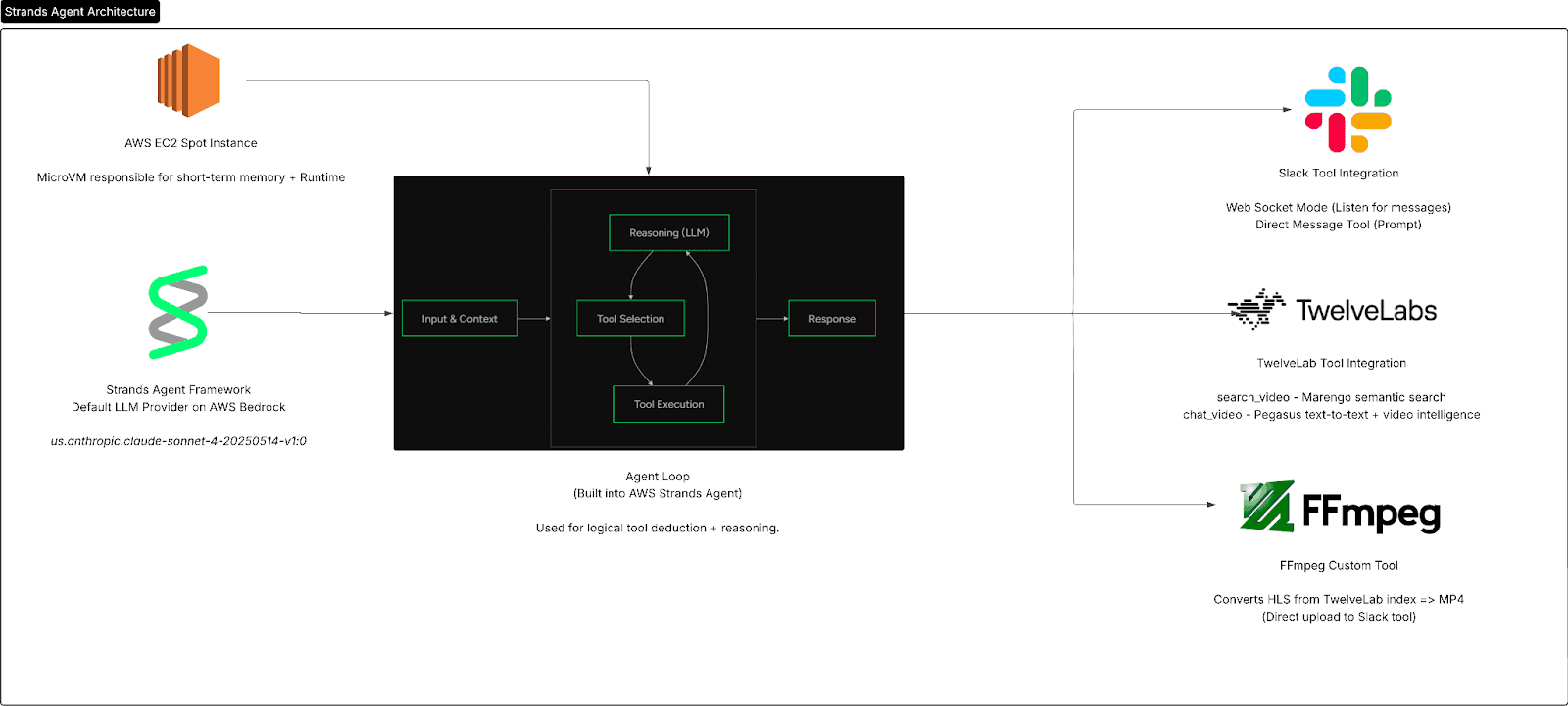

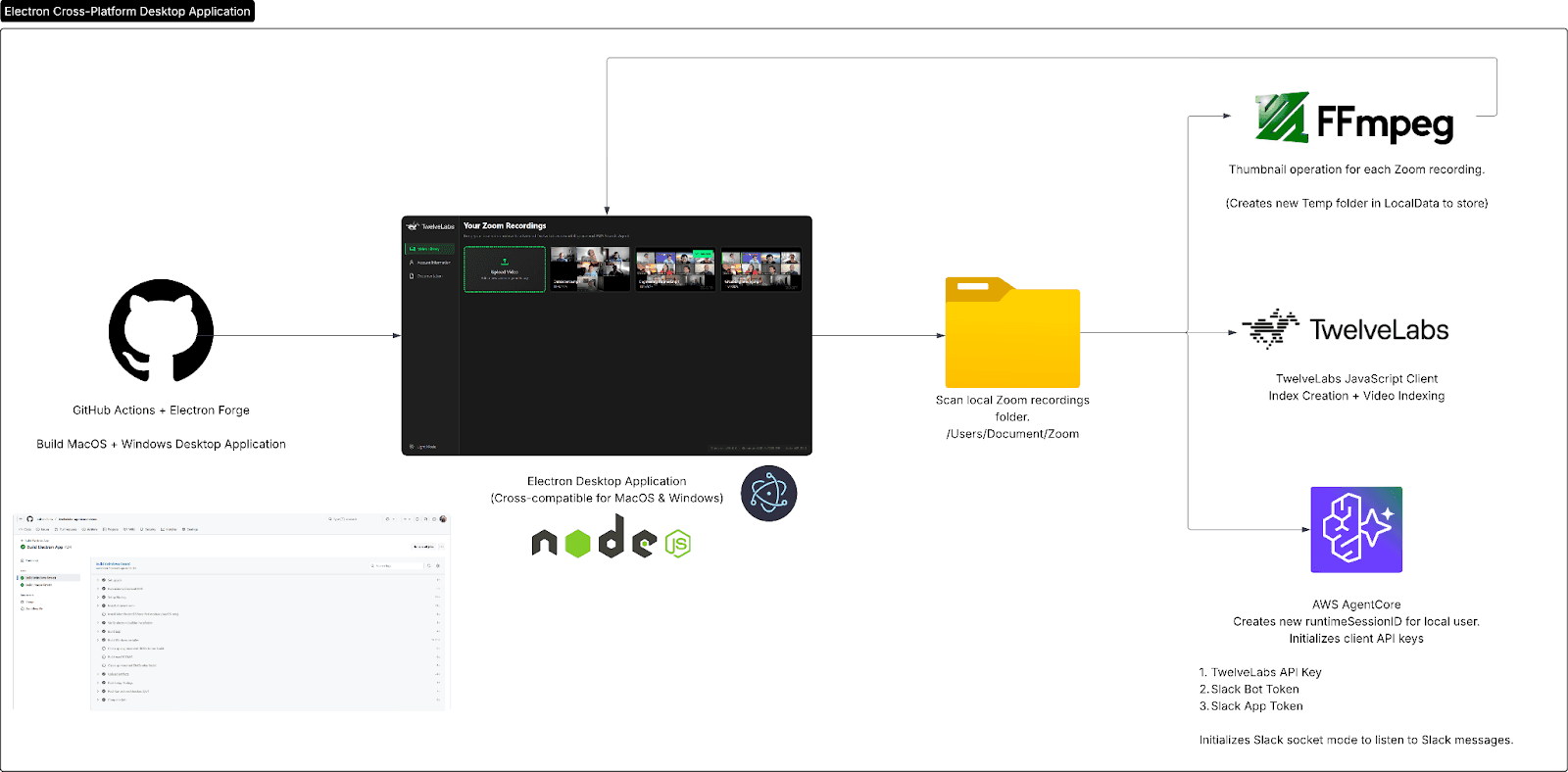

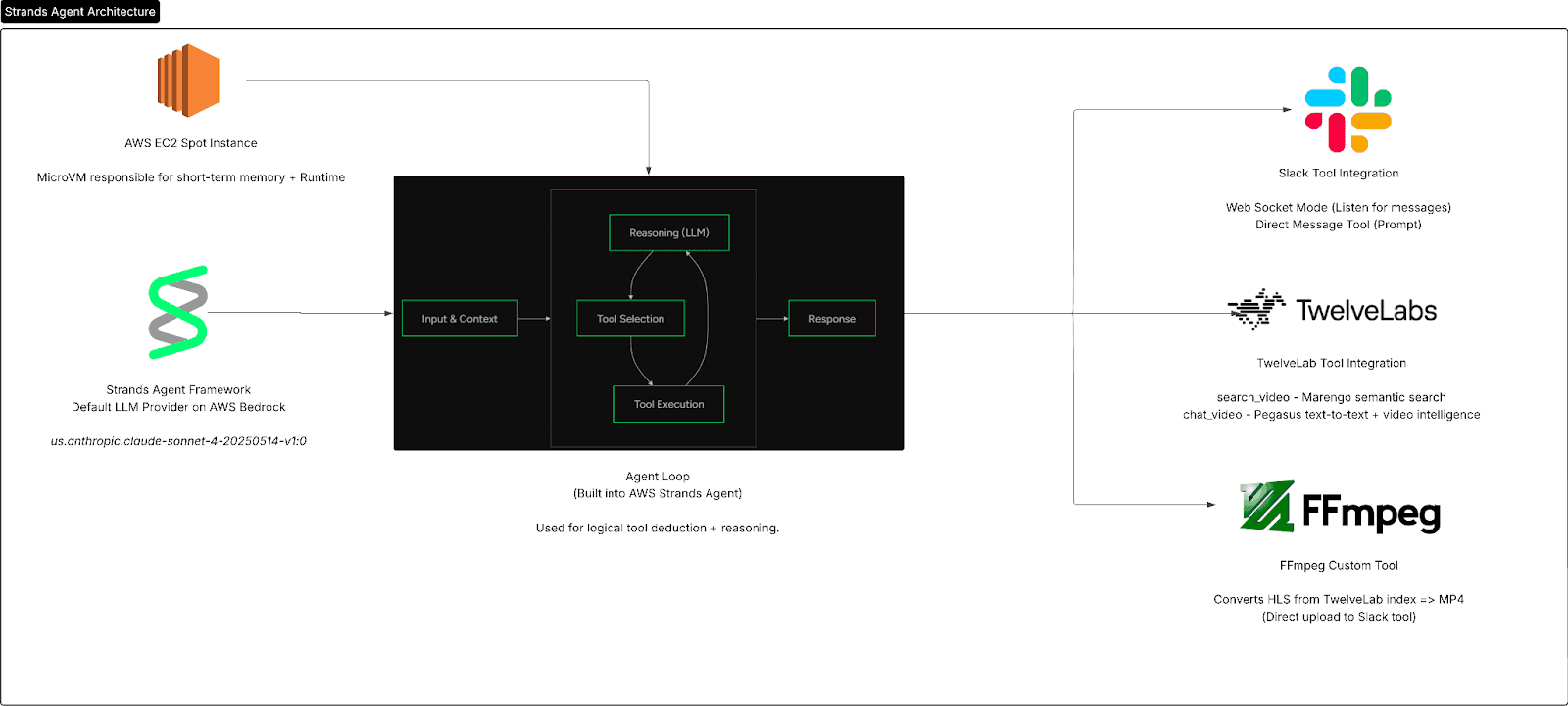

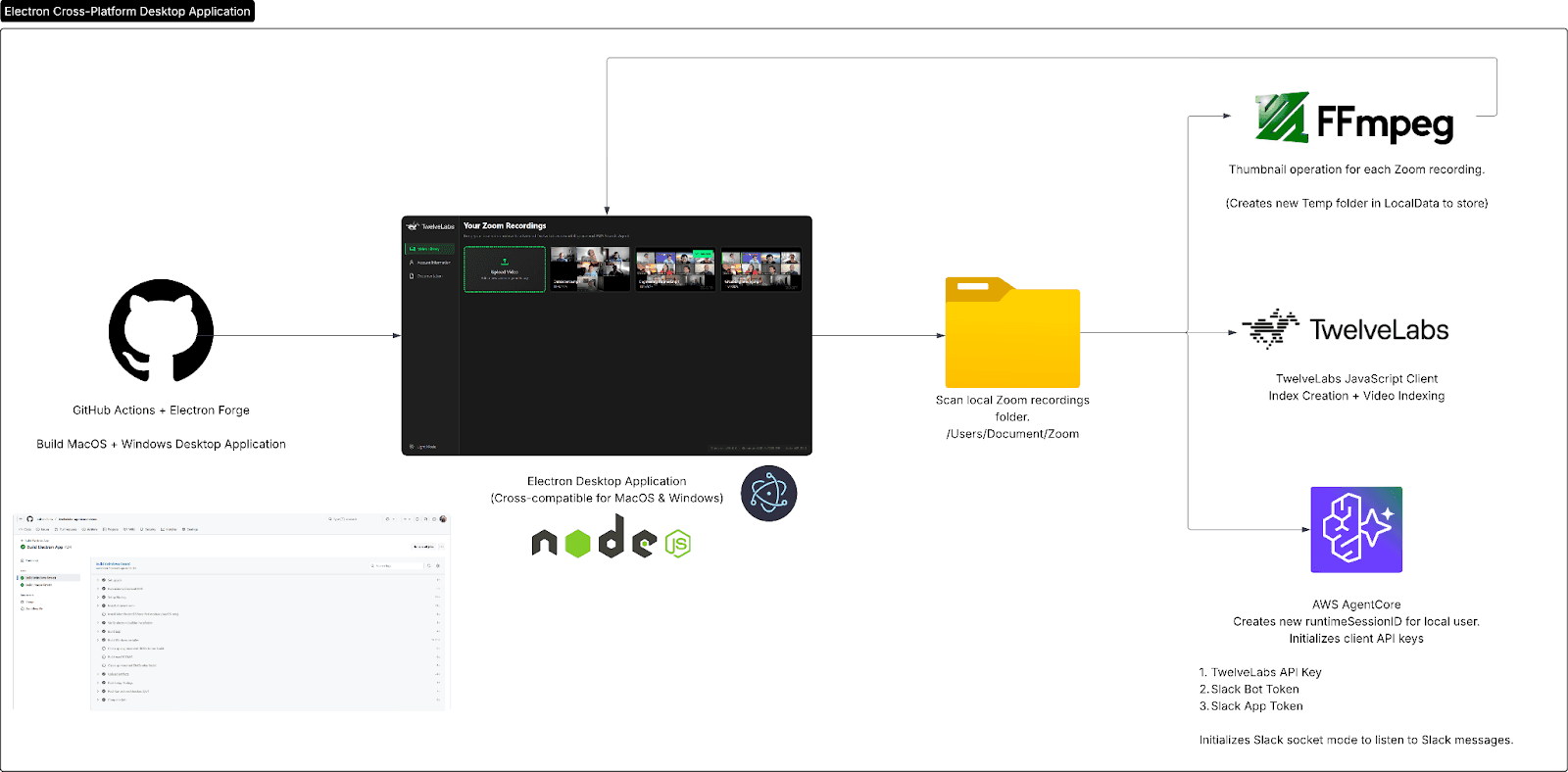

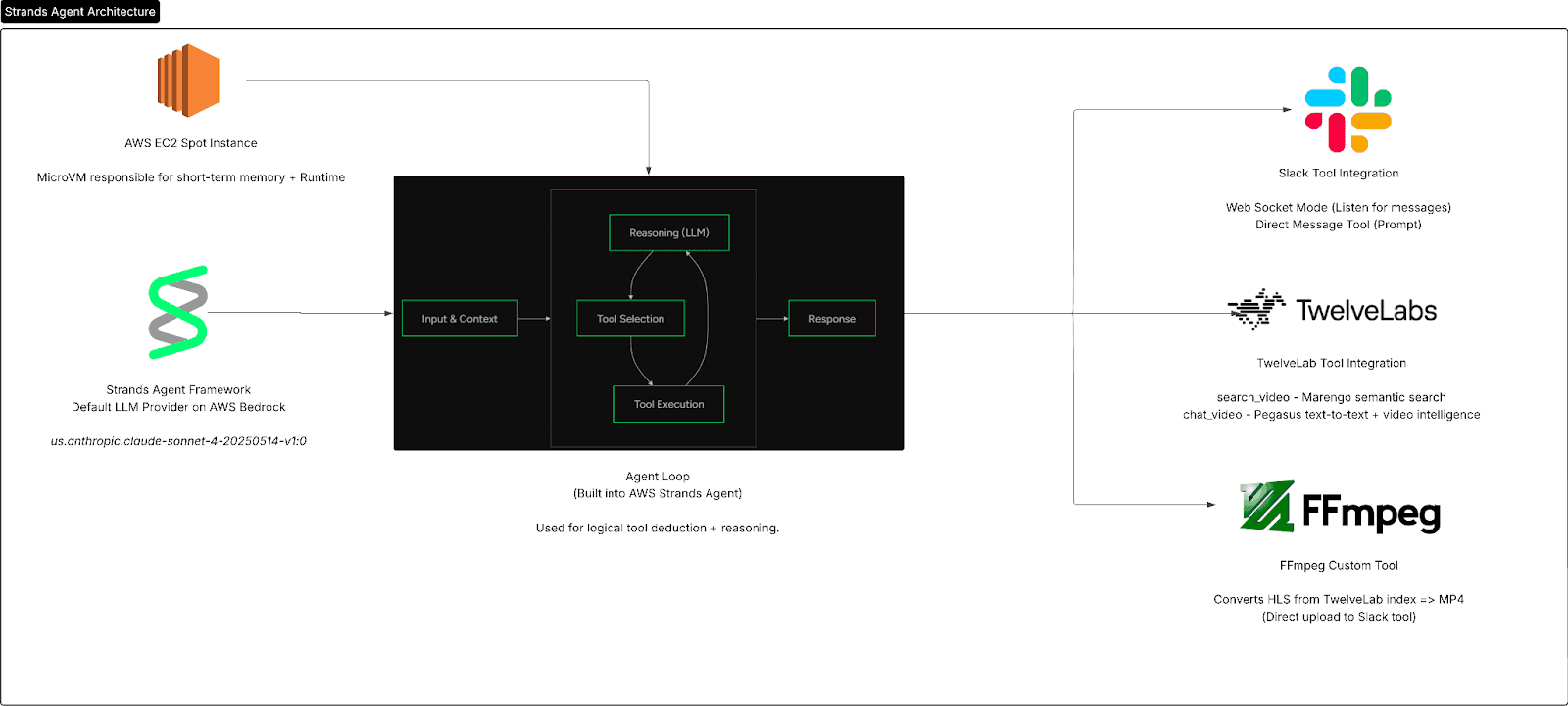

Before we move on to building the frontend of this application, it is important to have a clear understanding of the key decisions behind how we built our backend. The technical architecture diagrams, drawn in Lucidchart, show in more depth how data flows within our backend.

As you can see from the technical architecture, Strands Agent serves as the foundational entry point for our AI agent. The agent loop then decides which tools to call, and in our case it can choose between three: Slack, TwelveLabs, or FFmpeg for video preprocessing.

One thing to note is the usage of AWS EC2 spot instances for AgentCore. This cloud server is automatically provisioned as the default server for AgentCore. Can you think of why?

💡Learning Opportunity: Spot instances are spare, unused EC2 capacity within the AWS ecosystem, offered at a discount. This means there is a major cost-saving opportunity if you do not need a consistent, long-term reservation of cloud resources. For AgentCore this is perfectly viable, as compute is only needed periodically, when a prompt arrives!

The backend also has several cool technical details, including agent memory and session maintenance via heartbeat/ack POST requests, but these are too in-depth for the purpose of this blog. If you’d like to read more, I highly encourage you to check out the official GitHub repository for this software.

Building Cross-Platform Desktop Application

I hope that so far you have a good understanding of not only the underlying mechanisms behind our AI agent, but also how easy it is to now implement advanced video intelligence capabilities into your agentic models thanks to this latest Strands Agent integration.

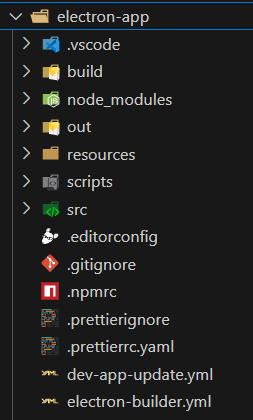

With that in mind, we can finally begin developing the frontend desktop application. The official technology stack for the application is ElectronJS and NodeJS, so ensure that you have those installed before pulling the code from the repository!

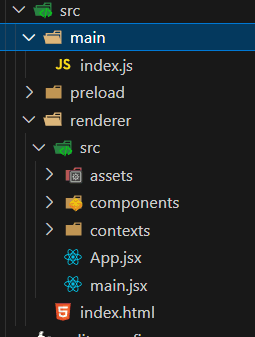

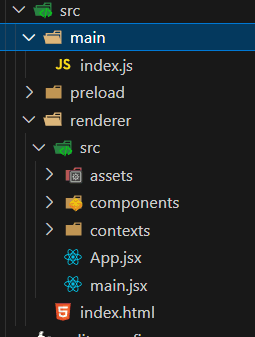

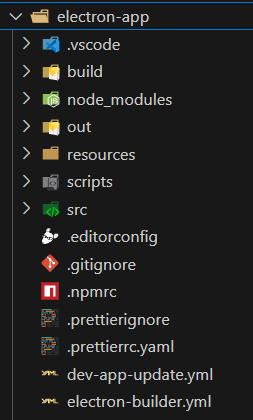

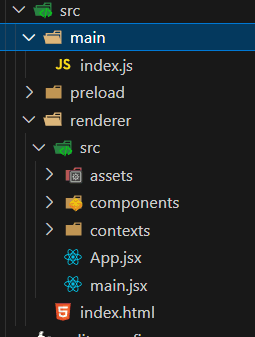

Let’s start by taking a look at the file structure.

Without going too far into frontend development, as this blog is meant to focus on AgentCore and TwelveLabs, building this desktop application is much like building a ReactJS website. Specifically, this frontend desktop application is highly modular, divided into frontend components, context, and logic based in main.js.

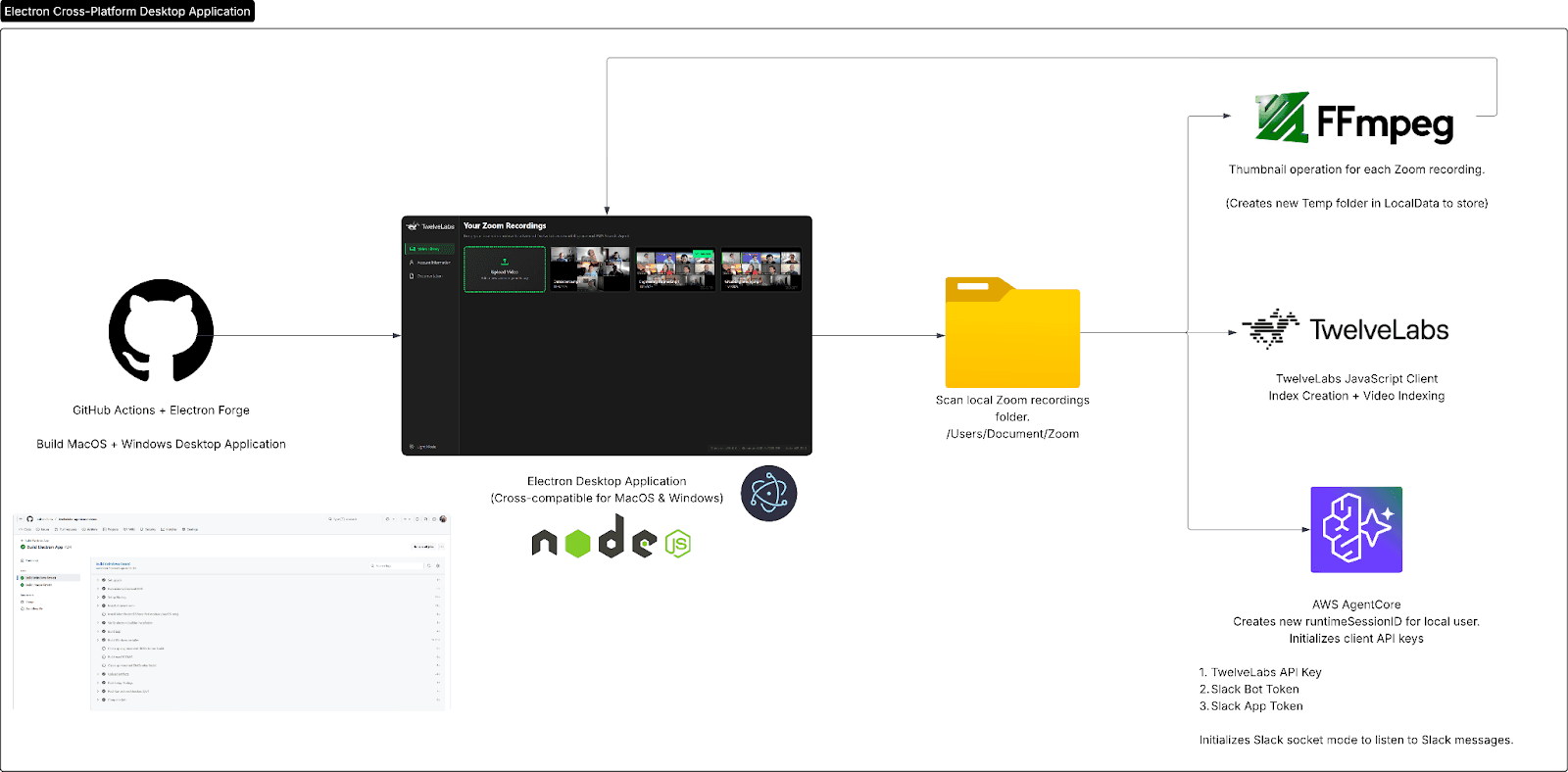

Above is the official technical architecture, which shows exactly where this desktop application slots into the rest of the software. To keep it short, we use GitHub Actions as a CI/CD pipeline to build for both macOS and Windows (which you can download from the releases here) and use FFmpeg and NodeJS to handle preprocessing of videos. Finally, NodeJS directly calls the API endpoint hosted on AWS AgentCore to access our AI agent.

I highly encourage you to look further on the key decisions and technical architecture in this in-depth document here: https://docs.google.com/document/d/1jvfr4kleZ-ghZ7v8RwLEaRPO0W9GEiMHqToBy8Mr9y0/edit?usp=sharing

Conclusion

Congratulations! 🎉 You have just completed this tutorial and learned not only how to build an AWS AgentCore agent from the Strands Agent framework, but many of the underlying concepts revolving around agentic AI. Furthermore, you have learned about how easy it can be to integrate advanced video intelligence technology like TwelveLabs into your agentic AI.

Check out some more in-depth resources here:

Official GitHub Repository: https://github.com/nathanchess/twelvelabs-agentcore-demo

Technical Architecture Diagram (LucidChart): https://lucid.app/lucidchart/40b7aa79-6da6-4bed-bd63-5f34e3955685/edit?viewport_loc=424%2C-502%2C4025%2C1778%2C0_0&invitationId=inv_ba388ce0-6ad8-4f77-9ac1-c8a4edc405db

Official Software Release for this Application: https://github.com/nathanchess/twelvelabs-agentcore-demo/releases/tag/v1.0.0

Strands Agent Documentation: https://strandsagents.com/latest/

AgentCore Documentation: https://aws.amazon.com/bedrock/agentcore/

Introduction

From an engineer’s daily standup on Zoom to a recruiter’s 5th candidate call of the day, any working professional knows how frequent and vital meetings are in everyday work. In fact, according to leading meeting platforms like Zoom, the average employee spends nearly 392 hours in meetings per year. With all those meeting video archives, notes, and easily forgotten to-do lists, employees and enterprises are often left with unproductive post-meeting tasks and videos.

What if there was a way to easily search through 1000+ hours of this video content to find exact moments and find personalized answers to your meeting content? Not only that, what if these insights could then be immediately provided to your entire enterprise through popular communication platforms like Slack?

Thanks to the latest integration of TwelveLabs into AWS Strands Agent this is no longer a dream, in fact in today’s tutorial we are going to build this exact feature using AWS AgentCore, TwelveLabs, and ElectronJS.

Application Demo

Before we begin coding, let’s quickly preview what we’ll be building.

If you’d like to try it on your own MacOS or Windows device feel free to download the latest release or preview the codebase below.

Download Latest Release: https://github.com/nathanchess/twelvelabs-agentcore-demo/releases/tag/v1.0.0

Github Codebase: https://github.com/nathanchess/twelvelabs-agentcore-demo

Now that we know what we’re building, let’s get started on building it ourselves! 😊

Learning Objectives

In this tutorial you will:

Deploy a production ready AI agent on AWS AgentCore, leveraging different LLM providers within the AWS ecosystem such as AWS Bedrock, OpenAI, Ollama, and more.

Build custom tools to allow your AI agent to interact with external services beyond the Strands Tools.

Integrate external tooling with Slack and TwelveLabs into your AI agent with pre-built tools.

Add observability to AI agents, allowing precise cost estimation and debug logs, using the AWS ecosystem and services like CloudWatch.

Compile your own cross-platform desktop application using ElectronJS.

Prerequisites

Node.JS 20+: Node.js — Download Node.js®

Bundled with node package manager (npm)

TwelveLabs API Key: Authentication | TwelveLabs

Python 3.8+: Download Python | Python.org

AWS Access Key: Credentials - Boto3 1.40.12 documentation

Slack Bot & App Token: https://docs.slack.dev/authentication/tokens/

Both tokens are auto-generated when you create a Slackbot via. the Slack API.

AWS Console Account and permission to provision AgentCore, Bedrock, and CloudWatch.

Intermediate understanding of Python and JavaScript.

Building the Agent with Strands Agent

Before integrating features like the Slackbot or TwelveLab’s video intelligence models, it’s important to gain an understanding of the underlying mechanics behind AI agents. According to the Strands Agent documentation, an AI agent is primarily an LLM with an additional orchestration layer. This orchestration layer allows an LLM to take action for a variety of reasons, including finding information, querying databases, running code, and more!

💡 This entire orchestration layer is known as the Agent Loop.

Figure 1: Agent Loop Figure from AWS Strands

💡 Tool: Direct function calls or APIs, formatted in a way that allows the Reasoning LLM to call to access specific actions based on input & context.

In the figure above, we see that there is an additional box in the middle containing tool execution, tool selection, and reasoning (LLM). Each of these steps all serve a vital purpose:

Reasoning (LLM) — Deduces the correct or if any tool needs to be called for given input.

Tool Selection — Finds requested tools based on Agent configuration.

Tool Execution — Runs tool and passes result back to reasoning LLM to deduce next steps.

You now may be asking how difficult it would be to build such a framework. How can the reasoning LLM recognize tools, but more importantly, call tools on-demand? How can one properly describe a tool, to give it meaning for the LLM to interpret? These are all difficult and complex subtopics that are fortunately built for us on AWS Strands Agent.

Though explaining through each concept is beyond the scope of this blog, I highly recommend you to learn in-depth these complex mechanisms via. the Strands Agent codebase: https://github.com/strands-agents.

With this framework, creating an Agent is as simple as a few lines:

from bedrock_agentcore.runtime import BedrockAgentCoreApp from strands import Agent app = BedrockAgentCoreApp() agent = Agent() @app.entrypoint def invoke(payload): """Process user input and return a response""" user_message = payload.get("prompt", "Hello") result = agent(user_message) return {"result": result.message} if __name__ == "__main__": app.run()

With these simple lines, you have just created an AI agent! Albeit, it acts much like your standard LLM as we haven’t provisioned any tools yet, so it can only handle question and answer tasks.

Adding TwelveLabs to Strands Agent

Great, now we have a fully working AI Agent that excels at answering questions given input and context. However, as you test around more, you’ll find there are several limitations:

Cannot handle video data or really any other data format beyond strings properly.

Confined to just Q&A tasks.

Lack of observability in what the Reasoning LLM thinks and how it decides, which leads to poor debugging of AI agents in production. (You don’t know what’s really happening 😲)

In order to expand the AI agent’s capabilities, we now must introduce tools into our code! As mentioned before, tools tend to be direct functions or API calls that the LLM can directly access. In Strands Agent they can be formatted and provided to AI agent in two ways:

1 - Default tools provided by strands_tools Python package:

Figure 2: Strands Agent Tools Documentation

Strands Agent provides nearly 20+ tools by default that you can add to your AI agent! These tools range from simple File operations on the Shell all the way to direct platform integrations to Slack, TwelveLabs, and AWS.

In this project we will use the following tools:

TwelveLabs — Provides the base video intelligence with Pegasus and the new Marengo 3.0 model.

Slack — Allows us to directly interact with our enterprise at SlackBot

Note: Credentials must be provided via. Slack Bot and Slack App Token.

Environment — Simple tool that sets environment variables, key to AgentCore session handling.

Adding these tools is just as simple as creating the agent, with a few lines of code:

from strands import Agent from strands_tools import calculator, file_read, shell # Add tools to our agent agent = Agent( tools=[calculator, file_read, shell] ) # Agent will automatically determine when to use the calculator tool agent("What is 42 ^ 9") print("\n\n") # Print new lines # Agent will use the shell and file reader tool when appropriate agent("Show me the contents of a single file in this directory")

As you might notice in the code snippet above, we simply import the tools we want using strands_tools then plug in those tools as an additional parameter inside Agent(). Just like that you have expanded your Agent’s capabilities once more so that it can now take action! The entire agent loop described above will happen in the background, but now it can call the calculator, file_read, and shell tool if needed!

2 - Custom tooling built in Python with Strands Agent:

Though we will not need to build custom tools for our application, it’s important to note that Strands Agent provides a helpful framework to build your custom tools, by not only defining the function itself but the metadata associated with a function so it can be properly referenced.

from strands import tool @tool def weather_forecast(city: str, days: int = 3) -> str: """Get weather forecast for a city. Args: city: The name of the city days: Number of days for the forecast """ return f"Weather forecast for {city} for the next {days} days..."

As seen in the code snippet above, a tool can be created with pre-existing functions by adding the @tool decorator above your functions. The docstring is then used as metadata to be interpreted by the reasoning LLM in tool deduction.

With those two methods in mind, let’s go ahead and implement the following tools in our own AI Agent!

import json import os import asyncio from dotenv import load_dotenv load_dotenv() from bedrock_agentcore.runtime import BedrockAgentCoreApp from strands import Agent from strands_tools import environment from custom_tools import chat_video, search_video, get_slack_channel_ids, get_video_index, slack, fetch_video_url os.environ["BYPASS_TOOL_CONSENT"] = "true" os.environ["STRANDS_SLACK_AUTO_REPLY"] = "true" os.environ["STRANDS_SLACK_LISTEN_ONLY_TAG"] = "" def get_tools(): return [slack, environment, chat_video, search_video, get_slack_channel_ids, get_video_index, fetch_video_url] app = BedrockAgentCoreApp() agent = Agent( tools=get_tools() ) # Track if socket mode has been started to avoid multiple starts _socket_mode_started = False @app.entrypoint async def invoke(payload): """ Process system request directly and ONLY from Electron app. """ global _socket_mode_started system_message = payload.get("prompt") # Process the agent stream (independent of socket mode) stream = agent.stream_async(system_message) async for event in stream: if "data" in event: yield event['data'] if __name__ == '__main__': app.run()

In the code snippet above, we have loaded in tools from strands_tools specifically:

TwelveLabs:

chat_video,search_videoSlack:

slack,get_slack_channel_ids

💡These tools are the official integrations from their respective teams, hence I highly recommend diving deeper into the Strands Tools repository to see how production-grade tools are written for AI agents!

More importantly, thanks to the latest integration into TwelveLabs, you now can deploy production grade AI agents that interact with your video data into a single line of code. More information on our specific integrations can be found in this blog: https://www.twelvelabs.io/blog/twelve-labs-and-strands-agents.

Deploying on AWS AgentCore

Now that we have a working AI agent on our local machine we need to bring this to production so that end users can also access these amazing tools and agents! Luckily for us, Strands Agent allows us to deploy to the AWS ecosystem in a single command.

# Deploy to AWS

Upon running this command, several things will happen to your local AI agent:

The project will be containerized with Docker, Finch, or Podman then uploaded to AWS Elastic Container Registry to hold your code, logic, and custom tools if any.

Depending on the name provided during launch, it will be assigned a unique repository URI and viewable within your ECR dashboard.

Docker file is automatically created to install necessary dependencies, forward ports (to enable the AI agent to be called like an API), and run specific entrypoint.

FROM ghcr.io/astral-sh/uv:python3.13-bookworm-slim WORKDIR /app # All environment variables in one layer ENV UV_SYSTEM_PYTHON=1 \ UV_COMPILE_BYTECODE=1 \ UV_NO_PROGRESS=1 \ PYTHONUNBUFFERED=1 \ DOCKER_CONTAINER=1 \ AWS_REGION=us-east-1 \ AWS_DEFAULT_REGION=us-east-1 COPY requirements.txt requirements.txt # Install from requirements file RUN uv pip install -r requirements.txt # Install FFmpeg RUN apt-get update && \ apt-get install -y ffmpeg && \ apt-get clean && \ rm -rf /var/lib/apt/lists/* RUN uv pip install aws-opentelemetry-distro==0.12.2 # Signal that this is running in Docker for host binding logic ENV DOCKER_CONTAINER=1 # Create non-root user RUN useradd -m -u 1000 bedrock_agentcore USER bedrock_agentcore EXPOSE 9000 EXPOSE 8000 EXPOSE 8080 # Copy entire project (respecting .dockerignore) COPY . . # Use the full module path CMD ["opentelemetry-instrument", "python", "-m", "agent"

This auto-generated file is what is passed into your container within ECR, so that when AWS AgentCore attempts to host your agent it will know exact dependencies, entrypoint, and ports to expose the API.

AWS AgentCore launches your ECR container on specific ports and acts as a REST API.

Here you can see real-time updates and important statistics regarding your AI deployment including version history, runtime sessions, error rate, and more!

💡Despite seeming like a simple dashboard, observability is a key component of building production-ready AI agents. These statistics allow you to calculate accurate price estimates, see where the code is failing, and active number of chat sessions.

Agentcore runtime is linked to CloudWatch for additional code-level observability.

Alongside the AgentCore dashboard, CloudWatch logs give code-level errors and reports on each individual chat session ever had with the AI agent in production. This again provides invaluable insight as to how to debug.

With that being said, you have now fully deployed an AI agent that has access to TwelveLabs and Slack API tools using Strands Agent! 🎉

Agent Technical Architecture

Before we move on to building the frontend of this application, it is important to understand the key decisions behind how we built our backend. Below are the technical architecture diagrams, drawn in Lucidchart, which show in more depth how data flows within our backend.

As you can see from the technical architecture above, Strands Agent serves as the foundational entry point for our AI agent. The agent loop then decides which tools to call; in our case it can choose among three: Slack, TwelveLabs, or FFmpeg for video preprocessing.
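To make the tool-selection step concrete, here is a deliberately simplified sketch of one pass through such a loop. In the real agent the reasoning LLM makes this choice; here a keyword rule stands in for it, and the three tool functions are hypothetical stubs, not the Strands, Slack, or TwelveLabs implementations.

```python
from typing import Callable, Dict, Optional, Tuple


def slack_tool(request: str) -> str:
    """Stub standing in for the Slack integration."""
    return f"[slack] would post: {request}"


def twelvelabs_tool(request: str) -> str:
    """Stub standing in for TwelveLabs video analysis."""
    return f"[twelvelabs] would analyze: {request}"


def ffmpeg_tool(request: str) -> str:
    """Stub standing in for FFmpeg preprocessing."""
    return f"[ffmpeg] would preprocess: {request}"


TOOLS: Dict[str, Callable[[str], str]] = {
    "slack": slack_tool,
    "ffmpeg": ffmpeg_tool,
    "twelvelabs": twelvelabs_tool,
}

# Toy routing rules; a real agent lets the LLM decide from tool descriptions.
KEYWORDS: Dict[str, Tuple[str, ...]] = {
    "slack": ("slack", "channel", "post"),
    "ffmpeg": ("convert", "compress", "trim"),
    "twelvelabs": ("video", "search", "summarize"),
}


def select_tool(request: str) -> Optional[str]:
    """Return the first tool whose keywords appear in the request, else None."""
    text = request.lower()
    for name, words in KEYWORDS.items():
        if any(word in text for word in words):
            return name
    return None


def run_agent_step(request: str) -> str:
    """Select a tool and execute it, or fall back to a plain LLM answer."""
    name = select_tool(request)
    if name is None:
        return f"[llm] would answer directly: {request}"
    return TOOLS[name](request)
```

The point of the sketch is the shape of the loop (select, execute, return the result for further reasoning), not the routing rule itself.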

One thing to note is the use of AWS EC2 Spot Instances for AgentCore. This cloud server is automatically provisioned as the default server for AgentCore. Can you think of why?

💡 Learning Opportunity: Spot Instances run on spare, unused EC2 capacity within the AWS ecosystem, which AWS offers at a discount. This means there is a major cost-saving opportunity if you do not need a consistent, long-term reservation of cloud resources. For AgentCore this is perfectly viable, as compute is only needed periodically, when a prompt arrives!

The backend also has several cool technical details, including agent memory and session maintenance via heartbeat/ack POST requests, but these are much too in-depth for this blog. If you’d like to read more, I highly encourage you to check out the official GitHub repository for this software.
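For readers curious about the heartbeat/ack idea, the sketch below shows the general pattern in isolation. It is not the repository's implementation: the `SessionTracker` class, its method names, and the 30-second timeout are all hypothetical, and a real backend would call `heartbeat` from its POST handler.

```python
import time
from typing import Dict, List, Optional


class SessionTracker:
    """Track the last heartbeat per session and report sessions that went quiet."""

    def __init__(self, timeout_seconds: float = 30.0) -> None:
        self.timeout_seconds = timeout_seconds
        self._last_seen: Dict[str, float] = {}

    def heartbeat(self, session_id: str, now: Optional[float] = None) -> dict:
        """Record a heartbeat and return the acknowledgement payload."""
        timestamp = time.monotonic() if now is None else now
        self._last_seen[session_id] = timestamp
        return {"session_id": session_id, "ack": True}

    def expire(self, now: Optional[float] = None) -> List[str]:
        """Drop and return the sessions whose last heartbeat is older than the timeout."""
        timestamp = time.monotonic() if now is None else now
        stale = [
            sid for sid, seen in self._last_seen.items()
            if timestamp - seen > self.timeout_seconds
        ]
        for sid in stale:
            del self._last_seen[sid]
        return stale
```

The optional `now` argument exists only so the timing logic can be checked deterministically; in normal use both methods fall back to the monotonic clock.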

Building Cross-Platform Desktop Application

I hope that so far you have a good understanding of not only the underlying mechanisms behind our AI agent, but also how easy it is to now implement advanced video intelligence capabilities into your agentic models thanks to this latest Strands Agent integration.

With that in mind, we can finally begin developing the frontend desktop application. The official technology stack for the application is ElectronJS and NodeJS, so ensure that you have those installed before pulling the code from the repository!

Let’s start by taking a look at the file structure.

Without going too deep into frontend development, as this blog is meant to focus on AgentCore and TwelveLabs, building this desktop application is much like building a ReactJS website. Specifically, the application is highly modular, divided into frontend components, context, and logic based in main.js.

Above is the official technical architecture, which shows exactly where this desktop application slots into the rest of the software. In short, we use GitHub Actions as a CI/CD pipeline to build for both macOS and Windows (you can download the builds from the GitHub releases page), and we use FFmpeg and NodeJS to handle video preprocessing. Finally, NodeJS directly calls the API endpoint hosted on AWS AgentCore to access our AI agent.
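The exact preprocessing the application performs lives in the repository, but the general idea of shelling out to FFmpeg can be sketched as follows. This example is written in Python for consistency with the backend code, even though the desktop app does this from NodeJS. The chosen settings (downscaling to 720p, H.264 video, AAC audio) and the helper names are assumptions for illustration only.

```python
import subprocess
from typing import List


def build_ffmpeg_args(src: str, dst: str, max_height: int = 720) -> List[str]:
    """Build an FFmpeg command that downscales and re-encodes a video."""
    return [
        "ffmpeg",
        "-y",                             # overwrite the output file if it exists
        "-i", src,                        # input file
        "-vf", f"scale=-2:{max_height}",  # set height, keep aspect ratio with an even width
        "-c:v", "libx264",                # H.264 video
        "-c:a", "aac",                    # AAC audio
        dst,
    ]


def preprocess(src: str, dst: str, max_height: int = 720) -> None:
    """Run FFmpeg and raise CalledProcessError if it exits with a non-zero status."""
    subprocess.run(build_ffmpeg_args(src, dst, max_height), check=True)
```

Passing the arguments as a list, rather than a single shell string, avoids quoting problems with file names that contain spaces.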

I highly encourage you to look further into the key decisions and technical architecture in this in-depth document: https://docs.google.com/document/d/1jvfr4kleZ-ghZ7v8RwLEaRPO0W9GEiMHqToBy8Mr9y0/edit?usp=sharing

Conclusion

Congratulations! 🎉 You have just completed this tutorial and learned not only how to build an AWS AgentCore agent with the Strands Agent framework, but also many of the underlying concepts of agentic AI. Furthermore, you have seen how easy it can be to integrate advanced video intelligence technology like TwelveLabs into your agentic AI.

Check out some more in-depth resources here:

Official GitHub Repository: https://github.com/nathanchess/twelvelabs-agentcore-demo

Technical Architecture Diagram (LucidChart): https://lucid.app/lucidchart/40b7aa79-6da6-4bed-bd63-5f34e3955685/edit?viewport_loc=424%2C-502%2C4025%2C1778%2C0_0&invitationId=inv_ba388ce0-6ad8-4f77-9ac1-c8a4edc405db

Official Software Release for this Application: https://github.com/nathanchess/twelvelabs-agentcore-demo/releases/tag/v1.0.0

Strands Agent Documentation: https://strandsagents.com/latest/

AgentCore Documentation: https://aws.amazon.com/bedrock/agentcore/

© 2021 - 2026 TwelveLabs, Inc. All Rights Reserved
