Video-to-Text Arena: From Pixels to Text with Video-Language Models

Hrishikesh Yadav

Nov 17, 2025

9 Minutes

Introduction

The Video-to-Text Arena is an open-source platform designed to assess the true capabilities of current AI models in understanding video content. It offers a standardized evaluation process, enabling users to compare various multimodal AI models side-by-side. The platform specifically measures how accurately each model translates visual actions, scenes, and events into analyzed text, highlighting which models best capture context, timing, and meaning.

Modern specialized video understanding models are built to process visual, audio, and textual information simultaneously, moving beyond simple concatenation to deep integration of modalities. These models should be capable of sophisticated video understanding, including cause and effect relationships, temporal ordering, and long-range dependencies across video sequences. The Arena's goal is to bring clarity to this field through practical, transparent comparisons.

Currently, the Arena supports models from TwelveLabs (Pegasus 1.2), OpenAI (GPT-4o), Google (Gemini 2.0 Flash and 2.5 Pro), and AWS (nova-lite-v1:0). This guide evaluates these models under consistent qualitative conditions to show their strengths in various video understanding scenarios.

Thanks to its modular architecture, the Arena can easily integrate more models, and contributors are encouraged to add their own. This guide also provides instructions on how to effectively contribute to the Arena.

Demo

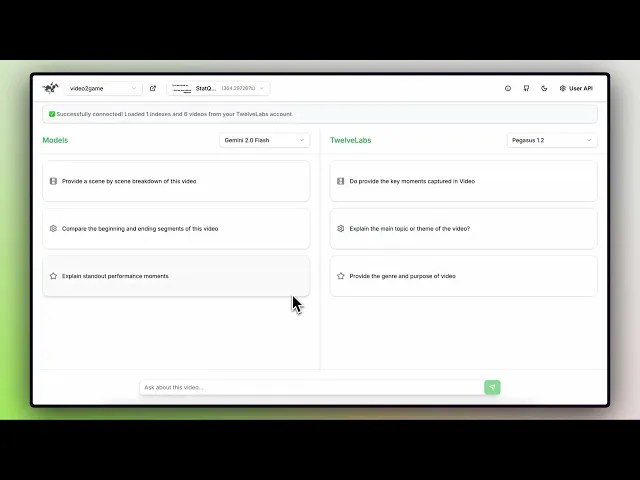

The demo shows how different models are selected and run on the same video so that their responses can be compared with those of Pegasus 1.2 (TwelveLabs). This setup helps analyze videos across a variety of understanding scenarios.

Video Understanding Challenges

Video understanding presents a fundamental temporal challenge, as numerous model pipelines currently sample at approximately one frame per second or even lower, in an effort to minimize computational resources and associated costs. This reduced sampling rate results in a significant information deficit, leading to the omission of rapid actions and brief events, a loss of timestamp granularity, and an impaired synchronization between visual and auditory components, thereby hindering comprehensive video comprehension.
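To make the sampling effect concrete, here is a minimal sketch that checks whether any uniformly sampled frame lands inside a short event. The function names and the example event times are illustrative assumptions, not part of the Arena codebase or any model's actual pipeline.

```python
import math


def sampled_timestamps(duration_s: float, fps: float) -> list[float]:
    """Return the timestamps (in seconds) of frames sampled uniformly at `fps`."""
    count = int(math.floor(duration_s * fps)) + 1
    return [round(i / fps, 6) for i in range(count)]


def event_is_sampled(start_s: float, end_s: float, fps: float) -> bool:
    """Return True if at least one sampled frame falls inside [start_s, end_s]."""
    # Index of the first sampled frame at or after the event start.
    first_index = math.ceil(start_s * fps)
    return first_index / fps <= end_s


# Hypothetical event: a half-second action between 12.2 s and 12.7 s.
missed_at_1fps = not event_is_sampled(12.2, 12.7, fps=1.0)
seen_at_4fps = event_is_sampled(12.2, 12.7, fps=4.0)
```

Under these assumptions, the half-second event falls between two consecutive frames at one frame per second, while a four-frames-per-second sampler captures it. Raising the rate, however, multiplies the number of frames the model has to process.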

1 - Temporal Reasoning and Sequential Understanding Limitations

Video understanding models encounter substantial difficulties in temporal reasoning and the comprehension of events across video sequences. Models such as GPT-4V exhibit limitations in maintaining coherent temporal understanding when processing extended videos, frequently treating individual frames as discrete snapshots rather than continuous temporal flows. This fundamental constraint arises from frame sampling strategies that downsample videos into sparse representations, leading models to overlook crucial transitional moments and temporal dependencies. While these models generally perform adequately on isolated, moment-specific queries, they demonstrate significant challenges when tasks necessitate a comprehensive understanding of event sequences over time or the tracking of long-range context across several minutes or even hours of video.

2 - Spatial Details Recognition and Object Tracking Deficiencies

Multimodal understanding models exhibit significant deficiencies in spatial reasoning and object tracking within video sequences. Models such as Gemini-2.5-pro and GPT-4o demonstrate a limited capacity to maintain object identity across frames, struggle with in-frame text recognition, and perform poorly in spatial localization tasks. Recent research, specifically the SlowFocus study, indicates that current Vid-LLMs, which process sequences of frames, consume more tokens for higher-quality frames. This creates a trade-off between spatial detail and temporal coverage, preventing Vid-LLMs from simultaneously retaining high-quality frame-level semantic information and comprehensive video-level temporal information. Consequently, numerous failures occur in various scenarios, including tracking specific objects through scene changes, accurately counting objects in high-speed videos, and comprehending precise spatial relationships between objects.

This limitation is exacerbated in longer videos due to memory constraints that necessitate aggressive compression of visual information, thereby hindering accurate scene understanding and leading to token overflow issues.

3 - Hallucination and the Grounding Problems in the Multimodal Context

Video hallucination, a prevalent issue in major multimodal LLMs, results in the generation of factually incorrect information. Benchmarks such as VidHalluc demonstrate the susceptibility of these models to hallucination across three crucial dimensions: action recognition, temporal sequence accuracy, and scene transition understanding. This problem is particularly pronounced in longer videos, where models are required to condense visual information into limited token budgets, leading to over-generalization and fabricated details. A primary approach to mitigate hallucination involves the use of visual encoders capable of discerning subtle differences between similar scenes, combined with LLMs, to ensure strict adherence to visual evidence.

4 - Context Window, Memory and Computational Constraints

Video understanding models encounter limitations concerning context window capacity and computational resource demands. While GPT-4o offers a 128K-token context and Gemini-2.5-Pro supports up to 1M tokens, these capacities remain insufficient for comprehensive, long-form video analysis, given the extensive token requirements for accurate video frame representation. Models inherently struggle to achieve a balance between sampling frequency (temporal resolution) and the overall duration of video they can process. Current methodologies either omit crucial moments by skipping frames or exceed computational thresholds before analysis completion.
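The trade-off between sampling frequency and video duration can be illustrated with some back-of-the-envelope arithmetic. The sketch below assumes a flat cost of 256 tokens per frame, which is a placeholder chosen for illustration only; real per-frame costs vary by model and resolution, and the helper names are hypothetical.

```python
def frame_tokens(duration_s: float, fps: float, tokens_per_frame: int) -> int:
    """Estimate the visual tokens needed to represent a video."""
    frames = int(duration_s * fps)
    return frames * tokens_per_frame


def max_duration_s(context_tokens: int, fps: float, tokens_per_frame: int) -> float:
    """Longest video (in seconds) whose frames fit in the context window."""
    return context_tokens / (fps * tokens_per_frame)


TOKENS_PER_FRAME = 256  # assumption: illustrative value, not a published figure

# One hour of video at one frame per second.
hour_cost = frame_tokens(3600, fps=1.0, tokens_per_frame=TOKENS_PER_FRAME)

# Durations that fit in the context sizes quoted above (128K and 1M tokens).
fits_128k = max_duration_s(128_000, fps=1.0, tokens_per_frame=TOKENS_PER_FRAME)
fits_1m = max_duration_s(1_000_000, fps=1.0, tokens_per_frame=TOKENS_PER_FRAME)
```

With this placeholder cost, an hour of video at one frame per second needs 921,600 tokens. A 128K-token window would hold only about 500 seconds of video, and this estimate leaves no room for the prompt, audio, or the generated answer.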

As we have explored, the landscape of video understanding models contends with challenges in temporal reasoning and long-form content analysis. The TwelveLabs Pegasus 1.2 model represents an advancement specifically designed to address the fundamental limitations of general-purpose Multimodal Large Language Models (MLLMs). Pegasus 1.2 employs a spatio-temporal comprehension approach that directly confronts the previously discussed challenges. Unlike GPT-4o, Gemini, and Claude, which were primarily developed for general multimodal tasks and subsequently adapted for video understanding, Pegasus 1.2 is purpose-built as a video language model. The model is capable of processing videos up to an hour in length with low latency, high accuracy, and more cost-effective repeated queries to the same video content. Due to its specialized architecture, Pegasus 1.2's ability to process hour-long videos without the accuracy degradation observed in general-purpose MLLMs positions it as the preferred solution for enterprise applications, including content summarization, video captioning, timestamp-accurate event identification, and comprehensive video content analysis.

Qualitative Insights

In this section, we evaluate the video analysis text generated by different models across multiple parameters to assess their video understanding capabilities.

A. Temporal Context Understanding

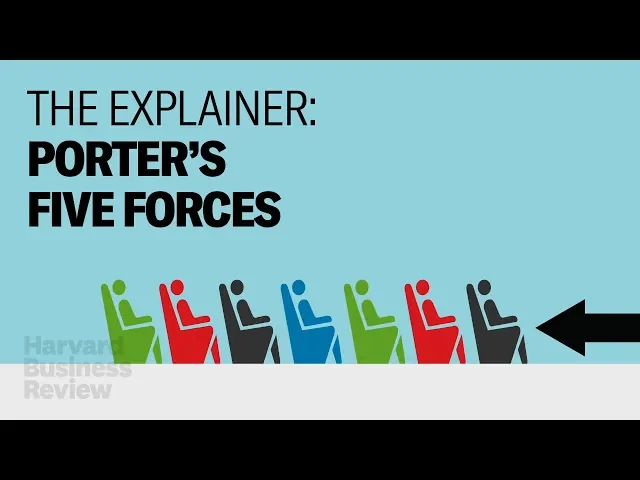

In this analysis, we focus on temporal context understanding in video content using the example video, “Five Forces of Market Competition,” which visually explains Porter’s Five Forces principle.

Query – “Describe the sequence of events in the video with timestamps.”

The ensuing analysis contrasts gemini-2.5-pro with pegasus-1.2. The Gemini model produces granular timestamps with high temporal precision, yet it exhibits difficulty in correlating visual segments with their underlying business concepts. Its frame-level segmentation results in a fragmented narration that describes what transpires rather than why. Conversely, Pegasus offers a more conceptually structured and causally coherent explanation, adeptly linking each visual transition to the corresponding competitive force.

While Gemini's precision is advantageous for technical tasks such as event localization, Pegasus surpasses it in interpretability, clarity, and topic relevance, rendering it more appropriate for comprehension and communication.

The OpenAI model (GPT-4o) encountered difficulties in processing the video due to its length, which exceeded the token limit when analyzed at a rate of one frame per second. In contrast, AWS Nova (nova-lite-v1:0), as demonstrated below, furnished a superficial temporal summary, characterized by limited detail and an absence of substantive conceptual connections.

B. Scene Transition and Continuity Tracking

To evaluate scene transition understanding and continuity tracking, we analyzed the video titled “How the Eiffel Tower Was Built.”

Query – “For each transition, describe how context changes.”

Upon analysis, the Gemini (gemini-2.5-pro) response is characterized by its extensive and repetitive nature, exhibiting poor structural organization. This results in lengthy paragraphs that combine multiple transitions without distinct timestamp separations. The absence of adequate formatting and contextual clarity hinders the ability to easily trace the narrative progression from one stage of construction to the next.

Conversely, Pegasus offers a well-organized and visually coherent response. Each transition is presented with a bold title, a concise description, and precise timestamps. The inclusion of timestamps further enhances readability, facilitating the identification and comprehension of how the video's context evolves across the various stages of the Eiffel Tower's construction.

When the identical task was executed using AWS Nova, the model encountered difficulty in accurately interpreting the query and consequently failed to provide the anticipated response. Its output consisted of general titles that did not adequately represent the video's transitions or shifts in context. As a result, the response lacked both pertinence and thoroughness, offering only superficial information without effectively elucidating the contextual changes throughout the video.

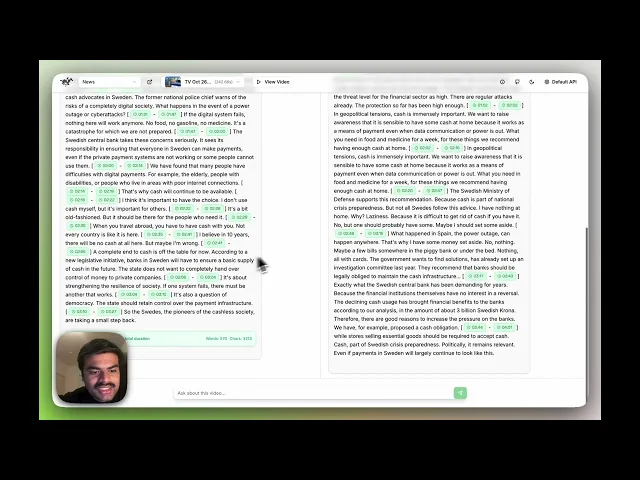

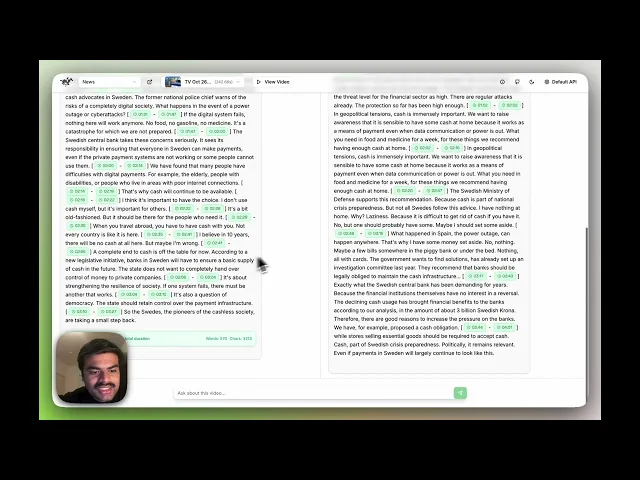

C. Dialogue and Audio Content in Video

Most Multimodal Large Language Models (MLLMs) are not yet fully adapted to comprehend or interpret the audio component within videos. For example, AWS Nova’s (nova-lite-v1:0) video understanding currently lacks audio support, which restricts its capacity to capture spoken content, tone, or background context. This section therefore qualitatively evaluates audio content understanding, examining how well different models interpret, transcribe, and explain the spoken and auditory elements present in video data.

For this test, we analyzed an open-source video from a German news channel.

Query — “Could you please transcribe the content with the proper timestamp. Needed for directly to be added as a transcript file. Just provide the words which are there in the audio. The timestamp of the format [mm:ss - mm:ss]”

The analysis indicates that Pegasus 1.2 significantly surpasses Gemini 2.5 Pro in both accuracy and adherence to the specified format.

While Gemini provides timestamps in the requested [mm:ss – mm:ss] format, its transcription is fragmented and excessively segmented, resulting in incomplete, choppy, and contextually disjointed content. Furthermore, Gemini's timestamps are inconsistent with the video duration (the input video is approximately 4 minutes, yet Gemini's output covers only about 3 minutes). Another observed issue is that, given the original video language was German, Gemini initiates transcription in German before switching to English, thereby compromising consistency.

In contrast, the Pegasus 1.2 output presents a comprehensive and continuous transcription with longer, coherent dialogue segments. It maintains the original language throughout and provides accurately aligned timestamps, capturing full sentences and preserving the natural conversational flow.

When the same query additionally requested an English transcription, Gemini again performed poorly, yielding an abstract summary rather than an accurate translated transcript. It paraphrased and condensed the content, failing to accurately reflect the spoken dialogue, and its timestamps remained imprecise. In contrast, Pegasus delivered an accurate translated transcription with appropriately formatted timestamps, suitable for direct export and subsequent production use.
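The two checks discussed in this section, format adherence and coverage of the full video duration, can be automated. The following is a minimal sketch rather than the Arena's own tooling. The regular expression, the function names, and the sample transcript text are all assumptions made for illustration.

```python
import re

# Matches lines such as "[00:05 - 00:12] some words" (hyphen or en dash accepted).
SEGMENT_RE = re.compile(r"^\[(\d{2}):(\d{2})\s*[-–]\s*(\d{2}):(\d{2})\]\s*(.*)$")


def parse_segments(transcript: str) -> list[tuple[int, int, str]]:
    """Extract (start_s, end_s, text) from lines in [mm:ss - mm:ss] format."""
    segments = []
    for line in transcript.splitlines():
        match = SEGMENT_RE.match(line.strip())
        if match is None:
            continue  # Skip lines that do not follow the requested format.
        m1, s1, m2, s2, text = match.groups()
        segments.append((int(m1) * 60 + int(s1), int(m2) * 60 + int(s2), text))
    return segments


def coverage_ratio(segments: list[tuple[int, int, str]], video_duration_s: float) -> float:
    """Fraction of the video reached by the last transcribed timestamp."""
    if not segments or video_duration_s <= 0:
        return 0.0
    last_end = max(end for _, end, _ in segments)
    return min(last_end / video_duration_s, 1.0)


# Placeholder transcript: ends at 03:00 for a hypothetical 4-minute (240 s) video.
sample = "[00:00 - 00:05] first segment\nnot a segment\n[00:05 – 03:00] second segment"
segments = parse_segments(sample)
ratio = coverage_ratio(segments, video_duration_s=240)
```

In this placeholder case the ratio is 0.75, which would flag a transcript that stops about a minute before the video ends.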

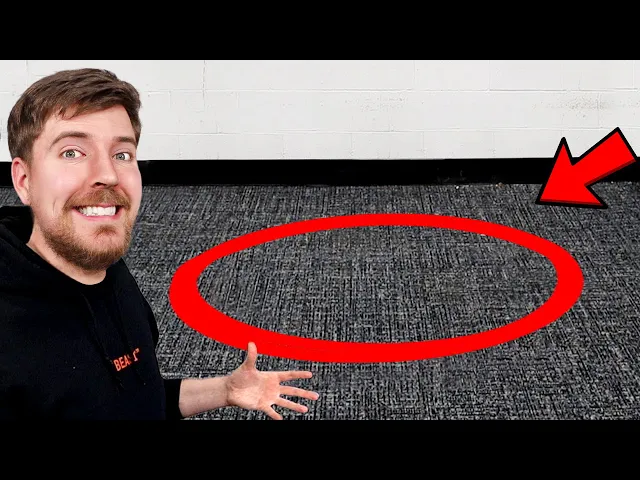

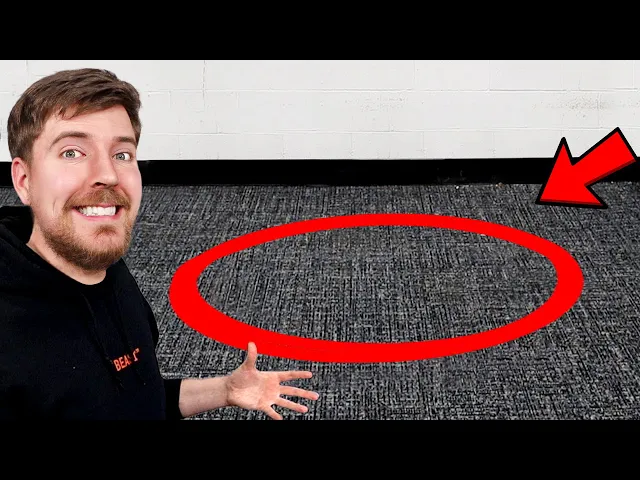

D. Object & Entity Fidelity

The objective of this analysis is to evaluate the model's capacity to identify and comprehend objects and entities within a video. For the experimental setup, a video illustrating the task of placing objects inside a circle was utilized.

Query – "Kindly provide the start of the second circle task, the list of product names within that circle, and the total amount."

In this analysis, Pegasus 1.2 demonstrates significantly superior accuracy, whereas Gemini 2.5 Pro completely fails. Despite the user's explicit request for information regarding the second circle task, Gemini incorrectly identifies its location as a "red square on a table" and provides a list of 12 products, which appears to correspond to the initial challenge. Gemini's response reveals a fundamental lack of understanding of spatial and temporal sequencing within the video. Conversely, Pegasus accurately identifies the second circle task, provides precise timestamps [192s-199s], correctly describes its occurrence on the floor of a grocery store aisle (not a table), and furnishes the correct product list.

Pegasus also accurately identifies the total amount as $20,000 (with timestamps [304s-307s]), while Gemini erroneously reports $6,100. Pegasus's content is factually accurate, while Gemini's entire response addresses the incorrect question, thereby demonstrating poor video comprehension and object tracking.

E. Contextual Reasoning and Descriptiveness

This evaluation will assess the system's proficiency in comprehending input queries and generating comprehensive, contextually relevant responses. The test's foundational content is a video elucidating the EU AI Act. Our analysis will concentrate on the system's capacity to interpret the subject matter and furnish thorough, accurate, and pertinent insights derived from the content.

Query — "Furnish a response comprising a concise summary (approximately 30 words) and an elaborate narrative (approximately 100 words) of this video. Incorporate timestamps for each principal topic transition."

The following analysis indicates that Pegasus 1.2 exhibits superior contextual reasoning and descriptive capabilities when compared to both Gemini 2.5 Pro and AWS Nova. Pegasus provides a comprehensive and coherent narrative, accurately referencing individuals such as Sam Altman, CEO of OpenAI, and establishing meaningful connections between concepts, including the EU AI Act, ChatGPT’s risk classification, and China's social credit system. In contrast, Gemini 2.5 Pro, while competent, offers a more fragmented explanation with reduced depth and fewer contextual links.

AWS Nova consistently demonstrates a significant deficiency in contextual comprehension, offering only superficial descriptions of visual elements, such as "a woman in a dark background" and "a man standing in front of a map." It provides no substantive interpretation of the EU AI Act discussion, lacking analytical depth beyond basic scene recognition.

Pegasus 1.2, in contrast, effectively timestamps transitions and articulates the regulatory framework, risk tiers, and policy implications in a well-structured and logically coherent manner. Pegasus not only identifies the subject matter but also synthesizes the evolving discussion across the video timeline, transforming raw information into a clear and informative narrative. AWS Nova, however, demonstrates limited capability beyond object identification, exhibiting minimal understanding of the video's thematic significance or legal context.

How to contribute to the Video-to-Text Arena codebase

To list a new video understanding or multimodal model for comparison on the platform, you can integrate it into the Video-to-Text Arena in approximately 30 lines of code. The following steps describe the files that must be updated to integrate a new model and make it visible in the user interface.

Any Multimodal Large Language Models (MLLMs) adapted for video understanding or any other specialized video model can be integrated into the platform. Each model can be mapped to its own dedicated processing utility, defined within the same class structure. The diagram below illustrates the utilization of different models across the platform, along with their corresponding processing pipelines.

Preparation Steps

Obtain your API key from the TwelveLabs Playground.

Clone the project from the GitHub repo.

Create a .env file to store your TwelveLabs API key as an environment variable.

Upon completion of these steps, you're ready to start developing!
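As an illustration of how the key stored in the .env file might be read, here is a minimal sketch using only the standard library. The variable name TWELVELABS_API_KEY and both helper functions are assumptions. Check the repository for the exact variable name it expects and for how it actually loads configuration.

```python
import os


def parse_env(text: str) -> dict[str, str]:
    """Parse KEY=VALUE lines from .env-style text, ignoring comments and blanks."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def load_api_key(env_text: str, name: str = "TWELVELABS_API_KEY") -> str:
    """Prefer a real environment variable, then fall back to the .env contents."""
    key = os.environ.get(name) or parse_env(env_text).get(name, "")
    if not key:
        raise RuntimeError(f"{name} is not set; add it to your .env file")
    return key


# Hypothetical .env contents; never commit a real key to version control.
example_env = '# TwelveLabs credentials\nTWELVELABS_API_KEY="your-key-here"\n'
parsed = parse_env(example_env)
```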

Backend Setup

Step 1: Create Model Class

A new model class should be created, with its associated processing utilities defined within this class. The class may be defined as a separate model file within the models/ folder.
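A model class of this kind might look like the sketch below. The base class, the method name analyze_video, and the constructor arguments are all hypothetical, since the actual interface is defined by the existing classes in the models/ folder. Mirror one of those classes rather than this example when contributing.

```python
from abc import ABC, abstractmethod


class BaseVideoModel(ABC):
    """Hypothetical common interface; the real Arena classes may differ."""

    name: str = "base"

    def __init__(self, api_key: str, base_url: str) -> None:
        self.api_key = api_key
        self.base_url = base_url

    @abstractmethod
    def analyze_video(self, video_path: str, prompt: str) -> str:
        """Return the model's text response for a video and a prompt."""


class MyVideoModel(BaseVideoModel):
    """Placeholder model showing where provider-specific logic would live."""

    name = "my-video-model"

    def analyze_video(self, video_path: str, prompt: str) -> str:
        if not self.api_key:
            raise ValueError("an API key is required")
        # A real integration would upload or sample the video and call the
        # provider's inference API here; this stub only echoes its inputs.
        return f"[{self.name}] response to {prompt!r} for {video_path}"


model = MyVideoModel(api_key="placeholder", base_url="https://api.example.invalid")
reply = model.analyze_video("demo.mp4", "Describe the sequence of events.")
```

Keeping the processing utilities as methods on the same class, as the step describes, means each model stays self-contained and can be swapped without touching the others.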

Step 2: Update Configuration

Integrate your model's configuration into config.py, primarily specifying the inference API and the Base URL of the endpoint.
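A configuration entry might be sketched as follows. The dataclass, the environment variable name, and the placeholder URL are assumptions for illustration. The actual layout of config.py in the repository may be different.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelConfig:
    """Hypothetical per-model settings: where to find the key and the endpoint."""

    api_key_env: str  # name of the environment variable holding the API key
    base_url: str  # base URL of the inference endpoint

    @property
    def api_key(self) -> str:
        # Read lazily so the key is never hard-coded in source control.
        return os.environ.get(self.api_key_env, "")


# Placeholder values; replace them with your provider's real settings.
MY_VIDEO_MODEL = ModelConfig(
    api_key_env="MY_VIDEO_MODEL_API_KEY",
    base_url="https://api.example.invalid/v1",
)
```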

Step 3: Register your Model

Update models/__init__.py to reference the newly defined model's class.

Step 4: Integrate with Main Application

Modify app.py to incorporate your new model by defining its class within the model_dict.

Step 5: Add API Route

Update routes/api_routes.py to include your model in the routes by defining it within the model_dict.
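The registration pattern in Steps 3 to 5 amounts to a name-to-handler lookup. The sketch below shows the idea with plain functions. Only the name model_dict comes from the steps above. The keys, the stub handlers, and the response shape are hypothetical and do not reflect the Arena's actual routes.

```python
from typing import Callable

# Each handler takes (video_id, prompt) and returns the model's text output.
ModelFn = Callable[[str, str], str]


def pegasus_stub(video_id: str, prompt: str) -> str:
    return f"pegasus stub output for {video_id}"


def my_model_stub(video_id: str, prompt: str) -> str:
    return f"my-video-model stub output for {video_id}"


# Adding a model means adding one entry here.
model_dict: dict[str, ModelFn] = {
    "pegasus-1.2": pegasus_stub,
    "my-video-model": my_model_stub,
}


def run_model(model_name: str, video_id: str, prompt: str) -> dict:
    """Dispatch a request to the registered handler, or report an unknown model."""
    handler = model_dict.get(model_name)
    if handler is None:
        return {
            "status": "error",
            "error": f"unknown model: {model_name}",
            "available": sorted(model_dict),
        }
    return {"status": "ok", "model": model_name, "response": handler(video_id, prompt)}


result = run_model("my-video-model", "video-123", "Summarize the video.")
```

A dictionary lookup keeps the route handler unchanged as models are added, which is one plausible reason the integration stays small.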

The backend server is now fully configured. Only minimal frontend adjustments are required to make the newly defined model visible.

Frontend Setup

Step 1: Update API Service Type Definitions

Add your model to the ModelStatus interface in lib/api.ts.

Step 2: Update Model Availability Functions

The components/model-evaluation-platform.tsx component manages model selection. Update its model availability functions so that they include the newly integrated model.

The newly added model will subsequently appear in the arena, ready for exploration and experimentation.

Conclusion

At TwelveLabs, our Pegasus-1.2 model stands at the forefront of specialized video understanding, uniquely engineered to overcome the inherent limitations of general-purpose Multimodal LLMs. Pegasus meticulously captures the profound nuances of video, discerning contextual information, temporal relationships, and subtle visual cues that other AI systems often miss.

The seamless integration of Pegasus with advanced tool-use and autonomous workflows marks a new era in intelligent video processing. This synergy empowers our model to move beyond mere analysis, actively interpreting, reasoning about, and ultimately acting upon video content. This groundbreaking capability unlocks a multitude of transformative applications, revolutionizing how our users interact with and leverage video data.

For example, in content moderation, Pegasus autonomously identifies and flags inappropriate or harmful content, significantly enhancing the efficiency and accuracy of platform safety measures for our clients. In entertainment, personalized video recommendations powered by Pegasus become far more precise and engaging, catering to individual tastes by understanding the underlying themes and emotions within videos. Furthermore, intelligent scene-based editing leverages Pegasus to automate complex video production tasks, allowing for the seamless creation of highlight reels, dynamic transitions, and contextually aware cuts, thereby streamlining the creative process and opening new possibilities for video storytelling. The ability of Pegasus to not just understand but also interact with and manipulate video content represents a significant leap forward in video understanding, with far-reaching implications across various industries and applications that we are proud to enable.

Additional Resources

Learn more about Pegasus 1.2, the video analysis engine. To explore TwelveLabs further and enhance your understanding of video content analysis, check out these resources:

Join the Conversation: Share your feedback on this integration in the TwelveLabs Discord.

Explore Sample Apps: Dive deeper into TwelveLabs capabilities with our comprehensive tutorials.

We encourage you to use these resources to expand your knowledge and create innovative applications using TwelveLabs video understanding technology.

Introduction

The Video-to-Text Arena is an open-source platform designed to assess the true capabilities of current AI models in understanding video content. It offers a standardized evaluation process, enabling users to compare various multimodal AI models side-by-side. The platform specifically measures how accurately each model translates visual actions, scenes, and events into analyzed text, highlighting which models best capture context, timing, and meaning.

Modern specialized video understanding models are built to process visual, audio, and textual information simultaneously, moving beyond simple concatenation to deep integration of modalities. These models should be capable of sophisticated video understanding, including cause and effect relationships, temporal ordering, and long-range dependencies across video sequences. The Arena's goal is to bring clarity to this field through practical, transparent comparisons.

Currently, the Arena supports models such as Twelve Labs (Pegasus 1.2), OpenAI (GPT-4o), Google (Gemini 2.0 Flash and 2.5 Pro), and AWS (nova-lite-v1:0). This guide focuses on evaluating these models under consistent qualitative conditions to demonstrate their strengths in various video understanding scenarios.

Thanks to its modular architecture, the Arena can easily integrate more models, and contributors are encouraged to add their own. This guide also provides instructions on how to effectively contribute to the Arena.

Demo

Below is a demo showing how different models are selected and used for the video analysis to compare their responses with Pegasus-1.2 (TwelveLabs). This setup helps analyze videos across various scenario understanding.

Video Understanding Challenges

Video understanding presents a fundamental temporal challenge, as numerous model pipelines currently sample at approximately one frame per second or even lower, in an effort to minimize computational resources and associated costs. This reduced sampling rate results in a significant information deficit, leading to the omission of rapid actions and brief events, a loss of timestamp granularity, and an impaired synchronization between visual and auditory components, thereby hindering comprehensive video comprehension.

1 - Temporal Reasoning and Sequential Understanding Limitations

Video understanding models encounter substantial difficulties in temporal reasoning and the comprehension of events across video sequences. Models such as GPT-4V exhibit limitations in maintaining coherent temporal understanding when processing extended videos, frequently treating individual frames as discrete snapshots rather than continuous temporal flows. This fundamental constraint arises from frame sampling strategies that downsample videos into sparse representations, leading models to overlook crucial transitional moments and temporal dependencies. While these models generally perform adequately on isolated, moment-specific queries, they demonstrate significant challenges when tasks necessitate a comprehensive understanding of event sequences over time or the tracking of long-range context across several minutes or even hours of video.

2 - Spatial Details Recognition and Object Tracking Deficiencies

Multimodal understanding models exhibit significant deficiencies in spatial reasoning and object tracking within video sequences. Models such as Gemini-2.5-pro and GPT-4o demonstrate a limited capacity to maintain object identity across frames, struggle with in-frame text recognition, and perform poorly in spatial localization tasks. Recent research, specifically the SlowFocus study, indicates that current Vid-LLMs, which process sequences of frames, consume more tokens for higher-quality frames. This creates a trade-off between spatial detail and temporal coverage, preventing Vid-LLMs from simultaneously retaining high-quality frame-level semantic information and comprehensive video-level temporal information. Consequently, numerous failures occur in various scenarios, including tracking specific objects through scene changes, accurately counting objects in high-speed videos, and comprehending precise spatial relationships between objects.

This limitation is exacerbated in longer videos due to memory constraints that necessitate aggressive compression of visual information, thereby hindering accurate scene understanding and leading to token overflow issues.

3 - Hallucination and the Grounding Problems in the Multimodal Context

Video hallucination, a prevalent issue in major multimodal LLMs, results in the generation of factually incorrect information. Benchmarks such as VidHalluc demonstrate the susceptibility of these models to hallucination across three crucial dimensions: action recognition, temporal sequence accuracy, and scene transition understanding. This problem is particularly pronounced in longer videos, where models are required to condense visual information into limited token budgets, leading to over-generalization and fabricated details. A primary approach to mitigate hallucination involves the use of visual encoders capable of discerning subtle differences between similar scenes, combined with LLMs, to ensure strict adherence to visual evidence.

4 - Context Window, Memory and Computational Constraints

Video understanding models encounter limitations concerning context window capacity and computational resource demands. While GPT-4o offers a 128K-token context and Gemini-2.5-Pro supports up to 1M tokens, these capacities remain insufficient for comprehensive, long-form video analysis, given the extensive token requirements for accurate video frame representation. Models inherently struggle to achieve a balance between sampling frequency (temporal resolution) and the overall duration of video they can process. Current methodologies either omit crucial moments by skipping frames or exceed computational thresholds before analysis completion.

As we have explored, the landscape of video understanding models contends with challenges in temporal reasoning and long-form content analysis. The TwelveLabs Pegasus 1.2 model represents an advancement specifically designed to address the fundamental limitations of general-purpose Multimodal Large Language Models (MLLMs). Pegasus 1.2 employs a spatio-temporal comprehension approach that directly confronts the previously discussed challenges. Unlike GPT-4o, Gemini, and Claude, which were primarily developed for general multimodal tasks and subsequently adapted for video understanding, Pegasus 1.2 is purpose-built as a video language model. The model is capable of processing videos up to an hour in length with low latency, high accuracy, and more cost-effective repeated queries to the same video content. Due to its specialized architecture, Pegasus 1.2's ability to process hour-long videos without the accuracy degradation observed in general-purpose MLLMs positions it as the preferred solution for enterprise applications, including content summarization, video captioning, timestamp-accurate event identification, and comprehensive video content analysis.

Qualitative Insights

In this section, we evaluate the video analysis text generated by different models across multiple parameters to assess their video understanding capabilities.

A. Temporal Context Understanding

In this analysis, we focus on temporal context understanding in video content using the example video, “Five Forces of Market Competition,” which visually explains Porter’s Five Forces principle.

Query – “Describe the sequence of events in the video with timestamps.”

The ensuing analysis contrasts gemini-2.5-pro with pegasus-1.2. The Gemini model produces granular timestamps with high temporal precision, yet it exhibits difficulty in correlating visual segments with their underlying business concepts. Its frame-level segmentation results in a fragmented narration that describes what transpires rather than why. Conversely, Pegasus offers a more conceptually structured and causally coherent explanation, adeptly linking each visual transition to the corresponding competitive force.

While Gemini's precision is advantageous for technical tasks such as event localization, Pegasus surpasses it in interpretability, clarity, and topic relevance, rendering it more appropriate for comprehension and communication.

The OpenAI model (GPT-4o) encountered difficulties in processing the video due to its length, which exceeded the token limit when analyzed at a rate of one frame per second. In contrast, AWS Nova (nova-lite-v1:0), as demonstrated below, furnished a superficial temporal summary, characterized by limited detail and an absence of substantive conceptual connections.

B. Scene Transition and Continuity Tracking

For the understanding of the scene transition and the continuity tracking, the video analyzed is titled “How the Eiffel Tower Was Built”.

Query – “For each transition, describe how context changes.”

The Gemini (gemini-2.5-pro) response is long, repetitive, and poorly organized. It merges multiple transitions into lengthy paragraphs without distinct timestamp separations, and the lack of formatting and contextual clarity makes it hard to trace the narrative from one stage of construction to the next.

Conversely, Pegasus offers a well-organized and visually coherent response. Each transition is presented with a bold title, a concise description, and precise timestamps, which makes it easy to see how the video's context evolves across the stages of the Eiffel Tower's construction.

When the same task was run on AWS Nova, the model misinterpreted the query and did not provide the expected response. Its output consisted of general titles that did not represent the video's transitions or shifts in context. As a result, the response was neither relevant nor thorough, offering only superficial information without explaining how the context changes throughout the video.

C. Dialogue and Audio Content in Video

Most Multimodal Large Language Models (MLLMs) are not yet fully adapted to comprehend or interpret the audio component of videos. In particular, AWS Nova’s (nova-lite-v1:0) video understanding currently lacks audio support, which prevents it from capturing spoken content, tone, or background context. This section therefore evaluates audio understanding qualitatively, examining how well the remaining models interpret, transcribe, and explain the spoken and auditory elements of a video.

The test video is an open-source clip from a German news channel.

Query – “Could you please transcribe the content with the proper timestamp. Needed for directly to be added as a transcript file. Just provide the words which are there in the audio. The timestamp of the format [mm:ss - mm:ss]”

The analysis indicates that Pegasus 1.2 significantly surpasses Gemini 2.5 Pro in both accuracy and adherence to the specified format.

While Gemini provides timestamps in the requested [mm:ss – mm:ss] format, its transcription is fragmented and excessively segmented, resulting in incomplete, choppy, and contextually disjointed content. Gemini's timestamps are also inconsistent with the video duration: the input video is approximately 4 minutes long, yet Gemini's output covers only about 3 minutes. In addition, although the video is in German, Gemini begins transcribing in German and then switches to English, which compromises consistency.

In contrast, the Pegasus 1.2 output presents a comprehensive and continuous transcription with longer, coherent dialogue segments. It maintains the original language throughout and provides accurately aligned timestamps, capturing full sentences and preserving the natural conversational flow.

When the same query also asked for an English transcription, Gemini again performed poorly, producing an abstract summary rather than an accurate translated transcript. It paraphrased and condensed the content, failing to reflect the spoken dialogue, and its timestamps remained imprecise. In contrast, Pegasus delivered an accurate translated transcription with correctly formatted timestamps, suitable for direct export and production use.
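The two format checks discussed in this section, adherence to [mm:ss - mm:ss] and coverage of the full video duration, can be automated with a short script. The following is a minimal sketch rather than part of the Arena codebase: the function names are hypothetical and the sample transcript is placeholder text, not actual model output.

```python
import re

# Matches lines such as "[00:12 - 01:05] spoken words"; accepts a hyphen or an en dash.
SEGMENT_RE = re.compile(r"^\[(\d{2}):(\d{2})\s*[-–]\s*(\d{2}):(\d{2})\]\s*(.*)$")

def parse_segments(transcript: str):
    """Return (start_s, end_s, text) tuples; raise ValueError on a malformed line."""
    segments = []
    for line in transcript.splitlines():
        line = line.strip()
        if not line:
            continue  # ignore blank lines between segments
        match = SEGMENT_RE.match(line)
        if match is None:
            raise ValueError(f"line does not match [mm:ss - mm:ss]: {line!r}")
        m1, s1, m2, s2 = (int(g) for g in match.groups()[:4])
        segments.append((m1 * 60 + s1, m2 * 60 + s2, match.group(5)))
    return segments

def coverage_ratio(segments, video_duration_s: float) -> float:
    """Fraction of the video duration reached by the latest end timestamp."""
    if not segments:
        return 0.0
    return max(end for _, end, _ in segments) / video_duration_s

# Placeholder transcript that stops at 03:00 for a 4-minute (240 s) video.
sample = """
[00:00 - 00:12] first placeholder segment
[00:12 - 03:00] second placeholder segment
"""
segments = parse_segments(sample)
print(coverage_ratio(segments, video_duration_s=240))  # 0.75
```

A coverage ratio well below 1.0, as in this placeholder, flags the kind of truncated output described above, where a transcript ends about a minute before the video does.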

D. Object & Entity Fidelity

The objective of this analysis is to evaluate the model's capacity to identify and comprehend objects and entities within a video. For the experimental setup, a video illustrating the task of placing objects inside a circle was utilized.

Query – "Kindly provide the start of the second circle task, the list of product names within that circle, and the total amount."

In this comparison, Pegasus 1.2 is significantly more accurate, whereas Gemini 2.5 Pro fails completely. Although the query explicitly asks about the second circle task, Gemini places it at a "red square on a table" and provides a list of 12 products that appears to correspond to the first challenge. Its response reveals a fundamental lack of understanding of spatial and temporal sequencing within the video. Pegasus, by contrast, correctly identifies the second circle task with precise timestamps [192s-199s], correctly describes it as taking place on the floor of a grocery store aisle (not a table), and provides the correct product list.

Pegasus also correctly identifies the total amount as $20,000 (with timestamps [304s-307s]), while Gemini erroneously reports $6,100. Pegasus's content is factually accurate, while Gemini's entire response answers the wrong question, demonstrating poor video comprehension and object tracking.

E. Contextual Reasoning and Descriptiveness

This evaluation assesses how well each model understands the input query and generates comprehensive, contextually relevant responses. The test video explains the EU AI Act. The analysis focuses on each model's ability to interpret the subject matter and provide thorough, accurate, and relevant insights from the content.

Query – "Furnish a response comprising a concise summary (approximately 30 words) and an elaborate narrative (approximately 100 words) of this video. Incorporate timestamps for each principal topic transition."

The following analysis indicates that Pegasus 1.2 exhibits superior contextual reasoning and descriptive capabilities when compared to both Gemini 2.5 Pro and AWS Nova. Pegasus provides a comprehensive and coherent narrative, accurately referencing individuals such as Sam Altman, CEO of OpenAI, and establishing meaningful connections between concepts, including the EU AI Act, ChatGPT’s risk classification, and China's social credit system. In contrast, Gemini 2.5 Pro, while competent, offers a more fragmented explanation with reduced depth and fewer contextual links.

AWS Nova consistently demonstrates a significant deficiency in contextual comprehension, offering only superficial descriptions of visual elements, such as "a woman in a dark background" and "a man standing in front of a map." It provides no substantive interpretation of the EU AI Act discussion, lacking analytical depth beyond basic scene recognition.

Pegasus 1.2, in contrast, effectively timestamps transitions and articulates the regulatory framework, risk tiers, and policy implications in a well-structured and logically coherent manner. Pegasus not only identifies the subject matter but also synthesizes the evolving discussion across the video timeline, transforming raw information into a clear and informative narrative. AWS Nova, however, demonstrates limited capability beyond object identification, exhibiting minimal understanding of the video's thematic significance or legal context.

How to contribute to the Video-to-Text Arena codebase

Listing a new video understanding or multimodal model for comparison on the platform takes approximately 30 lines of code in the Video-to-Text Arena. The following steps describe the files to update so that a new model is integrated and visible in the user interface.

Any Multimodal Large Language Model (MLLM) adapted for video understanding, or any other specialized video model, can be integrated into the platform. Each model is mapped to its own dedicated processing utility, defined within the same class structure. The diagram below illustrates how different models are used across the platform, along with their corresponding processing pipelines.

Preparation Steps

Obtain your API key from the TwelveLabs Playground and set it as an environment variable.

Clone the project from the GitHub repository.

Create a .env file to store your TwelveLabs API key.

Upon completion of these steps, you're ready to start developing!
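As an illustration of the .env step, the sketch below loads KEY=VALUE pairs into the process environment using only the standard library. It makes assumptions: the variable name `TWELVELABS_API_KEY` is a guess at what the project expects, and the repository may well load its .env file differently (for example, through a dedicated package), so check the project README for the exact name.

```python
import os
from pathlib import Path

def load_env_file(path: str = ".env") -> dict:
    """Parse KEY=VALUE lines from a .env file and export them to os.environ."""
    loaded = {}
    env_path = Path(path)
    if not env_path.exists():
        return loaded  # nothing to load; rely on variables already exported
    for raw in env_path.read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blanks, comments, and malformed lines
        key, _, value = line.partition("=")
        key, value = key.strip(), value.strip().strip("'\"")
        loaded[key] = value
        os.environ.setdefault(key, value)  # do not overwrite variables already set
    return loaded

if __name__ == "__main__":
    load_env_file()
    # ASSUMPTION: hypothetical variable name, used here only for illustration.
    print("API key configured:", bool(os.environ.get("TWELVELABS_API_KEY")))
```

Using `setdefault` means a key exported in the shell takes precedence over the file, which is a common convention but a design choice of this sketch.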

Backend Setup

Step 1: Create Model Class

A new model class should be created, with its associated processing utilities defined within this class. The class may be defined as a separate model file within the models/ folder.
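A model class along these lines might look like the sketch below. Every name in it (`BaseVideoModel`, `MyVideoModel`, `analyze_video`, `AnalysisResult`) is hypothetical; the Arena's actual base class and method signatures may differ, so treat this as a shape to adapt after reading an existing file in models/. The provider call is stubbed so the example runs without network access.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass

@dataclass
class AnalysisResult:
    """Hypothetical container for one model's response."""
    model_name: str
    text: str

class BaseVideoModel(ABC):
    """ASSUMPTION: an illustrative common interface, not the Arena's real base class."""
    name: str = "base"

    @abstractmethod
    def analyze_video(self, video_path: str, prompt: str) -> AnalysisResult:
        ...

class MyVideoModel(BaseVideoModel):
    """Hypothetical new model; its processing utilities live inside the class."""
    name = "my-video-model"

    def __init__(self, api_key: str, base_url: str):
        self.api_key = api_key
        self.base_url = base_url

    def _build_payload(self, video_path: str, prompt: str) -> dict:
        # Processing utility: shape the request the way the provider expects.
        return {"video": video_path, "prompt": prompt}

    def analyze_video(self, video_path: str, prompt: str) -> AnalysisResult:
        payload = self._build_payload(video_path, prompt)
        # A real integration would send `payload` to the provider's API here.
        text = f"[stub] {self.name} received prompt: {payload['prompt']}"
        return AnalysisResult(model_name=self.name, text=text)

model = MyVideoModel(api_key="placeholder", base_url="https://api.example.com/v1")
print(model.analyze_video("clip.mp4", "Describe the sequence of events.").text)
```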

Step 2: Update Configuration

Integrate your model's configuration into config.py, primarily specifying the inference API and the Base URL of the endpoint.
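One possible shape for such a configuration entry is sketched here. The environment variable names (`MY_MODEL_API_KEY`, `MY_MODEL_BASE_URL`), the `ModelConfig` dataclass, and the example URL are all assumptions for illustration; the real config.py may organize its settings differently.

```python
import os
from dataclasses import dataclass

@dataclass(frozen=True)
class ModelConfig:
    """Hypothetical settings bundle for one model."""
    api_key: str
    base_url: str

def load_my_model_config(env=os.environ) -> ModelConfig:
    """Read the new model's settings, failing early if the key is missing."""
    api_key = env.get("MY_MODEL_API_KEY", "")
    if not api_key:
        raise RuntimeError("MY_MODEL_API_KEY is not set")
    # ASSUMPTION: placeholder endpoint; replace with the provider's real base URL.
    base_url = env.get("MY_MODEL_BASE_URL", "https://api.example.com/v1")
    return ModelConfig(api_key=api_key, base_url=base_url.rstrip("/"))

# Passing a plain dict instead of os.environ keeps the example self-contained.
config = load_my_model_config({"MY_MODEL_API_KEY": "placeholder-key"})
print(config.base_url)
```

Failing at load time with a clear message tends to be easier to debug than an authentication error surfacing later inside a request.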

Step 3: Register your Model

Update models/__init__.py to reference the newly defined model's class.

Step 4: Integrate with Main Application

Modify app.py to incorporate your new model by defining its class within the model_dict.
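The registration pattern can be sketched as a dictionary lookup. This is an assumption about how `model_dict` is used: the article only names the dictionary, so whether it maps names to classes or to instances, and which keys it uses, should be confirmed in app.py. The two model classes below are hypothetical stand-ins.

```python
class ExistingModel:
    """Stand-in for a model that is already registered."""
    def analyze_video(self, video_path: str, prompt: str) -> str:
        return f"existing model analyzed {video_path}"

class MyVideoModel:
    """Stand-in for the newly added model."""
    def analyze_video(self, video_path: str, prompt: str) -> str:
        return f"my model analyzed {video_path}"

# ASSUMPTION: model_dict maps a model name to its class.
model_dict = {
    "existing-model": ExistingModel,
    "my-video-model": MyVideoModel,  # the new entry
}

def run_model(model_name: str, video_path: str, prompt: str) -> str:
    """Look up the requested model and run it, with a clear error for unknown names."""
    try:
        model_cls = model_dict[model_name]
    except KeyError:
        available = ", ".join(sorted(model_dict))
        raise ValueError(f"unknown model {model_name!r}; available: {available}") from None
    return model_cls().analyze_video(video_path, prompt)

print(run_model("my-video-model", "clip.mp4", "Describe the scene."))
```

Because dispatch goes through one dictionary, adding a model is a one-line change wherever the dictionary is defined.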

Step 5: Add API Route

Update routes/api_routes.py to include your model in the routes by defining it within the model_dict.

The backend server is now fully configured. Only minimal frontend adjustments are required to make the newly defined model visible.

Frontend Setup

Step 1: Update API Service Type Definitions

Add your model to the ModelStatus interface in lib/api.ts.

Step 2: Update Model Availability Functions

The components/model-evaluation-platform.tsx component manages model selection. Update its model availability functions to include a function for the newly integrated model.

The newly added model will subsequently appear in the arena, ready for exploration and experimentation.

Conclusion

At TwelveLabs, our Pegasus-1.2 model stands at the forefront of specialized video understanding, uniquely engineered to overcome the inherent limitations of general-purpose Multimodal LLMs. Pegasus meticulously captures the profound nuances of video, discerning contextual information, temporal relationships, and subtle visual cues that other AI systems often miss.

The seamless integration of Pegasus with advanced tool-use and autonomous workflows marks a new era in intelligent video processing. This synergy empowers our model to move beyond mere analysis, actively interpreting, reasoning about, and ultimately acting upon video content. This groundbreaking capability unlocks a multitude of transformative applications, revolutionizing how our users interact with and leverage video data.

For example, in content moderation, Pegasus autonomously identifies and flags inappropriate or harmful content, significantly enhancing the efficiency and accuracy of platform safety measures for our clients. In entertainment, personalized video recommendations powered by Pegasus become far more precise and engaging, catering to individual tastes by understanding the underlying themes and emotions within videos. Furthermore, intelligent scene-based editing leverages Pegasus to automate complex video production tasks, allowing for the seamless creation of highlight reels, dynamic transitions, and contextually aware cuts, thereby streamlining the creative process and opening new possibilities for video storytelling. The ability of Pegasus to not just understand but also interact with and manipulate video content represents a significant leap forward in video understanding, with far-reaching implications across various industries and applications that we are proud to enable.

Additional Resources

Learn more about the analyze video engine, Pegasus-1.2. To explore TwelveLabs further and enhance your understanding of video content analysis, check out these resources:

Join the Conversation: Share your feedback on this integration in the TwelveLabs Discord.

Explore Sample Apps: Dive deeper into TwelveLabs capabilities with our comprehensive tutorials.

We encourage you to use these resources to expand your knowledge and create innovative applications using TwelveLabs video understanding technology.

© 2021 - 2026 TwelveLabs, Inc. All Rights Reserved
