Tutorial

Search, Match, Measure: A Full‑Stack Creator Platform with Twelve Labs

Meeran Kim

Creator Discovery is a Next.js 15 application that showcases Twelve Labs video-understanding technology through three production-ready demos: (1) Creator – Brand Match – find the best creator videos for a given brand video (and vice-versa) using multimodal embeddings stored in Pinecone; (2) Semantic Search – unified text & image search across brand/creator indices with rich facet filtering; and (3) Brand Mention Detection – automatic extraction and visualization of brand/product appearances inside creator videos. Together these features illustrate how to combine Twelve Labs APIs with vector search and modern React tooling to build powerful media-intelligence products.

Nov 10, 2025

13 Minutes

Introduction

In today's creator economy, brands and content creators face a critical challenge: finding the right partnerships that drive authentic engagement and measurable results. Traditional methods of creator discovery rely on manual screening, basic keyword matching, and gut feelings—processes that are time-consuming, subjective, and often miss the most valuable opportunities.

This tutorial explores how we built a Creator Discovery platform that addresses these challenges with Twelve Labs' video understanding technology. The application demonstrates three use cases (hybrid embedding search, interactive brand mention visualization, and multimodal semantic search) that show how AI can turn video content analysis from a manual, error-prone process into an automated system that delivers precise insights and meaningful connections.

📌 Link to Creator Discovery demo app

App Demo

📌 Link to app demo video

The Challenge: Why Traditional Creator Discovery Falls Short

These approaches are slow, subjective, and hard to scale. Brands struggle to find creators whose content authentically aligns with their products, while creators have difficulty demonstrating their value to potential partners. Both sides rely on search methods that miss nuanced content and cannot keep up with growing content libraries.

Our Solution: AI-Powered Creator Discovery

Our Creator Discovery platform automates this analysis with Twelve Labs' video understanding technology. It demonstrates three capabilities:

Creator Brand Match - Intelligent partnership discovery using multimodal embeddings

Brand Mention Detection - Automated brand exposure analysis with visual heatmaps

Semantic Search - Natural language and visual search across video content

Each feature highlights a different aspect of Twelve Labs' video understanding and addresses a specific business need.

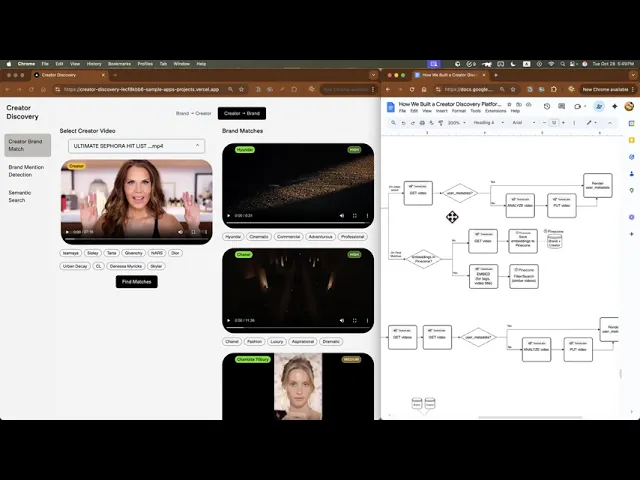

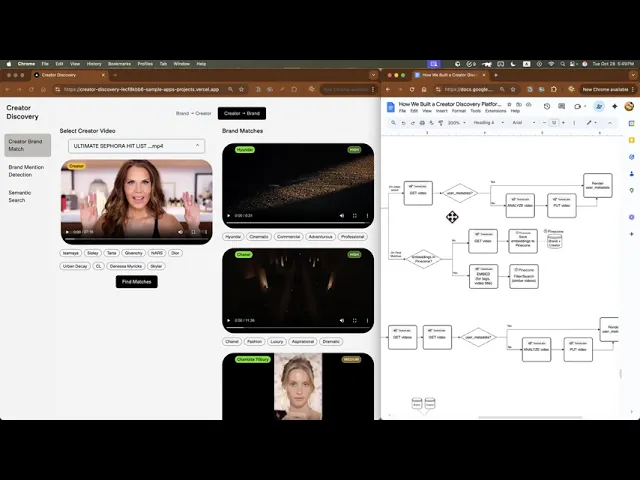

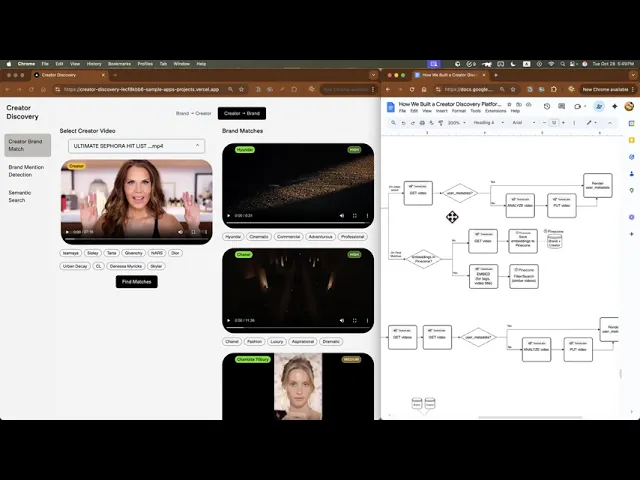

Feature 1: Creator Brand Match - Finding Perfect Partnerships

Traditional creator discovery relies on follower counts, basic demographics, and manual content review—methods that often miss the most valuable partnerships. Our Creator Brand Match feature leverages Twelve Labs' Analyze API and Embed API to create intelligent matching between brand and creator content. Here's how it works:

Content Analysis

When a user selects a video, the system first checks whether the video has already been analyzed. If not, it sends the video to Twelve Labs' Analyze API with a detailed prompt that asks it to extract:

Brand tags and product mentions with precise timing

Content tone and style (energetic, professional, casual, etc.)

Visual context (location, positioning, prominence of brand elements)

Creator identification from watermarks or introductions

This analysis happens automatically in the background, transforming raw video content into structured, searchable metadata.
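The bookkeeping around this step can be sketched as follows. The post does not show the exact Analyze request, so the call itself is left out. This is a minimal sketch, assuming the prompt asks the model to reply with JSON and that results are cached in the video's user metadata; the field names (`brandTags`, `tone`, `creator`) and the helpers `needsAnalysis` and `parseAnalysis` are hypothetical, not the app's actual code or the Twelve Labs SDK.

```typescript
// Hypothetical shape of the metadata saved on a video after analysis.
interface AnalysisMetadata {
  brandTags: string[];
  tone?: string;
  creator?: string;
}

// A video needs analysis only if no brandTags key has been stored yet.
// An empty array means "analyzed, no brands found", so it is not re-sent.
function needsAnalysis(userMetadata?: Record<string, unknown>): boolean {
  return !Array.isArray(userMetadata?.["brandTags"]);
}

// Models often wrap JSON in a Markdown code fence; strip it before parsing.
function parseAnalysis(raw: string): AnalysisMetadata {
  const text = raw.trim().replace(/^`{3}(?:json)?\s*/i, "").replace(/\s*`{3}$/, "");
  const data = JSON.parse(text) as Partial<AnalysisMetadata>;
  const rawTags: unknown[] = Array.isArray(data.brandTags) ? data.brandTags : [];
  // Normalize tags: trim, lowercase, drop empty strings and duplicates.
  const brandTags = Array.from(
    new Set(rawTags.map((t) => String(t).trim().toLowerCase()).filter((t) => t.length > 0)),
  );
  return {
    brandTags,
    tone: typeof data.tone === "string" ? data.tone : undefined,
    creator: typeof data.creator === "string" ? data.creator : undefined,
  };
}
```

Persisting the parsed result on the video means each video goes through the Analyze API at most once.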

Multimodal Embedding Generation

For similarity matching, we use Twelve Labs' Embed API to generate two types of embeddings:

Text embeddings from video titles, descriptions, and extracted brand tags

Video embeddings from the actual video content using Twelve Labs' advanced video understanding models

These embeddings capture the semantic meaning of content, not just keywords, enabling matches based on conceptual similarity rather than exact text matches.
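To make "conceptual similarity" concrete: two embeddings are commonly compared with cosine similarity, the dot product of the vectors divided by the product of their lengths. The sketch below is illustrative only. The metric a Pinecone index actually uses is chosen when the index is created, and the post does not say which one this app uses.

```typescript
// Cosine similarity: 1 means same direction, 0 orthogonal, -1 opposite.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error(`dimension mismatch: ${a.length} vs ${b.length}`);
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  // Undefined for zero vectors; treat that case as "no similarity".
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}
```

Because it ignores vector length, cosine similarity compares the direction of two embeddings (what they mean) rather than their magnitude.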

Similarity Search

We store these embeddings in Pinecone, a vector database optimized for similarity search. When users click "Find Matches," our system:

Retrieves the source video's embeddings (from Pinecone, or from Twelve Labs if they have not been stored yet)

Searches for similar content across both brand and creator video libraries

Combines text and video similarity scores with a boost for videos that appear in both search types

Returns results ranked by their combined score
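The score-combination step can be sketched like this. It is a minimal sketch under stated assumptions: the weights (`TEXT_WEIGHT`, `VIDEO_WEIGHT`) and the `BOTH_BOOST` value are hypothetical placeholders, since the post does not give the values the app uses, and `Match` is an illustrative type rather than Pinecone's response shape.

```typescript
interface Match {
  id: string;
  score: number; // similarity score from one search type
}

interface HybridMatch {
  id: string;
  score: number;
  sources: Array<"text" | "video">;
}

// Hypothetical weights and boost; the post does not publish the app's values.
const TEXT_WEIGHT = 0.4;
const VIDEO_WEIGHT = 0.6;
const BOTH_BOOST = 0.1;

function combineMatches(textMatches: Match[], videoMatches: Match[], topK = 10): HybridMatch[] {
  const merged = new Map<string, HybridMatch>();
  const add = (matches: Match[], weight: number, source: "text" | "video"): void => {
    for (const m of matches) {
      const entry: HybridMatch = merged.get(m.id) ?? { id: m.id, score: 0, sources: [] };
      if (entry.sources.includes(source)) continue; // ignore duplicate ids within one list
      entry.score += weight * m.score;
      entry.sources.push(source);
      merged.set(m.id, entry);
    }
  };
  add(textMatches, TEXT_WEIGHT, "text");
  add(videoMatches, VIDEO_WEIGHT, "video");
  // Boost videos that both the text and the video search returned.
  merged.forEach((entry) => {
    if (entry.sources.length === 2) entry.score += BOTH_BOOST;
  });
  return Array.from(merged.values())
    .sort((a, b) => b.score - a.score)
    .slice(0, topK);
}
```

A weighted sum only makes sense if the two score lists are on comparable scales; if they are not, normalizing each list first (for example, min-max scaling) is a common alternative.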

Business Value

This technology helps brands and creators connect through genuine content alignment rather than vanity metrics. Brands can discover hidden gems: creators with smaller audiences but highly relevant content that fits the brand's image and values. AI-powered analysis supports authentic partnerships by matching brand campaigns with creators whose tone, topics, and audience complement the brand's story. It also speeds up screening by automatically surfacing the most relevant creators from large content libraries. Because discovery is bidirectional, creators can also find brand content that matches their style and audience, opening the door to more meaningful, longer-term collaborations.

Beyond Creator Discovery

The underlying technology extends far beyond influencer marketing. Its core capability — understanding video and text content semantically — can be applied to content recommendation, where streaming platforms suggest videos with similar visual or thematic elements. In education, e-learning platforms can leverage it for student-course matching, pairing learners with classes that suit their preferred learning styles or interests. And in commerce, it enables product discovery, allowing e-commerce platforms to find items that match a user’s lifestyle, aesthetic, or user-generated content.

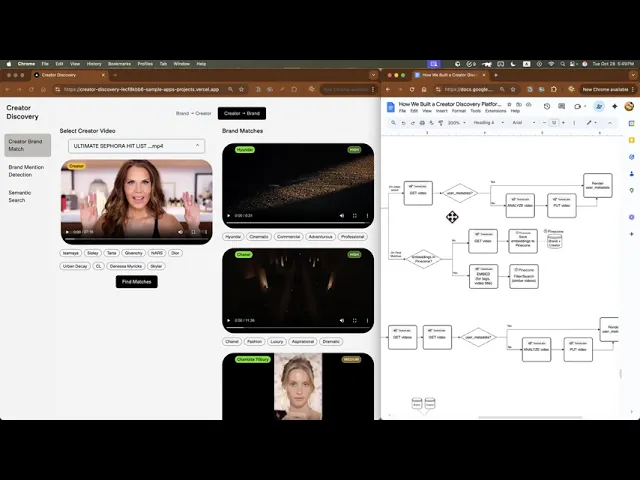

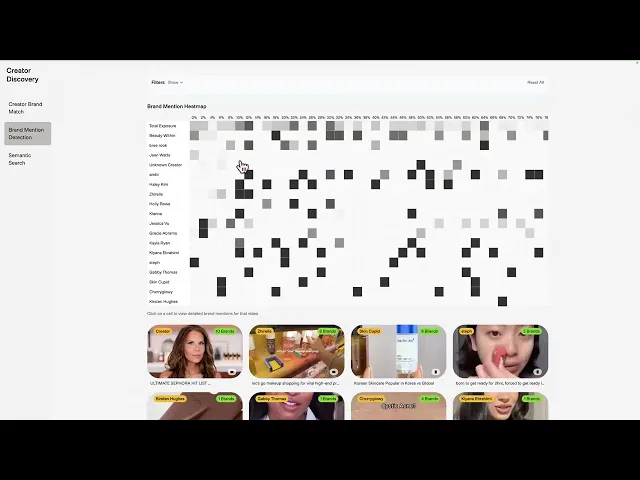

Feature 2: Brand Mention Detection - Measuring True Brand Impact

Brands invest significant resources in creator partnerships but struggle to measure actual impact. Traditional metrics like views and likes don't capture the quality, duration, or context of brand exposure. Our Brand Mention Detection feature uses Twelve Labs' Analyze API to automatically detect and analyze every brand mention, logo appearance, and product placement in creator videos.

Brand Detection

The system uses a detailed prompt that instructs Twelve Labs' Analyze API to:

Scan entire videos from start to finish (0%-100%) without stopping early

Detect visible logos and branding with precise timing information

Identify product placements and brand mentions in context

Track positioning details (logo location, size, prominence in frame)

Segment appearances into micro-segments (≤8 seconds) for accurate timing
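If the model still reports a longer continuous appearance, a post-processing pass can enforce the 8-second limit. A minimal sketch, assuming each detection arrives as a start and end time in seconds; `splitIntoMicroSegments` is a hypothetical helper, and the post does not say whether the app splits segments in code or relies on the prompt alone.

```typescript
interface Segment {
  start: number; // seconds
  end: number;   // seconds
}

// Upper bound taken from the prompt rule described above.
const MAX_SEGMENT_SECONDS = 8;

// Split one appearance into consecutive chunks of at most maxLen seconds.
function splitIntoMicroSegments(seg: Segment, maxLen = MAX_SEGMENT_SECONDS): Segment[] {
  if (maxLen <= 0) throw new Error("maxLen must be positive");
  const out: Segment[] = [];
  for (let i = 0; seg.start + i * maxLen < seg.end; i++) {
    const start = seg.start + i * maxLen;
    out.push({ start, end: Math.min(start + maxLen, seg.end) });
  }
  return out;
}
```

Shorter segments make the heatmap more precise, because each cell reflects when the brand was actually on screen rather than one long span.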

Advanced Analysis

Twelve Labs' Analyze API doesn't just detect brands—it understands context:

Location precision: "logo prominently displayed on sail in center of frame"

Timing accuracy: Start and end times for each brand appearance

Context understanding: Whether the brand appears on products, clothing, backgrounds, or signage

Quality assessment: How prominently and clearly the brand is displayed
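Each detection carries the context listed above. One way to model it in TypeScript is shown below, with a runtime guard so malformed model output is dropped rather than rendered. The field names and the 0-1 `prominence` scale are hypothetical; the post does not publish the app's schema.

```typescript
type Placement = "product" | "clothing" | "background" | "signage" | "other";

interface BrandMention {
  brand: string;
  start: number;       // seconds from video start
  end: number;         // seconds from video start
  location: string;    // free text, e.g. "logo on sail in center of frame"
  placement: Placement;
  prominence: number;  // hypothetical 0-1 score for how clearly the brand is shown
}

const PLACEMENTS: readonly string[] = ["product", "clothing", "background", "signage", "other"];

// Type guard: true only for objects that match the BrandMention shape.
function isBrandMention(x: unknown): x is BrandMention {
  if (typeof x !== "object" || x === null) return false;
  const { brand, start, end, location, placement, prominence } = x as Record<string, unknown>;
  return (
    typeof brand === "string" && brand.length > 0 &&
    typeof start === "number" && typeof end === "number" && start >= 0 && end > start &&
    typeof location === "string" &&
    typeof placement === "string" && PLACEMENTS.includes(placement) &&
    typeof prominence === "number" && prominence >= 0 && prominence <= 1
  );
}

// Keep only well-formed events from a parsed JSON array.
function validMentions(items: unknown[]): BrandMention[] {
  return items.filter(isBrandMention);
}
```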

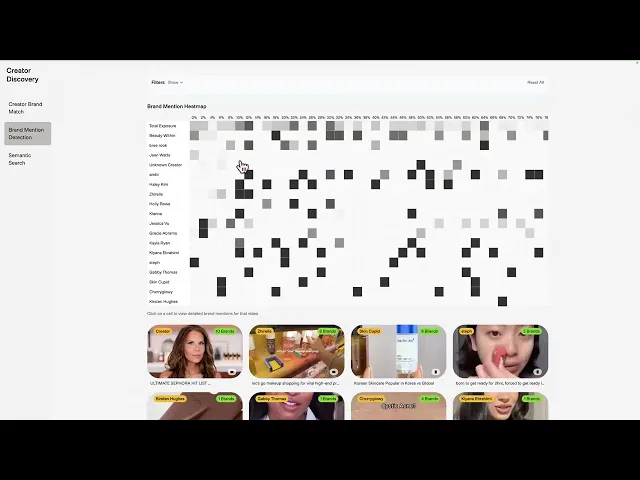

Visual Heatmap Generation

The detected brand mentions are transformed into interactive heatmaps that show:

Per-video analysis: Brand exposure patterns throughout individual videos

Library-wide insights: Aggregate brand exposure across multiple creators

Time-based visualization: When and for how long brands appear

Total exposure metrics: Aggregate measures of how long each brand is visible
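Turning detections into a heatmap is mostly interval arithmetic. The sketch below, assuming events with `start`/`end` in seconds, does two things: it computes total exposure after merging overlapping appearances so simultaneous detections are not double-counted, and it counts how many seconds of each time bin are covered (one heatmap row per video). The bin size and function names are hypothetical, not taken from the app.

```typescript
interface Interval {
  start: number; // seconds
  end: number;   // seconds
}

// Merge overlapping or touching intervals so overlaps are counted once.
function mergeIntervals(intervals: Interval[]): Interval[] {
  const sorted = intervals
    .filter((i) => i.end > i.start)
    .map((i) => ({ start: i.start, end: i.end })) // copy so inputs are not mutated
    .sort((a, b) => a.start - b.start);
  const merged: Interval[] = [];
  for (const cur of sorted) {
    const last = merged[merged.length - 1];
    if (last && cur.start <= last.end) {
      last.end = Math.max(last.end, cur.end);
    } else {
      merged.push(cur);
    }
  }
  return merged;
}

// Total seconds on screen after de-duplicating overlaps.
function totalExposure(intervals: Interval[]): number {
  return mergeIntervals(intervals).reduce((sum, i) => sum + (i.end - i.start), 0);
}

// Seconds of brand presence per bin, for a video of the given duration.
function exposureBins(intervals: Interval[], duration: number, binSeconds = 5): number[] {
  const bins = new Array<number>(Math.ceil(duration / binSeconds)).fill(0);
  for (const iv of mergeIntervals(intervals)) {
    const first = Math.max(0, Math.floor(iv.start / binSeconds));
    for (let b = first; b < bins.length && b * binSeconds < iv.end; b++) {
      const overlap = Math.min(iv.end, (b + 1) * binSeconds) - Math.max(iv.start, b * binSeconds);
      if (overlap > 0) bins[b] += overlap;
    }
  }
  return bins;
}
```

Running the same functions per brand and summing across videos gives a simple library-wide aggregate.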

Business Value

This technology enables both brands and creators to measure and optimize real brand exposure across video content. For brands, it adds transparency by quantifying not just how often a logo appears, but for how long and in what context. With this insight, brands can better estimate ROI, identify which creators deliver the most visibility, and refine their content strategy around the types of videos that generate meaningful exposure. Continuous tracking also makes it easier to monitor brand mention trends across creator partnerships and campaigns.

For creators, it provides a way to demonstrate tangible value to potential brand partners. They can show how much exposure their content truly provides, understand which creative styles perform best for brand visibility, and back up their collaborations with data-driven evidence.

Beyond Creator Partnerships

Beyond creator partnerships, this technology’s core capability—automated visual and contextual brand detection—has wide applications across industries. In sports sponsorship, it can track logo visibility during broadcasts or live events. In entertainment, it can measure product placements in movies, TV, and streaming. In education, it can identify brand mentions in learning materials, and in news and media, it enables monitoring of brand exposure across interviews and reports.

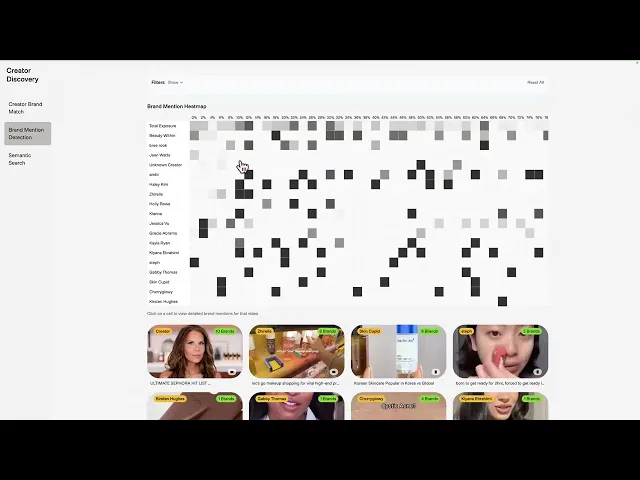

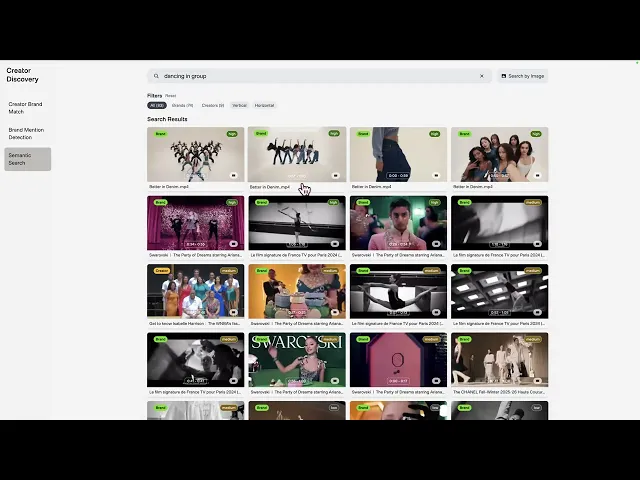

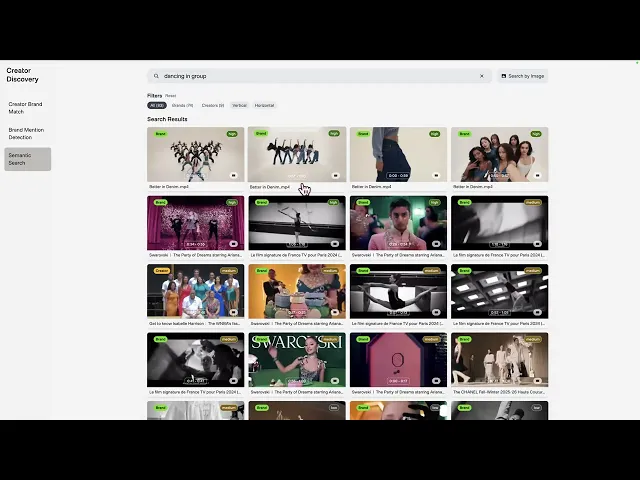

Feature 3: Semantic Search - Beyond Keywords to Understanding

Traditional search relies on exact keyword matching, missing content that's conceptually relevant but uses different terminology. Our Semantic Search feature leverages Twelve Labs' Search API to enable two powerful search modalities that understand content meaning, not just keywords.

Text Search: Natural Language Understanding

Users can search using natural language queries like:

"beauty tutorial with natural makeup look" - finds videos showing this specific style, even if not explicitly described

"skincare routine for sensitive skin" - finds relevant beauty content regardless of exact terminology

"luxury brand unboxing experience" - finds premium brand content regardless of specific brand names

Image Search: Visual Similarity Matching

Users can upload images to find visually similar content:

Upload a product photo to find videos featuring that product

Share a scene image to find similar visual content

Use lifestyle images to find content with matching aesthetics

Intelligent Search Architecture

The system searches across both brand and creator video indices simultaneously, then:

Merges results from multiple sources

Ranks results by the relevance scores returned by the Search API

Provides faceted filtering by content type, format, and other metadata

Enables scope control to search specific content libraries
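The merge, rank, filter, and scope steps can be sketched as below. It assumes each index's results have already been fetched from the Search API and mapped into a common `SearchHit` shape; that type, the facet fields, and `mergeSearchResults` are hypothetical, not the Twelve Labs SDK's response types. Sorting across indices also assumes scores from the two indices are comparable.

```typescript
type Source = "brand" | "creator";

interface SearchHit {
  videoId: string;
  start: number;                  // clip start, seconds
  end: number;                    // clip end, seconds
  score: number;                  // relevance score as returned by the search
  source: Source;                 // which index the hit came from
  facets: Record<string, string>; // hypothetical metadata, e.g. { format: "short" }
}

interface SearchOptions {
  scope?: Source[];                 // libraries to include; default is both
  filters?: Record<string, string>; // facet name -> required value
  limit?: number;
}

function mergeSearchResults(
  brandHits: SearchHit[],
  creatorHits: SearchHit[],
  { scope = ["brand", "creator"], filters = {}, limit = 20 }: SearchOptions = {},
): SearchHit[] {
  return brandHits
    .concat(creatorHits)
    .filter((h) => scope.includes(h.source))
    .filter((h) => Object.keys(filters).every((key) => h.facets[key] === filters[key]))
    .sort((a, b) => b.score - a.score)
    .slice(0, limit);
}
```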

Business Value

Semantic search changes how organizations find, understand, and reuse their video content. Because it searches by meaning rather than keywords alone, teams can locate specific moments, insights, and themes in seconds. This cuts down on manual tagging and review, making content discovery more efficient and decisions faster.

With multimodal understanding across text, visuals, and speech, users can search using natural language or images to find the exact scenes that matter. This drives creative productivity, helps marketing and media teams reuse high-value assets, and empowers enterprises to extract measurable insights from their vast video libraries.

Beyond Creator Discovery

The same technology extends well beyond creator discovery. In media, it enables quick retrieval of scenes or concepts across vast archives. In education, it helps match students with materials aligned to their learning style and subject matter. In e-commerce, it allows users to find products that visually match their lifestyle content.

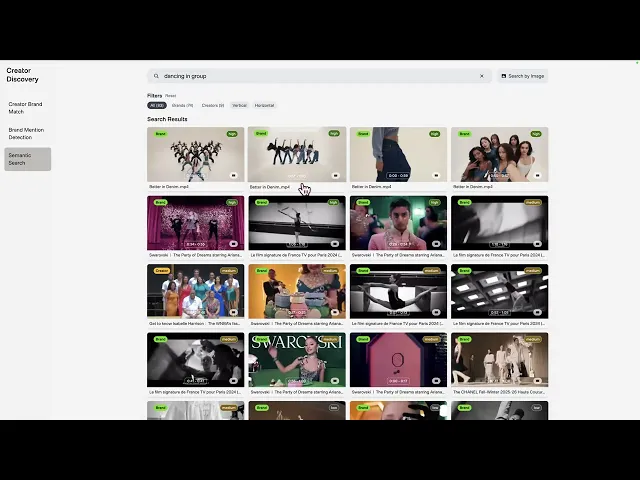

Technical Architecture: How It All Works Together

The Twelve Labs Integration

Our platform demonstrates three key Twelve Labs APIs working in harmony:

Analyze API 🔗

Purpose: Extract structured insights from video content

Use Cases: Brand detection, content analysis, metadata generation

Business Value: Transforms unstructured video into searchable, actionable data

Embed API 🔗

Purpose: Generate semantic embeddings for similarity search

Use Cases: Content matching, recommendation systems, semantic search

Business Value: Enables intelligent content discovery beyond keyword matching

Search API 🔗

Purpose: Natural language and visual search across video libraries

Use Cases: Content discovery, trend analysis, competitive intelligence

Business Value: Makes large video libraries searchable and accessible

Technical Requirements

Codebase: Get the code from GitHub and configure the environment

Twelve Labs API Access: Sign up for Twelve Labs API access

Vector Database: Pinecone for embedding storage and similarity search

Modern Web Framework: Next.js, React, or similar for the frontend

API Integration: RESTful API design for Twelve Labs integration
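Before wiring the APIs together, it helps to fail fast when credentials are missing. A small sketch, assuming the keys are supplied as environment variables; the variable names below (`TWELVELABS_API_KEY`, `PINECONE_API_KEY`, `PINECONE_INDEX`) are hypothetical, so check the repository's own environment template for the real ones.

```typescript
interface AppConfig {
  twelveLabsApiKey: string;
  pineconeApiKey: string;
  pineconeIndex: string;
}

// Hypothetical variable names; match them to the project's .env template.
const REQUIRED = ["TWELVELABS_API_KEY", "PINECONE_API_KEY", "PINECONE_INDEX"] as const;

// Validate all required variables at once so the error lists every missing key.
function loadConfig(env: Record<string, string | undefined>): AppConfig {
  const missing = REQUIRED.filter((name) => !env[name]?.trim());
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  return {
    twelveLabsApiKey: env.TWELVELABS_API_KEY as string,
    pineconeApiKey: env.PINECONE_API_KEY as string,
    pineconeIndex: env.PINECONE_INDEX as string,
  };
}
```

In a Next.js app, calling something like `loadConfig(process.env)` only from server-side code keeps the API keys out of the browser bundle.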

The End-to-End Workflow

Creator Brand Match: The Creator Brand Match feature operates in two phases. When a video is selected, the system checks whether it already has user metadata. If not, it calls the Twelve Labs Analyze API 🔗 to generate brand tags and saves them to the video as metadata. When the "Find Matches" button is clicked, the system checks whether the video's embeddings already exist in Pinecone. Missing embeddings are retrieved with the Twelve Labs Get Video API 🔗 and stored in Pinecone, and a similarity search then finds matching videos across the Brand and Creator indices.
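The "Find Matches" phase follows a check-then-fill pattern: use stored vectors when they exist, otherwise fetch them once and store them. A minimal sketch, assuming a hypothetical `VectorStore` interface (standing in for a Pinecone index) and a hypothetical `fetchEmbedding` callback (standing in for the Twelve Labs Get Video call); neither signature is the real SDK's.

```typescript
// Hypothetical minimal interface; a real Pinecone index has a richer API.
interface VectorStore {
  fetch(id: string): Promise<number[] | undefined>;
  upsert(id: string, values: number[]): Promise<void>;
}

// Return the stored vector for a video, fetching and storing it on first use.
async function ensureEmbedding(
  store: VectorStore,
  videoId: string,
  fetchEmbedding: (videoId: string) => Promise<number[]>,
): Promise<number[]> {
  const existing = await store.fetch(videoId);
  if (existing) return existing;
  const values = await fetchEmbedding(videoId);
  if (values.length === 0) throw new Error(`no embedding returned for ${videoId}`);
  await store.upsert(videoId, values);
  return values;
}

// In-memory stand-in for a vector database, useful for local testing.
class MemoryStore implements VectorStore {
  private data = new Map<string, number[]>();
  async fetch(id: string): Promise<number[] | undefined> {
    return this.data.get(id);
  }
  async upsert(id: string, values: number[]): Promise<void> {
    this.data.set(id, values);
  }
}
```

Only the first "Find Matches" click for a video pays the cost of retrieving its embedding; later clicks go straight to the similarity search.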

Brand Mention Detection: This feature focuses on visualizing brand mentions in Creator videos. The system fetches Creator videos from Twelve Labs and checks for existing user metadata containing brand mention events. When metadata is absent, it automatically triggers the Analyze API🔗 to generate brand mention data, then updates the video and renders the results as interactive heatmaps showing brand exposure patterns over time.

Semantic Search: The Semantic Search feature provides two search modalities. Text Search allows users to query videos using natural language, while Image Search enables visual similarity matching by uploading images. Both methods utilize Twelve Labs Search API🔗 to query across Brand and Creator indices simultaneously, with results merged and sorted by relevance score.

Conclusion

The Creator Discovery application shows how to combine Twelve Labs' video analysis with Pinecone's vector search to build practical video intelligence features. From hybrid embedding search to interactive brand mention visualization, the platform demonstrates practical patterns for integrating AI services, such as analyzing each video once and reusing stored metadata and embeddings instead of recomputing them. I hope this walkthrough helps you understand how these technologies work together in practice, and provides useful insights for your own video intelligence projects!

Introduction

In today's creator economy, brands and content creators face a critical challenge: finding the right partnerships that drive authentic engagement and measurable results. Traditional methods of creator discovery rely on manual screening, basic keyword matching, and gut feelings—processes that are time-consuming, subjective, and often miss the most valuable opportunities.

This tutorial explores how we built a comprehensive Creator Discovery platform that solves these challenges by leveraging Twelve Labs' advanced video understanding technology. Our application demonstrates three powerful use cases - hybrid embedding search, interactive brand mention visualization, and multi-modal semantic search - that showcase how AI can transform video content analysis from a manual, error-prone process into an intelligent, automated system that delivers precise insights and meaningful connections.

📌 Link to Creator Discovery demo app

App Demo

📌 Link to app demo video

The Challenge: Why Traditional Creator Discovery Falls Short

Traditional creator discovery relies on manual screening, basic keyword matching, and gut feelings—processes that are time-consuming, subjective, and often miss the most valuable opportunities. Brands struggle to find creators whose content authentically aligns with their products, while creators have difficulty demonstrating their value to potential partners. Both sides face inefficient search methods that miss nuanced content and don't scale with growing content libraries.

Our Solution: AI-Powered Creator Discovery

Our Creator Discovery platform transforms video content analysis from a manual, error-prone process into an intelligent, automated system using Twelve Labs' advanced video understanding technology. The platform demonstrates three powerful capabilities:

Creator Brand Match - Intelligent partnership discovery using multimodal embeddings

Brand Mention Detection - Automated brand exposure analysis with visual heatmaps

Semantic Search - Natural language and visual search across video content

Each feature showcases different aspects of Twelve Labs' video understanding capabilities and delivers measurable business value.

Feature 1: Creator Brand Match - Finding Perfect Partnerships

Traditional creator discovery relies on follower counts, basic demographics, and manual content review—methods that often miss the most valuable partnerships. Our Creator Brand Match feature leverages Twelve Labs' Analyze API and Embed API to create intelligent matching between brand and creator content. Here's how it works:

Content Analysis

When a user selects a video, our system first checks if the video has been analyzed. If not, it automatically sends the video to Twelve Labs' Analyze API with a sophisticated prompt that extracts:

Brand tags and product mentions with precise timing

Content tone and style (energetic, professional, casual, etc.)

Visual context (location, positioning, prominence of brand elements)

Creator identification from watermarks or introductions

This analysis happens automatically in the background, transforming raw video content into structured, searchable metadata.

Multimodal Embedding Generation

For similarity matching, we use Twelve Labs' Embed API to generate two types of embeddings:

Text embeddings from video titles, descriptions, and extracted brand tags

Video embeddings from the actual video content using Twelve Labs' advanced video understanding models

These embeddings capture the semantic meaning of content, not just keywords, enabling matches based on conceptual similarity rather than exact text matches.

Similarity Search

We store these embeddings in Pinecone, a vector database optimized for similarity search. When users click "Find Matches," our system:

Retrieves embeddings for the source video using Twelve Labs' models

Searches for similar content across both brand and creator video libraries

Combines text and video similarity scores with a boost for videos that appear in both search types

Returns ranked results that represent true content alignment

Business Value

This technology helps brands and creators connect more intelligently through genuine content alignment rather than vanity metrics. Brands can discover hidden gems—creators with smaller audiences but highly relevant content that perfectly fits their image and values. AI-powered analysis enables authentic partnerships by matching brand campaigns with creators whose tone, topics, and audience naturally complement the brand’s story. It also streamlines the process with efficient screening, automatically surfacing the most relevant creators from massive content libraries. Because discovery is bidirectional, creators can also find brand content that resonates with their unique style and audience, opening the door to more meaningful and long-term collaborations.

Beyond Creator Discovery

The underlying technology extends far beyond influencer marketing. Its core capability — understanding video and text content semantically — can be applied to content recommendation, where streaming platforms suggest videos with similar visual or thematic elements. In education, e-learning platforms can leverage it for student-course matching, pairing learners with classes that suit their preferred learning styles or interests. And in commerce, it enables product discovery, allowing e-commerce platforms to find items that match a user’s lifestyle, aesthetic, or user-generated content.

Feature 2: Brand Mention Detection - Measuring True Brand Impact

Brands invest significant resources in creator partnerships but struggle to measure actual impact. Traditional metrics like views and likes don't capture the quality, duration, or context of brand exposure. Our Brand Mention Detection feature uses Twelve Labs' Analyze API to automatically detect and analyze every brand mention, logo appearance, and product placement in creator videos.

Brand Detection

The system uses a sophisticated prompt that instructs Twelve Labs' Analyze AI to:

Scan entire videos from start to finish (0%-100%) without stopping early

Detect visible logos and branding with precise timing information

Identify product placements and brand mentions in context

Track positioning details (logo location, size, prominence in frame)

Segment appearances into micro-segments (≤8 seconds) for accurate timing

Advanced Analysis

Twelve Labs' Analyze API doesn't just detect brands—it understands context:

Location precision: "logo prominently displayed on sail in center of frame"

Timing accuracy: Exact start/end times for each brand appearance

Context understanding: Whether the brand appears on products, clothing, backgrounds, or signage

Quality assessment: How prominently and clearly the brand is displayed

Visual Heatmap Generation

The detected brand mentions are transformed into interactive heatmaps that show:

Per-video analysis: Brand exposure patterns throughout individual videos

Library-wide insights: Aggregate brand exposure across multiple creators

Time-based visualization: When and for how long brands appear

Total exposure metrics: Comprehensive brand visibility measurements

Business Value

This technology enables both brands and creators to measure and optimize real brand exposure across video content. For brands, it provides a new level of transparency—quantifying not just how often their logo appears, but how long and in what context. With this insight, brands can accurately measure ROI, identify which creators deliver the highest visibility, and refine their content strategy based on the types of videos that generate the most meaningful exposure. Continuous performance tracking also makes it easy to monitor brand mention trends across creator partnerships and campaigns.

For creators, it provides a way to demonstrate tangible value to potential brand partners. They can show how much exposure their content truly provides, understand which creative styles perform best for brand visibility, and back up their collaborations with data-driven evidence.

Beyond Creator Partnerships

Beyond creator partnerships, this technology’s core capability—automated visual and contextual brand detection—has wide applications across industries. In sports sponsorship, it can track logo visibility during broadcasts or live events. In entertainment, it can measure product placements in movies, TV, and streaming. In education, it can identify brand mentions in learning materials, and in news and media, it enables monitoring of brand exposure across interviews and reports.

Feature 3: Semantic Search - Beyond Keywords to Understanding

Traditional search relies on exact keyword matching, missing content that's conceptually relevant but uses different terminology. Our Semantic Search feature leverages Twelve Labs' Search API to enable two powerful search modalities that understand content meaning, not just keywords.

Text Search: Natural Language Understanding

Users can search using natural language queries like:

"beauty tutorial with natural makeup look" - finds videos showing this specific style, even if not explicitly described

"skincare routine for sensitive skin" - finds relevant beauty content regardless of exact terminology

"luxury brand unboxing experience" - finds premium brand content regardless of specific brand names

Image Search: Visual Similarity Matching

Users can upload images to find visually similar content:

Upload a product photo to find videos featuring that product

Share a scene image to find similar visual content

Use lifestyle images to find content with matching aesthetics

Intelligent Search Architecture

The system searches across both brand and creator video indices simultaneously, then:

Merges results from multiple sources

Ranks by relevance using Twelve Labs' advanced scoring

Provides faceted filtering by content type, format, and other metadata

Enables scope control to search specific content libraries

Business Value

Semantic search transforms how organizations find, understand, and leverage their video content. By searching through meaning—not just keywords—it allows teams to uncover precise moments, insights, and themes in seconds. This eliminates hours of manual tagging and review, dramatically improving content discovery efficiency and enabling faster decision-making.

With multimodal understanding across text, visuals, and speech, users can search using natural language or images to find the exact scenes that matter. This drives creative productivity, helps marketing and media teams reuse high-value assets, and empowers enterprises to extract measurable insights from their vast video libraries.

Beyond Creator Discovery

The same technology extends well beyond creator discovery. In media, it enables quick retrieval of scenes or concepts across vast archives. In education, it helps match students with materials aligned to their learning style and subject matter. In e-commerce, it allows users to find products that visually match their lifestyle content.

Technical Architecture: How It All Works Together

The Twelve Labs Integration

Our platform demonstrates three key Twelve Labs APIs working in harmony:

Analyze API 🔗

Purpose: Extract structured insights from video content

Use Cases: Brand detection, content analysis, metadata generation

Business Value: Transforms unstructured video into searchable, actionable data

Embed API 🔗

Purpose: Generate semantic embeddings for similarity search

Use Cases: Content matching, recommendation systems, semantic search

Business Value: Enables intelligent content discovery beyond keyword matching

Search API 🔗

Purpose: Natural language and visual search across video libraries

Use Cases: Content discovery, trend analysis, competitive intelligence

Business Value: Makes large video libraries searchable and accessible

Technical Requirements

Find the codebase from GitHub and configure environment

Twelve Labs API Access: Sign up for Twelve Labs API access

Vector Database: Pinecone for embedding storage and similarity search

Modern Web Framework: Next.js, React, or similar for the frontend

API Integration: RESTful API design for Twelve Labs integration

The End-to-End Workflow

Creator Brand Match: The Creator Brand Match feature operates in two distinct phases. On video selection, the system checks for existing user metadata. If metadata is missing, it triggers Twelve Labs' Analyze API🔗 to generate brand tags and updates the video with new metadata. On "Find Matches" button click, the system verifies if embeddings exist in Pinecone. Missing embeddings are retrieved from Twelve Labs Get Video API🔗 and stored in Pinecone, followed by similarity search to find matching videos across Brand and Creator indices.

Brand Mention Detection: This feature focuses on visualizing brand mentions in Creator videos. The system fetches Creator videos from Twelve Labs and checks for existing user metadata containing brand mention events. When metadata is absent, it automatically triggers the Analyze API🔗 to generate brand mention data, then updates the video and renders the results as interactive heatmaps showing brand exposure patterns over time.

Semantic Search: The Semantic Search feature provides two search modalities. Text Search allows users to query videos using natural language, while Image Search enables visual similarity matching by uploading images. Both methods utilize Twelve Labs Search API🔗 to query across Brand and Creator indices simultaneously, with results merged and sorted by relevance score.

Conclusion

The Creator Discovery application shows how to combine Twelve Labs' video analysis with Pinecone's vector search to build practical video intelligence features. From hybrid embedding search to interactive brand mention visualization, the platform demonstrates effective patterns for integrating AI services while maintaining good performance. I hope this walkthrough helps you understand how these technologies work together in practice, and provides useful insights for your own video intelligence projects!

Introduction

In today's creator economy, brands and content creators face a critical challenge: finding the right partnerships that drive authentic engagement and measurable results. Traditional methods of creator discovery rely on manual screening, basic keyword matching, and gut feelings—processes that are time-consuming, subjective, and often miss the most valuable opportunities.

This tutorial explores how we built a comprehensive Creator Discovery platform that solves these challenges by leveraging Twelve Labs' advanced video understanding technology. Our application demonstrates three powerful use cases - hybrid embedding search, interactive brand mention visualization, and multi-modal semantic search - that showcase how AI can transform video content analysis from a manual, error-prone process into an intelligent, automated system that delivers precise insights and meaningful connections.

📌 Link to Creator Discovery demo app

App Demo

📌 Link to app demo video

The Challenge: Why Traditional Creator Discovery Falls Short

Traditional creator discovery relies on manual screening, basic keyword matching, and gut feelings—processes that are time-consuming, subjective, and often miss the most valuable opportunities. Brands struggle to find creators whose content authentically aligns with their products, while creators have difficulty demonstrating their value to potential partners. Both sides face inefficient search methods that miss nuanced content and don't scale with growing content libraries.

Our Solution: AI-Powered Creator Discovery

Our Creator Discovery platform transforms video content analysis from a manual, error-prone process into an intelligent, automated system using Twelve Labs' advanced video understanding technology. The platform demonstrates three powerful capabilities:

Creator Brand Match - Intelligent partnership discovery using multimodal embeddings

Brand Mention Detection - Automated brand exposure analysis with visual heatmaps

Semantic Search - Natural language and visual search across video content

Each feature showcases different aspects of Twelve Labs' video understanding capabilities and delivers measurable business value.

Feature 1: Creator Brand Match - Finding Perfect Partnerships

Follower counts, basic demographics, and manual content review often miss the most valuable partnerships. Our Creator Brand Match feature uses Twelve Labs' Analyze API and Embed API to match brand and creator content. Here's how it works:

Content Analysis

When a user selects a video, the system first checks whether it has already been analyzed. If not, it sends the video to Twelve Labs' Analyze API with a detailed prompt that asks the model to extract:

Brand tags and product mentions with precise timing

Content tone and style (energetic, professional, casual, etc.)

Visual context (location, positioning, prominence of brand elements)

Creator identification from watermarks or introductions

This analysis happens automatically in the background, transforming raw video content into structured, searchable metadata.
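The Analyze API returns the model's answer as text, so the app needs a step that turns that answer into structured metadata. The TypeScript sketch below assumes the prompt asks the model to reply with a JSON object; the field names `brandTags`, `tone`, and `creator` and the `parseAnalysis` helper are hypothetical, not taken from the app's source.

```typescript
// Hypothetical shape of the metadata the analysis prompt asks the model to return.
interface VideoAnalysis {
  brandTags: string[];
  tone: string;
  creator: string | null;
}

// Pulls the outermost {...} object out of the model's text answer (which may be
// surrounded by prose) and keeps only well-typed fields.
function parseAnalysis(raw: string): VideoAnalysis | null {
  const start = raw.indexOf("{");
  const end = raw.lastIndexOf("}");
  if (start === -1 || end <= start) return null;

  let data: unknown;
  try {
    data = JSON.parse(raw.slice(start, end + 1));
  } catch {
    return null; // not valid JSON: the caller can retry the analysis
  }
  if (typeof data !== "object" || data === null) return null;
  const obj = data as Record<string, unknown>;

  const rawTags = obj.brandTags;
  const tone = obj.tone;
  const creator = obj.creator;
  const tags: string[] = Array.isArray(rawTags)
    ? rawTags.filter((t): t is string => typeof t === "string")
    : [];
  return {
    // Normalize so "Nike " and "nike" are stored as one tag.
    brandTags: Array.from(new Set(tags.map((t) => t.trim().toLowerCase()))),
    tone: typeof tone === "string" ? tone : "unknown",
    creator: typeof creator === "string" ? creator : null,
  };
}

const parsed = parseAnalysis('Result: {"brandTags": ["Nike ", "nike", "Adidas"], "tone": "energetic"}');
console.log(parsed && parsed.brandTags); // [ 'nike', 'adidas' ]
```

Returning `null` instead of throwing lets the caller decide whether to re-run the analysis or show the video without tags.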

Multimodal Embedding Generation

For similarity matching, we use Twelve Labs' Embed API to generate two types of embeddings:

Text embeddings from video titles, descriptions, and extracted brand tags

Video embeddings from the actual video content using Twelve Labs' advanced video understanding models

These embeddings capture the semantic meaning of content, not just keywords, enabling matches based on conceptual similarity rather than exact text matches.
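Conceptual similarity between two embeddings is commonly measured with cosine similarity, which compares the direction of the vectors rather than their length. The sketch below assumes cosine is the metric (Pinecone indexes can also be configured for dot product or Euclidean distance), and the three-dimensional vectors are toy values; real Twelve Labs embeddings have far more dimensions.

```typescript
// Cosine similarity: 1 means same direction, 0 means orthogonal (unrelated).
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error(`dimension mismatch: ${a.length} vs ${b.length}`);
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // undefined for zero vectors; treat as no match
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Toy embeddings: one brand video and two creator videos.
const brand = [0.9, 0.1, 0.0];
const creatorA = [0.8, 0.2, 0.1];
const creatorB = [0.0, 0.1, 0.9];
console.log(cosineSimilarity(brand, creatorA) > cosineSimilarity(brand, creatorB)); // true
```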

Similarity Search

We store these embeddings in Pinecone, a vector database optimized for similarity search. When users click "Find Matches," our system:

Retrieves the source video's embeddings, fetching them from Twelve Labs and storing them in Pinecone if they are not there yet

Searches for similar content across both brand and creator video libraries

Combines text and video similarity scores with a boost for videos that appear in both search types

Returns results ranked by how closely their content aligns with the source video
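The score-combination step can be sketched as follows. This is a minimal illustration, assuming each video keeps its best score across the two searches and receives a fixed additive boost when both searches return it; the `combineHits` helper, the max-plus-boost rule, and the boost values are assumptions rather than the app's actual formula.

```typescript
// One match returned by a similarity query (simplified).
interface Hit {
  id: string;
  score: number;
}

interface CombinedHit extends Hit {
  inBoth: boolean; // returned by both the text and the video search
}

// Merges text-embedding and video-embedding results: each video keeps its best
// score, and videos found by both searches get an additive boost.
function combineHits(textHits: Hit[], videoHits: Hit[], boost = 0.1): CombinedHit[] {
  const merged = new Map<string, { score: number; inText: boolean; inVideo: boolean }>();
  const add = (hits: Hit[], source: "text" | "video") => {
    for (const h of hits) {
      const entry = merged.get(h.id) ?? { score: -Infinity, inText: false, inVideo: false };
      entry.score = Math.max(entry.score, h.score);
      if (source === "text") entry.inText = true;
      else entry.inVideo = true;
      merged.set(h.id, entry);
    }
  };
  add(textHits, "text");
  add(videoHits, "video");

  const results: CombinedHit[] = [];
  merged.forEach((e, id) => {
    const inBoth = e.inText && e.inVideo;
    results.push({ id, score: inBoth ? e.score + boost : e.score, inBoth });
  });
  return results.sort((x, y) => y.score - x.score); // highest score first
}

const ranked = combineHits(
  [{ id: "a", score: 0.75 }, { id: "b", score: 0.5 }],
  [{ id: "b", score: 0.625 }, { id: "c", score: 0.5 }],
  0.25,
);
console.log(ranked.map((r) => `${r.id}:${r.score}`).join(" ")); // b:0.875 a:0.75 c:0.5
```

Taking the maximum rather than the average means a strong match in one modality is not dragged down by a missing score in the other, while the boost still rewards agreement between text and video.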

Business Value

This technology helps brands and creators connect more intelligently through genuine content alignment rather than vanity metrics. Brands can discover hidden gems—creators with smaller audiences but highly relevant content that perfectly fits their image and values. AI-powered analysis enables authentic partnerships by matching brand campaigns with creators whose tone, topics, and audience naturally complement the brand’s story. It also streamlines the process with efficient screening, automatically surfacing the most relevant creators from massive content libraries. Because discovery is bidirectional, creators can also find brand content that resonates with their unique style and audience, opening the door to more meaningful and long-term collaborations.

Beyond Creator Discovery

The underlying technology extends far beyond influencer marketing. Its core capability — understanding video and text content semantically — can be applied to content recommendation, where streaming platforms suggest videos with similar visual or thematic elements. In education, e-learning platforms can leverage it for student-course matching, pairing learners with classes that suit their preferred learning styles or interests. And in commerce, it enables product discovery, allowing e-commerce platforms to find items that match a user’s lifestyle, aesthetic, or user-generated content.

Feature 2: Brand Mention Detection - Measuring True Brand Impact

Brands invest significant resources in creator partnerships but struggle to measure actual impact. Traditional metrics like views and likes don't capture the quality, duration, or context of brand exposure. Our Brand Mention Detection feature uses Twelve Labs' Analyze API to automatically detect and analyze every brand mention, logo appearance, and product placement in creator videos.

Brand Detection

The system uses a detailed prompt that instructs Twelve Labs' Analyze API to:

Scan entire videos from start to finish (0%-100%) without stopping early

Detect visible logos and branding with precise timing information

Identify product placements and brand mentions in context

Track positioning details (logo location, size, prominence in frame)

Segment appearances into micro-segments (≤8 seconds) for accurate timing
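The prompt asks the model itself to report micro-segments of at most 8 seconds. If you also want to enforce that limit on the application side, for example when the model returns a longer span, a post-processing step could split it as sketched below. The `toMicroSegments` helper is hypothetical; the source only states that segmentation is requested in the prompt.

```typescript
interface Segment {
  start: number; // seconds
  end: number; // seconds
}

// Splits one detected appearance into consecutive micro-segments no longer
// than `maxLen` seconds (8 s mirrors the limit stated in the prompt).
function toMicroSegments(start: number, end: number, maxLen = 8): Segment[] {
  if (!(end > start) || !(maxLen > 0)) return []; // empty span or invalid limit
  const segments: Segment[] = [];
  for (let t = start; t < end; t += maxLen) {
    segments.push({ start: t, end: Math.min(t + maxLen, end) });
  }
  return segments;
}

console.log(toMicroSegments(3, 20).map((s) => `${s.start}-${s.end}`).join(", ")); // 3-11, 11-19, 19-20
```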

Advanced Analysis

Twelve Labs' Analyze API doesn't just detect brands—it understands context:

Location precision: "logo prominently displayed on sail in center of frame"

Timing accuracy: Exact start/end times for each brand appearance

Context understanding: Whether the brand appears on products, clothing, backgrounds, or signage

Quality assessment: How prominently and clearly the brand is displayed
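One way to carry these details through the app is a typed event per detected appearance. The `BrandMention` fields below mirror the kinds of information listed above, but the field names, the `context` categories, and the 0-to-1 `prominence` scale are assumptions for illustration, and "Acme" is made-up sample data. Because model output can contain impossible timings, the hypothetical `sanitizeMentions` helper clamps events to the video length before they reach the heatmap.

```typescript
// Hypothetical shape of one brand-mention event extracted by the Analyze step.
interface BrandMention {
  brand: string;
  start: number; // seconds from video start
  end: number; // seconds from video start
  location: string; // e.g. "logo prominently displayed on sail in center of frame"
  context: "product" | "clothing" | "background" | "signage" | "other";
  prominence: number; // 0 (barely visible) to 1 (dominant in frame)
}

// Clamps timings and prominence to valid ranges, drops events that end up
// empty, and sorts the rest chronologically.
function sanitizeMentions(mentions: BrandMention[], duration: number): BrandMention[] {
  return mentions
    .map((m) => ({
      ...m,
      start: Math.max(0, Math.min(m.start, duration)),
      end: Math.max(0, Math.min(m.end, duration)),
      prominence: Math.max(0, Math.min(m.prominence, 1)),
    }))
    .filter((m) => m.end > m.start)
    .sort((a, b) => a.start - b.start);
}

const cleaned = sanitizeMentions(
  [
    { brand: "Acme", start: 50, end: 70, location: "logo on sail", context: "signage", prominence: 1.4 },
    { brand: "Acme", start: 12, end: 10, location: "cap", context: "clothing", prominence: 0.5 },
    { brand: "Acme", start: 2, end: 6, location: "mug on desk", context: "product", prominence: 0.8 },
  ],
  60, // video duration in seconds
);
console.log(cleaned.map((m) => `${m.start}-${m.end}`).join(", ")); // 2-6, 50-60
```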

Visual Heatmap Generation

The detected brand mentions are transformed into interactive heatmaps that show:

Per-video analysis: Brand exposure patterns throughout individual videos

Library-wide insights: Aggregate brand exposure across multiple creators

Time-based visualization: When and for how long brands appear

Total exposure metrics: Comprehensive brand visibility measurements
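The two core metrics can be computed from a list of appearance intervals. In this sketch, the hypothetical `totalExposure` helper merges overlapping intervals so simultaneous appearances are not double-counted, and `exposureHeatmap` sums overlap seconds per fixed-width time bin so that overlapping mentions make a bin "hotter". The 10-second bin width is an arbitrary choice, not a value from the app.

```typescript
interface Interval {
  start: number; // seconds
  end: number; // seconds
}

// Total exposure in seconds, with overlapping appearances merged first.
function totalExposure(intervals: Interval[]): number {
  const sorted = intervals.slice().sort((a, b) => a.start - b.start);
  let total = 0;
  let curStart = -Infinity; // no merged interval open yet
  let curEnd = -Infinity;
  for (const iv of sorted) {
    if (iv.start > curEnd) {
      // Gap: close the current merged interval and open a new one.
      if (curEnd > curStart) total += curEnd - curStart;
      curStart = iv.start;
      curEnd = iv.end;
    } else {
      curEnd = Math.max(curEnd, iv.end); // overlap: extend the current interval
    }
  }
  if (curEnd > curStart) total += curEnd - curStart;
  return total;
}

// Per-video heatmap: seconds of brand exposure inside each time bin.
function exposureHeatmap(intervals: Interval[], duration: number, binSize = 10): number[] {
  const bins = new Array<number>(Math.ceil(duration / binSize)).fill(0);
  for (const iv of intervals) {
    for (let b = 0; b < bins.length; b++) {
      const binStart = b * binSize;
      const binEnd = Math.min(binStart + binSize, duration);
      const overlap = Math.min(iv.end, binEnd) - Math.max(iv.start, binStart);
      if (overlap > 0) bins[b] += overlap;
    }
  }
  return bins;
}

const demo: Interval[] = [{ start: 2, end: 8 }, { start: 5, end: 12 }, { start: 25, end: 27 }];
console.log(totalExposure(demo)); // 12
console.log(exposureHeatmap(demo, 30)); // [ 11, 2, 2 ]
```

Library-wide views can reuse the same functions by concatenating the intervals of several videos that share a time axis, or by summing per-video totals.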

Business Value

This technology enables both brands and creators to measure and optimize real brand exposure across video content. For brands, it provides a new level of transparency—quantifying not just how often their logo appears, but how long and in what context. With this insight, brands can accurately measure ROI, identify which creators deliver the highest visibility, and refine their content strategy based on the types of videos that generate the most meaningful exposure. Continuous performance tracking also makes it easy to monitor brand mention trends across creator partnerships and campaigns.

For creators, it provides a way to demonstrate tangible value to potential brand partners. They can show how much exposure their content truly provides, understand which creative styles perform best for brand visibility, and back up their collaborations with data-driven evidence.

Beyond Creator Partnerships

This technology’s core capability, automated visual and contextual brand detection, has wide applications across industries. In sports sponsorship, it can track logo visibility during broadcasts or live events. In entertainment, it can measure product placements in movies, TV, and streaming. In education, it can identify brand mentions in learning materials, and in news and media, it enables monitoring of brand exposure across interviews and reports.

Feature 3: Semantic Search - Beyond Keywords to Understanding

Traditional search relies on exact keyword matching, missing content that's conceptually relevant but uses different terminology. Our Semantic Search feature leverages Twelve Labs' Search API to enable two powerful search modalities that understand content meaning, not just keywords.

Text Search: Natural Language Understanding

Users can search using natural language queries like:

"beauty tutorial with natural makeup look" - finds videos showing this specific style, even if not explicitly described

"skincare routine for sensitive skin" - finds relevant beauty content regardless of exact terminology

"luxury brand unboxing experience" - finds premium brand content regardless of specific brand names

Image Search: Visual Similarity Matching

Users can upload images to find visually similar content:

Upload a product photo to find videos featuring that product

Share a scene image to find similar visual content

Use lifestyle images to find content with matching aesthetics

Intelligent Search Architecture

The system searches across both brand and creator video indices simultaneously, then:

Merges results from multiple sources

Ranks by relevance using Twelve Labs' advanced scoring

Provides faceted filtering by content type, format, and other metadata

Enables scope control to search specific content libraries
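The merge, scope, and facet steps can be sketched as pure functions over the per-index result lists. The `SearchHit` fields (`indexType`, `format`) and the `mergeAndFilter` and `countByFormat` helpers are hypothetical stand-ins for whatever metadata the app actually filters on, and the sketch assumes scores are comparable across indices.

```typescript
type Scope = "all" | "brand" | "creator";

// Simplified search hit; `indexType` and `format` are hypothetical facet fields.
interface SearchHit {
  videoId: string;
  score: number;
  indexType: "brand" | "creator";
  format: "short" | "long";
}

interface SearchFilters {
  scope: Scope;
  formats?: SearchHit["format"][]; // undefined or empty means "any format"
}

// Merges per-index result lists, applies scope and facet filters, and ranks by score.
function mergeAndFilter(lists: SearchHit[][], filters: SearchFilters): SearchHit[] {
  const all: SearchHit[] = [];
  for (const list of lists) all.push(...list);
  const formats = filters.formats ?? [];
  return all
    .filter((h) => filters.scope === "all" || h.indexType === filters.scope)
    .filter((h) => formats.length === 0 || formats.indexOf(h.format) !== -1)
    .sort((a, b) => b.score - a.score);
}

// Facet counts for the filter UI, e.g. how many hits are short-form vs long-form.
function countByFormat(hits: SearchHit[]): Record<SearchHit["format"], number> {
  const counts = { short: 0, long: 0 };
  for (const h of hits) counts[h.format] += 1;
  return counts;
}

const brandResults: SearchHit[] = [{ videoId: "b1", score: 0.6, indexType: "brand", format: "long" }];
const creatorResults: SearchHit[] = [
  { videoId: "c1", score: 0.9, indexType: "creator", format: "short" },
  { videoId: "c2", score: 0.4, indexType: "creator", format: "long" },
];
const everything = mergeAndFilter([brandResults, creatorResults], { scope: "all" });
console.log(everything.map((h) => h.videoId).join(",")); // c1,b1,c2
console.log(countByFormat(everything)); // { short: 1, long: 2 }
```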

Business Value

Semantic search transforms how organizations find, understand, and leverage their video content. By searching through meaning—not just keywords—it allows teams to uncover precise moments, insights, and themes in seconds. This eliminates hours of manual tagging and review, dramatically improving content discovery efficiency and enabling faster decision-making.

With multimodal understanding across text, visuals, and speech, users can search using natural language or images to find the exact scenes that matter. This drives creative productivity, helps marketing and media teams reuse high-value assets, and empowers enterprises to extract measurable insights from their vast video libraries.

Beyond Creator Discovery

The same technology extends well beyond creator discovery. In media, it enables quick retrieval of scenes or concepts across vast archives. In education, it helps match students with materials aligned to their learning style and subject matter. In e-commerce, it allows users to find products that visually match their lifestyle content.

Technical Architecture: How It All Works Together

The Twelve Labs Integration

Our platform demonstrates three key Twelve Labs APIs working in harmony:

Analyze API

Purpose: Extract structured insights from video content

Use Cases: Brand detection, content analysis, metadata generation

Business Value: Transforms unstructured video into searchable, actionable data

Embed API

Purpose: Generate semantic embeddings for similarity search

Use Cases: Content matching, recommendation systems, semantic search

Business Value: Enables intelligent content discovery beyond keyword matching

Search API

Purpose: Natural language and visual search across video libraries

Use Cases: Content discovery, trend analysis, competitive intelligence

Business Value: Makes large video libraries searchable and accessible

Technical Requirements

Codebase: Get the code from GitHub and configure the environment

Twelve Labs API Access: Sign up for Twelve Labs API access

Vector Database: Pinecone for embedding storage and similarity search

Modern Web Framework: Next.js, React, or similar for the frontend

API Integration: RESTful API design for Twelve Labs integration

The End-to-End Workflow

Creator Brand Match: The Creator Brand Match feature operates in two phases. When a video is selected, the system checks for existing user metadata. If the metadata is missing, it calls the Twelve Labs Analyze API to generate brand tags and updates the video with the new metadata. When the user clicks "Find Matches", the system checks whether the video's embeddings exist in Pinecone. Missing embeddings are retrieved through the Twelve Labs Get Video API and stored in Pinecone, and a similarity search then finds matching videos across the Brand and Creator indices.
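The second phase (check Pinecone, fetch only on a miss, then store) is a cache-aside pattern. The sketch below expresses it against two hypothetical interfaces, `VectorStore` and `FetchEmbedding`, rather than the real Pinecone or Twelve Labs SDK calls, so it can be exercised with an in-memory store.

```typescript
// Hypothetical stand-in for the Pinecone index; not the Pinecone SDK interface.
interface VectorStore {
  has(id: string): Promise<boolean>;
  upsert(id: string, values: number[]): Promise<void>;
}

// Hypothetical wrapper that retrieves a video's embedding from Twelve Labs.
type FetchEmbedding = (videoId: string) => Promise<number[]>;

// Cache-aside: use the stored embedding if present, otherwise fetch and store it.
// Resolves to true when a new embedding had to be fetched.
async function ensureEmbedding(
  videoId: string,
  store: VectorStore,
  fetchEmbedding: FetchEmbedding,
): Promise<boolean> {
  if (await store.has(videoId)) return false; // already indexed: no API call
  const values = await fetchEmbedding(videoId);
  if (values.length === 0) throw new Error(`no embedding returned for ${videoId}`);
  await store.upsert(videoId, values);
  return true;
}

// In-memory store, handy for local testing.
class MemoryStore implements VectorStore {
  private readonly vectors = new Map<string, number[]>();
  async has(id: string): Promise<boolean> {
    return this.vectors.has(id);
  }
  async upsert(id: string, values: number[]): Promise<void> {
    this.vectors.set(id, values);
  }
}

const store = new MemoryStore();
const fakeFetch: FetchEmbedding = async () => [0.1, 0.2, 0.3];
ensureEmbedding("video-1", store, fakeFetch)
  .then(() => ensureEmbedding("video-1", store, fakeFetch))
  .then((fetchedAgain) => console.log(fetchedAgain)); // false: second call is served from the store
```

Injecting the store and the fetch function keeps the matching logic testable without network access; in the app, these would wrap the Pinecone client and the Twelve Labs request.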

Brand Mention Detection: This feature focuses on visualizing brand mentions in Creator videos. The system fetches Creator videos from Twelve Labs and checks for existing user metadata containing brand mention events. When metadata is absent, it automatically triggers the Analyze API to generate brand mention data, then updates the video and renders the results as interactive heatmaps showing brand exposure patterns over time.

Semantic Search: The Semantic Search feature provides two search modalities. Text Search lets users query videos in natural language, while Image Search enables visual similarity matching from an uploaded image. Both use the Twelve Labs Search API to query the Brand and Creator indices simultaneously, then merge the results and sort them by relevance score.
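Querying both indices at the same time and merging by score can be sketched with `Promise.all`. The `SearchFn` type stands in for a wrapper around one Search API request and is hypothetical, as is the choice to treat a failed index as returning no hits instead of failing the whole search. The sketch handles a text query only; image search would send an image instead.

```typescript
interface IndexHit {
  videoId: string;
  score: number;
  index: string;
}

// Hypothetical wrapper around a single Search API request against one index.
type SearchFn = (indexId: string, query: string) => Promise<IndexHit[]>;

// Queries all indexes concurrently, merges the hits, and sorts by relevance score.
// A failed index contributes no hits instead of failing the whole search.
async function searchAllIndexes(indexIds: string[], query: string, search: SearchFn): Promise<IndexHit[]> {
  const perIndex = await Promise.all(
    indexIds.map((id) => search(id, query).catch((): IndexHit[] => [])),
  );
  const merged: IndexHit[] = [];
  for (const hits of perIndex) merged.push(...hits);
  return merged.sort((a, b) => b.score - a.score);
}

// Fake search function with canned results, for local testing.
const fakeSearch: SearchFn = async (indexId) => {
  if (indexId === "creator") {
    return [
      { videoId: "c1", score: 0.9, index: indexId },
      { videoId: "c2", score: 0.2, index: indexId },
    ];
  }
  return [{ videoId: "b1", score: 0.7, index: indexId }];
};

searchAllIndexes(["brand", "creator"], "natural makeup look", fakeSearch)
  .then((hits) => console.log(hits.map((h) => h.videoId).join(","))); // c1,b1,c2
```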



© 2021-2026 TwelveLabs, Inc. All Rights Reserved
